Low latency is an important factor in the reliable operation and high performance of networks. Real-time communication and streaming applications depend heavily on it. An increase in delay of only a few milliseconds can distort image and voice and lead to financial losses.

Providers try to monitor network bandwidth and latency fluctuations, but increasing the "width" of the channel often has no effect on the delay in the network. In this article, we look at the main causes of delay and ways to overcome it.

Delay and its impact on the quality of communication

In packet-switched networks, the relationship between delay and bandwidth is complex, and the two are often conflated. Total latency is made up of the following components:

- serialization delay: the time a port needs to put all the bits of a packet onto the link

- propagation delay: the time a bit needs to reach the receiver (determined by the laws of physics)

- queuing (congestion) delay: the time a frame spends in the output queue of a network element

- processing delay: the time a network element spends analyzing, processing, and forwarding the packet
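
The components above can be added up in a few lines of Python. This is only a rough sketch: the packet size, link rates, distance, and the signal speed in fiber (about two thirds of the speed of light) are illustrative assumptions, and queuing and processing delay are left at zero. It shows why a wider channel shrinks serialization delay but leaves propagation delay untouched.

```python
# Rough one-way latency estimate; all figures are illustrative assumptions.

SPEED_IN_FIBER_M_PER_S = 2.0e8  # assumed: about two thirds of c


def serialization_delay(packet_bytes: int, link_bps: float) -> float:
    """Seconds needed to clock every bit of the packet onto the link."""
    return packet_bytes * 8 / link_bps


def propagation_delay(distance_m: float,
                      speed_m_per_s: float = SPEED_IN_FIBER_M_PER_S) -> float:
    """Seconds a bit needs to travel the given distance."""
    return distance_m / speed_m_per_s


def one_way_delay(packet_bytes: int, link_bps: float, distance_m: float,
                  queuing_s: float = 0.0, processing_s: float = 0.0) -> float:
    """Sum of the four delay components for a single link."""
    return (serialization_delay(packet_bytes, link_bps)
            + propagation_delay(distance_m)
            + queuing_s
            + processing_s)


if __name__ == "__main__":
    # A 1500-byte packet over 1000 km at three different link rates.
    for rate_bps in (100e6, 1e9, 10e9):
        total = one_way_delay(1500, rate_bps, 1_000_000)
        print(f"{rate_bps / 1e6:>6.0f} Mbit/s: {total * 1e3:.3f} ms")
```

Under these assumptions the propagation term is 5 ms at every link rate, so a hundredfold increase in bandwidth changes the total by only about a tenth of a millisecond.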

Traffic management

"Traffic management" is the ability of a network to handle different types of traffic with different priorities.

This approach is used in bandwidth-limited networks that carry critical, delay-sensitive applications. Management can mean restricting traffic for specific services, such as e-mail, and reserving part of the channel for business applications.
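
One common way to restrict traffic for a specific service is a token bucket. The sketch below is a minimal illustration, not the configuration of any particular device: the class name `TokenBucket`, its parameters, and the rates used in the example are assumptions. Time is passed in explicitly so the behavior is easy to follow.

```python
class TokenBucket:
    """Limits a traffic class to `rate_bps` on average, with bursts up to `burst_bytes`."""

    def __init__(self, rate_bps: float, burst_bytes: int, now: float = 0.0):
        self.rate_bytes_per_s = rate_bps / 8
        self.capacity = burst_bytes
        self.tokens = float(burst_bytes)  # the bucket starts full
        self.last = now

    def allow(self, packet_bytes: int, now: float) -> bool:
        """Return True if the packet may be sent at time `now` (in seconds)."""
        # Refill tokens for the elapsed time, capped at the bucket capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity,
                          self.tokens + elapsed * self.rate_bytes_per_s)
        self.last = now
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True
        return False  # over the limit: the caller can drop or delay the packet


# Hypothetical limit for e-mail traffic: 8 kbit/s with a 1500-byte burst.
email_limit = TokenBucket(rate_bps=8000, burst_bytes=1500)
```

A packet that does not fit is rejected until enough time has passed for the bucket to refill, which keeps the service at or below its configured average rate.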

To manage traffic and communication quality in an organization's network, engineers recommend the following:

- Set up the network so that traffic can be monitored and classified

- Analyze network traffic to understand how applications behave

- Implement appropriate separation of access levels

- Monitor and report continuously, so that changes in traffic distribution can be actively managed

The most effective way to control traffic is hierarchical quality of service (H-QoS), which combines network policies, filtering, and traffic capacity management. H-QoS will not reduce speed, provided that all network elements offer ultra-low latency and high performance. The main advantage of H-QoS is that it reduces latency without the need to increase bandwidth.

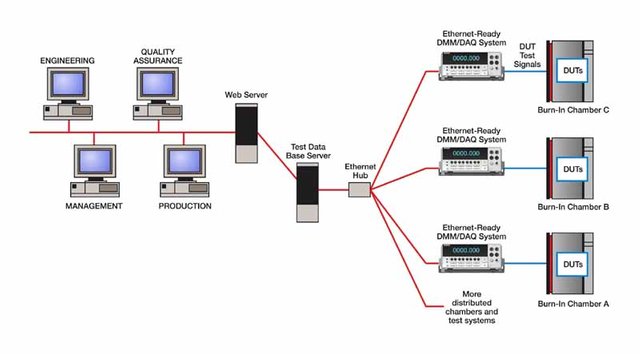
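
The prioritization idea behind QoS can be sketched with a strict-priority queue. This is a deliberately simplified, single-level illustration, not a full H-QoS implementation; the class names in `PRIORITY` and the `PriorityScheduler` class are hypothetical.

```python
import heapq
import itertools

# Assumed traffic classes; a lower number means higher priority.
PRIORITY = {"voice": 0, "business": 1, "email": 2}


class PriorityScheduler:
    """Strict-priority output queue: FIFO within a class, highest class first."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker that preserves arrival order

    def enqueue(self, packet, traffic_class: str) -> None:
        heapq.heappush(self._heap,
                       (PRIORITY[traffic_class], next(self._seq), packet))

    def dequeue(self):
        """Return the next packet to transmit, or None if the queue is empty."""
        if not self._heap:
            return None
        _, _, packet = heapq.heappop(self._heap)
        return packet


if __name__ == "__main__":
    q = PriorityScheduler()
    q.enqueue("mail-1", "email")
    q.enqueue("voice-1", "voice")
    q.enqueue("crm-1", "business")
    q.enqueue("voice-2", "voice")
    while (pkt := q.dequeue()) is not None:
        print(pkt)
```

Delay-sensitive packets leave the queue first even if they arrived later, which is how prioritization lowers their queuing delay without adding bandwidth.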

Using NIDs

Network interface devices (NIDs) make it possible to monitor and optimize traffic at low cost. Such devices are typically installed on the subscriber's side: at network towers and other handoff points between network operators.

A NID provides control over all network components. If the device supports H-QoS, the provider can not only monitor the operation of the network but also apply individual settings for each connected user.

Caching

A relatively small increase in bandwidth will not, by itself, solve the problem of poorly performing network applications. Caching helps speed up content delivery and makes better use of bandwidth. It can be thought of as a way of accelerating access to stored resources: the network behaves faster, as if it had been upgraded.

Organizations typically use caching at several levels. One worth mentioning is proxy caching: when a user requests data, the request can be served by a local proxy cache.

Proxy caches are a type of shared cache: they serve a large number of users and are very good at reducing latency and network traffic. One useful application of a proxy cache is giving several remote employees access to a set of interactive web applications.
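
A shared proxy cache can be sketched as a small least-recently-used (LRU) store placed in front of the origin server. The `ProxyCache` class and the `fetch_from_origin` callback are hypothetical names used for illustration; a real proxy would also honor expiry and validation rules, which are omitted here.

```python
from collections import OrderedDict


class ProxyCache:
    """Shared LRU cache that answers repeated requests without contacting the origin."""

    def __init__(self, fetch_from_origin, max_entries: int = 128):
        self._fetch = fetch_from_origin  # callable: url -> response body
        self._max = max_entries
        self._store = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, url: str):
        if url in self._store:
            # Cache hit: mark as most recently used and answer locally.
            self._store.move_to_end(url)
            self.hits += 1
            return self._store[url]
        # Cache miss: go to the origin and remember the answer.
        self.misses += 1
        body = self._fetch(url)
        self._store[url] = body
        if len(self._store) > self._max:
            self._store.popitem(last=False)  # evict the least recently used entry
        return body
```

Every hit saves a round trip to the origin, so the more users share the cache, the larger the reduction in both latency and upstream traffic.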

Data compression

The main task of data compression is to reduce the size of files sent over the network. It is somewhat similar to caching and can produce a speed-up comparable to increasing the channel capacity. One well-known compression method is the Lempel-Ziv-Welch (LZW) algorithm, which is used, for example, in the UNIX compress utility; ZIP archives typically use DEFLATE, a related Lempel-Ziv-based method.

However, in some situations data compression can cause problems. For example, compression does not scale well in terms of RAM and CPU usage. Compression is also rarely beneficial when the traffic is encrypted. The output of most encryption algorithms contains very few repeated sequences, so standard algorithms cannot compress it.
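
The effect of encryption on compressibility is easy to observe. The sketch below uses Python's standard `zlib` module (DEFLATE, not LZW) and stands in for encrypted traffic with random bytes from `os.urandom`; both choices are assumptions made for illustration, as is the sample payload.

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower is better)."""
    return len(zlib.compress(data)) / len(data)


# Highly repetitive payload, similar to many plain-text protocols.
repetitive = b"GET /index.html HTTP/1.1\r\n" * 1000

# Random bytes as a stand-in for well-encrypted traffic.
random_like = os.urandom(len(repetitive))

if __name__ == "__main__":
    print(f"repetitive:  {compression_ratio(repetitive):.3f}")
    print(f"random-like: {compression_ratio(random_like):.3f}")
```

The repetitive payload shrinks to a small fraction of its size, while the random-looking data does not shrink at all, so the CPU time spent compressing it is wasted.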

For network applications to run efficiently, bandwidth and delay problems need to be solved together. Data compression addresses only the first, so it is important to use it in conjunction with traffic management techniques.

Engineers continue to research ways to improve the performance and efficiency of networks. They are developing better management methods that eliminate packet loss when ports are overloaded, and creating data link layer protocols capable of providing the shortest path over Ethernet.

Maintaining high connection quality in networks is an important task for modern organizations.

Follow me to learn more about popular science, math, and technology.

With Love,

Kate
