As you would probably assume, this area of AI usage is likely the second biggest driver of AI development. Historically, the military has always been one of the biggest, if not the biggest, funders of state-of-the-art technology.

The military-industrial complex has brought us a wealth of technical advances, and most people aren't even aware of it. I won't go into this in any depth, but just think of the Internet, GPS, Velcro, or putting a man on the moon based on ICBM (intercontinental ballistic missile) technology.

Of course, this is a prime breeding ground for AI systems, since in the business of killing people you're looking at kill count versus dollars, along with some other metrics that might disgust you: how many of your enemies can be taken out at the highest cost efficiency. Don't be shocked; it's simply a business. When you delve into the realm of conflict and peace research, you have to get used to a different kind of jargon.

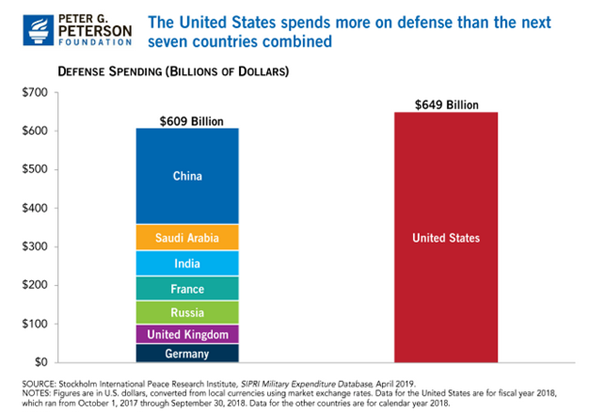

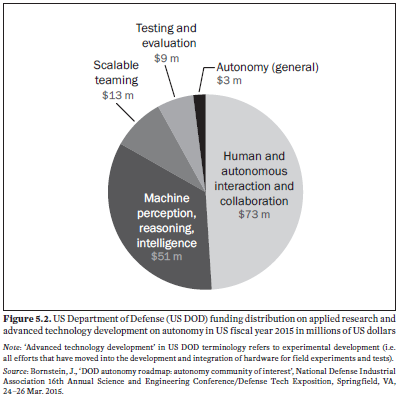

Let's take a quick look at the funding to help you understand the amounts of money behind this.

Global spending on military robots/AI

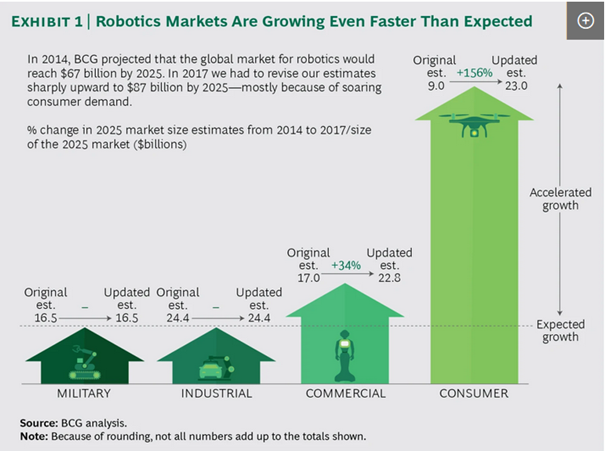

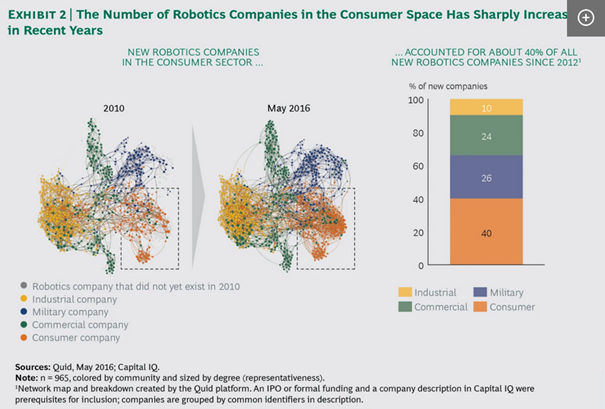

According to BCG, global spending on military robots grew from just $2.4 billion in 2000 to $7.5 billion in 2015. BCG projects it will reach $16.5 billion by 2025, which may explain why 26 percent of new robotics companies since 2012 have been focused on military applications. This growth mirrors some of what we've seen in the industrial robotics sector as well.
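To put the BCG figures in perspective, the implied compound annual growth rate (CAGR) can be worked out from the numbers above. This is my own back-of-the-envelope arithmetic, assuming smooth compound growth between the data points (BCG's actual year-by-year figures may well look different):

$$
\begin{aligned}
\text{CAGR} &= \left(\frac{V_{\text{end}}}{V_{\text{start}}}\right)^{1/n} - 1 \\
\text{2000 to 2015 } (n = 15)&: \left(\frac{7.5}{2.4}\right)^{1/15} - 1 = 3.125^{1/15} - 1 \approx 0.079 \\
\text{2015 to 2025 } (n = 10)&: \left(\frac{16.5}{7.5}\right)^{1/10} - 1 = 2.2^{1/10} - 1 \approx 0.082
\end{aligned}
$$

Both rates come out at roughly 8 percent per year, so the 2025 projection essentially assumes that the growth pace of 2000 to 2015 simply continues.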

As a result, autonomous military equipment is already being deployed to replace people in some of the most dangerous, physically grueling, and mentally taxing duties. Companies and militaries are continuously announcing new autonomous systems to keep soldiers out of harm’s way.

Militaries around the world are looking for ways to use autonomous systems in every possible domain, including land, air, and sea. This article will look at some of the uses in each of these areas.

For further reading, check out:

https://emerj.com/ai-sector-overviews/military-robotics-innovation/

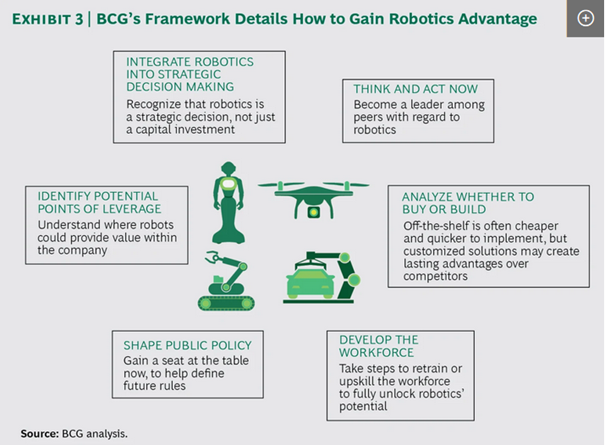

BCG overview of markets and driving factors:

https://www.bcg.com/publications/2017/strategy-technology-digital-gaining-robotics-advantage.aspx

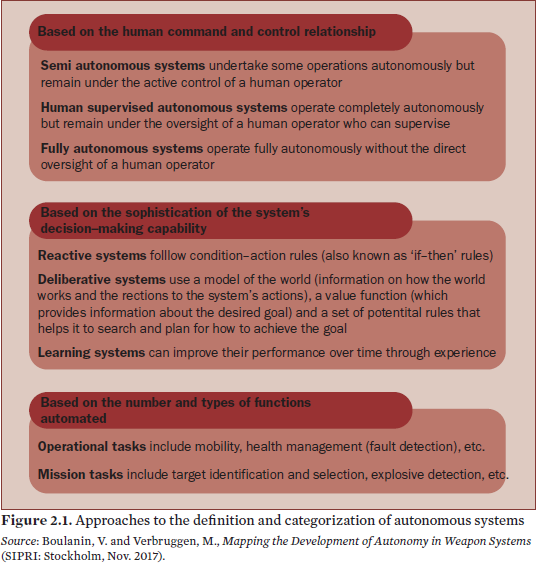

Let's try to home in on a definition of autonomous weapons systems, with some categories.

These numbers seem low compared with total military expenditure, but first of all, they are a little dated (2015), and many military projects aren't transparent about their goals and budgets. So-called black projects are believed to amount to around 50 to 55 billion dollars per year (according to various sources in the conflict/peace research realm).

Of course, you also have to look at intelligence funding, because much of the AI development in this area overlaps with autonomous weapons systems.

As you can see, it's complicated, and I can't come up with definitive, up-to-date numbers, but as an educated guess, somewhere between 70 and 150 billion dollars seems reasonable for this spending.

If you want to dig deeper, check out SIPRI (the Stockholm International Peace Research Institute), for instance.

That is a lot of money, and of course it drives innovation in this space dramatically.

To show you one of many examples of how AI is used in autonomous weapons systems, see the following video.

Two fighter jets release a swarm of intelligent drones. The autonomous drones then acquire their targets themselves and attack.

Should machines be allowed to decide on life and death?

One of the most renowned experts in the field of bioethics and autonomous weapons is Wendell Wallach.

He warns of a possibly uncontrollable development and is committed to working toward a worldwide ban on autonomous weapons.

He says:

"Sometimes people do not fully understand what is meant by fully autonomous weapons. They think of a drone with facial recognition that takes out a terrorist from a distance, or of robotic soldiers on a battlefield. They sometimes do not appreciate that lethal autonomy can be built into almost every weapons system. This includes, for instance, nuclear weapons or other high-powered munitions. Autonomy is the ability to acquire a target autonomously and to destroy it with no or very little active human intervention."

Intelligent image processing and automatic target acquisition: these AI technologies are already available.

See, for instance, this little ad from the Kalashnikov arms company.

The worldwide arms race for autonomous weapons has already begun.

Wendell also says:

"The machines don't make life-and-death decisions about humans. Humans make life-and-death decisions about humans. If we let this happen, if we let machines decide who lives and who dies, we undermine the basic principle of responsibility. Lethal autonomous weapons or self-driving cars are just the tip of the iceberg. What's right under the surface is autonomy, autonomous systems in general. They undermine the basic principle of having somebody who is responsible. There must be an agent that is responsible; this can be a human or a corporation, somebody who is culpable and liable for any actions that are taken. I can't imagine anything more stupid than for humanity to go down a route that undermines this responsibility. This would lead to a situation where nobody is responsible anymore if something really dire happens."

In the past, we recognized some wrong paths only in retrospect. The problem here might be that once we have given up control, there's no turning back!

We need a worldwide ban of autonomous intelligent weapons systems.

For further reading, I recommend these:

https://news.yale.edu/2016/02/15/shaping-tomorrow-s-smart-machines-qa-bioethicist-wendell-wallach

https://www.yalelawjournal.org/comment/the-case-for-regulating-fully-autonomous-weapons

https://sputniknews.com/military/201602201035068410-killer-robots-un-us/

https://cacm.acm.org/magazines/2017/5/216318-toward-a-ban-on-lethal-autonomous-weapons/abstract

Again, this just scratches the surface of the weaponized AI sphere, because it only covers the full-circle approach, from target acquisition to destruction and elimination of the target.

...but if you break down the technological building blocks that are needed and already available, you can easily replace the physical part with completely conventional methods and weapons.

Of course, in this case pulling the trigger is left to a human, which is in line with what opponents of autonomous weapons ask for. But you don't need many levels of abstraction to understand that, as a consequence, using AI for everything short of "pulling the trigger" is already business as usual.

In short, AI systems with non-lethal capabilities can easily be used to achieve lethality.

In the self-image of those who drive the development of autonomous weapons systems, the use of such technology is probably justified. If you listen to the military-industrial complex of any given sphere of influence (the USA, Russia, China...), you can even hear the argument that these systems will "save" lives. It's only the lives of the enemies that are taken out with "surgical precision".

Remember, what is socially acceptable in one environment can be punishable by death in another, and things also change over time. Just think about this for a moment and reflect on what has changed in your own culture during your lifetime.

A little dystopian scenario that covers much of what I've written here is shown in the following video.

Just replace the reason for the executions by machine with any random trigger: meat lovers, people older than 70, people who didn't pay their taxes or ran a red light, or maybe those who "voted wrong" or attended a rally.

You get the picture, right?

Again, my two Satoshis on this...

Well, I guess Professor Wendell Wallach is right!

Most people don't even understand what constitutes an autonomous weapons system, let alone know that "solutions" are already readily available. Sure, popular culture picked up on this decades ago, and we all have a general idea of what it looks like: Terminators, drones, and so on, things that really go full circle from target acquisition to destruction/elimination.

Only a few really understand that what's under the surface is the most dangerous part.

IMO, of course we need a ban on these FAWs (fully autonomous weapons systems). On the other hand, what can scare the living hell out of you is that you can easily foresee a situation where readily available tech is used to build something like that at a "script kiddie" level. I imagine anybody could hammer something together from what's available in a matter of a few weeks.

This will definitely be one of the most dangerous developments in the coming years.

Even now, there simply is no effective protection against weaponized drones. I'm not talking about the Predator or large Israeli drones, but about consumer-grade drones that can easily be weaponized (just think of a flying Molotov cocktail, or worse).

Now think one step beyond that with a little GPS and facial recognition...

I'm afraid this is going to be tough to regulate and control.

The weirdest thing, IMHO, is that these physical threats aren't the most dangerous ones. What scares me most is the enabling of all kinds of malevolent uses of AI, together with the gradual acceptance of the idea that individual privacy and freedom are "overrated" and have to be sacrificed for "security" by the three-letter agencies in the US or their counterparts all over the world.

"Full transparency of everyone, with 100% compliance with the rules, is what's needed," they might think, and they will try to enforce it. Sure, some might think that if it targets criminals and terrorists, that might be OK!?

...but rules and laws change over time, and AI tech won't be limited to free and open societies, if such a thing still exists nowadays.

What might be perfectly within the law today could be an issue tomorrow, and the same goes for the social acceptance of certain things or behaviors.

I think it's high time to raise more awareness of this and to enable more public discourse around these issues.

Articles in this series so far:

AI in the now... China, "security" and "efficiency" vs individual freedom?

AI in the now… autonomous driving cars… status quo... ethics?

AI in the now… media, communication, filter bubbles, weaponized social media…

Idea, credits...

I've been researching this field out of pure curiosity for the last few years, with some (though not much) effort.

I've read a lot of different papers from researchers and universities all over the world on ongoing AI research.

Because there are many points of contact with my day job in information security, I also always try to look at this from an infosec/opsec/privacy perspective.

BTW...

I found a recent documentary on this field by Ranga Yogeshwar and the German public broadcaster NDR very interesting and balanced.

I followed their common thread for this series of articles.

Here are the links to their two-part documentary (in German):

Künstliche Intelligenz und die Veränderungen im Alltag (1/2) | Doku | ND...

Künstliche Intelligenz und die Veränderungen in der Gesellschaft (2/2) |...

Please let me know what you think about this in the comments or in a tweet reply!

Gif from my friend https://twitter.com/smilinglllama!

Dear @doifeellucky

Interesting read. I'm really passionate (and thrilled) whenever I see the word "AI".

Unfortunately, this post is a bit too old to upvote. Till next time.

ps.

Check out my latest publication.

It stirred up some real emotions. I've been downvoted by over half a million SP (an attack by a few accounts), but I also received solid support and a few strong upvotes, and now I will be enjoying the biggest genuine payout of my lifetime ;)

Yours

Piotr

Hello Piotr,

thanks for reading my post!

I'll check it out!

Cheers,

Lucky
