1. Review of Reinforcement Learning Methods

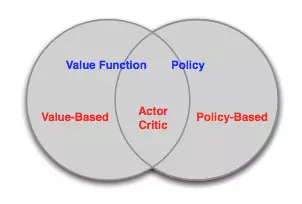

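In earlier posts we introduced value-based and policy-based reinforcement learning. Is there a method that combines the strengths of both? Yes: Actor-Critic learning. The figure illustrates how the three relate: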

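Value-based reinforcement learning learns an (approximate) value function and derives the policy from it, for example with ε-greedy action selection. Policy-based reinforcement learning has no value function and learns the policy directly. Actor-Critic reinforcement learning learns both a value function and a policy function.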
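To make the value-based side concrete, here is a minimal sketch of ε-greedy action selection. It assumes the (approximate) action values for the current state are already available as a plain list; the function name `epsilon_greedy` is our own.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick an action index from the Q(s, a) estimates of a single state.

    With probability epsilon, explore uniformly at random;
    otherwise exploit the action with the largest estimated value.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    # Greedy action: index of the largest estimated action value
    return max(range(len(q_values)), key=lambda a: q_values[a])


q = [0.1, 0.7, 0.2]
print(epsilon_greedy(q, epsilon=0.0))  # → 1
```

The policy here is only implicit: it is whatever ε-greedy makes of the current value estimates. Actor-Critic instead keeps an explicit, separately parameterized policy.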

2. Introduction to Actor-Critic Methods

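Actor-Critic literally means "actor and critic": an actor performs while a critic gives feedback, and the actor's performance keeps improving as a result.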

Actor-Critic policy-gradient learning consists of two parts:

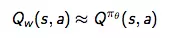

- Critic: a parameterized action-value function Q_w(s, a)

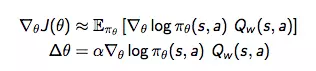

- Actor: updates the policy parameters θ in the direction indicated by the values the Critic produces.

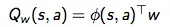
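In standard policy-gradient notation (J(θ) is the policy objective and α a step size, both introduced here for illustration), the Actor follows an approximate policy gradient in which the Critic's Q_w(s, a) stands in for the true action value:

$$
\nabla_\theta J(\theta) \approx \mathbb{E}_{\pi_\theta}\left[\nabla_\theta \log \pi_\theta(s, a)\, Q_w(s, a)\right],
\qquad
\Delta\theta = \alpha\, \nabla_\theta \log \pi_\theta(s, a)\, Q_w(s, a)
$$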

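The Critic can be optimized with the policy-evaluation methods covered earlier, such as Monte Carlo (MC) and dynamic programming (DP). As a simple example, assume the action-value function is a linear approximator over a feature vector φ(s, a), i.e. Q_w(s, a) = φ(s, a)ᵀw: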

- Critic: update the weights w with TD(0)

- Actor: update θ with the policy gradient
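The two bullet points can be sketched as a single update step. This is a minimal illustration under several assumptions of our own: the features φ(s, a) are hypothetical one-hot vectors (so the linear Critic is effectively a table), the Actor is a softmax policy over φ(s, a)ᵀθ, and the step sizes `alpha`, `beta` and discount `gamma` are arbitrary. The Actor step uses Q_w(s, a) from before the Critic's TD(0) update.

```python
import math


def features(s, a, n_states, n_actions):
    """Hypothetical one-hot feature vector phi(s, a) of length n_states * n_actions."""
    phi = [0.0] * (n_states * n_actions)
    phi[s * n_actions + a] = 1.0
    return phi


def dot(x, y):
    return sum(xi * yi for xi, yi in zip(x, y))


def policy(theta, s, n_states, n_actions):
    """Softmax policy: pi_theta(a | s) proportional to exp(phi(s, a) . theta)."""
    prefs = [dot(features(s, a, n_states, n_actions), theta) for a in range(n_actions)]
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    z = sum(exps)
    return [e / z for e in exps]


def grad_log_pi(theta, s, a, n_states, n_actions):
    """Softmax score function: phi(s, a) - sum_b pi(b | s) * phi(s, b)."""
    probs = policy(theta, s, n_states, n_actions)
    g = features(s, a, n_states, n_actions)
    for b, p in enumerate(probs):
        phi_b = features(s, b, n_states, n_actions)
        g = [gi - p * fi for gi, fi in zip(g, phi_b)]
    return g


def qac_step(w, theta, s, a, r, s_next, a_next, done, n_states, n_actions,
             alpha=0.01, beta=0.1, gamma=0.9):
    """One Actor-Critic update with a linear Critic; returns the new (w, theta)."""
    phi = features(s, a, n_states, n_actions)
    q_sa = dot(phi, w)
    q_next = 0.0 if done else dot(features(s_next, a_next, n_states, n_actions), w)
    delta = r + gamma * q_next - q_sa  # TD(0) error for the Critic
    score = grad_log_pi(theta, s, a, n_states, n_actions)
    # Actor: theta += alpha * grad log pi(s, a) * Q_w(s, a)
    theta = [t + alpha * g * q_sa for t, g in zip(theta, score)]
    # Critic: w += beta * delta * phi(s, a)
    w = [wi + beta * delta * fi for wi, fi in zip(w, phi)]
    return w, theta


w, theta = [0.0] * 4, [0.0] * 4
w, theta = qac_step(w, theta, s=0, a=1, r=1.0, s_next=1, a_next=0, done=False,
                    n_states=2, n_actions=2)
print(w)  # [0.0, 0.1, 0.0, 0.0]
```

On the first call, Q_w(s, a) is still zero, so only the Critic moves. The Actor starts changing once the Critic has a nonzero estimate.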

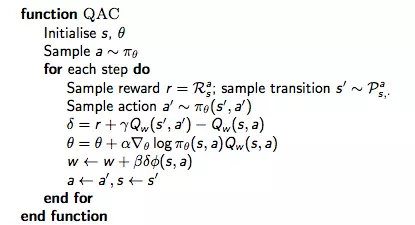

The complete algorithm is given in the figure above.
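As a compact, runnable sketch of the whole per-step loop, the code below applies the idea to a hypothetical two-state, two-action toy MDP (`env_step`, its rewards, and the 0.1 termination probability are invented for illustration). It uses a tabular Critic, which is the special case of a linear approximator with one-hot features, and a softmax Actor. All step sizes and the seed are assumptions.

```python
import math
import random

N_STATES, N_ACTIONS = 2, 2  # hypothetical toy problem size


def env_step(s, a, rng):
    """Hypothetical toy MDP: action 1 pays reward 1, action 0 pays 0.

    States alternate 0 -> 1 -> 0 ..., and each step ends the episode with probability 0.1.
    """
    reward = 1.0 if a == 1 else 0.0
    return reward, (s + 1) % N_STATES, rng.random() < 0.1


def softmax_probs(theta, s):
    """Softmax policy over the tabular preferences theta[s][a]."""
    m = max(theta[s])  # subtract the max for numerical stability
    exps = [math.exp(t - m) for t in theta[s]]
    z = sum(exps)
    return [e / z for e in exps]


def sample_action(theta, s, rng):
    return rng.choices(range(N_ACTIONS), weights=softmax_probs(theta, s))[0]


def qac(episodes=500, alpha=0.05, beta=0.1, gamma=0.9, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * N_ACTIONS for _ in range(N_STATES)]      # Critic: tabular Q_w(s, a)
    theta = [[0.0] * N_ACTIONS for _ in range(N_STATES)]  # Actor: softmax preferences
    for _ in range(episodes):
        s = 0
        a = sample_action(theta, s, rng)
        done = False
        while not done:
            r, s_next, done = env_step(s, a, rng)
            a_next = sample_action(theta, s_next, rng)
            target = r if done else r + gamma * q[s_next][a_next]
            delta = target - q[s][a]  # TD(0) error
            probs = softmax_probs(theta, s)
            for b in range(N_ACTIONS):
                # Actor: d/d theta[s][b] of log pi(a|s) is 1[b == a] - pi(b|s)
                indicator = 1.0 if b == a else 0.0
                theta[s][b] += alpha * (indicator - probs[b]) * q[s][a]
            q[s][a] += beta * delta  # Critic: TD(0) step on Q_w(s, a)
            s, a = s_next, a_next
    return q, theta


q, theta = qac()
for s in range(N_STATES):
    print(s, [round(p, 3) for p in softmax_probs(theta, s)])
```

With these settings the Actor should come to favour action 1 in both states, because the Critic learns that action 1 is worth more. The exact probabilities depend on the seed and step sizes.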

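Compared with the Monte Carlo policy gradient algorithm from the previous post, this algorithm differs in two ways. First, it updates at every step instead of waiting until the whole episode has finished; this is the difference between TD(0) and MC. Second, by updating both w and θ, it improves the value function and the policy function at the same time.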
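The first difference shows up in what each method uses as its learning target. In this minimal sketch (the function names and numbers are illustrative only), the MC return can only be computed once every reward of the episode is known, whereas the TD(0) target needs just one observed reward plus the Critic's current estimate for the next state-action pair.

```python
def mc_return(rewards, gamma):
    """Monte Carlo return G_0: requires every reward of the finished episode."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def td0_target(r, q_next, gamma):
    """TD(0) target: one observed reward plus the Critic's estimate Q_w(s', a')."""
    return r + gamma * q_next


print(mc_return([1.0, 0.0, 2.0], gamma=0.5))       # 1 + 0.5*0 + 0.25*2 → 1.5
print(td0_target(1.0, q_next=0.5, gamma=0.5))     # 1 + 0.5*0.5 → 1.25
```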
