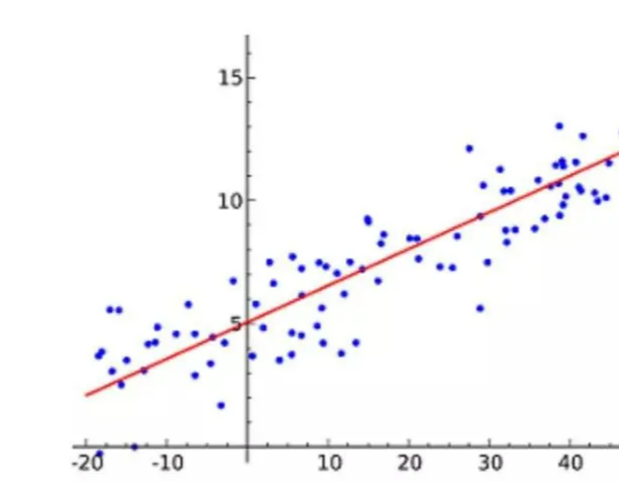

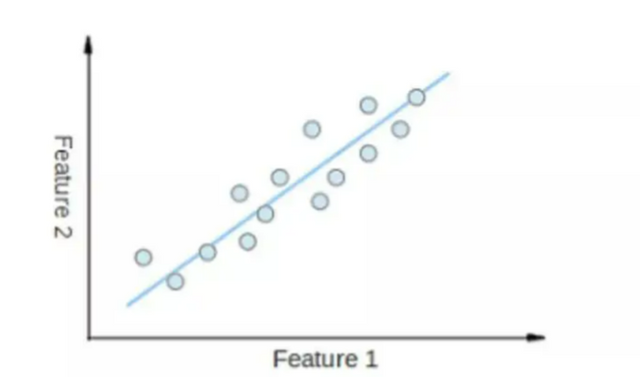

- Linear regression

Linear regression is probably the most popular machine learning algorithm. It finds a straight line that fits the data points in a scatter plot as closely as possible. It models the relationship between the independent variables (x values) and a numerical outcome (y values) by fitting a linear equation to the data. The fitted line can then be used to predict future values.

The most commonly used technique for this algorithm is the least squares method. This method calculates the line of best fit by minimizing the vertical distance from each data point to the line. The total error is the sum of the squares of the vertical distances (green line) of all data points. The idea is to fit the model by minimizing this squared error.

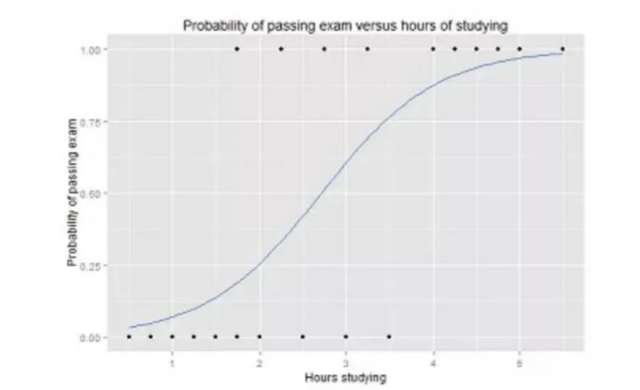
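As a rough illustration of the least squares idea, the sketch below fits a line to a handful of made-up points using the closed-form solution for a single input variable. The names `fit_line` and `squared_error` and the sample data are assumptions for this example, not part of any library.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both left unnormalized).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def squared_error(xs, ys, slope, intercept):
    """Sum of squared vertical distances between the points and the line."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


# Hypothetical data that lies exactly on y = 2x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
slope, intercept = fit_line(xs, ys)
error = squared_error(xs, ys, slope, intercept)
```

Because the sample points lie exactly on a line, the squared error here is zero. With noisy data the same code returns the line with the smallest possible squared error.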

- Logistic regression

Logistic regression is similar to linear regression, but it is used when the output is binary (i.e., when the result can have only two possible values). The final prediction is produced by passing a linear combination of the inputs through a nonlinear sigmoid function called the logistic function, g().

The logistic function maps this intermediate value to the outcome variable Y, whose values range from 0 to 1. These values can then be interpreted as the probability that Y occurs. This bounded output is what makes logistic regression better suited to classification tasks than linear regression.

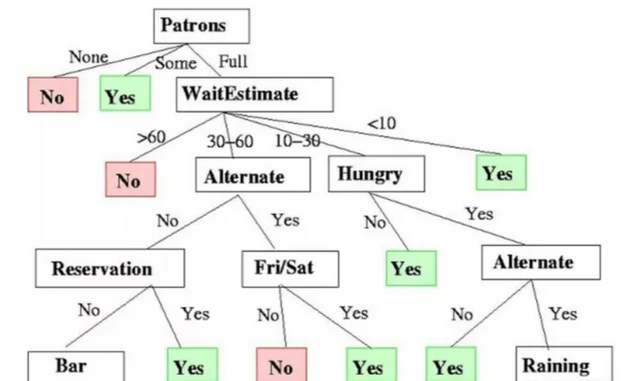
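To make the role of the logistic function concrete, here is a minimal sketch of the prediction step only. The weights and bias are assumed to be already learned, and the names `predict_probability` and `predict_label` are illustrative.

```python
import math


def sigmoid(z):
    """Logistic function g(z): maps any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_probability(features, weights, bias):
    """Probability of the positive class for one sample."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


def predict_label(features, weights, bias, threshold=0.5):
    """Turn the probability into a binary 0/1 decision."""
    return 1 if predict_probability(features, weights, bias) >= threshold else 0


# Hypothetical one-feature model: weight 1.0, bias -1.0.
weights, bias = [1.0], -1.0
p_high = predict_probability([2.0], weights, bias)  # z = 1.0
p_low = predict_probability([0.0], weights, bias)   # z = -1.0
```

The 0.5 threshold is a common default rather than a requirement. It can be moved when one kind of error is more costly than the other.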

- Decision tree

Decision Trees can be used for regression and classification tasks.

In this algorithm, the training model learns to predict the value of the target variable by learning decision rules in a tree representation. A tree is composed of nodes with corresponding attributes.

At each node, we ask questions about the data based on the available features. The left and right branches represent possible answers. The final node (i.e. leaf node) corresponds to a predicted value.

The tree is built top-down: the feature that best splits the data is placed nearest the root, so the higher a node sits in the tree, the more important its attribute generally is.

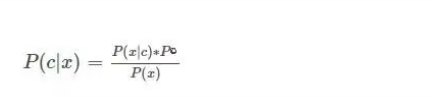
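The question-and-branch structure described above can be sketched with a small hand-built tree. This example only shows how a prediction walks from the root to a leaf; it does not learn the tree from data. The feature names `age` and `income` and the thresholds are made up.

```python
# Internal nodes ask "is feature <= threshold?"; leaves hold the predicted value.
tree = {
    "feature": "age",
    "threshold": 30,
    "left": {"value": "no"},  # age <= 30
    "right": {                # age > 30
        "feature": "income",
        "threshold": 50000,
        "left": {"value": "no"},    # income <= 50000
        "right": {"value": "yes"},  # income > 50000
    },
}


def predict(node, sample):
    """Follow the branches that match the sample until a leaf is reached."""
    while "value" not in node:
        if sample[node["feature"]] <= node["threshold"]:
            node = node["left"]
        else:
            node = node["right"]
    return node["value"]


answer = predict(tree, {"age": 40, "income": 60000})
```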

- Naive Bayes

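Naive Bayes is based on Bayes' theorem. It estimates the prior probability of each class and the conditional probability of each class given the value of x. The "naive" part is the assumption that the features are independent of each other given the class. This algorithm is used in classification problems. In the simplest case it yields a binary yes/no result, although it also handles more than two classes. Take a look at the equation below.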

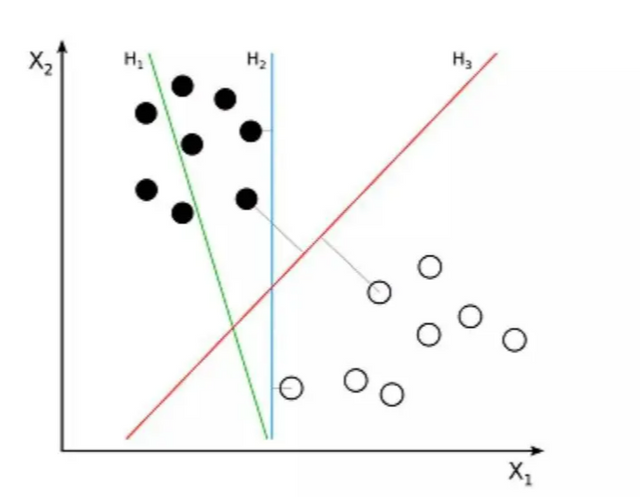
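As a sketch of how these probabilities combine, the following example counts feature values per class on a tiny made-up weather data set and applies Bayes' theorem under the independence assumption. It omits smoothing, which practical implementations usually add so that unseen feature values do not force a probability of zero. All names and data here are hypothetical.

```python
from collections import Counter, defaultdict


def train(samples, labels):
    """Count how often each class and each (class, feature value) pair occurs."""
    class_counts = Counter(labels)
    feature_counts = defaultdict(Counter)  # (label, feature index) -> value counts
    for features, label in zip(samples, labels):
        for i, value in enumerate(features):
            feature_counts[(label, i)][value] += 1
    return class_counts, feature_counts


def posterior(features, class_counts, feature_counts):
    """Return P(class | features) for every class, assuming independent features."""
    total = sum(class_counts.values())
    scores = {}
    for label, count in class_counts.items():
        score = count / total  # prior P(class)
        for i, value in enumerate(features):
            score *= feature_counts[(label, i)][value] / count  # P(value | class)
        scores[label] = score
    norm = sum(scores.values())
    if norm == 0:
        return scores  # no class is compatible with these features
    return {label: score / norm for label, score in scores.items()}


samples = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"),
           ("rainy", "hot"), ("sunny", "hot"), ("rainy", "mild")]
labels = ["yes", "yes", "yes", "no", "no", "no"]

class_counts, feature_counts = train(samples, labels)
probs = posterior(("sunny", "mild"), class_counts, feature_counts)
```

For the query `("sunny", "mild")` the unnormalized scores are 0.5 × 2/3 × 2/3 for "yes" and 0.5 × 1/3 × 1/3 for "no", so "yes" gets a posterior of 0.8.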

- Support Vector Machine (SVM)

Support Vector Machine (SVM) is a supervised algorithm for classification problems. A support vector machine attempts to draw a boundary between the classes of data points with the largest possible margin on either side. To do this, we plot data items as points in n-dimensional space, where n is the number of input features. On this basis, the support vector machine finds an optimal boundary, called a hyperplane, which best separates the possible outputs by class label.

The distance between the hyperplane and the nearest point of each class is called the margin. The optimal hyperplane is the one with the largest margin, that is, the one that maximizes the distance to the nearest data points of both classes.

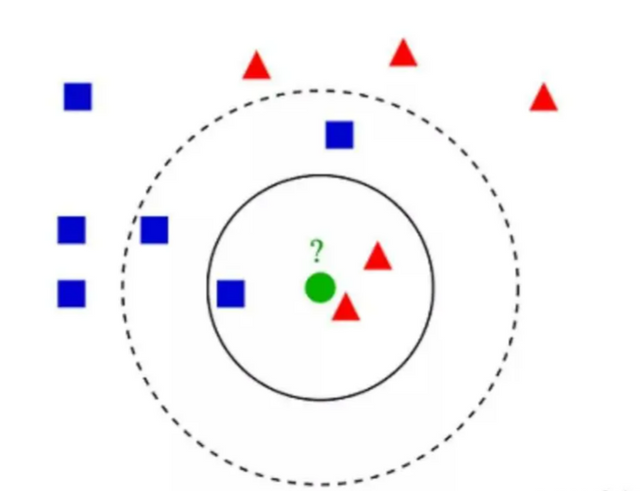
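The sketch below does not train an SVM. It only illustrates the geometry: given an assumed hyperplane defined by `weights` and `bias`, it computes each point's distance to the hyperplane and the resulting margin. The hyperplane and the points are made up.

```python
import math


def signed_distance(point, weights, bias):
    """Distance from point to the hyperplane w.x + b = 0; the sign gives the side."""
    norm = math.sqrt(sum(w * w for w in weights))
    return (sum(w * x for w, x in zip(weights, point)) + bias) / norm


def margin(points, weights, bias):
    """Distance from the hyperplane to the closest point."""
    return min(abs(signed_distance(p, weights, bias)) for p in points)


def classify(point, weights, bias):
    """Assign +1 or -1 depending on the side of the hyperplane."""
    return 1 if signed_distance(point, weights, bias) >= 0 else -1


# Assumed hyperplane: the vertical line x1 = 2 in two dimensions.
weights, bias = [1.0, 0.0], -2.0
points = [(1.0, 0.0), (0.0, 1.0), (3.0, 0.0), (4.0, 2.0)]
m = margin(points, weights, bias)
```

Training an SVM amounts to searching for the `weights` and `bias` that make this margin as large as possible while keeping the classes on opposite sides.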

- K-nearest neighbor algorithm (KNN)

The K-Nearest Neighbors (KNN) algorithm is very simple. KNN classifies a new object by searching the entire training set for the K most similar instances, or K neighbors, and assigning the new object the most common output among those K instances.

The choice of K is critical: smaller values make the result sensitive to noise, while larger values are computationally more expensive and can blur the boundaries between classes. KNN is most commonly used for classification, but it is also suitable for regression problems.

The distance used to evaluate the similarity between instances can be Euclidean distance, Manhattan distance, or Minkowski distance. Euclidean distance is the ordinary straight-line distance between two points: the square root of the sum of the squared differences between their coordinates.

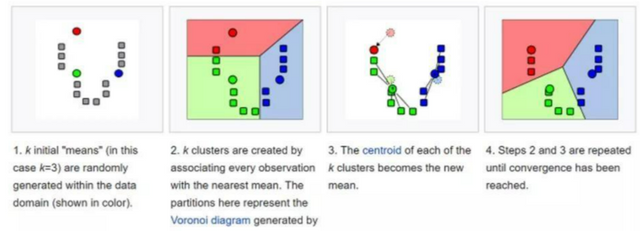
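A minimal sketch of KNN classification with Euclidean distance might look like the following. The function names and the two-cluster sample data are assumptions for illustration.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Square root of the sum of squared coordinate differences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(train_points, train_labels, query, k=3):
    """Label the query with the most common label among its k nearest neighbors."""
    by_distance = sorted(
        zip(train_points, train_labels),
        key=lambda pair: euclidean(pair[0], query),
    )
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


train_points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
train_labels = ["a", "a", "a", "b", "b", "b"]

label_near_a = knn_classify(train_points, train_labels, (2, 2))
label_near_b = knn_classify(train_points, train_labels, (6, 5))
```

Sorting the whole training set for every query is the simplest approach, and it shows why KNN gets slow on large data sets.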

- K-means

K-means clusters a data set by grouping similar data points together. For example, this algorithm can be used to group users based on purchase history. It finds K clusters in the data set. K-means is an unsupervised learning algorithm, so it needs only the training data X and the number of clusters K that we want to identify.

The algorithm starts by selecting K points, called centroids, one for each cluster. It then iteratively assigns each data point to the cluster with the closest centroid, based on its features, and recomputes each centroid as the mean of the points assigned to it. This process continues until the centroids stop changing.
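The assign-and-update loop can be sketched as follows. To keep the example reproducible, the initial centroids are passed in explicitly, although they are usually chosen at random. The function name `kmeans` and the data are illustrative.

```python
def kmeans(points, centroids, max_iterations=100):
    """Alternate between assigning points and moving centroids until stable."""
    for _ in range(max_iterations):
        # Assignment step: put each point in the cluster of its closest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            clusters[distances.index(min(distances))].append(p)

        # Update step: move each centroid to the mean of its cluster.
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                mean = tuple(sum(coord) / len(cluster) for coord in zip(*cluster))
                new_centroids.append(mean)
            else:
                new_centroids.append(old)  # keep the centroid of an empty cluster

        if new_centroids == centroids:
            break  # centroids stopped changing
        centroids = new_centroids
    return centroids, clusters


points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]
centroids, clusters = kmeans(points, [(1.0, 1.0), (8.0, 8.0)])
```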

- Random Forest

Random Forest is a very popular ensemble machine learning algorithm. The basic idea is that the combined opinion of many people is often more accurate than the opinion of one individual. In a random forest, we use an ensemble of decision trees (see Decision Trees).

To classify new objects, we take votes from each decision tree and combine the results before making the final decision based on majority voting.

(a) During the training process, each decision tree is constructed based on bootstrap samples from the training set.

(b) During classification, decisions on input instances are made based on majority voting.
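Steps (a) and (b) can be sketched in a heavily simplified form. Each "tree" below is only a one-split stump on a single numeric feature, and the random feature selection that full random forests also perform is left out. All names and the data are assumptions for illustration.

```python
import random
from collections import Counter


def bootstrap_sample(data, rng):
    """Draw len(data) items from data with replacement."""
    return [rng.choice(data) for _ in data]


def train_stump(sample):
    """Fit a one-split 'tree' on (x, label) pairs with labels 0 and 1."""
    xs0 = [x for x, label in sample if label == 0]
    xs1 = [x for x, label in sample if label == 1]
    if not xs0 or not xs1:
        only = 0 if xs0 else 1  # the sample holds a single class
        return lambda x: only
    mean0 = sum(xs0) / len(xs0)
    mean1 = sum(xs1) / len(xs1)
    threshold = (mean0 + mean1) / 2  # split halfway between the class means
    low, high = (0, 1) if mean0 < mean1 else (1, 0)
    return lambda x: low if x <= threshold else high


def train_forest(data, n_trees, seed=0):
    """(a) Train every tree on its own bootstrap sample."""
    rng = random.Random(seed)
    return [train_stump(bootstrap_sample(data, rng)) for _ in range(n_trees)]


def majority_vote(votes):
    """Return the most frequent vote."""
    return Counter(votes).most_common(1)[0][0]


def forest_predict(forest, x):
    """(b) Let every tree vote and return the majority decision."""
    return majority_vote(tree(x) for tree in forest)


data = [(1, 0), (2, 0), (3, 0), (10, 1), (11, 1), (12, 1)]
forest = train_forest(data, n_trees=25)
```

An individual stump can be wrong, for example when its bootstrap sample happens to contain only one class. The vote across many trees is what makes the combined prediction stable.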

- Dimensionality reduction

Machine learning problems have become more complex due to the sheer volume of data we are able to capture today. When each training instance has a very large number of features, training can become extremely slow and finding a good solution becomes difficult. This problem is often called the "curse of dimensionality".

Dimensionality reduction attempts to solve this problem by combining specific features into higher-level features without losing the most important information. Principal Component Analysis (PCA) is the most popular dimensionality reduction technique.

Principal component analysis reduces the dimensionality of a data set by projecting it onto a lower-dimensional line, hyperplane, or subspace. The projection is chosen to preserve as much of the variation in the original data as possible.
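As a rough sketch of the idea, the code below finds only the first principal component, using power iteration on the covariance matrix, and projects two-dimensional points onto it. This is one of several ways to compute PCA and is not necessarily what a given library does. The function names and the data are made up.

```python
import math


def first_principal_component(points, iterations=100):
    """Return (means, direction) for the direction of largest variance."""
    n = len(points)
    dim = len(points[0])
    means = [sum(p[i] for p in points) / n for i in range(dim)]
    centered = [[p[i] - means[i] for i in range(dim)] for p in points]
    # Sample covariance matrix of the centered data.
    cov = [[sum(row[i] * row[j] for row in centered) / (n - 1)
            for j in range(dim)] for i in range(dim)]
    # Power iteration: repeatedly multiply by the covariance matrix and normalize.
    # Assumes the all-ones start vector is not orthogonal to the component.
    vec = [1.0] * dim
    for _ in range(iterations):
        vec = [sum(cov[i][j] * vec[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(v * v for v in vec))
        vec = [v / norm for v in vec]
    return means, vec


def project(points, means, component):
    """Reduce each point to a single coordinate along the component."""
    return [sum((x - m) * c for x, m, c in zip(p, means, component))
            for p in points]


# Hypothetical points lying on the line y = 2x.
points = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
means, component = first_principal_component(points)
reduced = project(points, means, component)
```

Because the sample points lie on a single line, one coordinate per point is enough to describe them without losing information. Real data usually loses a little variance in the projection.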

- Artificial Neural Network (ANN)

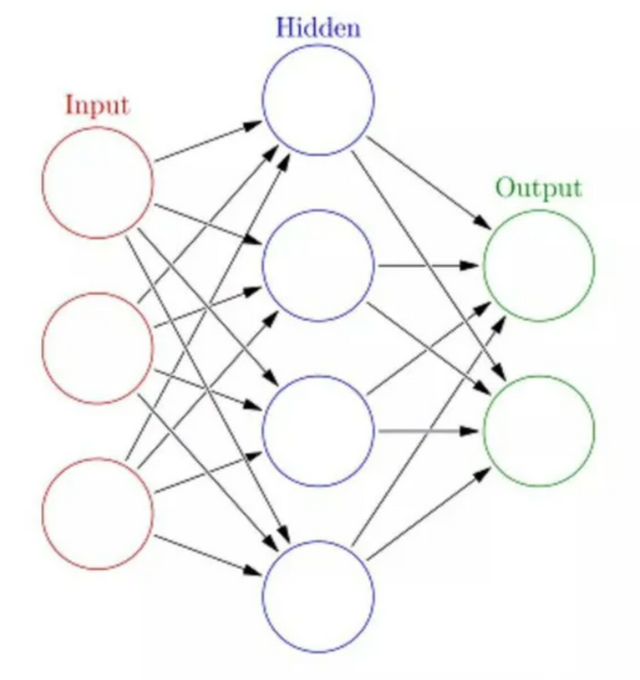

Artificial Neural Networks (ANN) can handle large and complex machine learning tasks. A neural network is essentially a set of interconnected layers made up of nodes, called neurons, joined by weighted edges. Between the input layer and the output layer, we can insert multiple hidden layers. A simple artificial neural network uses up to two hidden layers; networks with more hidden layers are generally treated as deep learning.

Artificial neural networks are loosely modeled on the structure of the brain. Each connection starts with a random weight, which determines how a neuron processes its input data. The relationship between input and output is learned by training the network on input data. During the training phase, the system has access to the correct answers.

If the network doesn't accurately recognize the input, the system adjusts the weights. After sufficient training, it can recognize the correct patterns consistently.

Each circular node represents an artificial neuron, and the arrows represent connections from the output of one artificial neuron to the input of another.
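The "adjust the weights when the answer is wrong" idea can be illustrated with a single artificial neuron trained by the perceptron rule. This is far simpler than a multi-layer network, and the sketch starts from zero weights instead of random ones so that the result is reproducible. The names and the AND-gate data are assumptions for the example.

```python
def step(z):
    """Activation: the neuron fires (1) only for a positive weighted sum."""
    return 1 if z > 0 else 0


def predict(x, weights, bias):
    """Output of the neuron for one input vector."""
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_perceptron(samples, targets, learning_rate=1.0, epochs=20):
    """Nudge the weights toward the correct answer whenever the output is wrong."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, targets):
            error = target - predict(x, weights, bias)  # 0 when correct
            weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
            bias += learning_rate * error
    return weights, bias


# Logical AND: the output is 1 only when both inputs are 1.
samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
targets = [0, 0, 0, 1]
weights, bias = train_perceptron(samples, targets)
outputs = [predict(x, weights, bias) for x in samples]
```

Networks with hidden layers use the same principle, but they propagate the error backwards through every layer to decide how much each weight should change.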
