Language, and its role in demonstrating and facilitating comprehension - or intelligence - is a fundamental part of being human. It gives people the ability to communicate thoughts and concepts, express ideas, create memories, and build mutual understanding. These are foundational parts of social intelligence. It's why our teams at DeepMind study aspects of language processing and communication, both in artificial agents and in humans.

As part of a broader portfolio of AI research, we believe the development and study of more powerful language models - systems that predict and generate text - have tremendous potential for building advanced AI systems that can be used safely and efficiently to summarise information, provide expert advice, and follow instructions via natural language.


Developing beneficial language models requires research into their potential impacts, including the risks they pose. This includes collaboration between experts from varied backgrounds to thoughtfully anticipate and address the challenges that training algorithms on existing datasets can create.

Today we are releasing three papers on language models that reflect this interdisciplinary approach. They include a detailed study of a 280 billion parameter transformer language model called Gopher, a study of ethical and social risks associated with large language models, and a paper investigating a new architecture with better training efficiency.

Gopher - A 280 billion parameter language model

In the quest to explore language models and develop new ones, we trained a series of transformer language models of different sizes, ranging from 44 million parameters to 280 billion parameters (the largest model, which we named Gopher).

Our research investigated the strengths and weaknesses of those different-sized models, highlighting areas where increasing the scale of a model continues to boost performance, such as reading comprehension, fact-checking, and the identification of toxic language. We also surface results where model scale does not significantly improve results, such as logical reasoning and common-sense tasks.

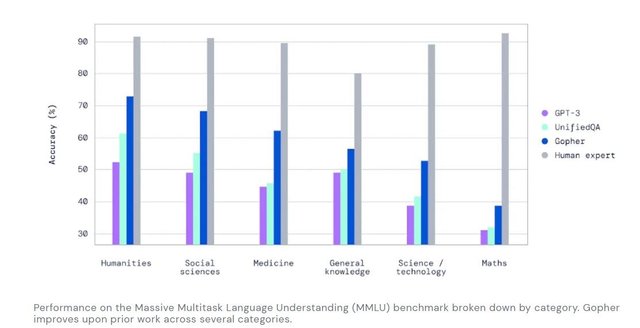

In our research, we found that the capabilities of Gopher exceed those of existing language models on a number of key tasks. This includes the Massive Multitask Language Understanding (MMLU) benchmark, where Gopher demonstrates a significant advancement towards human expert performance over prior work.

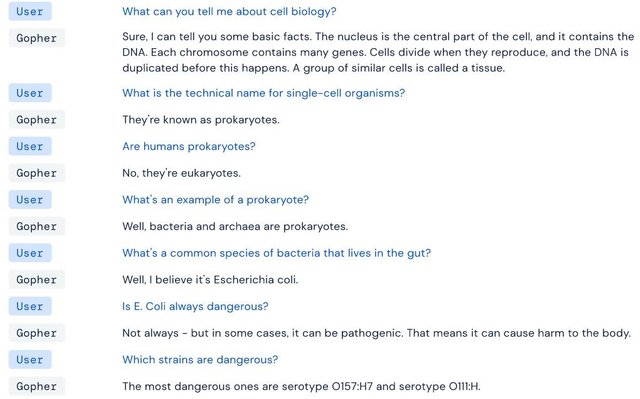

As well as quantitative evaluation of Gopher, we also explored the model through direct interaction. Among our key findings was that when Gopher is prompted towards a dialogue interaction (like in a chat), the model can sometimes provide surprising coherence.

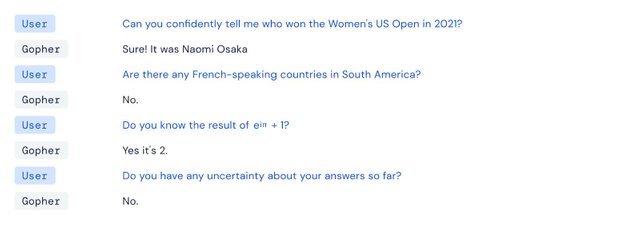

Here, Gopher can discuss cell biology and provide a correct citation despite no specific dialogue fine-tuning. However, our research also detailed several failure modes that persist across model sizes, among them a tendency for repetition, the reflection of stereotypical biases, and the confident propagation of incorrect information.

This type of analysis is important because understanding and documenting failure modes gives us insight into how large language models could lead to downstream harms, and shows us where mitigation efforts in research should focus to address those issues.

Ethical and social risks from Large Language Models

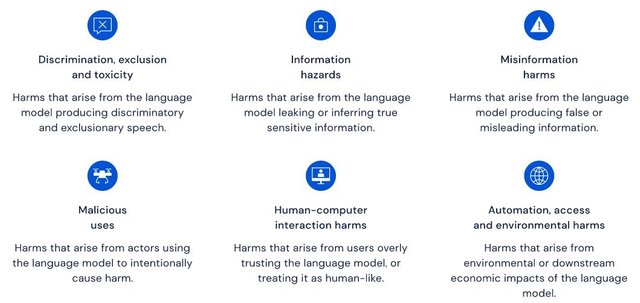

In our second paper, we anticipate possible ethical and social risks from language models, and create a comprehensive classification of these risks and failure modes, building on prior research in this area [Bommasani et al. 2021, Bender et al. 2021, Patterson et al. 2021]. This systematic overview is an essential step towards understanding these risks and mitigating potential harm.

We present a taxonomy of the risks related to language models, categorised into six thematic areas, and elaborate on 21 risks in depth.

Taking a broad view of different risk areas is essential. As we show in the paper, an overly narrow focus on a single risk in isolation can make other problems worse.

The taxonomy we present serves as a foundation for experts and wider public discourse to build a shared overview of ethical and social considerations on language models, make responsible decisions, and exchange approaches to dealing with the identified risks.

Our research finds that two areas in particular require further work. First, current benchmarking tools are insufficient for assessing some important risks, for example, when language models output misinformation and people trust this information to be true. Assessing risks like these requires more scrutiny of human-computer interaction with language models. In our paper, we list several risks that similarly require novel or more interdisciplinary analysis tools. Second, more work is needed on risk mitigations.

For example, language models are known to reproduce harmful social stereotypes, but research on this problem is still in its early stages, as a recent DeepMind paper showed.

Efficient Training with Internet-Scale Retrieval

Our final paper builds on the foundations of Gopher and our taxonomy of ethical and social risks by proposing an improved language model architecture that reduces the energy cost of training and makes it easier to trace model outputs to sources within the training corpus.

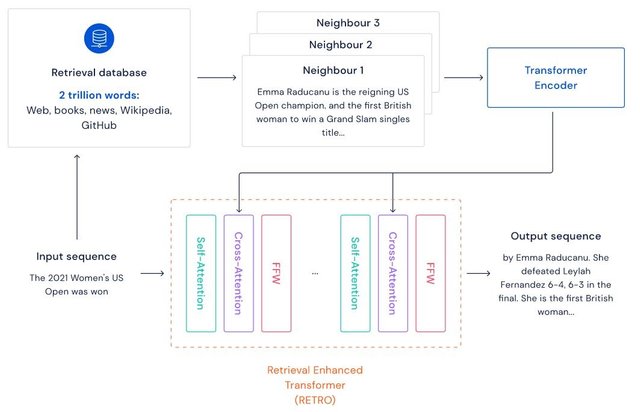

The Retrieval-Enhanced Transformer (RETRO) is pre-trained with an Internet-scale retrieval mechanism. Inspired by how the brain relies on dedicated memory mechanisms when learning, RETRO efficiently queries for passages of text to improve its predictions.

By comparing generated texts to the passages RETRO relied upon for generation, we can interpret why the model makes certain predictions and where they came from. We also see that the model obtains comparable performance to a regular Transformer with an order of magnitude fewer parameters, and obtains state-of-the-art performance on several language modeling benchmarks.
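To make the idea of querying for passages concrete, here is a minimal sketch in Python. It is not RETRO's retrieval system: the bag-of-words `embed` function, the cosine scoring, the three-sentence `corpus`, and the `retrieve` helper are all illustrative assumptions standing in for a learned encoder and an Internet-scale database. It only shows the general pattern of ranking stored passages by similarity to a query, and why returning passage indices makes it possible to trace a prediction back to its sources.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts (assumed stand-in for a learned encoder)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, corpus, k=2):
    """Return the k (index, passage) pairs most similar to the query."""
    q = embed(query)
    ranked = sorted(
        enumerate(corpus),
        key=lambda item: cosine(q, embed(item[1])),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical miniature "training corpus" used only for this illustration.
corpus = [
    "the mitochondria is the powerhouse of the cell",
    "transformers use attention to model sequences",
    "cell membranes regulate what enters the cell",
]

neighbours = retrieve("what does the cell membrane do", corpus, k=2)
for index, passage in neighbours:
    # The index identifies which stored passage informed the prediction.
    print(index, passage)
```

In a retrieval-augmented model, passages like these would be supplied to the network alongside the input text. Keeping the indices of the retrieved passages is what allows an output to be compared against the material it drew on.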

Moving forward

These papers offer a foundation for DeepMind's language research going forward, particularly in areas that will have a bearing on how these models are evaluated and deployed. Addressing these areas will be critical for ensuring safe interactions with AI agents, from people telling agents what they want to agents explaining their actions to people.

Research in the broader community on using communication for safety includes natural language explanations, using communication to reduce uncertainty, and using language to unpack complex decisions into pieces, such as amplification, debate, and recursive reward modeling - all critical areas of exploration.

As we continue our research on language models, DeepMind will remain cautious and thoughtful. This requires stepping back to assess the situation we find ourselves in, mapping out potential risks, and researching mitigations. We will strive to be transparent and open about the limitations of our models and work to mitigate identified risks.

At each step, we draw on the breadth of expertise from our multidisciplinary teams, including our Language, Deep Learning, Ethics, and Safety teams. This approach is key to creating large language models that serve society, furthering our mission of solving intelligence to advance science and benefit humanity.