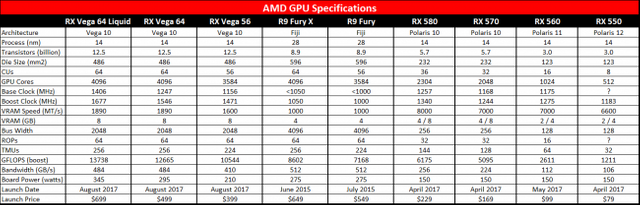

Vega. Two years of waiting to reach for the stars in the fastest of graphics cards is finally here. Let's be frank, this is what we wanted last year, right around the time that AMD was busy launching Polaris for the mainstream market. But Vega wasn't ready, not even close. AMD had to come to terms with 14nm FinFET, and Polaris was the chosen vessel for that. Still, Nvidia managed to launch a full suite of new GPUs using 14nm and 16nm FinFET over the course of around six months. Why did it take so much longer for AMD to get a high-end replacement for Fiji and the R9 Fury X out the door? I have my suspicions (HBM2, necessary architectural tweaks, lack of resources, and more), but the bottom line is that the RX Vega launch feels late to the party.

Or perhaps the Vega architecture is merely ahead of its time? AMD has talked about numerous architectural updates that have been made with Vega, including DSBR, HBCC, 40 new instructions, and more. You can read more about the architectural updates in our Vega deep dive and preview earlier this month. In the professional world, some of these could be massively useful, since professional graphics can be a very different beast than gaming. But we're PC Gamer, so what we really care about is gaming performance, and maybe computational performance to a lesser degree.

Today, finally, we get to taste the Vega pudding. Will it be rich and creamy, with a full flavor that takes you back to your childhood… or will it be a lumpy, overcooked mess? That's what I aim to find out. And rather than beating around the bush, let's just dive right into the benchmarks. I'll have additional thoughts below on ways that Vega could improve over time, but this is what you get, here and now.

Here are the specs for AMD's current generation of GPUs, including the Vega and the 500 series. I don't have the liquid cooled Vega 64 available for testing, but it should be up to eight percent faster than the air-cooled Vega 64, based on boost clocks.

If you look at the raw numbers, Vega holds promise: the Vega 64 has higher theoretical computational performance and more memory bandwidth than the GTX 1080 Ti. But AMD's GCN architecture has traditionally trailed behind Nvidia GPUs with similar TFLOPS. DX12 games meanwhile tend to track a bit closer to the theoretical performance, and certain tasks (like cryptocurrency mining) can do very well on AMD architectures. But the power requirements of AMD GPUs have also been higher than the Nvidia equivalents, and that remains true with Vega.

Based on pricing, the direct competition for the Vega 64 is Nvidia's GTX 1080, while the Vega 56 will take on the GTX 1070. For the mainstream cards, if we ignore the currently inflated prices due to miners, the RX 580 8GB takes on the 1060 6GB, and the RX 570 4GB takes on the 1060 3GB. At nearly every major price point, AMD now has a direct competitor to Nvidia's GPUs. And at every price point, AMD's cards use more power. The one exception is the top of the stack, where the GTX 1080 Ti (and Titan Xp) remain effectively unchallenged. And while there were hopes that AMD was going to blow our socks off with 1080 Ti performance at 1080 pricing, that unfortunately isn't going to happen.
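For a rough sense of where those theoretical numbers come from, peak FP32 throughput is usually estimated as two operations (one fused multiply-add) per shader per clock. The sketch below applies that rule of thumb. The shader counts and boost clocks are assumptions taken from public spec sheets rather than from measurements in this review, and `peak_fp32_tflops` is just an illustrative helper name.

```python
def peak_fp32_tflops(shaders: int, boost_mhz: float) -> float:
    """Theoretical single-precision TFLOPS at the rated boost clock.

    Assumes every shader retires one fused multiply-add (2 FLOPs) per clock,
    which real games rarely sustain.
    """
    return 2 * shaders * boost_mhz * 1e6 / 1e12


# (shader count, rated boost clock in MHz) -- assumed from public spec sheets.
cards = {
    "RX Vega 64 (air)": (4096, 1546),
    "RX Vega 56": (3584, 1471),
    "GTX 1080 Ti": (3584, 1582),
    "GTX 1080": (2560, 1733),
}

for name, (shaders, mhz) in cards.items():
    print(f"{name}: {peak_fp32_tflops(shaders, mhz):.2f} TFLOPS")
```

Under these assumptions the Vega 64 lands above the GTX 1080 Ti on paper, which is exactly why paper TFLOPS are a poor predictor of gaming results across different architectures.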

Before we hit the benchmarks, let's talk about the testbed for a moment. You can see the full list of hardware on the right, and I still haven't really found a need to upgrade from my over-two-years-old Haswell-E rig. No surprise there, since I'm running overclocked and it was a top-of-the-line enthusiast rig when we put it together. I have made one change for 2017, and that's an overclock of the CPU to 4.5GHz, instead of the previous 4.2GHz. It's well within reach of my i7-5930K, and performance remains highly competitive at those clocks. I had considered swapping to the i9-7900X, but time hasn't permitted me to retest everything, and honestly, the 7900X is hardly any faster for gaming purposes.

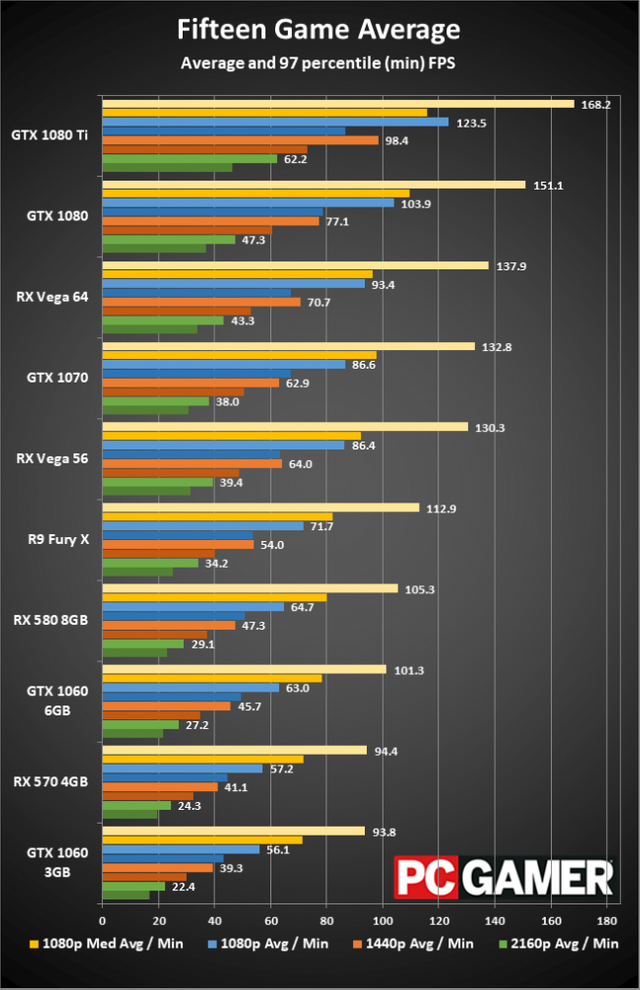

RX Vega vs. the World: a 15-round heavyweight battle

I tend to mix up the games I use for testing periodically, but we haven't really had anything recent that makes for a good benchmark (i.e., reliable and repeatable results, in a game that lots of people are playing). I know, several of the games I already benchmark fail to meet those criteria, but adding a new questionable game to replace an existing one doesn't really help. As such, the gaming benchmark suite remains largely unchanged.

I have switched from Ashes of the Singularity to the 'improved' Ashes of the Singularity: Escalation, which is supposed to be more multi-core CPU friendly. I've also added Mass Effect: Andromeda, simply because it's relatively demanding in terms of both CPUs and GPUs. Finally, I've dropped both Doom (Vulkan) and Shadow Warrior 2, but for different reasons. For Doom, the utility I use to collect frametimes (OCAT) broke with the Windows 10 Creators Update—it might work now, but it didn't for several months so I couldn't test newer cards. Shadow Warrior 2 meanwhile has some strange variability in framerates that I've noticed over time, where the dynamic weather can sometimes drop performance 10 percent or so—not good for repeatable results.

What I'm left with is 15 games, released between 2015 and 2017. There are multiple AMD Gaming Evolved titles (Ashes, BF1, Civ6, DXMD, Hitman, and TW: Warhammer) and also quite a few Nvidia TWIMTBP games (Division, GR: Wildlands, RotTR, and some technologies in Dishonored 2 and Fallout 4). There are also DX12-enabled games, quite a few of which I test in DX12 on all GPUs. The two DX12 games that I don't test using DX12 on Nvidia hardware are Battlefield 1 (slightly worse performance) and Warhammer (significantly worse performance), but Ashes, Deus Ex, Division, Hitman, and Tomb Raider are all tested in DX12 mode—it doesn't necessarily help Nvidia in many cases, but it doesn't substantially hurt Nvidia either. Here are the results of testing:

What about cryptocurrency mining?

Given the shortages on AMD's RX 570/580 cards, which are typically selling at prices 50 percent or more above the MSRP, many have feared—and miners have hoped—that the RX Vega would be another excellent mining option. I poked around a bit to see what sort of performance the Vega 56/64 delivered in Ethereum mining, and so far it's been pretty lackluster, considering the price and power use. The Vega 56 manages around 31MH/s for Ethereum, and the Vega 64 does 33MH/s. Overclocking the VRAM helps boost both cards closer to 40MH/s right now. So at launch, that's not super promising.

The problem is that most of the mining software has been finely tuned to run on AMD's Polaris architecture. Given time, we could see substantially higher hashrates out of Vega, and there are rumors that the right combination of VBIOS, drivers, and mining software can hit mining speeds more than double what I measured. Perhaps AMD is intentionally holding back drivers or other tweaks that would boost mining performance, and long-term AMD remains committed to gaming. All we can do is hold our breath and hope that mining doesn't drive prices of Vega into the stratosphere in the future.

Initial overclocking results

I'm not going to include a complete chart of overclocking results for RX Vega right now, mostly because I don't have them. I did some preliminary testing, and things are far more interesting on the Vega 56 card, as you'd expect. Simply raising the HBM2 clockspeed to 930MHz base (close to the Vega 64's 945MHz) and raising the power limit by 50 percent improved performance to where Vega 56 is effectively tied with Vega 64. Going further, I toyed with the percentage overclock slider and managed to push it up 20 percent higher. In both cases, system power draw while gaming basically matched the Vega 64: 480W at the outlet.

Here's the weird thing: in limited testing, all that added clockspeed did very little for performance. And yes, the clockspeed did go up (along with power draw), as Radeon Settings showed sustained clockspeeds of around 1900MHz. But again, this is only limited testing, so I need to go through the full game suite and verify that my clockspeeds are stable, and see if things are better in some other games.

One thing that didn't go so well with overclocking on the Vega 56 was pushing the HBM2 clocks higher. Maybe I have a card that doesn't OC as well, or maybe I need to find the right dials to fiddle with, but 950MHz resulted in screen corruption and a crash to desktop, and 940MHz showed some flickering textures. I suspect Vega 64 might have slightly higher voltages for the HBM2, or just better binning, but I don't expect VRAM clocks to be all that flexible.

As for Vega 64 overclocking, I ran out of time. I'll be looking at that later this week, and I suspect there's at least a bit of headroom if you're willing to crank up the fan speeds. I did that for Vega 56 as well, where 1500 RPM base fan speed and a maximum of 4500 RPM kept the GPU below 70C during my initial tests. But while raising the power limit of Vega 56 by 50 percent went without a hitch, I suspect I'll need to be more cautious with Vega 64, since 295W plus 50 percent would put the power draw potentially in the 400W or higher range. Yeah, probably not going to work so well.
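The power-limit arithmetic is simple enough to sketch. The snippet below treats the slider as a plain multiplier on rated board power, which is a simplification of how the driver actually budgets power. The 295W figure for Vega 64 comes from the text above, while the 210W figure for Vega 56 is an assumption based on AMD's published board power, and `board_power_ceiling` is a hypothetical helper name.

```python
def board_power_ceiling(rated_watts: float, limit_percent: float) -> float:
    """Upper bound on board power after raising the power limit slider.

    Simplification: treats the slider as a straight percentage increase
    over the rated board power.
    """
    return rated_watts * (1 + limit_percent / 100)


vega64 = board_power_ceiling(295, 50)  # 295W rating quoted in the text
vega56 = board_power_ceiling(210, 50)  # 210W rating is an assumed spec value

print(f"Vega 64 at +50%: up to {vega64:.1f} W")
print(f"Vega 56 at +50%: up to {vega56:.1f} W")
```

Under that simplification, a fully raised limit lets the Vega 56 draw roughly what a stock Vega 64 is rated for, while the Vega 64 would be allowed well past 400W.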

An architecture for the future

I've talked about the Vega architecture before, and really for most gamers the important thing will be how a graphics card performs. But looking at the Vega design, there are clearly elements that aren't really being fully utilized just yet. Just to quickly recap a few elements of the architecture that have changed relative to Polaris, here's what we know.

AMD notes five key areas where the Vega architecture has changed significantly from the previous AMD GCN architectures: High-Bandwidth Cache Controller, next generation geometry engine, rapid packed math, a revised pixel engine, and an overall design that is built for higher clockspeeds. I've covered all but the last of these previously, so let me focus on the higher clockspeed aspect for a moment.

In order to improve clockspeeds, everything has to become 'tighter': the chip layout needs to be optimized, and in some cases work has to be split up differently. AMD talked about adding a few extra pipeline stages to Vega as one solution. I've discussed pipelines in CPU architectures before, and the same basic principles apply to GPU pipelines. Shorter pipelines are more efficient in terms of getting work done, but the tradeoff is that short pipelines generally don't clock as high. AMD didn't disclose full details, but while the main ALU (Arithmetic Logic Unit) remains a 4-stage pipeline, other areas like the texture decompression pipeline have a couple of extra stages. The result of all the efforts to optimize for higher clockspeeds is that Vega can hit 1600MHz pretty easily, whereas Fiji topped out in the 1000MHz range.

Other changes are more forward looking. Take the HBCC, the High-Bandwidth Cache Controller, which is supposed to help optimize memory use so that games and other applications can work with datasets many times larger than the actual VRAM. AMD showed some demonstrations of what the HBCC can do in professional apps, including real-time 8K video scrubbing on the Radeon Pro SSG. For the RX Vega, however, by default the HBCC is actually disabled in the drivers. Maybe that will change with further testing, but AMD says the HBCC will become more useful over time. What about now?

I turned on the HBCC to see what it does, or doesn't, do for current gaming performance. (Yes, I literally reran every single gaming benchmark, just in case there was an edge case where it helped.) The results were in line with what AMD said: HBCC didn't help performance in most games, with results being within the margin of error. Looking to the future, though, if AMD can get developers to build around its hardware, we could see games pushing substantially larger data sets. This is also early days for Vega, so the drivers could be tuned over the coming months. That's true for Nvidia as well, but GTX 1080/1070 have been out for over a year so most of the gains have already been found. We'll have to wait and see if Vega performance improves given more time.