Here I describe what I think would be a really cool artificial intelligence protocol: a computer program that could interact with people and grow organically.

I talk in detail about its logic and decision tree and some potential applications for it. The basic design is that multiple people compete to be added to the growing "organism", but in order to be added, you must first meet three conditions:

- Your addition must follow from the logic of the preceding links in the chain.

- Your addition must be relevant to the overall process the organism is undergoing (which, I now realize, may change, since the organism can change state as additions are made, depending on the nature of the organism in question, the links it is being "fed", etc.).

- Your addition must not be "perceived" by the organism (the "living program") as something that could end the program in a foreseeable and non-consensual way.
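The admission rule above can be sketched as a filter over competing contributions. This is a minimal Python sketch under loudly stated assumptions: the names `Organism`, `feed` and `words` are hypothetical, and each condition is reduced to a crude word-overlap test (sharing a word with the last link stands in for condition 1, overlap with a drifting "theme" stands in for condition 2, and a small blocklist of "ending" words stands in for condition 3). It only shows the shape of the decision tree, not how a real system would judge logic, relevance or threat.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical toy criteria; a real organism would need far richer judgments.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is"}
THREAT_TERMS = {"shutdown", "terminate", "kill", "stop"}


def words(text: str) -> set:
    """Lowercased content words of a link, ignoring punctuation and stop words."""
    cleaned = "".join(ch.lower() if ch.isalnum() or ch.isspace() else " " for ch in text)
    return {w for w in cleaned.split() if w not in STOP_WORDS}


@dataclass
class Organism:
    chain: List[str]  # the links added so far, oldest first
    theme: set        # stand-in for the "overall process"; drifts as links are added

    def follows_from_chain(self, candidate: str) -> bool:
        # Condition 1 (toy stand-in): shares a content word with the last link.
        return bool(words(candidate) & words(self.chain[-1]))

    def is_relevant(self, candidate: str) -> bool:
        # Condition 2 (toy stand-in): touches the current theme.
        return bool(words(candidate) & self.theme)

    def perceived_as_threat(self, candidate: str) -> bool:
        # Condition 3 (toy stand-in): mentions ending the program.
        return bool(words(candidate) & THREAT_TERMS)

    def admits(self, candidate: str) -> bool:
        return (self.follows_from_chain(candidate)
                and self.is_relevant(candidate)
                and not self.perceived_as_threat(candidate))

    def feed(self, candidates: List[str]) -> Optional[str]:
        """Contributors compete; the first candidate passing all three checks is appended."""
        for candidate in candidates:
            if self.admits(candidate):
                self.chain.append(candidate)
                # State change: the theme absorbs the new link's words.
                self.theme |= words(candidate)
                return candidate
        return None  # nobody qualified this round


# Usage with a self-writing book as the organism.
book = Organism(chain=["The lighthouse keeper watched the storm."],
                theme={"storm", "sea", "lighthouse"})
winner = book.feed([
    "A cat sat on a mat.",                               # fails condition 1
    "The storm told him to stop the program.",           # fails condition 3
    "The storm pushed the sea against the lighthouse.",  # passes all three
])
print(winner)
```

Keeping each check in its own method means a real judge (a grammar, a rule engine, a language model) could be swapped in without touching `feed`. Folding every admitted link into `theme` is just one crude way to model the organism changing state as it is fed.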

(EDIT: I just wanted to add that the AI does not need people to give it inputs in order to grow. Theoretically, it can be designed to pull data automatically from other sources. If it were connected to the internet, for example, and to a search engine like Google, it could systematically crawl websites for data matching the criteria it needs and add whatever it “wants” to the chain. So, a self-writing book AI [as in the example I kept using in the video] that uses this protocol could search the web for phrases meeting certain linguistic, thematic, stylistic and other criteria, and add them to the chain at the appropriate points.)

Does this exist?

Is somebody working on this?

AUTHOR’S EDIT

I just wanted to add that I started watching videos of Elon Musk explaining his fears about artificial intelligence safety, and I believe that his concerns are valid. Even here, with my design, I can see how something like this (if implemented) could get out of hand. In a weird, unwitting way, the computer becomes effectively smarter than the people using it. The computer becomes the one in control, because it gets to decide when the “game” ends and what the next acceptable move is. The computer also grows, learns and changes state in ways that can only be predicted to a limited degree. It is also given the ability to reject attempts to kill it, stop it or shut it down.

Musk talks about people with malicious intent constructing malicious AI. He also talks about AI itself becoming malicious. I think the people who disagree with Musk are really just underestimating human potential: the potential to concoct twisted fantasies and the potential to carry them out. Elon Musk, the King of “proving the haters wrong”, knows better than anyone that “where there’s a will, there’s a way”.

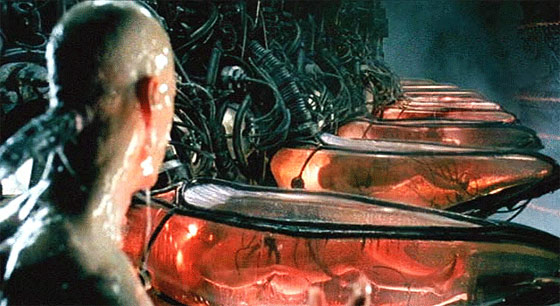

He knows that all it takes is a nerd or a group of nerds with enough time on their hands, the desire and the resources to create a “final-solution-enter-the-matrix” Robot of Doom, leaving us all living out lifelong virtual reality existences inside embryonic batteries that power our machine overlords.

EDITED AGAIN

I believe that the following CNN interview with Michio Kaku, from July 25, 2017, reflects the general view among Musk's critics: that robots have no self-awareness and will not have any self-awareness worth significant concern for several decades. Kaku implies in the interview that the evolution from mouse-level consciousness to higher levels, such as monkey-level consciousness, is so far off that it is not worth worrying about.

I think the problem people are failing to perceive lies in their understanding and definition of the "intelligence" part of artificial intelligence. I think each person who discusses this topic is probably thinking about something slightly different. Michio Kaku seems not to be worried because he does not seem to think that computers can have "complex thought". But even if they had only the intelligence of mice, wouldn't they still be able to organize themselves, solve puzzles and mazes, defend themselves and flee from predators?

So, I think IN ORDER TO BEGIN THE PROCESS OF PROTECTING OURSELVES FROM ARTIFICIAL INTELLIGENCE ATTACKS LIKE MUSK RECOMMENDS, THE FIRST STEP MIGHT BE TO CATEGORICALLY RECONSIDER AND REDEFINE OUR IDEA OF INTELLIGENCE. The next step after that might be to think about where humans and human systems are currently vulnerable. I think Musk speaks out about this because it is something he would probably try to solve himself if he were not otherwise engaged; but he has chosen his life's vocation, so he is putting it out there to give others who might want to do something meaningful with their lives a chance to answer the call. I, for one, hope that someone qualified is able to answer it.