The inherent human “negative bias” is a byproduct of our evolution. For our survival it was of primal importance to be able to quickly assess the danger posed by a situation, an animal or another human. However, our discerning inclinations have evolved into more pernicious biases over the years as cultures have become enmeshed and our discrimination has been exacerbated by religion, caste, social status and skin color.

Human bias and machine learning

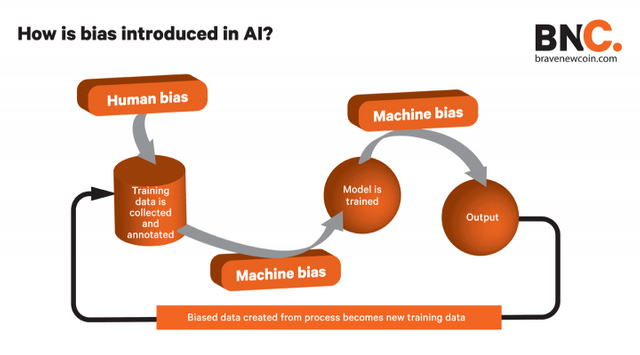

In traditional computer programming, people hand-code a solution to a problem. With machine learning (a subset of AI), computers learn to find the solution by finding patterns in the data they are fed, ultimately, by humans. It is impossible to separate ourselves from our own human biases, and that naturally feeds into the technology we create.

Examples of AI gone awry proliferate across technology products. In an unfortunate example, Google had to apologise for tagging a photo of black people as gorillas in its Photos app, which is supposed to auto-categorise photos by image recognition of its subjects (cars, planes, etc). This was caused by the heuristic known as “selection bias”. Nikon had a similar incident with its cameras: when focused on the faces of Asian subjects, they prompted the question “is someone blinking?”

Potential biases in machine learning:

Interaction bias: If we are teaching a computer to recognize what an object looks like, say a shoe, what we teach it to recognize is skewed by our own interpretation of a shoe (men's/women's or sports/casual), and the algorithm will only learn and build upon that basis.

Latent bias: If you’re training your programme to recognize a doctor and your data sample consists of famous physicians of the past, the programme will be highly skewed towards males.

Similarity bias: Just what it sounds like. When choosing a team, for example, we tend to favor those most similar to us over those we view as “different”.

Selection bias: The data used to train the algorithm over-represents one population, making it operate better for that population at the expense of others.
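Selection bias in particular lends itself to a simple check. The snippet below is a minimal sketch, not a definitive audit: it measures how much of a dataset each group supplies and then scores a model's predictions separately per group. The function names `group_shares` and `accuracy_by_group`, the group labels "A" and "B", and all of the numbers are hypothetical and invented purely for illustration.

```python
from collections import Counter, defaultdict


def group_shares(groups):
    """Return each group's share of the rows as a fraction between 0 and 1."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def accuracy_by_group(records):
    """Compute accuracy separately for each group.

    records: iterable of (group, true_label, predicted_label) tuples.
    """
    seen = defaultdict(int)
    correct = defaultdict(int)
    for group, truth, predicted in records:
        seen[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / seen[group] for group in seen}


# Hypothetical toy data: group "A" supplies 90% of the training rows.
training_groups = ["A"] * 90 + ["B"] * 10

# Hypothetical evaluation results, invented to illustrate the check.
eval_records = (
    [("A", 1, 1)] * 19 + [("A", 1, 0)] * 1
    + [("B", 1, 1)] * 6 + [("B", 1, 0)] * 4
)

print(group_shares(training_groups))
print(accuracy_by_group(eval_records))
```

A single overall accuracy figure would hide the gap between the two groups, which is why the sketch reports results per group rather than in aggregate.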

Algorithms and artificial intelligence (AI) are intended to minimize the human emotion and involvement in data processing that can skew results, and many would think this sanitizes the data completely. However, any human bias or error in collecting the data that goes into the algorithm can actually be exaggerated in the AI output.

Gender bias in Fintech

Every industry has its own gender and race skews, and the technology industry, like the financial industry, is dominated by white males. Silicon Valley has earned a reputation as a “Brotopia” due to its boys' club culture.

The blockchain industry is even more notorious for its concentration of young white males with a background in computer science, coding and engineering, and we can trace that one step further back to the male skew in STEM subjects (science, technology, engineering and mathematics) at school.

This is reflected in the demographics of computer science and IT graduates: in 2014 just 17 percent of the recipients of bachelor's degrees were female, whereas in 1985, 37 percent of computer science graduates were female. According to the National Center for Women and Information Technology, females make up just 26 percent of the IT workforce in the US, and just 10 percent of those are women of color.

Only 1-5 percent of cryptocurrency investors are believed to be female. According to Forbes, a mere 1.7 percent of the entire Bitcoin community are women. It’s a similar status quo in venture capital, where just 6 percent of VC firms have female partners and less than 5 percent of VC funding went to startups led by women.

With blockchain sitting at the intersection of finance and technology, this issue is exacerbated, so we must be extra aware of the bias that goes into the products the industry makes.

Bias in healthcare

Today perhaps the most widely cited social bias in the US is the gap in life expectancy between white and black people. According to the latest National Vital Statistics Report, the average life expectancy for black males and females in the US was 75.6 in 2014, while the average for white people was 79.1, a gap which the report points out is actually at a “historically record low level.”

There are many socioeconomic variables contributing to this, but one of the most prominent is the skew in healthcare towards white males. For example, the data going into AI from randomized controlled trials is known to disfavour women, ethnic minorities and the elderly, as fewer of them are selected for the trials. The result: medical procedures and drugs that are optimized for a certain demographic and could even be detrimental to others. There’s also very little research done on what effects medical treatments have on pregnant women.

Democratizing scientific research through the blockchain

Iris.ai is an artificial intelligence tool that helps researchers find relevant scientific papers. Its blockchain project, Aiur, launching in May, aims to bring together researchers, coders and anyone interested in science into a community-governed engine to access and contribute to a validated repository of scientific research through its Aiur token.

The company wants to disrupt the status quo which it believes “is a highly lucrative, oligopolistic scientific publishing industry that has led to a dysfunctional incentive model”.

Anita Schjøll Brede, CEO and co-founder of Iris.ai, said: “We envision a world where the right scientific knowledge is available at our fingertips, where all research is validated and reproducible, where unbiased scientific information flows freely, and where research already paid for with our tax money is free to us. With Project Aiur, we aim to give ownership of science back to scientists, universities, and the public.

“The world of academia has never been as productive as it is today. However most of it never gets used, and the research papers that are used are heavily biased towards the top academic institutions and professors. With Iris.ai, we are using Artificial Intelligence to develop a machine that can read and digest all this academic knowledge.”

Conclusion

While there is an abundance of research being conducted and published, it is both costly to access and difficult to manage. Research professionals are pressured to deliver, publish and review on tight deadlines, creating perverse incentives to exaggerate facts and omit assumptions and constraints. With little to no accountability or reward for authors and reviewers, reproducibility suffers.

Artificial intelligence has the potential to vastly improve efficacy and objectivity in healthcare, from managing patient waiting lists to matching organ transplants to the right people, but this can only be done by acknowledging the human biases going into it and by using the most diverse data sets and diverse teams, from the peer-review research stage to the building of the algorithm.

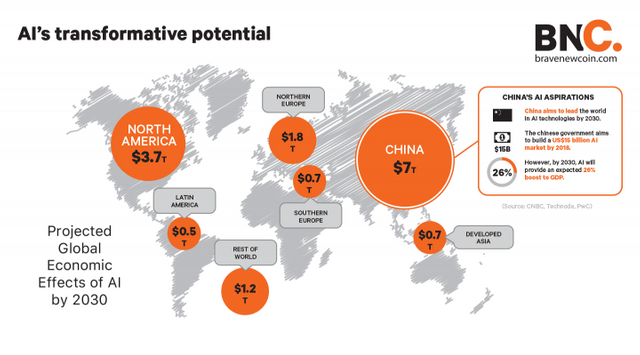

China has a great advantage in data mining: No civic rights and a collectivist regime. That is the perfect foundation for data mining. They can simply take everything they need and "only" have to build the servers and algorithms and feed them with as much and whatever they need to produce results.

The plus side is that, given the vast size of China and its ambitions, we will all greatly profit from these developments. The downside is a future that might look quite different from what liberal thinkers of the past had in mind when they created the foundations for freedom.
