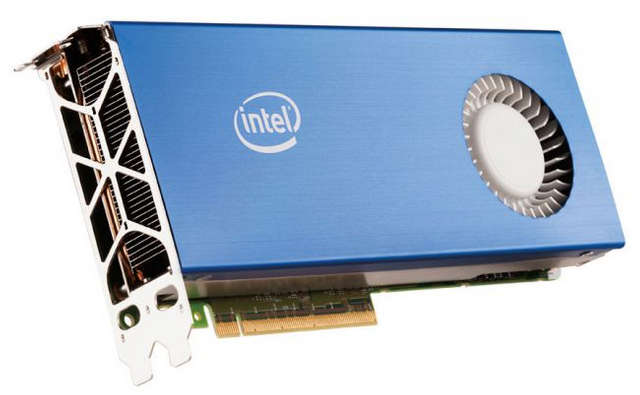

There's been a bit of a rumor going around recently that Intel may be farther ahead in the development of its new GPU than previously believed. The company may give the first showing of its new product line at CES 2019, which takes place in January.

You may think that's a long time, but it's really not. For developing a new GPU architecture and setting up a manufacturing chain, that is basically nothing. So there are two possibilities. One is that the rumor is false... but considering how poor information security is in the tech industry, a leak like this isn't really hard to believe. The other possibility is that Intel was a bit farther along with the idea than we thought.

We know Intel has been working on a GPU at least since it nabbed Raja Koduri from AMD, and it has been making iGPUs for its CPUs for a long time. If the new discrete GPU is nothing but a bigger version of what we now find in the Intel HD series, it really could be ready on a technical level by CES 2019, and probably ready for production by spring or summer 2019. But will that be a good enough GPU to compete with the established players? Performance predictions indicate that a larger version of the iGPU could reach, on paper, a performance level similar to an RX 580 or GTX 1060, somewhere around there.

On paper. Paper is not the same thing as real life, because in real life there is such a thing as a driver. A driver takes a lot of work to function properly: a lot of optimization, and support for a lot of things that aren't Intel's property. Intel has experience making drivers for its iGPUs, and they mostly work fine, but they don't face the same kind of scrutiny as Radeon and GeForce drivers. If something works like crap on the iGPU, people aren't upset. I mean, it's the iGPU. What did you expect? So Intel needs to step up its efforts in that field.

The good part is that, unlike AMD, Intel has a lot of money. Enough money to set up divisions dedicated to testing, validating, and optimizing drivers, basically doing what Nvidia is doing... or did. I mean, in the period I owned an Nvidia GPU, the drivers were just crap, to the point where they broke the Start Menu once.

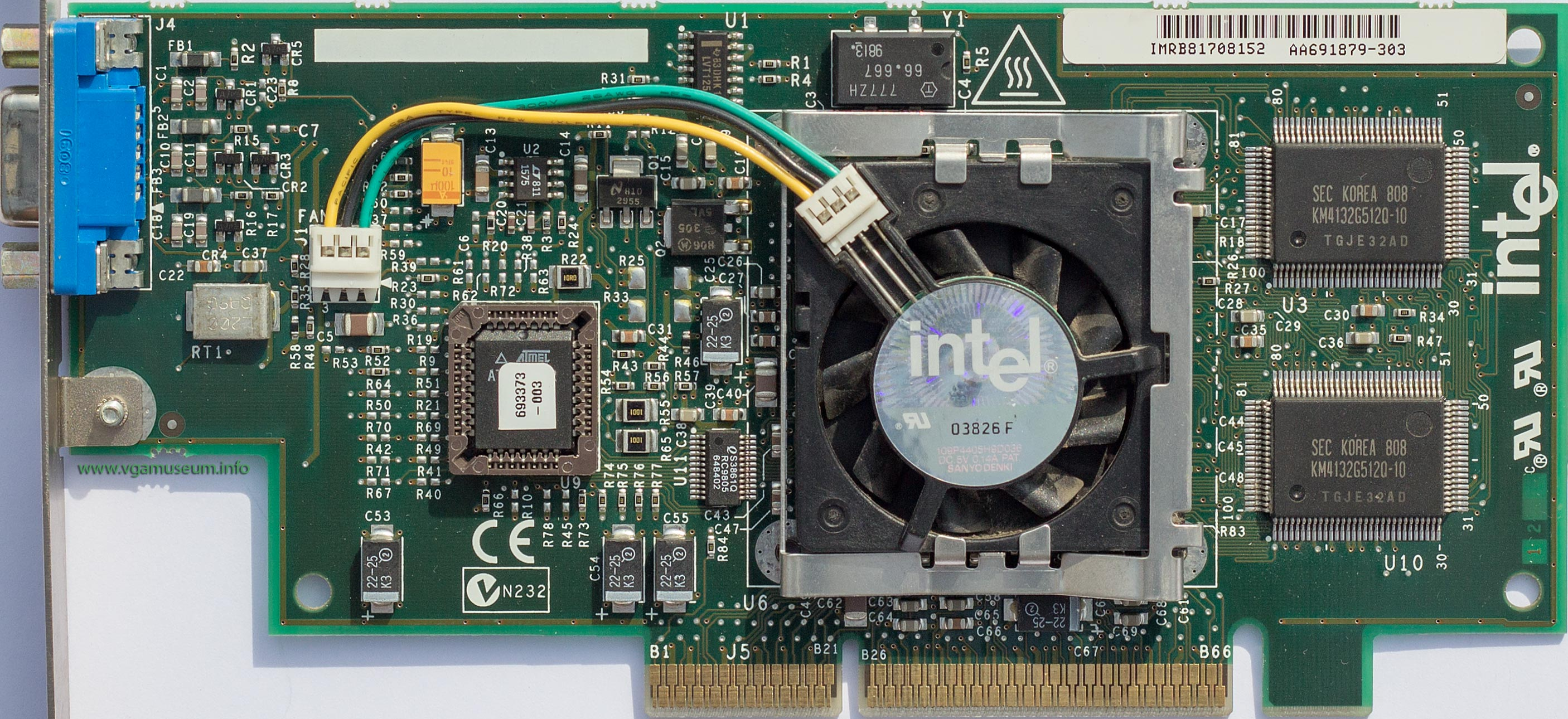

It's also important to note that this isn't the first time Intel has tried to make a dedicated GPU. We had the old, old Intel740... which was not really all that great.

It was then followed by the promise of Larrabee. It was supposed to be a revolution: an x86-based GPU. It didn't really pan out for the consumer market, for various reasons. But it did live on in the Xeon Phi, which is basically a big PCIe CPU cluster that can technically work as a GPU, but has the rendering/texturing hardware cut out of it.

Performance will be very important for the success of this new GPU. But even if it's not at the level of a 1080 Ti, it will still disrupt the market. Instead of two choices, we'll have three.

Now, this may not bode well for AMD, since it isn't in the best situation on the GPU side right now. It can fund more development because its CPUs are flying off the shelves... technically so are its GPUs, because of the miners... but there's no market share to be gained there. Those cards aren't going into the gaming ecosystem... until they end up on the second-hand market. With three companies, two of them having a lot more money, AMD may struggle a bit.

It also means that Nvidia needs to step up its game, because Intel does not kid around. It will go for the jugular. Nvidia tried to preempt this with the GeForce Partner Program (GPP), to keep its video cards on the flagship brands and push AMD and any future competitor to smaller brands. The GPP, however, has now been canceled, because of outcry from EVERYONE and possibly the stink eye from Intel. Let's face it, if there's one company that can do dirty tricks well, it's Intel. And since Intel still has the lion's share of the CPU market, you don't want to piss it off. AMD can rage all it wants that the competition is being unfair, and it won't do much; Intel still owes billions in fines for the anti-competitive things it did over the past few decades. But if Intel complains, it won't just get results, it will get revenge.

So this is where we are now: waiting for more leaks. And there will be leaks; information security in this industry is a joke. The first generation may be rough, but that's never stopped Intel before. Just curious, would any of you be interested in an Intel GPU when they come out? Would you think of jumping ship to Team Blue if it looks good enough?

A third player in the GPU scene should be really good for consumers. Whilst AMD and nVidia do fight each other a lot and work to keep each other honest, I would sometimes like to see more choice.

The Larrabee GPU from Intel was something I wish had been developed fully, especially the concept that almost everything (if not everything?) was software-based instead of fixed-function hardware. So, you need DX12? Well, it's software! You need Shaders? Software! That was awesome, in theory, because if the end user ran a program that did not use, say, Shaders, the cores that would otherwise be assigned to Shaders could go full power on the functions you did need. (Unlike traditional GPUs, where if you don't use Shaders, the hardware that handles Shaders is pretty much inactive.)

Perhaps Intel may have some success with their new GPUs this time round, and whilst I doubt it would be a Larrabee-style graphics card, it would be awesome if they tried things a bit differently.

As for jumping to Team Blue, the answer is simple. If they have a card that is competitive in both price and performance, then of course I'd consider it.

P.S. I feel you on Nvidia having 'used to' make good drivers. Currently I'm not a fan of either AMD or nVidia drivers (though AMD's UX and UI are better 'right now', and I do not like having to sign up with nVidia for GeForce Experience). I have 2 systems (1 green, 1 red) and both have driver problems far too often.

I hope that comes with liquid oxygen if it's based off of AMD. AMD is cute and all when it comes to tweakers, but practically, having an extra stove in a small room in the summer isn't all that great, in my experience. Intel tends to be more expensive, of course. My main CPU is still AMD, but the other two are Celerons and doing just fine. After my last AMD card burned up (again), I got an Nvidia this time, and I can't complain, really.

That heat, man. I'll gladly give up a few invisible FPS for 40 degrees less, lol.

Alongside the benefits, the problem will always remain the energy consumption and the high temperatures it generates. Thank you for sharing such great information.
