Artificial intelligence has been a game-changer in industries ranging from retail to healthcare to finance. With great power comes great responsibility, or so the saying goes. Yet while more and more firms are integrating AI into their operations, very few are transparent about how they use it and how it affects their customers and society. I'm going to go ahead and say it: businesses need to be far more candid about their use of AI.

Some may well say that AI is "just a tool" and that there's no need to disclose its inner workings. Yet given AI's growing influence, from hiring practices to online recommendations, it's time for corporations to get real about how they're using it.

The Case for Transparency

It is easy to imagine a firm using AI to sift through job applications. On the surface, that sounds efficient and fair; after all, a machine isn't biased, right? Wrong. In reality, the data fed into these systems often reflects human biases. Amazon, for instance, had to scrap an AI recruiting tool that turned out to be discriminating against female candidates. So much for being objective!

A lack of transparency breeds suspicion. When companies will not disclose how AI makes such decisions, customers, applicants, and other stakeholders cannot hold them accountable when the outcome is unfair. This is a matter of ethics, but it is a matter of trust, too: every time a company veils its use of AI, we catch ourselves asking: what are they afraid to tell us?

Artificial Intelligence and Data Privacy

The other leading reason companies should be more transparent about their use of AI is data privacy. To function properly, AI needs enormous amounts of data. But where does this data come from? In most cases, the answer is us: our search history, shopping habits, social media interactions, and even biometric information.

But when companies use that information to train their AI models, they too often leave consumers in the dark about how it is used and shared. Take facial recognition software. Companies like Clearview AI have collected billions of images from social media without users' permission. While this technology does have some legitimate uses, such as in law enforcement, it is also fraught with privacy concerns. How many of these people would be comfortable with their photos being used this way if they actually knew it was happening? Not many.

The only way companies can avoid a backlash is by being more open about where their data comes from, how it is used, and how it is protected. Only when companies are transparent will customers stop feeling that AI is being used to take advantage of them.

The Risks of Black-Box AI

One of the largest concerns with AI is its tendency to be what we call a "black box." The most sophisticated systems are so complex that sometimes even their developers cannot explain exactly how they reach certain decisions. Say, for instance, a bank uses AI to evaluate loan applications and rejects yours. If the bank itself cannot explain why the AI made that decision, ethical and legal questions arise.

Some jurisdictions have taken this a step further with regulation. The European Union enacted the General Data Protection Regulation, which is widely read as giving people a right to an explanation of decisions made about them by automated systems. Wherever no such regulation exists, however, people are often left very much in the dark. Without a minimum level of transparency, customers may grow resentful and mistrustful because they are led to believe they are being treated unfairly.

Companies should be ready to lift the curtain and explain their AI processes to customers. If the technology is fair and accurate, they have nothing to hide. And if they do not know how the AI makes decisions, then perhaps it's time to hit pause and reconsider using that technology in the first place.
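To make the loan example concrete, here is a minimal sketch of what an explainable decision could look like. It assumes a deliberately simple linear scoring model; the factor names, weights, and approval threshold are all hypothetical and chosen only for illustration, not taken from any real bank or credit model. The point is that each factor's contribution to the score is visible, so a rejection can come with reasons attached.

```python
# Hypothetical weights for a toy linear credit score (illustration only).
WEIGHTS = {
    "income_thousands": 0.5,
    "years_employed": 2.0,
    "missed_payments": -15.0,
    "debt_to_income_pct": -1.0,
}
APPROVAL_THRESHOLD = 20.0  # assumed cutoff, not a real lending rule


def score_with_reasons(applicant):
    """Return (approved, score, reasons) for one applicant.

    reasons lists the factors that lowered the score, largest penalty first.
    """
    # Contribution of each factor = weight * applicant's value for that factor.
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = sum(contributions.values())

    # Keep only factors that pulled the score down, most negative first.
    negatives = sorted(
        (item for item in contributions.items() if item[1] < 0),
        key=lambda item: item[1],
    )
    reasons = [
        f"{name} lowered the score by {abs(value):.1f} points"
        for name, value in negatives
    ]
    return score >= APPROVAL_THRESHOLD, score, reasons


# A made-up applicant, used only to show the output shape.
applicant = {
    "income_thousands": 60,
    "years_employed": 3,
    "missed_payments": 2,
    "debt_to_income_pct": 35,
}
approved, score, reasons = score_with_reasons(applicant)
print("Approved:", approved)
for reason in reasons:
    print("-", reason)
```

Real credit models are rarely this simple, and explaining a complex model is a much harder problem. Still, the sketch shows the kind of answer a rejected applicant could reasonably expect: which factors counted against them, and by how much.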

Business Case for Transparency

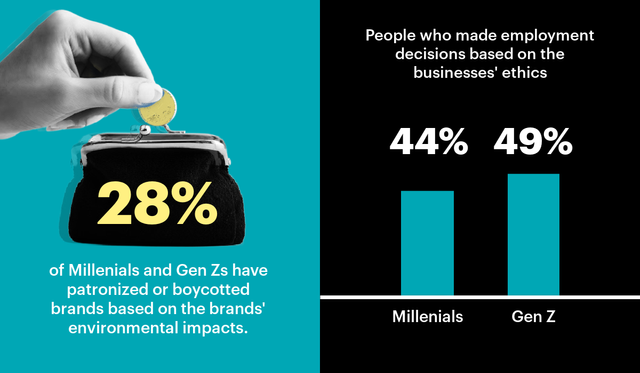

Being transparent isn't just the ethical thing to do; it's good business. The more companies can be open about their use of AI, the better their relationships with customers will be. According to Edelman, 81% of people say they need to be able to trust a brand in order to buy from it. AI transparency could go a long way toward fostering that level of trust.

Consider Apple's approach to privacy: by positioning itself as a champion of user data protection, it has fostered loyalty among its customers. Now extend this to AI. Imagine if more firms were equally transparent about AI, spelling out how they use it and how customers benefit. Transparency itself could become a competitive advantage.

Counterarguments

Some would say that AI processes should be kept confidential for competitive reasons. If companies disclose too much, they may enable competitors to replicate the technologies that give them an edge in the market. But transparency does not mean disclosing every line of code. It means being open enough that customers understand how AI affects them.

Others fear that transparency would overwhelm the customer. AI is complex, and most customers do not want to understand the technology behind it. Fair point. Yet there are ways to explain things simply, so that people come away with a good understanding without needing the fine details.

My Take

In today's world, where artificial intelligence shapes so many aspects of our lives, corporations have an obligation to be open and transparent about it. This means being clear about where they are using AI, what data they are collecting, and how that data is being protected. Every organization that isn't open about its use of AI risks losing something even more important than its reputation: customer trust and respect.

We don't have to wait for regulation to force transparency. Companies, if they so wish, can be proactive and light the way to a future where AI is deployed responsibly and ethically. So let's start holding companies accountable by pushing them to open up about AI. It's time for transparency.