(L)oad the data - safely, without corruption, without judgement, without "cleaning".

(E)xtract new information from the data - generate new properties from this.

(T)ransform - build views, construct optimizing indexes, mine the data for deeper structure, construct property-relational topological maps.

(AKA -- "Your 'L' is in the WRONG PLACE")

Bottom line! - please, for the sake of all that is holy, DO NOT DESTROY THE DATA!

I know there are those who believe they know "what" the data looks like in the future - no one does. We also do not know how reports will be built. We do not know which metrics, 5 years from now, will be most relevant - we can guess, but the agile business allows flexibility. No person, save God, can know the future state of information beyond a "likelihood" - nobody.

Also, to be blunt, we really don't know what "garbage" is. I know Kimball and Inmon probably think they know - or can give good prescriptions (to be Roman - proscriptions), but they don't really know either. Garbage today is gold tomorrow and if you don't believe me you haven't been doing this very long. Even really stupid data corruption - like the HL-7 message whose field contained nothing but 3000 chars of white space mainly because the nurse at that station had fallen asleep at the ADT keyboard - yes, even the stuff that seems stupid should be preserved. You never know what you might learn later on. Of course, there are ALWAYS exceptions and certainly data warehouses shouldn't be "data hoarders" but this is where a modicum of common sense should be sufficient.

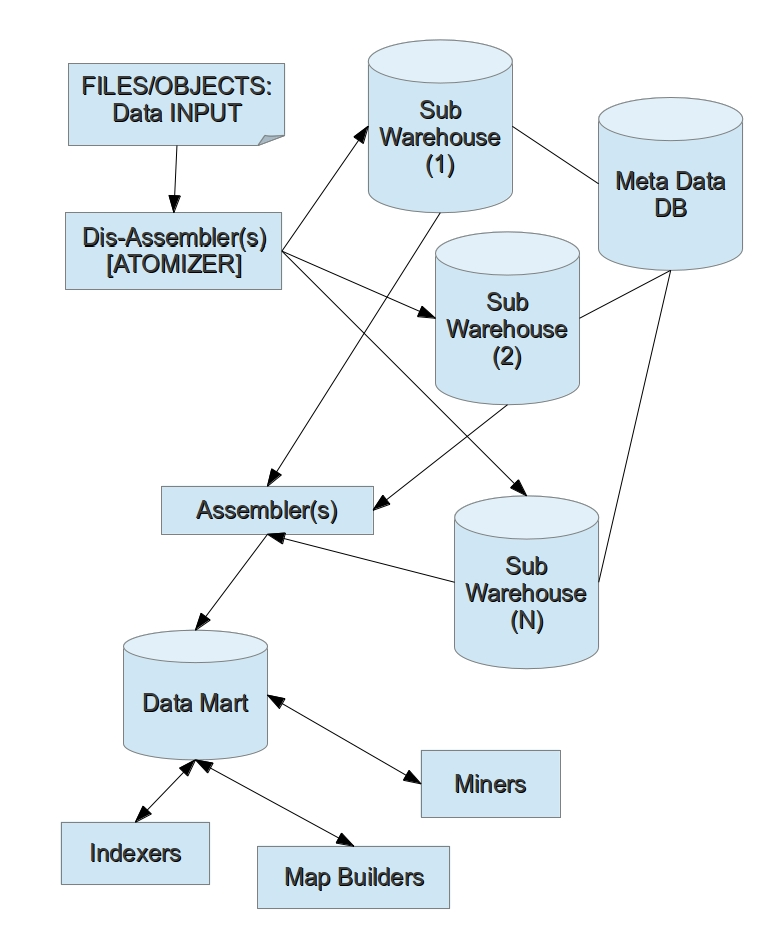

What are the principle components of a LET Data Warehousing and Data Mart provider architecture?

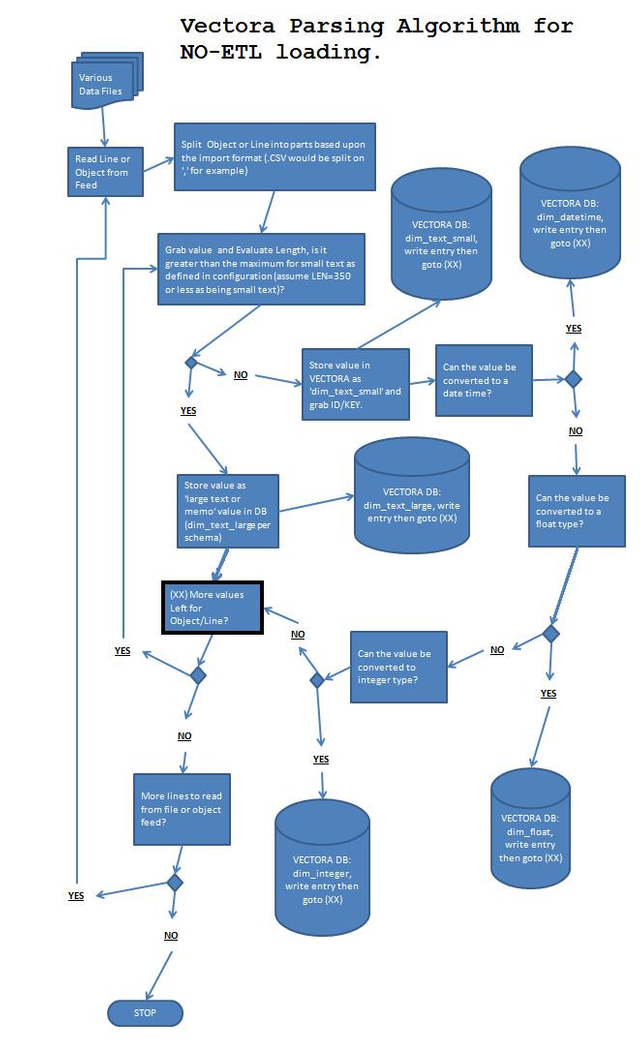

Dis-assemblers (or Atomizers): Assume common <interface> implementation - these processes consume files of many formats and converts the data to dictionaries of key/value pairs. These dictionaries are given a common ID managed by the dis-assembler. Dis-assembler name PLUS this ID as STRING would be the universal ID. The values in each key/value pair are tested: small text size or large? can be converted to double or not? can it be treated as date/time? For any test that passes, an additional tuple is generated. Common ID is then hashed to determine which sub-warehouse will hold the record. Hash(n) where (n) is the total number of databases used as sub warehouses. Within each sub warehouse, the data is stored as a simple (Common ID, Value[typed], date/time stamp) pair in a table with the name of [Object Name/Property Name] - all values are strongly typed. Obviously, you will want to generate an object name property if you do not want anonymous data types persisted. You may want to implement a common <interface> around the <algorithm> which consumes the key/value pair and translates to "possible" extended values - since this is an open ended architecture - aka "coalescing types". DO NOT STORE NULL DATA! - No empty pigeon holes, please. Also, the date time stamp can be stored as an integer constructed from the YYYYMMDDHHmmSSsss parse of date time down to millisecond - though it is unlikely that value space versioning needs to get more precise than the day, hour or minute (not likely seconds - unless real-time data is involved). The common ID allows updates to be slotted correctly per an original OBJECT undergoing a versioning update. The HASH(n) for sub warehouse storage locations can be, and perhaps SHOULD BE, replaced with a directed <functor> for "placement" of an object or file record into a meaningfully organized data warehouse organized around rational business relevant partitions.

BASELINE ALGORITHM FOR "Coalescing Types":

Assemblers: Following a common <interface> implementation - these processes, triggered on a schedule most commonly, follow a standard configuration file to construct standard database Data Mart tables from data stored in one of the (n) sub warehouses. A meta-data database, containing . The Assemblers are also responsible for keeping the table "up to date" and dropping the tables if required. These tables represent the baseline data for any reporting architecture. Views might be built off of these, and you might use assemblers to do this as well, but this data should be primarily seen as the "refreshed" primary data from the warehouse.

Indexers: Following a common <interface> implementation - these processes, likely triggered on a schedule, build out "indexing tables" or "bridge index tables" between the different tables in the Data Mart. These are <abstract> indexes that will leverage the built in indexing of the specific database vendor. These bridge tables only store a Common ID TUPLE (ID,ID,....) that allows many objects to be joined in different kinds of relations - with different levels of uniqueness.

Map Builders: Following a common <interface> implementation - these processes will construct topological maps of all possible and <functor> transform supplied connections between different records. This can be seen as the generalized map of the "data verse". It should be stored as EDGE tuples of (Node1,Node2,Cardinality) and displayed using efficient visualization like Microsoft GLEE - potentially 3D immersion searchable GRAPHS where the researcher can actually interact with the fully connected value space and then use these (VALUES) from the graph to drill-down into the data deeper.

Miners: Following a common <interface> implementation - will scour the data, using various <interface> compliant data mining algorithms, to generate special tables in the Data Mart which display mining results. These miners may (and probably should) leverage pre-existing technology by using Façade and Adapter patterns. These miners ONLY access the data mart! These miners only send results to the data mart!

The key concept here is this: transformation of the data, into useful values for specific business cases, happens LAST. Last is good. Last means we have time to make better judgements on how we want to see the data - last might even be in the "reporting layer", like SSRS.

Business rules, indexing relations, semantic structures, functors, topological closures, mining results, views, reports - this is the "volatile" region of any Business Intelligence architecture and should be designed to allow for volatility without irreversibly damaging the Data Warehouse records. A corollary to this is: objects in the Data Mart should be "report ready" and "query ready" and "validated" and "audit-ready". The Data Mart SHOULD NOT require further complex transformation - of course there are many benign and common transformations that are totally appropriate (formatting, mapping, some calculations). Assemblers should do most of the "heavy work" for transformation.

Transformations and "semantic relation" building (semantic relation is one that has special meaning in a business context) must happen in the Data Mart - not before entry or AT the Data Warehouse. These relations and transformations may feel permanent - but they are not. They have value as long as the current flurry of business interest and then the interest passes. And, maybe it is biomedical research, or genetics, or something else really complex and cool: interest IN a a relation or transform is always mediated by "need". If you don't need it, why hold on to it? (sure, you might serialize to file a snapshot of it before you delete it, but then you do start seeming like a data hoarder).

It is all "contingent" - see (static paradox).

In order to focus our efforts on supporting the changing business domain, we must first address our concerns - describe those values which guide our effort.

Which positive features of the data warehouse are we most interested in?

Which features are essential to supporting the business analyst and the decision maker?

I have enumerated a list of 'values' or moral goals which we can use to evaluate and to measure any particular data warehouse - all of these would need to be translated into an objective measure for proper comparison:

- Maximum fill: a data warehouse MUST be designed to reduce (to a minimum) the number of empty 'holes' given some universe of 'pigeons'.

- Finite: to the extent possible, it only stores unique facts - if necessary it will keep track (via counting) of unique facts.

- Compact: it does not store repeating or duplicating data and where possible will slice these repeating sequences off from a parent and create a new child table/entity. This can be done automatically based upon the entropy state of the table itself.

- Targeted: This is tricky, but basically we want to reduce the number of 'moves' a fact makes to an absolute minimum while moving to its final destination in the warehouse -- and then reduce how much churn happens after that. This means we reduce file i/o, network traffic and other 'general' resource allocations so that other processes can more effectively share these resources too.

- Frugal: Related to targeted. A good DW will only use the resources from the CPU it must and will not store or spawn resources that are not necessary to the function of the DW. A good data warehouse will only save a unique object once (related to compactness and maximum fill)

- Decentralized/Federated: a good data warehouse is naturally federated into smaller db/server deployments. For example, a hospital system might have 100 facilities, you can use this information plus a temporal feature (like date of service) to organize your DW into smaller cells or units. This will also make your analysts happy, because their queries will run faster.

- Rational: does not create dimensions based on field names, but rather on the nature of the data - those characteristics that make it open to heterogeneous data sources. It recognizes that the value space is a slowly changing dimension - in terms of time and space. The value space is increasing at a decreasing rate.

- Focused: not everything belongs in a data warehouse, there are MANY algorithmic operations which would be better implemented outside the scope of the DW, in another process.

- Friendly: design a system which makes the life of the interface/report (both input and output) designer easier - not more difficult

- GO Like, Not Chess Like: while the value space may be relatively finite, the relational space is potentially infinite - especially if we allow for multiple connectedness (or more than one arc between nodes). The DW should therefore allow for open-ended generative growth through flexible version and not restrict itself to a confining solution space. Chess designed databases work, but only in finite and specific domains.

- Testable/Verifiable/Audit-able: a well designed data warehouse is open to inspection, audit ready (given an appropriate amount of resources), testable (both in terms of ongoing, but more importantly at the start to validate design -- you should ALWAYS validate design!) By being open a data warehouse is allowed to evolve, by having simple open-ended rules, it can evolve flexibly -- because the world is always changing. Data is not perfect, but you can have an accurate representation of the imperfection.

- Fast: it is our belief, that if you implement the above, you will achieve speed. But, there is something important about calling out this feature as being not simply 'important' but probably the MOST important feature.

- Accepting: a good data warehouse will attempt to store data 'as is' without obliterating or molesting or messing with the essential form of the source data -- ETL is butchery!

- Don't re-invent the wheel: if there are features/behaviors that the OS or other systems are ALREADY doing, don't duplicate it if it works -- integrate with it.

- Safe Navigation: Allow for SAFE high level investigation, but prevent drilling into sensitive/PHI/HIPAA protected data. De-ID should be seen as a process of hiding and showing sub-trees within the super tree of the ontology.

- Fluid: The distinction between FACTS and DIMENSIONS is somewhat arbitrary and context sensitive. As with living language, living data must accept this and learn to manage this. Put another way: one person's fact is another person's dimension.

- Coalesce versus Conform: Data should be allowed multiple separate interpretations (dimensions/types), and allow the report/view designer to choose the appropriate one. We conform the value space and NOT the space of relations.