Credit card fraud has been prevalent for a long time. Even with advances in security features, no one can be entirely sure of being safe from it. I, Jagrit Singh, and a classmate of mine, Bhuvnesh Rana, decided to tackle this problem as part of a college project we worked on together. We created a Python application that uses self-organising maps (SOMs) to detect fraudulent customers from a bank's credit card application records.

INTRODUCTION TO SOM

A self-organizing map (SOM) is a type of artificial neural network that uses unsupervised learning to build a two-dimensional map of a problem space. The key difference between a SOM and other approaches to problem solving is that it uses competitive learning rather than error-correction learning such as backpropagation with gradient descent. A SOM can generate a visual representation of data on a hexagonal or rectangular grid. Applications include meteorology, oceanography, project prioritization, and oil and gas exploration. A self-organizing map is also known as a self-organizing feature map (SOFM) or a Kohonen map.

The aim is to make the nodes in the network respond differently to different inputs, so that through competitive learning the nodes eventually specialize. Each node holds a weight vector. When an input is presented, the Euclidean (straight-line) distance between the input and each node's weight vector is computed, and the node whose weights are most similar to the input is called the best matching unit (BMU). As the network moves through the problem set, the weights start to look more like the actual data. The network has thus trained itself to see patterns in the data, much the way a human does. Unlike supervised or error-correction learning, this training uses no error or reward signals, which is why a self-organizing map is a kind of unsupervised learning.
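To make the idea of a best matching unit concrete, here is a minimal sketch in plain Python. It is not the code from our project: the function names `euclidean_distance` and `best_matching_unit`, the 2x2 grid, and the weight values are all made up for illustration.

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def best_matching_unit(weights, sample):
    """Return the grid position whose weight vector is closest to the sample.

    `weights` maps a (row, col) grid position to that node's weight vector.
    """
    return min(weights, key=lambda pos: euclidean_distance(weights[pos], sample))

# Illustrative 2x2 map with two-dimensional weights (values are invented).
weights = {
    (0, 0): [0.1, 0.2],
    (0, 1): [0.9, 0.8],
    (1, 0): [0.5, 0.5],
    (1, 1): [0.0, 1.0],
}

bmu = best_matching_unit(weights, [0.85, 0.75])
print(bmu)  # (0, 1)
```

The node at `(0, 1)` wins because its weights `[0.9, 0.8]` lie closest to the input `[0.85, 0.75]`.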

BASIC STRUCTURE

The self-organization process involves four major components:

Initialization: All the connection weights are initialized with small random values.

Competition: For each input pattern, the neurons compute their respective values of a discriminant function which provides the basis for competition. The particular neuron with the smallest value of the discriminant function is declared the winner.

Cooperation: The winning neuron determines the spatial location of a topological neighborhood of excited neurons, thereby providing the basis for cooperation among neighboring neurons.

Adaptation: The excited neurons decrease their individual values of the discriminant function in relation to the input pattern through suitable adjustment of the associated connection weights, such that the response of the winning neuron to the subsequent application of a similar input pattern is enhanced.
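The four components above can be sketched as one small training loop. This is a simplified illustration under our own assumptions, not the implementation used in the project: the squared Euclidean distance serves as the discriminant function, the neighborhood is Gaussian, and the learning rate and neighborhood width decay linearly. The names `train_som` and `quantization_error` and all parameter defaults are hypothetical.

```python
import math
import random

def train_som(data, rows, cols, n_iter=500, lr0=0.5, sigma0=1.0, seed=0):
    """Train a tiny SOM; returns a dict mapping (row, col) to a weight vector."""
    rng = random.Random(seed)
    dim = len(data[0])

    # Initialization: small random weights for every node.
    weights = {(r, c): [rng.uniform(0.0, 0.1) for _ in range(dim)]
               for r in range(rows) for c in range(cols)}

    for t in range(n_iter):
        frac = t / n_iter
        lr = lr0 * (1.0 - frac)               # assumed linear decay
        sigma = sigma0 * (1.0 - frac) + 1e-3  # keep the width above zero
        x = rng.choice(data)

        # Competition: the node with the smallest discriminant value wins.
        bmu = min(weights, key=lambda p: sum((w - xi) ** 2
                                             for w, xi in zip(weights[p], x)))

        for pos, w in weights.items():
            # Cooperation: influence falls off with grid distance from the winner.
            grid_d2 = (pos[0] - bmu[0]) ** 2 + (pos[1] - bmu[1]) ** 2
            h = math.exp(-grid_d2 / (2.0 * sigma ** 2))
            # Adaptation: move the weights toward the input.
            for i in range(dim):
                w[i] += lr * h * (x[i] - w[i])
    return weights

def quantization_error(weights, data):
    """Mean distance from each sample to its closest weight vector."""
    total = 0.0
    for x in data:
        total += min(math.sqrt(sum((wi - xi) ** 2 for wi, xi in zip(w, x)))
                     for w in weights.values())
    return total / len(data)

# Toy data with two well-separated groups (invented for the example).
data = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [1.0, 1.0], [0.9, 1.0], [1.0, 0.9]]
som = train_som(data, rows=2, cols=2)
print(quantization_error(som, data))
```

Calling `train_som` with `n_iter=0` returns the untrained weights, which gives a baseline for checking that training actually lowers the quantization error.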

WORKING

In a SOM, the data points compete for representation on the map. The mapping starts by initializing the weight vectors. A sample vector is then selected at random, and the map of weight vectors is searched to find the weight that best represents that sample. Each weight vector has neighboring weights that are close to it. The weight that is chosen is rewarded by becoming more like the randomly selected sample vector, and its neighbors are rewarded in the same way. This allows the map to grow and form different shapes, most commonly square, rectangular, hexagonal, or L shapes in 2D feature space.

Step by step: each node's weights are initialized. A vector is chosen at random from the training data. Every node is examined to find the one whose weights are most like the input vector; this winning node is commonly known as the Best Matching Unit (BMU). The neighborhood of the BMU is then calculated, and the number of neighbors decreases over time. The winning weight is rewarded by becoming more like the sample vector, and the neighbors also become more like it. The closer a node is to the BMU, the more its weights are altered; the farther away it is, the less it learns.

The BMU is found by running through all the weight vectors and calculating the distance from each one to the sample vector. The weight with the shortest distance is the winner. There are numerous ways to measure the distance, but the most commonly used is the Euclidean distance.
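The way learning falls off with distance from the BMU, and the way the neighborhood shrinks over time, can be illustrated with a few helper functions. This is only a sketch: the Gaussian influence and the exponential decay are common choices that we assume here, and the names `neighbourhood_influence`, `decayed`, and `update_node` are hypothetical.

```python
import math

def neighbourhood_influence(grid_distance, radius):
    """Gaussian influence: 1.0 at the BMU, approaching 0 far away from it."""
    return math.exp(-(grid_distance ** 2) / (2.0 * radius ** 2))

def decayed(initial, t, time_constant):
    """Exponentially shrink a radius or learning rate as iteration t grows."""
    return initial * math.exp(-t / time_constant)

def update_node(weight, sample, learning_rate, influence):
    """Move a weight vector toward the sample, scaled by rate and influence."""
    return [w + learning_rate * influence * (x - w)
            for w, x in zip(weight, sample)]

sample = [1.0, 1.0]
start = [0.0, 0.0]

# Compare an early iteration with a late one: the radius shrinks,
# so distant nodes are affected less and less.
for t in (0, 200):
    radius = decayed(2.0, t, time_constant=100)
    for d in (0, 1, 2, 3):
        h = neighbourhood_influence(d, radius)
        moved = update_node(start, sample, learning_rate=0.5, influence=h)
        print(t, d, round(h, 3), [round(v, 3) for v in moved])
```

At every iteration the node at grid distance 0 (the BMU itself) moves the most, and at the later iteration the nodes farther away barely move at all.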

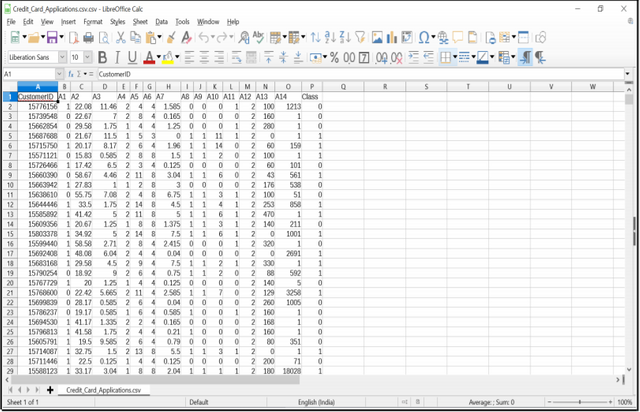

A snapshot of a part of the dataset that is provided to the application is given below:

RESULT

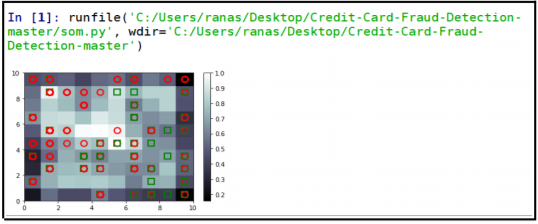

The generated self-organising map is given below:

The red circles denote the fraudulent credit card transactions, and the colour transition in the background represents the probability of a fraud occurring.