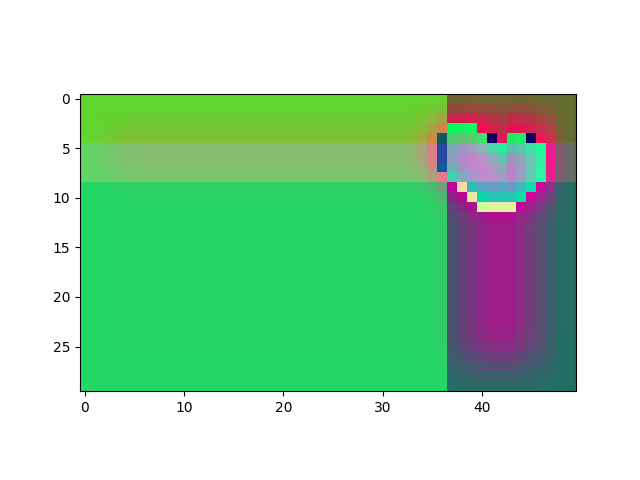

This post follows my previous one (https://steemit.com/grammatrix/@maicro/floorplan-grand-thoughts-on-using-a-style-transfer-algorithm) and is part of the series on my machine learning model, floorPlan (https://maicro879342585.wordpress.com/2021/08/18/grand-floorplan/). In this article, I'm going to show you how my model came up with the little heart in the picture above.

After studying Bethge Lab's picture style transfer model, as outlined in my previous post, I went ahead and built my own version of the style transfer model. I have explained the infrastructure in which this model is going to work on the model page linked above, so please feel free to look it up there. I looked at what I needed a style transfer model to do and figured that I don't need something as large as the models designed for paintings, while I may need to go in and do quite a bit of tweaking. So I decided to code one from the ground up.

For my "typical" floor plan, I'm allowing a dimension of 30 ft by 50 ft, with each pixel in my model representing 1 sq ft. Of course, these parameters are easily adjustable, but the larger the floor plan, the more memory... it will take. In general, my floor plans would be much smaller than a picture, so my model would require far fewer computational resources than a typical computer vision problem. The CNN can also be greatly simplified, since we should expect mostly simple lines and block patterns in a floor plan. Therefore, I am only coding in a handful of convolutional layers and don't really need pooling layers.

For the cost function, what I learned from Bethge Lab is as follows: the content loss should be calculated on the final layer, whereas the style loss should be computed from Gram matrices and taken as a weighted average over the last few layers. Meanwhile, the relative weights among the layers for the style loss, and between the content and style losses, could also be important hyperparameters. For now, I'm trying to construct a cost function that looks like the one used by Bethge Lab.
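To make that recipe concrete, here is a minimal NumPy sketch of the losses as I understand them from the style transfer literature. It is not the code from my repository: the function names, the normalization constants, and the default values of `alpha` and `beta` are assumptions for illustration, and feature maps are assumed to have shape (channels, height, width).

```python
import numpy as np

def gram_matrix(features):
    """Gram matrix of a feature map with shape (channels, height, width)."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)   # one row per channel
    return flat @ flat.T                # (channels, channels) correlations

def content_loss(synth_feat, content_feat):
    """Half the squared difference between two feature maps."""
    return 0.5 * np.sum((synth_feat - content_feat) ** 2)

def style_layer_loss(synth_feat, style_feat):
    """Squared Gram-matrix difference for one layer, normalized by layer size."""
    c, h, w = synth_feat.shape
    diff = gram_matrix(synth_feat) - gram_matrix(style_feat)
    return np.sum(diff ** 2) / (4.0 * c ** 2 * (h * w) ** 2)

def total_loss(synth_feats, content_feats, style_feats, layer_weights,
               alpha=1.0, beta=1e3):
    """Content loss on the final layer plus weighted style loss over all layers.

    Each *_feats argument is a list of per-layer feature maps, ordered from
    the first layer to the last. alpha and beta are assumed example values.
    """
    l_content = content_loss(synth_feats[-1], content_feats[-1])
    l_style = sum(w * style_layer_loss(s, t)
                  for w, s, t in zip(layer_weights, synth_feats, style_feats))
    return alpha * l_content + beta * l_style

# Example with random stand-in feature maps for two layers.
rng = np.random.default_rng(0)
synth = [rng.random((8, 30, 50)) for _ in range(2)]
content = [rng.random((8, 30, 50)) for _ in range(2)]
style = [rng.random((8, 30, 50)) for _ in range(2)]
loss = total_loss(synth, content, style, layer_weights=[0.5, 0.5])
```

The ratio of `alpha` to `beta` and the entries of `layer_weights` are exactly the hyperparameters mentioned above, so they are the first things I would expect to tune.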

My code can be found here (https://github.com/nancy123lin/GAN-with-a-Grid/blob/main/styleTransfer.py).

I implemented my model in Theano. To start, I simply wrote two convolutional layers (given that my picture size is only 30x50). Since I wanted to work with simple lines and shapes, there could be fewer layers, each with larger filters. That said, these hyperparameters are always subject to future adjustments in the applications.
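For readers who want to picture the forward pass, here is a rough sketch in plain NumPy rather than Theano. It is only an illustration under my own assumptions: the 5x5 filter size, the 8 filters per layer, the zero padding that keeps the 30x50 size, and the ReLU activation are all example choices, not necessarily what my actual code uses.

```python
import numpy as np

def conv2d_same(image, filters):
    """Cross-correlate image (c_in, H, W) with filters (c_out, c_in, k, k).

    Zero padding keeps the output at H x W (k is assumed to be odd).
    """
    c_out, c_in, k, _ = filters.shape
    pad = k // 2
    padded = np.pad(image, ((0, 0), (pad, pad), (pad, pad)))
    _, height, width = image.shape
    out = np.zeros((c_out, height, width))
    for i in range(k):
        for j in range(k):
            patch = padded[:, i:i + height, j:j + width]   # (c_in, H, W)
            # Mix input channels into output channels for this filter tap.
            out += np.einsum("oc,chw->ohw", filters[:, :, i, j], patch)
    return out

def forward(image, filter_bank):
    """Run the image through each conv layer (ReLU, no pooling).

    Returns the feature maps of every layer so that the style loss can
    use all of them and the content loss can use the last one.
    """
    feats = []
    x = image
    for filters in filter_bank:
        x = np.maximum(conv2d_same(x, filters), 0.0)
        feats.append(x)
    return feats

# Two layers on a 3-channel 30x50 "floor plan" (all sizes are assumptions).
rng = np.random.default_rng(0)
image = rng.random((3, 30, 50))
filters1 = rng.normal(0.0, 0.1, (8, 3, 5, 5))
filters2 = rng.normal(0.0, 0.1, (8, 8, 5, 5))
feats = forward(image, [filters1, filters2])
```

Note how the second filter bank takes 8 input channels because the first layer produces 8 feature maps. Without pooling, every layer keeps the full 30x50 resolution.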

As for the loss functions, I basically implemented the style transfer loss functions step by step. The style loss was calculated on both of the convolutional layers in my baseline model. Should I need to add more CNN layers, I would still start with 2 or 3 layers of style loss, since I don't think the patterns should be very complicated.

As for the "data", I generated "pictures" that are horizontally or vertically divided into two regions in each of the l dimensions/layers. By the way, I built the model so that the number of input channels could be other than 3. I just have to make sure that the filter dimensions match the input at each convolution along the way. For the initial synthesized picture, I could fix every pixel at 0.5, randomize each pixel, or generate it the same way the input pictures were generated. That is a story for the later calibration stage, though.
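Here is a small sketch of how such inputs could be generated with NumPy. The function names, the value range of 0 to 1, and the 50/50 choice between a horizontal and a vertical split are assumptions for illustration, not a copy of my actual generator.

```python
import numpy as np

def make_split_picture(height=30, width=50, channels=3, rng=None):
    """Random picture where each channel is split into two flat regions."""
    rng = np.random.default_rng() if rng is None else rng
    picture = np.empty((channels, height, width))
    for ch in range(channels):
        low, high = rng.random(2)            # one value per region
        if rng.random() < 0.5:
            cut = rng.integers(1, height)    # horizontal split (top/bottom)
            picture[ch, :cut, :] = low
            picture[ch, cut:, :] = high
        else:
            cut = rng.integers(1, width)     # vertical split (left/right)
            picture[ch, :, :cut] = low
            picture[ch, :, cut:] = high
    return picture

def init_synth(mode, shape, rng):
    """Three ways to initialize the synthesized picture."""
    channels, height, width = shape
    if mode == "constant":
        return np.full(shape, 0.5)           # every pixel fixed at 0.5
    if mode == "noise":
        return rng.random(shape)             # independent random pixels
    if mode == "split":
        return make_split_picture(height, width, channels, rng)
    raise ValueError(f"unknown mode: {mode}")

rng = np.random.default_rng(0)
content = make_split_picture(rng=rng)
style = make_split_picture(rng=rng)
synth = init_synth("noise", content.shape, rng)
```

Because `channels` is a parameter, the same generator works when the number of input channels is not 3, as long as the first filter bank expects the same number.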

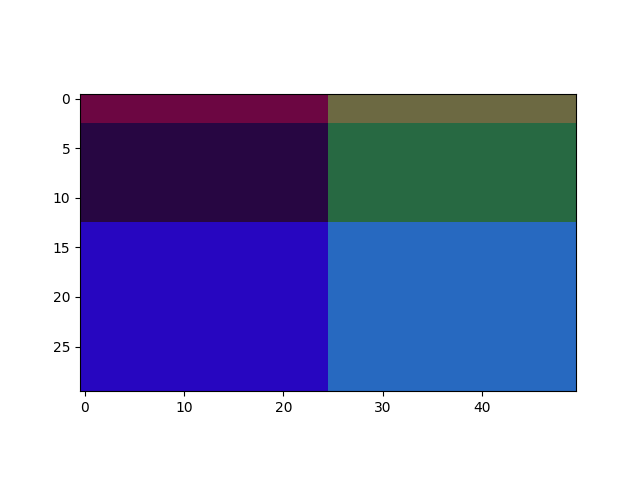

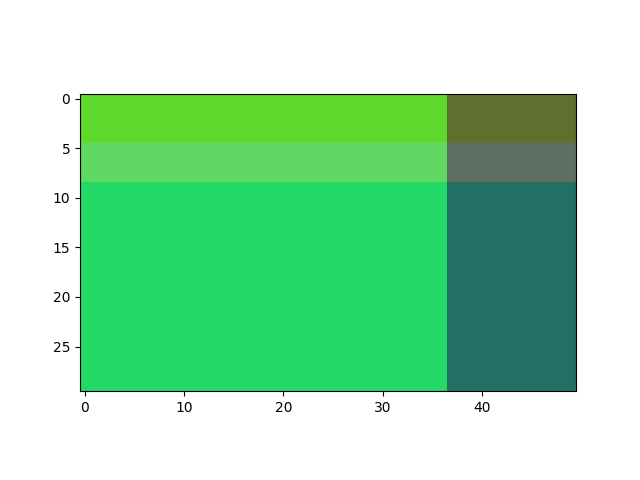

Let's see some case 0 results of my model taking a few casual steps. Here is the randomly generated content input:

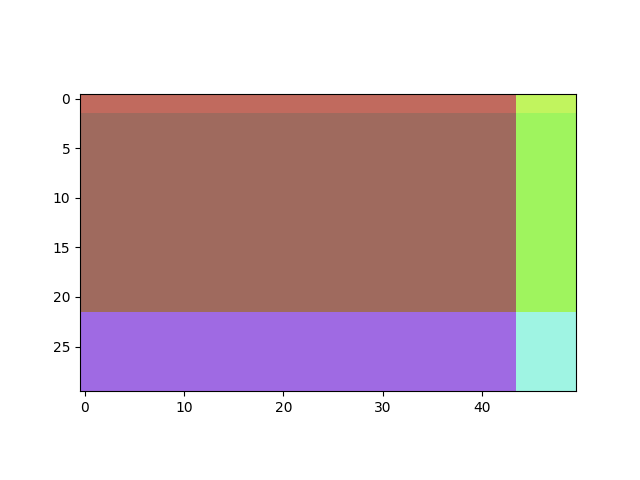

And the style input:

Last but not least, the "white noise" synthesized picture input:

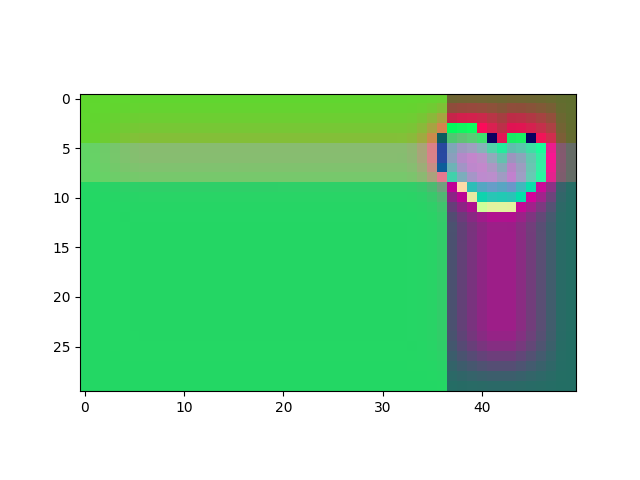

And voila, the picture with the little heart at the beginning of the post was churned out:

I only ran the training for a few thousand iterations, so a lot of what could happen hasn't played out yet. Also, there are lots of improvements to be made, which will be the next stage of this project. Nevertheless, I was able to learn a few things from the very early results.

First of all, the model does try to recreate the purple rectangles shown in the content and style inputs. Furthermore, it is aware that there should be strips about the width of the middle band in the content picture and the right-hand side of the style picture. Now, look at the heart: the outcome has slanted lines, even curves, although the inputs only had horizontal and vertical lines. By adding up the different losses, the model somehow realized that there are certain efficiencies in such geometries. That exact logic would actually serve me well in architectural design applications.

On the other hand, the limitations of the model are also obvious. When there are big blocks of colours, sometimes the model doesn't know how to change them. Also, I have to be wary of whether what makes mathematical sense for the model actually makes real-world sense when viewed from a floor plan perspective.

While I see minimization at work, the fading rate is an issue to be worked on down the road. I somehow managed to make the model learn something very fast at the beginning, but then it tended "not to move out of a local minimum (or saddle point)". I'm not sure whether those were indeed local minima, but if they were, gradient descent driven by backward propagation is not the best algorithm for getting out of them. It could also be that the filter weights have gone to extreme values and become practically "inactive". There are a number of things we could try down the road.

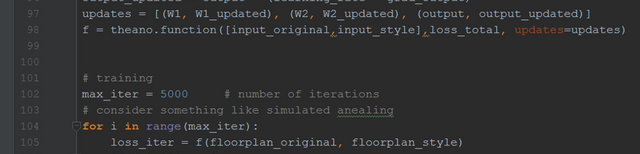

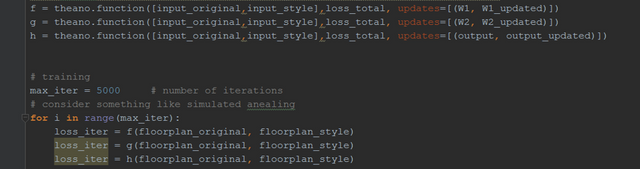

One thing that came to mind as I was doing some case 0 testing, though, is how to update the parameters. At a certain point, I figured that updating the filter weights and the synthesized picture in consecutive stages could help avoid extreme values. While that turned out not to be the problem I was looking for, I decided to keep the stage-wise parameter updates anyway. That means my model is evaluated multiple times in "one" iteration, though. Please feel free to comment if you have experience training models this way.
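To show what I mean by stage-wise updates, here is a toy sketch. It uses a made-up loss (the squared error of an element-wise product against a target) in place of my real style transfer loss, with `w` standing in for the filter weights and `x` for the synthesized picture. The names, learning rate, and iteration count are all assumptions for illustration.

```python
import numpy as np

def loss_and_grads(w, x, target):
    """Toy loss sum((w * x - target)^2) and its gradients w.r.t. w and x."""
    residual = w * x - target
    loss = np.sum(residual ** 2)
    return loss, 2.0 * residual * x, 2.0 * residual * w

def train_stagewise(w, x, target, lr=0.05, n_iter=200):
    """Alternate between updating w and x, re-evaluating in between."""
    w, x = w.copy(), x.copy()
    n_evals = 0
    for _ in range(n_iter):
        # Stage 1: update the "filter weights" with the "picture" held fixed.
        _, grad_w, _ = loss_and_grads(w, x, target)
        n_evals += 1
        w -= lr * grad_w
        # Stage 2: re-evaluate with the new weights, then update the "picture".
        _, _, grad_x = loss_and_grads(w, x, target)
        n_evals += 1
        x -= lr * grad_x
    final_loss, _, _ = loss_and_grads(w, x, target)
    return w, x, final_loss, n_evals

w0 = np.ones(4)
x0 = np.ones(4)
target = np.array([0.5, 1.0, 1.5, 2.0])
initial_loss, _, _ = loss_and_grads(w0, x0, target)
w, x, final_loss, n_evals = train_stagewise(w0, x0, target)
```

The counter `n_evals` ends up at twice the number of iterations, which is the extra cost I mentioned: each stage needs its own evaluation because the other set of parameters has just moved.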

The next steps for this model are fine-tuning the CNN and adapting it to work for floor plans. Meanwhile, I will spend some time developing other models that I have ideas for. If you are interested in collaboration, feel free to reach out to me. You can also sponsor me to prioritize this project or work on something similar (https://maicro879342585.wordpress.com/2021/07/29/sponsor-maicro/).