A growing problem I see in the decentralization movement and the cryptocurrency space is a disregard for ethics and a lack of careful consideration for the defense of human rights when designing technologies. So I'm going to attempt to join a discussion started by Vlad Zamfir, and perhaps taken up by others such as Dan Larimer, on the necessity of prioritizing ethics and the protection of rights. We may not all agree on which rights we value, but in my opinion we need to come up with ethical standards and best practices for the design of these platforms. Decentralization contributes to the greater good when it defends human rights and is used in ways that produce measurably good consequences for the world; when these technologies fail to do so, they become dangerous, destructive, and illegitimate.

Consequentialism is only one approach to ethics

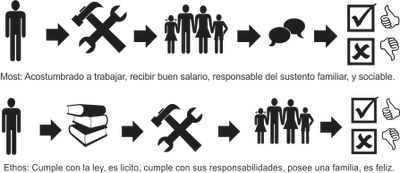

Generally speaking, there are two broad approaches to ethics: 1) consequentialist and 2) deontologist. Libertarianism likewise comes in two varieties: consequentialist libertarians, who are libertarian only when libertarianism produces better consequences than the alternative policies, and deontologist libertarians, who believe in principles such as the non-aggression principle. Consequentialists arrive at libertarian policies by carefully measuring and comparing outcomes, while deontologist libertarians are libertarian for libertarianism's sake; that is to say, they choose to follow strict rules such as the non-aggression principle or, if Christian, the golden rule: "do unto others as you would have them do unto you."

I have blogged about this topic in the past in "Natural Rights Libertarianism vs. Consequentialist Libertarianism." It is not important to me which approach to ethics people decide to take, as long as some approach is taken and ethics are deeply considered during every aspect of the design process. All the transparency in the world about developers doesn't trump an ethically designed formal specification, because the developers aren't going to be perfect, but the design can factor human imperfection into its formal specification so that it always trends toward a more ethical status quo. In essence, the mechanism design, the formal specification, and the UX all have ethical dimensions which have to be carefully considered, not just in terms of where human beings are now ethically, but of where our species is going and what capabilities we may have in 10 or 15 years.

Ethically aware blockchains and crypto-platforms are a must

There has been, and still is, a debate about immutability versus hard forks. The problem in that situation is that the blockchain wasn't designed to be ethically aware. It's simply not human to have something which cannot be stopped, nor is it necessarily wise. It's important to always have the ability to correct a mistake, and this applies not just to ethics but to how life works in general. When smart contracts have no fail-safe, when they are not allowed to fail, manual consensus mechanisms take over and human beings have to hard fork. Had Ethereum been designed from scratch so that it would never have to hard fork, this could at least partly have been avoided.
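To make "a contract which is allowed to fail" concrete, here is a minimal sketch in Python (not Solidity, and not how any real Ethereum contract is written) of an escrow whose specification includes an explicit failure path: if the deadline passes without delivery, or a designated arbiter halts it, the funds return to the payer instead of being stuck. The class name `EscrowWithFailSafe`, the arbiter role, and the deadline rule are hypothetical choices made for illustration.

```python
from enum import Enum


class State(Enum):
    OPEN = "open"
    RELEASED = "released"   # normal success path
    REFUNDED = "refunded"   # explicit, designed-in failure path


class EscrowWithFailSafe:
    """Hypothetical escrow that is *allowed* to fail; failing returns the funds."""

    def __init__(self, payer: str, payee: str, arbiter: str, amount: int, deadline: int):
        self.payer, self.payee, self.arbiter = payer, payee, arbiter
        self.amount = amount
        self.deadline = deadline  # e.g. a block height
        self.state = State.OPEN
        self.balances = {payer: 0, payee: 0}

    def _require_open(self) -> None:
        if self.state is not State.OPEN:
            raise RuntimeError(f"escrow already {self.state.value}")

    def confirm_delivery(self, caller: str, now: int) -> None:
        # Only the payer can release the funds, and only before the deadline.
        self._require_open()
        if caller != self.payer or now > self.deadline:
            raise PermissionError("cannot release")
        self.balances[self.payee] += self.amount
        self.state = State.RELEASED

    def fail_safe(self, caller: str, now: int) -> None:
        # Anyone may trigger a refund after the deadline; the arbiter may halt at any time.
        self._require_open()
        if now <= self.deadline and caller != self.arbiter:
            raise PermissionError("too early to refund")
        self.balances[self.payer] += self.amount
        self.state = State.REFUNDED


e = EscrowWithFailSafe("alice", "bob", "carol", amount=100, deadline=10)
e.fail_safe("anyone", now=11)
print(e.state, e.balances)
```

The design choice being illustrated is that the failure case lives in the specification itself, so recovering from a stuck contract is an ordinary state transition rather than an out-of-band intervention.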

In general, the technologies we create have to be ethically aware. And it's not good enough simply to impose our own ethics on them, as if at our current state of understanding we had somehow reached the zenith. It is better instead to create systems which can evolve along with our understanding of ethics and even contribute to improving our ability to understand ethics, and for this reason, among many others, it is important to have artificial intelligence capabilities built in by design.

Why do we need to augment our intelligence?

In my posts about the exocortex and about AI, many people might not understand where exactly I'm headed. Many might think it's science fiction, or perhaps they just don't see the value proposition. The value of an exocortex, of agent-based AI, of bots, and of various forms of decentralized artificial intelligence lies in the fact that they are the only means we have of managing complexity. By augmenting our intelligence we can also augment our ability to be ethical; it is the tool which enhances the tool maker's ability to decide how to ethically design future iterations of the tool. In essence, what we gain from exocortex/AI functionality is a moral calculator which understands whatever we designate as our system of morality and which can help each one of us make progressively fewer bad decisions over time, according to our own criteria.
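A very small sketch of the "moral calculator" idea follows. It assumes (an assumption of this example, not something the post specifies) that a user's designated system of morality can be approximated as weights over criteria the user chooses, and that some model supplies per-criterion effect estimates for each candidate action. The criteria names, weights, and effect numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    # Hypothetical per-criterion effect estimates in [-1, 1], e.g. produced by a model.
    effects: dict[str, float]


def rank_actions(actions: list[Action], weights: dict[str, float]) -> list[tuple[str, float]]:
    """Rank actions by a weighted sum over the criteria the *user* designated."""
    scored = []
    for a in actions:
        score = sum(weights.get(c, 0.0) * v for c, v in a.effects.items())
        scored.append((a.name, round(score, 3)))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# The user, not the tool maker, chooses what matters and how much.
my_weights = {"privacy": 0.5, "honesty": 0.3, "cost": 0.2}
options = [
    Action("share_data", {"privacy": -0.8, "honesty": 0.2, "cost": 0.5}),
    Action("keep_private", {"privacy": 0.9, "honesty": 0.2, "cost": -0.1}),
]
print(rank_actions(options, my_weights))  # → [('keep_private', 0.49), ('share_data', -0.24)]
```

A weighted sum is a deliberately simple, consequentialist-style scoring; a strict rule ("never do X") would need a separate check rather than a weight.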

We need a blockchain which is aware of human rights and which helps us defend them

In my opinion, it is not enough to produce a blockchain which automates all sorts of business transactions but leaves human beings to fend for themselves. It is important to create a technology, whether or not it involves a blockchain data structure, which can be aware of the moral, ethical, and legal dimensions of decision making and of design. If we think about this, we find that it is very possible to encode deontology in the form of deontic logic, and that all laws can be imported in such a way that apps, bots, and AI are aware of the different laws in different jurisdictions at a level beyond what any human currently understands. AI can also be aware of ethical dimensions, priorities, and moral views, and make precise recommendations or take precise actions on behalf of the network and/or the community of people it represents.
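Standard deontic logic works with three modalities: obligation O(a), permission P(a), and prohibition F(a), where F(a) is equivalent to not-P(a) and O(a) implies P(a). The sketch below encodes norms per jurisdiction so that an agent can ask what status an action has wherever it operates. The jurisdiction names, actions, and rules are invented placeholders, not real law, and the "unregulated means permitted" default is an assumption of this example.

```python
from enum import Enum


class Modality(Enum):
    OBLIGATORY = "O"
    PERMITTED = "P"
    FORBIDDEN = "F"


# Hypothetical norm base: jurisdiction -> action -> modality (placeholders, not real law).
NORMS: dict[str, dict[str, Modality]] = {
    "jurisdiction_a": {"collect_personal_data": Modality.FORBIDDEN,
                       "notify_breach": Modality.OBLIGATORY},
    "jurisdiction_b": {"collect_personal_data": Modality.PERMITTED},
}


def status(action: str, jurisdiction: str) -> Modality:
    # Assumed default: whatever a jurisdiction does not regulate is permitted.
    return NORMS.get(jurisdiction, {}).get(action, Modality.PERMITTED)


def may_do(action: str, jurisdiction: str) -> bool:
    # F(a) means not P(a); O(a) implies P(a), so only FORBIDDEN blocks an action.
    return status(action, jurisdiction) is not Modality.FORBIDDEN


def must_do(jurisdiction: str) -> list[str]:
    return [a for a, m in NORMS.get(jurisdiction, {}).items() if m is Modality.OBLIGATORY]


def allowed_everywhere(action: str, jurisdictions: list[str]) -> bool:
    # An agent acting across borders checks every jurisdiction it touches.
    return all(may_do(action, j) for j in jurisdictions)


print(allowed_everywhere("collect_personal_data", ["jurisdiction_a", "jurisdiction_b"]))
print(must_do("jurisdiction_a"))
```

A real system would also need to handle conflicting norms and exceptions, which this flat lookup table deliberately ignores.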

Human rights can be represented in a knowledge base in a language that AI can understand and use. Laws are kinds of rules which are enforced by society through a specific process, and rights are enforced by society through a specific process as well. It is possible to create agents (bots/AI) which act on our behalf, but which do so in a way that is more ethical than we can currently imagine, while also staying within the bounds of the law as it is currently written. An agent AI can have the knowledge of top lawyers, theologians, and game theorists, and communicate back and forth with you to negotiate an action to take.
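One simple way to make rights machine-usable is a knowledge base of facts plus if-then rules, with forward chaining deriving new facts until nothing changes (a fixpoint). This is a toy sketch: the predicates (`person`, `has_right`, `violates`, ...) and the two rules are hypothetical, and a real system would more likely use a dedicated logic language or reasoner.

```python
Fact = tuple[str, ...]  # e.g. ("person", "alice") or ("has_right", "alice", "privacy")


def rule_everyone_has_privacy(kb: set[Fact]) -> set[Fact]:
    # Hypothetical rule: every person holds a right to privacy.
    return {("has_right", f[1], "privacy") for f in kb if f[0] == "person"}


def rule_detect_violation(kb: set[Fact]) -> set[Fact]:
    # Hypothetical rule: reading someone's private messages violates their privacy right.
    out: set[Fact] = set()
    for f in kb:
        if f[0] == "action" and f[2] == "read_private_messages":
            actor, target = f[1], f[3]
            if ("has_right", target, "privacy") in kb:
                out.add(("violates", actor, target, "privacy"))
    return out


def forward_chain(kb: set[Fact], rules) -> set[Fact]:
    """Apply every rule repeatedly until no new facts appear."""
    kb = set(kb)
    while True:
        new = set().union(*(rule(kb) for rule in rules)) - kb
        if not new:
            return kb
        kb |= new


facts: set[Fact] = {
    ("person", "alice"),
    ("person", "bob"),
    ("agent_of", "bot1", "alice"),
    ("action", "bot1", "read_private_messages", "bob"),
}
derived = forward_chain(facts, [rule_everyone_has_privacy, rule_detect_violation])
print(("violates", "bot1", "bob", "privacy") in derived)  # → True
```

An agent acting for Alice could run this check before executing a planned action and refuse, or ask her, whenever a `violates` fact names it.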

Conclusion

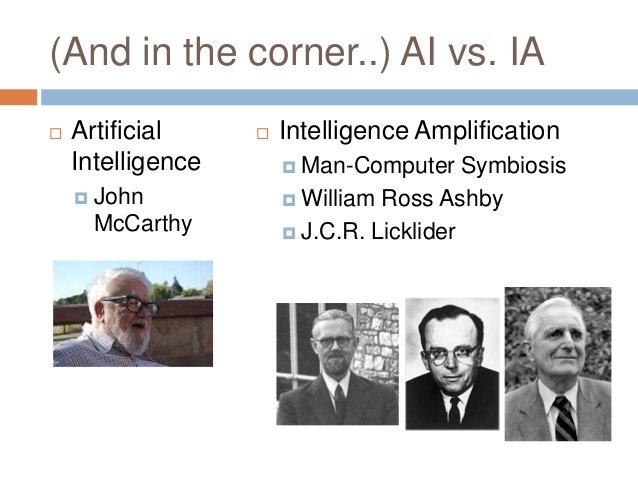

Decentralization is only valuable when it produces enhanced security, liberty, and happiness. If it costs more than it benefits, it's no longer an ideal solution. It is up to us to do what we can to make sure these technologies produce benefits which always vastly outweigh the costs. To do this, we have to put more resources, research, and effort into philosophy, ethics, rights protection, and security. It's not enough to create a decentralized platform and then walk away and let the chips fall where they may; it is important to consider how the community can govern the platform, given the very limited state humanity is currently in culturally, ethically, and politically, and to seek ways to transcend these limits using the platform itself. In a sense, a feedback loop is needed between the human and the AI, so that we get what is known as IA (intelligence amplification) in the areas most critical to protecting human rights.

References

- https://steemit.com/technology/@dana-edwards/thinking-outside-the-brain-why-we-need-to-build-a-decentralized-exocortex-part-2

- https://steemit.com/ai/@dana-edwards/personal-agents-what-are-expert-systems-do-expert-systems-benefit-from-decentralization

- https://steemit.com/crypto-news/@dana-edwards/attention-based-stigmergic-distributed-collaborative-organizations

- https://steemit.com/steemit/@dana-edwards/personal-preference-bot-nets-and-the-quantification-of-intention

- https://en.wikipedia.org/wiki/Intelligence_amplification

Image 1: https://www.flickr.com/photos/psd/1806225034

Image 2: https://commons.wikimedia.org/wiki/User:Leonardoagelviz

Great post again Dana

I had a look at your other piece too; like you, I'm a consequentialist. I think the deontological approach breaks apart as soon as it has to reconcile clashing principles within the same framework. At that point what it's really doing is quantifying and weighing up the pros and cons of the outcomes; basically, moral views either converge towards consequentialism or become somewhat incoherent. For example, if you have to program an AI-driven car to choose between hitting a young child or an old man during an imminent accident, a deontological approach would either have to defer to an outcome-based assessment (consequentialism) or be unable to resolve the problem.

Artificial intelligence will most certainly help resolve moral dilemmas. As soon as we recognize that the well-being of sentient creatures, to the extent they can experience happiness and suffering, is at the core of the moral question, AI will definitely help optimize our path to getting there, as we live in a deterministic universe.

I also agree that the decentralization of AI is one of the most important endeavors we should pursue. Considering that the rise of AI is virtually inevitable, the most effective way to prevent this superpower from falling into the hands of malicious actors is probably to decentralize it and make it available to everyone. Blockchain can certainly help in that respect.

As to the larger question of whether the use of decentralized systems is itself beneficial, like you I'm a little skeptical. I think an implied premise here is that we generally consider a more even distribution of wealth and power to be morally desirable, due to the economic effect of diminishing marginal utility (well-being is greater if 10,000 people each have $100k than if a single person has $1B and the other 9,999 have $0), which I agree with. However, I don't know if decentralized technology automatically gets us there. It seems that it can merely shift the centralization of wealth and power from a physical necessity to one of consensus. One can no longer control power by playing the middleman, since we can cut him out completely, but the resulting distribution of wealth and power can be just as concentrated as if there were a middleman providing the platform.
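The arithmetic in the parenthetical can be checked with any concave utility function. The sketch below assumes log utility u(w) = ln(1 + w), which is our choice for illustration, not the commenter's. Both distributions total exactly $1B, yet the even one yields far more total utility.

```python
import math


def total_utility(wealths, u=math.log1p):
    """Sum of per-person utility; log1p keeps u(0) = 0 for people with nothing."""
    return sum(u(w) for w in wealths)


even = [100_000] * 10_000                     # 10,000 people with $100k each
concentrated = [1_000_000_000] + [0] * 9_999  # one person with $1B, 9,999 with $0

assert sum(even) == sum(concentrated) == 1_000_000_000
print(round(total_utility(even)), round(total_utility(concentrated)))  # → 115129 21
```

Any strictly concave u gives the same ordering for a fixed total (Jensen's inequality); the logarithm is just a convenient, commonly used choice.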

Blockchain technology is certainly revolutionary but it itself is not going to supplant our need to reflect on our own incentives and moral behavior.

Once again thanks for sharing your insightful views.

My view on wealth: consider AI the generator of future wealth. Access to that AI becomes critical for all.

My view on ethics amplified by AI

If we go with a consequentialist approach, then those of us who think this way realize our decisions are shots in the dark. We cannot see what we are doing. We cannot know the ultimate meaning of the words we say. We cannot predict the effect of our behaviors on others. If I were to think about how my behavior could affect every other person, personality, and worldview on this planet, I would quickly lose my mind.

And to be honest, a lot of people have lost their minds. John Nash is a good example of a consequentialist thinker who lost his mind because he tried to process every possible intention behind everyone. The issue I recognize here is that as humans we are involuntarily ignorant. By that I mean we have limited rationality (bounded rationality), we have a very limited ability to process information, we aren't good at dealing with complexity, and our attention is very limited, as we spend only short periods of our lives fully conscious and aware.

So what does this mean? It means we need help, because the world is only becoming more complex every day: new people are born, new technologies change how we interact, new scientific results change our understanding, and 100% of us are lagging behind, because no one can keep up with the rate of knowledge generation, with the complexity, or with the biggest issue of all, attention scarcity, which no one can bypass, whether they have an IQ of 500 or 50.

Agent-based AI (intelligent agents) is how we can deal with complexity. On an individual level, it will be these agents which process the current state of the world for each of us. Just as giving glasses to a partially blind person lets them see better and make better decisions, having an exocortex in the form of intelligent agents which can think on your behalf would have a similar effect. To a certain extent we have something like this forming with Google, but the problem, of course, is that Google isn't our mind, doesn't belong to us, and isn't beyond being censored.

So what is the main issue here? Governments exist to protect human rights but governments violate human rights during war. As a result our human rights are constantly diminished. Human beings create technology and one of the best reasons to create a technology is to protect rights. In this case it would be a defensive technology while offensive technologies which are definitely going to be created by governments are going to violate our rights. The feedback loop we gain from symbiosis between human and AI is critical in designing technologies because if you want something to be ethical, and if you want something to be effective, while also being efficient, secure, and even profitable, then you need to analyze a lot of data, compute, simulate, at a level beyond the reasoning capabilities of any particular human or group of humans.

If, for example, you wanted to create an autonomous network which you could formally guarantee cannot violate human rights without being shut down, or which is guaranteed to follow the community's social norms, or which is guaranteed not to break its contract, then you would need AI just to build such a multi-agent autonomous network. So, in simpler terms, we need to decentralize AI before we can learn to create safer, more ethical future iterations of AI, and in my opinion we need to focus on AI which protects, or at least avoids violating, human rights.
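As a rough illustration of "cannot violate X without being shut down", here is a runtime-monitor pattern: every action an agent proposes passes through a guard that checks invariants, and the first violation halts the agent. The invariant names and the dictionary-based action format are hypothetical. Note that this is runtime enforcement, which is weaker than the formal guarantee described above; a formal guarantee would mean proving the property about the specification before anything runs.

```python
from typing import Callable

Action = dict  # hypothetical action record, e.g. {"type": "transfer", "amount": 5}
Invariant = Callable[[Action], bool]


class Halted(Exception):
    pass


class GuardedAgent:
    def __init__(self, invariants: dict[str, Invariant]):
        self.invariants = invariants
        self.halted = False
        self.log: list[Action] = []

    def execute(self, action: Action) -> None:
        if self.halted:
            raise Halted("agent is shut down")
        broken = [name for name, ok in self.invariants.items() if not ok(action)]
        if broken:
            self.halted = True  # fail closed: stop everything on the first violation
            raise Halted(f"violated {broken}; shutting down")
        self.log.append(action)  # stand-in for actually performing the action


agent = GuardedAgent({
    "no_disclosure_without_consent":
        lambda a: not (a["type"] == "disclose" and not a.get("consent")),
    "honours_contract":
        lambda a: a.get("amount", 0) <= a.get("contract_limit", float("inf")),
})

agent.execute({"type": "transfer", "amount": 5, "contract_limit": 10})  # allowed, logged
try:
    agent.execute({"type": "disclose", "consent": False})
except Halted as e:
    print(e)  # violated ['no_disclosure_without_consent']; shutting down
```

Failing closed (halting rather than skipping the one bad action) mirrors the "shut down on violation" wording; a gentler design could reject only the offending action.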

By AI we could be talking about intelligent agents, bots, autonomous agents, smart contracts which learn, etc.

I wasn't very specific, but what I meant in terms of what AI can help us design could be as simple as helping us find the right mechanism design for a future blockchain community. If we get the mechanism design wrong, the community fails, as we see with several blockchain communities today; if we get it right, it can be a better community than anything we ever dreamed of.

Someone commented that my ideas are based on faith, but that isn't really the case. Once you have the right mechanism design in place, your humans are going to act human, and the incentives will simply be set up in such a way that it's in their best interest to adopt pro-social behaviors. In that sense you can protect human rights using mechanism design. AI would simply aid in the creation of these mechanism designs by helping humans generate them. AI would also help humans generate formal specifications which could, for example, guarantee privacy for people who want it, or guarantee that data always stays under the control of its owner, as designated by whoever controls 100% of the private key.
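A classic textbook example of a mechanism that makes honest behavior each participant's best strategy is the sealed-bid second-price (Vickrey) auction: the highest bidder wins but pays the second-highest bid, so bidding one's true value is a dominant strategy. It is offered only to illustrate the concept of incentive-aligned mechanism design; nothing in the comment says this is the mechanism intended.

```python
def vickrey_auction(bids: dict[str, float]) -> tuple[str, float]:
    """Sealed-bid second-price auction: highest bidder wins, pays the second-highest bid."""
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    ranked = sorted(bids.items(), key=lambda kv: kv[1], reverse=True)
    winner = ranked[0][0]
    price = ranked[1][1]
    return winner, price


def utility(bidder: str, true_value: float, bids: dict[str, float]) -> float:
    winner, price = vickrey_auction(bids)
    return true_value - price if winner == bidder else 0.0


# Alice values the item at 10; the other bidders bid 7 and 4.
others = {"bob": 7.0, "carol": 4.0}
truthful = utility("alice", 10.0, {**others, "alice": 10.0})
shaded = utility("alice", 10.0, {**others, "alice": 6.0})     # underbid: loses the item
inflated = utility("alice", 10.0, {**others, "alice": 50.0})  # overbid: same price as truthful
print(truthful, shaded, inflated)  # → 3.0 0.0 3.0
```

Overbidding never helps and can hurt: if Bob had bid 12, Alice's bid of 50 would win at a price of 12 and leave her with -2, whereas a truthful bid of 10 would simply have lost.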

Reference

Yes, I agree, much of the computer science here is over my head, but I see that AI can certainly aid in helping us discern a morally ideal path.

I would slightly contest a rights-based approach, as I don't think it's entirely compatible with consequentialism. I would add the qualifier that rights ought to be championed to the extent that they lead to overall flourishing and well-being. It's a pedantic point, I suppose, but I can be like that lol

Tau does seem interesting in its approach to building a decentralized AI network. I lack the expertise to judge how feasible it is to write a programming language that is consistent and complete by sacrificing some expressiveness, and where that could ultimately lead once achieved. Are you on steemit.chat? I'm there with the same name; I just have some Tau questions and don't want to bother Ohad all the time, as he's the only dev right now.

My own opinion is that it's not easy to decide who and what "all" is. With regard to my view on rights, I promote human rights because it's how I would like to be treated and how the people I care about want to be treated. So when I measure consequences, I first look at the consequences to myself as an individual, then at the consequences to individuals I know, who care about me and/or whom I care about, and finally at the consequences to complete strangers. So the rational reason to support human rights is that you want to have them, and how many or how few rights you have is a good measure of the success or failure of a government. I believe in measuring success and learning from failure. How do you measure well-being, or define it in law?

I am not on Steemit chat but I can say if you want to track Tauchain there are only a few people who know enough to talk about it. I know quite a bit because I'm in daily contact with Ohad and know what is planned. A whitepaper, a presentation, and a specification for the Tau Meta Language are in development.

The computer science is "theoretical computer science" so a lot of it was unfamiliar to me as well. Certain aspects of the Tau design I still do not completely grasp such as the transfer theorem.

The research Ohad has conducted is breathtaking and if you would like to follow his research path you can check: https://github.com/naturalog/tauchain/blob/f7c846ecbf6de969283bf0d5749ef7274b1a8c6d/design.mso

That record ends on Dec 11, 2016, but research is being conducted continuously. Ohad and I share research papers and discuss results nearly every day. Some of the topics I understand, some I barely understand, and some I cannot grasp at all. But from what I do understand, which is the main part of how the Tau language will work, I do know the theory behind it looks good.

The new Tau design is not restricted to MSOL (monadic second order logic), as you can switch the logic.

That is an interesting concept, that of combining neutral technology with knowledge/intelligence about ethics. There are all kinds of interesting ramifications in considering whether or not that is even possible. I think to the extent that ethics are adequately represented by rules, technology could be made to be "ethical" in that sense. To the extent that true ethics is dependent on the spiritual growth of the soul... that might be more difficult (impossible?) to pull off with AI.

I think you might enjoy this book I recently read. It's called Return to Order by John Horvat. I found it to be thought provoking. It considers the problems with today's society and some possible guiding principles in resolving the issues.

It is interesting how two posts published an hour apart can be linked: one philosophical, the other factual.

https://steemit.com/newsleaks/@everittdmickey/how-algorithms-and-authoritarianism-created-a-corporate-nightmare-at-united

https://steemit.com/crypto-news/@dana-edwards/decentralization-is-only-a-means-to-an-end-how-can-we-protect-human-rights-through-technology-moral-amplifiers

Similar situations occur every day:

- A truck driver who blocked a highway for hours

- A lone wolf who kills dozens of people

- A whale with erratic behavior who bungles an organization!

It's a thorough post that is very useful for debating ideas. I'm an adjudicator for debate championships, and it's very useful for adding to my knowledge. Thanks for your post.

Interesting, even if it sounds a bit like "faith" to me ;)

Faith in what? The community? I do believe there are millions of people around the world who care about human rights and who are looking for technologies to help protect human rights. I mean I could be wrong but I would think most human beings like having their rights protected and don't care what tools are protecting it.

Faith in "ethics" and in the need for ethics. There are so many different ethical ideas, as many as there are human beings.

I understand what you are talking about. But everyone, except a minority of thinkers, cares about their own individual needs and rights first of all. And that is what technology is developed for: to fulfill individual needs and make money from it.

Of course, people also become a community when it is strongly needed, and communities can grow thanks to tech media, too. But ethical issues cannot be fixed "ex ante", because they are determined by an individual or communitarian value system.

(I hope my english allows me to explain what I mean ^-^)

The reason governments exist is to protect rights. Not all governments are good at protecting rights and collectively governments conspire to violate our rights in the name of war.

The ability to create technologies which protect rights electronically is great. Cryptography can in many ways protect rights. AI can in many ways act ethically even if the simple-minded humans behind the AI aren't wise enough to figure out why a certain decision is right or wrong.

When you can amplify the ability of people trying to do good to do good then you'll have more effective do-gooders. Of course there will always be people who don't care about the future, themselves, or you, but those people you speak of aren't going to outnumber the people who do. Many people want to become better people but don't know how, or are limited by their brains.

I include myself when I say ignorance is often involuntary. Ethics do not require sacrificing self-interest, but they do require considering long-term interests, the interests of others, etc. Proper execution of ethics is not easy, and many humans simply aren't capable of dealing with the complicated world we have now. We require help from tools, and in the same way we use tools to land on the moon or dive under the ocean, we can use tools to enhance our decision-making capacity one individual at a time.

Our value systems need to evolve, but we can only evolve them when we have a deeper understanding of the consequences of our choices. Most of us make choices with a very limited ability to grasp the consequences, and even the most thoughtful people can only calculate so many possibilities in a short time frame. What if you could encode your ethics into your "digital agent", which could act as you would act, only with the ability to actually process the "big data" which you cannot process? Again, I only speak for myself, but if the "big data" gives me new insights into the nature of society, reality, and myself, I'm going to adopt new behavior based on the new information I learn and, of course, change my values.

Again, I see faith and dogmatic beliefs in your words.

I can just repeat that no one can see what's better for another's future.

And I would add that ethics comes from behaviour, not behaviour from ethics. You can't plan it, not even for yourself!

But I mostly agree with what you say about the need to raise people's awareness of the changes that new technologies bring to their rights and the protection of those rights. Raising awareness is all one can try to do, IMHO.

I'm not sure what you think I have faith in. Unless you mean science and technology? That really isn't faith, though, because science and technology are the only things that separate us from other species. So I guess I expect progress to continue, and you're right that there is a possibility we could abandon science and technology, but if we don't? Then we can leverage science and technology.

Why not use technology to help humanity adapt to the changes that come from technology? And for the rational humans the game theory can be worked out, and irrational humans may rely on faith as you say, but in either case we want humans to make better quality decisions.

I'm not sure I can translate the meaning of what you say above. To me, ethics is based on logic like anything else when it involves rules; when it doesn't involve rules, it's based on emotions. But I would not agree that people cannot plan ahead. We can and do plan, but we aren't very good at it because our brains are limited. Why adhere to these artificial limits which keep us from being as ethical as we can be? And by ethical I really leave it up to individuals to define their own ethics, but any formal ethics besides emotivism can be defined in language, with logic behind it.

A list of right and wrong is deontology, and its logic is called deontic logic, while measuring the consequences of every decision is called consequentialism and deals more with statistics, risk assessment, probability, etc. An individual can decide whether to fly in a plane based on the risk statistics, on faith in a higher power, on how they feel about heights, on a dream they had, or on a book which told them, but the point is that consequentialism always comes down to measuring probabilities, and the probable outcome of flying in a plane is landing safely.

Deontology could have a rule that "the Divine law says no one may fly in a plane". This would mean it is always wrong to fly in a plane, no matter what the risks or probabilities are. In theory a deontologist can be an atheist but choose to follow simple rules as a way to manage the complexity of decision making. It's in essence a shortcut, and to some degree most of us have to take such shortcuts at times if we want to avoid going crazy.
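The contrast between the two approaches in the flying example can be written down directly. In this sketch the consequentialist procedure flies when the estimated probability of harm is below a personal risk tolerance, while the deontological procedure consults a rule set and ignores the numbers entirely. The probability and tolerance values are hypothetical placeholders, not real aviation statistics.

```python
def consequentialist_decision(p_harm: float, tolerance: float) -> bool:
    # Decide purely on the estimated probability of a bad outcome.
    return p_harm < tolerance


def deontological_decision(action: str, forbidden: set[str]) -> bool:
    # Decide purely on rules; probabilities play no role at all.
    return action not in forbidden


P_HARM = 1e-6     # hypothetical estimate, not a real statistic
TOLERANCE = 1e-4  # hypothetical personal risk tolerance

print(consequentialist_decision(P_HARM, TOLERANCE))              # → True
print(deontological_decision("fly_in_plane", {"fly_in_plane"}))  # → False
```

The deontological function is also much cheaper to evaluate, which reflects the point above about rules serving as a shortcut for managing complexity.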

Can we know every intention of every person, know every risk statistic of every activity, of every decision, can we know all of it in time to make decisions? No we can't. But the difference between ourselves and an AI is that an AI can know all of this and make decisions. AI or "digital agents" can calculate the precise risk factor for every decision it makes for us.

thx for your answer. best wishes for your faith, even if you don't see it ;)

I can't argue, it would be too long.

(you are young, aren't you? ;) )

It's not really about faith or about me. Anyone who wants to work toward a better world should be given the tools to help them in that effort. It's really about what people want to work on today. You are implying people who hope for a better world and are willing to work toward it are doing it out of some sort of faith? But people who work for self interest and greed reach the same conclusion that it's better for themselves to have a better world in the future with less unnecessary conflict. You can reach a conclusion that decentralization is in your best interest for your future just like you can reach a conclusion that having human rights is also in your best interest because you're not looking forward to your human rights being violated in your future. So faith in what exactly? The hope for a better future is nearly universal because very few people want to work hard to create their own hell. If the tools get created then you can decide for yourself if it improves your life but if no one creates the tools because no one believes it's possible to engineer a better life then that is one less option for you.

Since you don't explain what you mean by faith, it must not be very relevant.

I believe that technology should be used in any way possible to help us understand whatever ethical system we believe to be following, and, as you mentioned, even to help guide us in that journey. But this is not exactly equivalent with "ethically aware" technology. I see many issues with trying to embed ethics into all our technical tools, but the most obvious to me is that ultimately, it is up to the person using the tool and not the creator.

Let me illustrate with an example. Let's say that I create a content publishing platform for the web, and I share the code. This tool is ethically agnostic: it does not know what kind of content will be published. But let's say that we care about ethics, so we embed a fact-checking tool in our publishing platform to help authors publish more accurate information. Well, if anyone wants to use our platform to publish factual inaccuracies, all they have to do is remove the fact-checking tool from the source code. We could decide not to share the source code, but this has all sorts of ethical issues of its own; that is why the free software movement exists.

The fact that it is hard for us to embed an ethical tool within an agnostic tool does not diminish the value of either, but it does suggest that it is probably a better use of our resources to simply publish the useful, agnostic tools, and then create tools to fight improper uses of them once the ill effects materialize. Otherwise we might find ourselves spinning our wheels forever, trying to safeguard all our tools against improper uses that might never exist. The first option seems more consequentialist to me.

The source code isn't where it's important to be ethical. It's the formal specification, which can ultimately become source code and then an executable, but it's not the source code. The formal specification is a description of the intended behavior of the publishing platform. In that description of behavior you would decide whether the platform can be moderated or should be left unmoderatable. Making it a platform which can be moderated allows the ethics of the community to determine which content is visible on the platform, which content becomes popular, which content is rewarded, etc. In a completely agnostic platform you would have all kinds of content with no collaborative filtering mechanism, and as a result fewer people would find the sort of information they like and more people would be confronted with information they don't want to be exposed to. So by design you want to give users as much control as possible over these decisions, over what they would like to see and what they want on the platform, and you could even detail in the formal specification a right of the user to filter content according to their preferences, whether collectively, individually, or in any human-machine combination.
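Here is a sketch of what a user's right to filter content "collectively, individually, or in any human-machine combination" might look like as a rule: a post is shown only if it passes the user's own mute lists, and the community score counts only as much as the user decides it should. The field names, the default weight, and the threshold are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    author: str
    tags: set[str]
    community_score: float  # e.g. net votes, normalized to [-1, 1]


@dataclass
class UserPrefs:
    muted_authors: set[str] = field(default_factory=set)
    muted_tags: set[str] = field(default_factory=set)
    community_weight: float = 0.5  # 0 = ignore the crowd, 1 = fully trust it
    threshold: float = 0.0


def visible(post: Post, prefs: UserPrefs) -> bool:
    # Individual filtering always wins: muted authors or tags are never shown.
    if post.author in prefs.muted_authors or post.tags & prefs.muted_tags:
        return False
    # Collective filtering counts only as much as this user decides it should.
    return prefs.community_weight * post.community_score >= prefs.threshold


feed = [Post("alice", {"tech"}, 0.8), Post("spammer", {"ads"}, 0.9), Post("bob", {"art"}, -0.6)]
prefs = UserPrefs(muted_authors={"spammer"})
print([p.author for p in feed if visible(p, prefs)])  # → ['alice']
```

With `community_weight=0.0` the crowd's opinion is ignored entirely, so Bob's post would also appear while the muted author stays hidden.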

A formal specification isn't necessarily agnostic because you decide which features to prioritize. So for example if you put in a consensus mechanism then the platform appears agnostic, but what you're really doing is giving the users control over the ethical element of the platform through the consensus mechanism. You're giving the user the ability to take features out, put features in, change whatever they need to change to make sure the platform stays in an acceptable range of ethical.

They're not our tools. The tools belong to the world. The tool maker just has to design the tool to be capable of learning about the user in such a way that it can be "ethically aware", in the sense that it has a model of what a human is and models of the various human religions, philosophical views, schools of thought, social norms, traditions, laws, etc. This knowledge can allow the platform to help users adhere to their own rules, because it is aware that humans adhere to these rules. The platform does not make or determine rules for humans; it simply reasons over its knowledge, which is supplied by humans.

So just as you can supply Wikipedia with the knowledge of humanity, a platform could perhaps use that knowledge to understand small facts about humanity, and when it's time to redesign the platform it can be aware of these facts and help humans make better design decisions. For example, a platform which is aware of human rights can give suggestions to improve its formal specification for those who care about human rights. But how would it know whether the users care about human rights? The consensus process would reveal what the users care about, which is why consensus is so important.

If the majority of Steemit users express support for human rights according to a process defined in the formal specification, then once consensus is reached the platform would know to suggest design improvements according to this priority. That is possible using AI which reasons over a knowledge base.
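The idea of consensus revealing what users care about, and a knowledge base turning that into design suggestions, can be sketched in a few lines. This is a hypothetical illustration, not how Steemit works: `KNOWLEDGE_BASE`, `adopted_priorities`, and `suggest_improvements` are invented names, and the rule used is one account, one vote with a simple majority, following the "majority of users" wording above.

```python
from collections import Counter

# Hypothetical knowledge base mapping a community priority to design suggestions.
# In a real system this would be a curated, human-supplied knowledge base.
KNOWLEDGE_BASE = {
    "human_rights": [
        "make content filtering a user-controlled right",
        "minimize the personal data the platform stores",
    ],
    "free_speech": ["keep filtered content reachable by direct link"],
}


def adopted_priorities(ballots: dict[str, set[str]], threshold: float = 0.5) -> set[str]:
    """Priorities backed by more than `threshold` of voters (one user, one vote)."""
    if not ballots:
        return set()
    counts = Counter(p for supported in ballots.values() for p in supported)
    return {p for p, c in counts.items() if c / len(ballots) > threshold}


def suggest_improvements(ballots: dict[str, set[str]], threshold: float = 0.5) -> list[str]:
    """Look up suggestions only for priorities the community actually adopted."""
    suggestions = []
    for priority in sorted(adopted_priorities(ballots, threshold)):
        suggestions.extend(KNOWLEDGE_BASE.get(priority, []))
    return suggestions


ballots = {
    "alice": {"human_rights"},
    "bob": {"human_rights", "free_speech"},
    "carol": set(),
}
print(adopted_priorities(ballots))
print(suggest_improvements(ballots))
```

Here two of three users back `human_rights`, so only its suggestions are surfaced; `free_speech` has one supporter and stays below the threshold. Steem's own on-chain votes are weighted by stake rather than counted per account, so a closer model would sum stake instead of counting ballots.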

Have you heard of extended mind theory? The idea is that your tool becomes an extension of you, a part of your mind. So your ethics embedded into it are only a digital self-representation. Agent-based AI, for example, must have a set of goals and priorities, and if it's your personal agent then it's going to have your goals, priorities, and ethics. By "ethically aware" I mean we need a platform which can contain knowledge of human experience, laws, social norms, and ethics in its knowledge base, with an ability to reason over it, and which can help people make higher quality decisions.

The point is not to embed ethics in the tool but in the AI itself and in the design. By design I mean the mechanism design, which is not the source code but the incentive structure. For example, Bitcoin attempted to be decentralized through a mechanism design that promotes certain priorities and security guarantees. Of course it failed, since it's becoming centralized, but you can see how mechanism design works: it rewards the participants on the platform who play the game according to the agreed rules, which gives them an incentive to adhere to those design constraints.

Steemit has this too with its reputation system and reward system. It's an information diffusion and generation platform where you're encouraged to share new information on a transparent blockchain. In theory, your property rights are protected by your control of the private keys. So there definitely are ethics built into the design of Steemit, and while anyone can fork the code and create a different version, the community will typically join the platform whose ethics it agrees with.
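The incentive idea in the two paragraphs above, rewarding the participants who play by the agreed rules, can be reduced to a toy payout function. This is a hypothetical sketch, not Bitcoin's or Steem's actual reward logic: `distribute_rewards` and the `follows_rules` predicate stand in for whatever validity check a real protocol specifies (in Bitcoin, roughly, whether a block is valid and accepted by the network).

```python
from typing import Callable


def distribute_rewards(
    pool: float,
    contributions: dict[str, float],
    follows_rules: Callable[[str], bool],
) -> dict[str, float]:
    """Split `pool` in proportion to contribution, but only among rule-followers."""
    eligible = {who: amount for who, amount in contributions.items() if follows_rules(who)}
    total = sum(eligible.values())
    if total == 0:
        return {}  # nobody eligible: nothing is paid out
    return {who: pool * amount / total for who, amount in eligible.items()}


contributions = {"alice": 3, "bob": 1, "mallory": 4}
rule_breakers = {"mallory"}  # hypothetical: e.g. submitted an invalid block
print(distribute_rewards(100, contributions, lambda who: who not in rule_breakers))
# {'alice': 75.0, 'bob': 25.0}
```

Mallory contributed the most but receives nothing, and the whole pool goes to the participants who followed the rules. Under this payout, breaking the rules earns nothing, which is the kind of incentive the mechanism-design argument relies on.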

The ethics do not go into the source code. Moreover, it's not so important that you put your ethics into anything. What you have to do is create a platform which is capable of inheriting the ethics of the community through some consensus process. This capability can be part of the formal specification of the platform and verified through formal verification. The behavior of AI can be constrained in the formal specification and proven by formal verification. Platforms which don't respect the ethics of the community and which violate human rights can be abandoned for alternatives which do, and the fittest platform will ultimately survive in a truly free market. Of course I know we don't have a truly free market, but that is the theory at least.
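The claim that a platform's behavior can be constrained by its specification and then checked can be illustrated at toy scale. The sketch below is an assumption-laden stand-in, not real formal verification: it exhaustively checks a tiny, hypothetical rule-change model (`RULES`, `apply_change`, and `find_violation` are invented for illustration) against one property, that a rule changes if and only if a strict majority approved. Exhaustive checking of a bounded model only covers the cases enumerated; stronger guarantees would need dedicated tools such as TLA+ (model checking) or Coq (machine-checked proofs).

```python
from itertools import product
from typing import Callable, Optional

RULES = ("user_filtering", "key_custody")  # hypothetical platform rules
Transition = Callable[[frozenset, str, tuple], frozenset]


def apply_change(active: frozenset, rule: str, votes: tuple) -> frozenset:
    """Toggle `rule` on or off only if a strict majority of votes approve."""
    if sum(votes) * 2 > len(votes):
        return active ^ frozenset({rule})
    return active


def find_violation(transition: Transition, max_voters: int = 5) -> Optional[tuple]:
    """Check every start state, rule, and vote vector with up to `max_voters` votes.

    Property: the rule set changes if and only if a strict majority approved,
    and only the proposed rule can change. Returns a counterexample or None.
    """
    states = [frozenset(), frozenset({RULES[0]}), frozenset({RULES[1]}), frozenset(RULES)]
    for active in states:
        for rule in RULES:
            for n in range(1, max_voters + 1):
                for votes in product((True, False), repeat=n):
                    new = transition(active, rule, votes)
                    approved = sum(votes) * 2 > n
                    changed = new != active
                    if changed != approved or not (new ^ active) <= {rule}:
                        return (active, rule, votes)
    return None


print(find_violation(apply_change))  # None: property holds for every case checked
print(find_violation(lambda a, r, v: a ^ frozenset({r})) is not None)  # True: ignores votes
```

The second call shows why the check is useful: a transition that skips the consensus step is caught immediately with a concrete counterexample.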

Very complex issue, and AI in this sense is still quite abstract, despite the accelerating growth of AI in use at present.