“World’s first decentralized big data and machine learning network, powered by a computing-centric blockchain”

A big claim – but an exciting one – so let’s take a look inside this project to see what it’s all about.

Firstly, what is big data?

Big data refers to data sets that are so large and complex that traditional data-processing application software is inadequate to deal with them. Big data challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating, information privacy and data source. A number of concepts are associated with big data: originally there were three, namely volume, variety and velocity.[2] Other concepts later attributed to big data are veracity (i.e., how much noise is in the data) [3] and value.[4]

Big data can be described by the following characteristics:

Volume

The quantity of generated and stored data. The size of the data determines the value and potential insight, and whether it can be considered big data or not.

Variety

The type and nature of the data. This helps people who analyze it to effectively use the resulting insight. Big data draws from text, images, audio, video; plus it completes missing pieces through data fusion.

Velocity

In this context, the speed at which the data is generated and processed to meet the demands and challenges that lie in the path of growth and development. Big data is often available in real-time.

Veracity

The quality of captured data can vary greatly, which affects the accuracy of any analysis.

Factory work and Cyber-physical systems may have a 6C system:

• Connection (sensor and networks)

• Cloud (computing and data on demand)

• Cyber (model and memory)

• Content/context (meaning and correlation)

• Community (sharing and collaboration)

• Customization (personalization and value)

Data must be processed with advanced tools (analytics and algorithms) to reveal meaningful information. For example, to manage a factory one must consider both visible and invisible issues with various components. Information generation algorithms must detect and address invisible issues on the factory floor, such as machine degradation and component wear.

Architecture

Big data repositories have existed in many forms, often built by corporations with a special need. Commercial vendors historically offered parallel database management systems for big data beginning in the 1990s. For many years, WinterCorp published a largest database report.

Teradata Corporation in 1984 marketed the parallel processing DBC 1012 system. Teradata systems were the first to store and analyze 1 terabyte of data in 1992. Hard disk drives were 2.5 GB in 1991 so the definition of big data continuously evolves according to Kryder's Law. Teradata installed the first petabyte class RDBMS based system in 2007. As of 2017, there are a few dozen petabyte class Teradata relational databases installed, the largest of which exceeds 50 PB. Systems up until 2008 were 100% structured relational data. Since then, Teradata has added unstructured data types including XML, JSON, and Avro.

In 2000, Seisint Inc. (now LexisNexis Group) developed a C++-based distributed file-sharing framework for data storage and query. The system stores and distributes structured, semi-structured, and unstructured data across multiple servers. Users can build queries in a C++ dialect called ECL. ECL uses an "apply schema on read" method to infer the structure of stored data when it is queried, instead of when it is stored. In 2004, LexisNexis acquired Seisint Inc. and in 2008 acquired ChoicePoint, Inc. and their high-speed parallel processing platform. The two platforms were merged into HPCC (or High-Performance Computing Cluster) Systems and in 2011, HPCC was open-sourced under the Apache v2.0 License. Quantcast File System was available about the same time.
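To make the "apply schema on read" idea concrete, here is a minimal Python sketch. It is not ECL and does not use any HPCC API; the record layout, `person_schema` and `read_with_schema` are all hypothetical names invented for illustration. The point is only that raw records are stored untouched and a structure is imposed when a query runs.

```python
import json

# Raw records are stored exactly as they arrive; no schema is enforced on write.
# (Hypothetical sample data, for illustration only.)
raw_store = [
    '{"name": "Ada", "age": "36", "city": "London"}',
    '{"name": "Lin", "age": "41"}',
    '{"name": "Sam", "visits": 3}',
]

# The schema belongs to the query: field name -> (type converter, default).
person_schema = {"name": (str, ""), "age": (int, None)}

def read_with_schema(lines, schema):
    """Parse each raw line and project it onto the schema at query time."""
    for line in lines:
        record = json.loads(line)
        row = {}
        for field, (convert, default) in schema.items():
            value = record.get(field)
            # Missing fields fall back to the default instead of failing.
            row[field] = convert(value) if value is not None else default
        yield row

rows = list(read_with_schema(raw_store, person_schema))
older_than_40 = [r["name"] for r in rows if r["age"] is not None and r["age"] > 40]
print(older_than_40)
```

A different query could read the same `raw_store` with a different schema (for example one that only cares about `visits`) without rewriting any stored data, which is the trade-off schema on read is making.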

CERN and other physics experiments have collected big data sets for many decades, usually analyzed via high performance computing (supercomputers) rather than the commodity map-reduce architectures usually meant by the current "big data" movement.

In 2004, Google published a paper on a process called MapReduce that uses a similar architecture. The MapReduce concept provides a parallel processing model, and an associated implementation was released to process huge amounts of data. With MapReduce, queries are split and distributed across parallel nodes and processed in parallel (the Map step). The results are then gathered and delivered (the Reduce step). The framework was very successful, so others wanted to replicate the algorithm. Therefore, an implementation of the MapReduce framework was adopted by an Apache open-source project named Hadoop. Apache Spark was developed in 2012 in response to limitations in the MapReduce paradigm, as it adds the ability to set up many operations (not just map followed by reduce).
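The Map and Reduce steps described above can be sketched in a few lines of plain Python. This is a single-process toy word count, not Hadoop or Google's implementation; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are made up for this example, and in a real cluster each chunk would be handled by a separate node.

```python
from collections import defaultdict
from itertools import chain

def map_phase(chunk):
    """Map step: emit a (word, 1) pair for every word in one chunk of text."""
    return [(word.lower(), 1) for word in chunk.split()]

def shuffle(pairs):
    """Group all emitted values by key, as a framework does between the steps."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce step: combine all values for one key into a single result."""
    return key, sum(values)

def word_count(chunks):
    # Here the chunks are processed one after another in a single process;
    # a real framework would run map_phase on many nodes in parallel.
    mapped = chain.from_iterable(map_phase(c) for c in chunks)
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())

chunks = ["big data is big", "data is everywhere"]
counts = word_count(chunks)
print(counts)
```

Because each call to `map_phase` only looks at its own chunk and each call to `reduce_phase` only looks at one key, both steps can be spread across machines, which is where the speed-up comes from.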

MIKE2.0 is an open approach to information management that acknowledges the need for revisions due to big data implications identified in an article titled "Big Data Solution Offering". The methodology addresses handling big data in terms of useful permutations of data sources, complexity in interrelationships, and difficulty in deleting (or modifying) individual records.

Studies in 2012 showed that a multiple-layer architecture is one option to address the issues that big data presents. A distributed parallel architecture distributes data across multiple servers; these parallel execution environments can dramatically improve data processing speeds. This type of architecture inserts data into a parallel DBMS, which implements the use of MapReduce and Hadoop frameworks. This type of framework looks to make the processing power transparent to the end user by using a front-end application server.

Big data analytics for manufacturing applications is marketed as a 5C architecture (connection, conversion, cyber, cognition, and configuration).[51]

The data lake allows an organization to shift its focus from centralized control to a shared model to respond to the changing dynamics of information management. This enables quick segregation of data into the data lake, thereby reducing the overhead time.

Applications:

• Government

• International development

• Manufacturing

• Healthcare

• Education

• Media

• Internet of Things (IoT)

• Information Technology

Right, so enough of the technical stuff – let’s focus on DxChain: what is the pain point that it is trying to solve, and why does it need the blockchain?

A blockchain is an obvious candidate for securing big data that needs to be immutable and protected from interference, but how does DxChain propose to do this on the blockchain, and how scalable will it be?

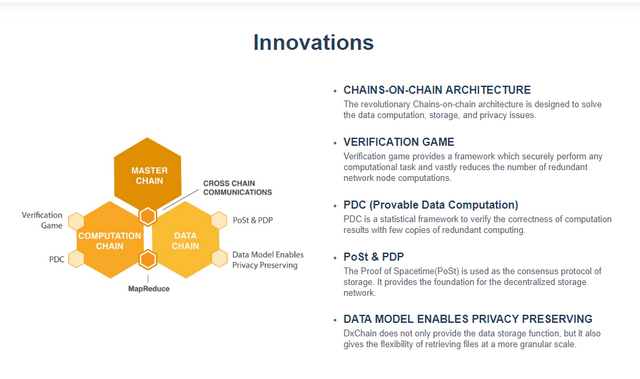

DxChain aims to create a big data and machine learning blockchain. The project uses a “chains-on-chain” architecture, in which one master chain is combined with side chains that support data mining algorithms, with the ecosystem built on top. In short, it is a blockchain ICO.

Let’s take a look at some of their material:

Nowadays, only large corporates have the capability to run big data tasks and monopolize the data-related services. For individuals and small organizations, it is costly to get data storage services and difficult to acquire data sources. Even worse, the privacy and data security risks are taken by everyone, no matter if you are able to get any rational reward from data services.

There are some blockchain-based platforms designed to provide supercomputer services, however there are no existing solutions in the market to provide decentralized parallel computing environment that supports big data and machine learning.

Therefore, DxChain will be the real game changer in the market.

Blockchain, one of the most ingenious technological inventions in recent years, has many important and innovative characteristics, such as decentralization and immutability. Unfortunately, today’s popular consensus-based protocol still uses huge amount of computational power to maintain the blockchain itself, and cannot provide useful work for the community. Nowadays, people can use Bitcoin and Ethereum to buy something and use hyperledger fabric to trace the flow of a product. However, there are few real applications of blockchain besides financial transactions and tracking assets in a supply chain. That is to say, blockchain did not fundamentally change the way people are using internet.

DxChain designs a platform to solve computation of big data in a decentralized environment. Consequently, people can use DxChain as a data market to trade data, and create applications on DxChain to meet various needs, including business operation and businesses intelligence. Therefore, by applying blockchain to data storage and computation, DxChain will change the fundamental meaning of the internet.

OK, so this really is a novel idea, and if their claims hold up it would be a genuine innovation.

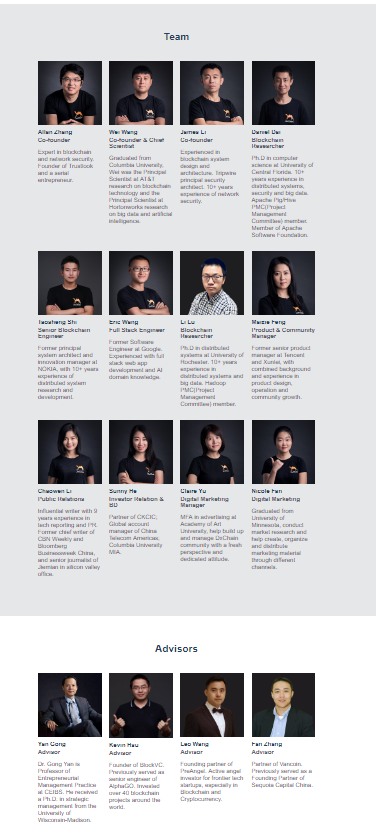

So – do they have the team to pull this off? Let’s take a look.

Indeed, this certainly looks like a world-class team, with a strong base in San Francisco. The founder, Ken, is also a founder of Block VC. The first co-founder is Alan Jiang, who worked at Nokia for 4 years and is currently the CEO of Trust Look, which develops AI-based security products. The second co-founder is Mr. Wei Wan, who worked as a blockchain scientist at AT&T for 1.5 years.

Token Sale details and metrics:

Full details regarding the token sale can be found at https://www.dxchain.com/static/assets/docs/DxChain-token-sale-announcement.pdf

In conclusion, I am very excited about what DxChain has to offer and I will certainly be making an investment.

Please feel free to comment below.

Regards,

CC.

Other links:

https://t.me/dxchainchannel

https://t.me/dxchain1

https://t.me/dxchain

https://t.me/DxChainGroup_CN

https://medium.com/dxchainnetwork

http://www.dxchain.com/

https://www.youtube.com/c/dxchain
