First of all, what is cross-validation?

Cross-validation is one of the most commonly used techniques for testing the performance of a machine learning model. It is a resampling technique used to evaluate a model when we have limited data. To perform cross-validation, we set aside a portion of the original dataset that the model does not see during training, train the model on the rest of the dataset, and then use the held-out portion to assess the model's performance.

What is the need for cross-validation?

In a typical model training setup, we usually perform a train-test split on the dataset so that the training and testing data are kept separate from each other for a reliable model assessment. Depending on the size of the dataset, the split is commonly 80/20 or 70/30.

With a single train-test split, however, the model's accuracy depends on which records happen to land in the test set, so it does not settle on one firm value: a different random split gives a different accuracy. Because of this, we cannot be sure how well the model really performs, and it is risky to rely on it for our business problem.
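To make this fluctuation concrete, here is a minimal sketch using only the Python standard library. The dataset, the threshold "model", and the helper names (`make_toy_data`, `random_split`, `fit_threshold`, `accuracy`) are hypothetical stand-ins chosen for illustration; a real project would more likely use a library such as scikit-learn. The same data and the same model are evaluated on five different random 80/20 splits, and only the seed changes.

```python
import random

def make_toy_data(n=200, seed=42):
    """Hypothetical 1-D dataset: label is 1 when x > 0.5, with about 15% of labels flipped as noise."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n)]
    ys = [int(x > 0.5) if rng.random() > 0.15 else int(x <= 0.5) for x in xs]
    return xs, ys

def random_split(n, test_fraction, seed):
    """Shuffle indices 0..n-1 and hold out the last test_fraction of them as the test set."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    cut = n - round(n * test_fraction)
    return idx[:cut], idx[cut:]

def fit_threshold(xs, ys, train_idx):
    """Toy 'model': a threshold halfway between the mean x of each class in the training set."""
    pos = [xs[i] for i in train_idx if ys[i] == 1]
    neg = [xs[i] for i in train_idx if ys[i] == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def accuracy(xs, ys, test_idx, threshold):
    """Fraction of test records whose predicted label (x > threshold) matches the true label."""
    return sum(int(xs[i] > threshold) == ys[i] for i in test_idx) / len(test_idx)

xs, ys = make_toy_data()
for seed in range(5):  # same data, same model, five different 80/20 splits
    train_idx, test_idx = random_split(len(xs), 0.2, seed)
    threshold = fit_threshold(xs, ys, train_idx)
    print(f"seed={seed}: test accuracy = {accuracy(xs, ys, test_idx, threshold):.3f}")
```

Each seed puts a different set of 40 records in the test set, so the printed accuracies generally differ even though nothing about the model changed.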

To prevent this, we can use cross-validation. There are several techniques for cross-validating a model, but all of them follow a similar procedure:

1. Partition the original dataset into two parts: one for training and one for testing.
2. Train the model on the training set.
3. Test the model on the test set.
4. Repeat steps 1–3 two or more times. The number of repetitions depends on the cross-validation (CV) technique you are using.
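The generic loop above can be sketched in a few lines. This is a minimal illustration, assuming the simplest repetition scheme, repeated random train-test splitting (also called repeated holdout or Monte Carlo cross-validation), which is not the K-fold method discussed below. The "model" is a hypothetical majority-class baseline, and `repeated_holdout` and the labels are made up for illustration.

```python
import random
from collections import Counter
from statistics import mean

def repeated_holdout(labels, repeats=5, test_fraction=0.2, seed=0):
    """Repeat steps 1-3 `repeats` times and return one accuracy per repetition."""
    rng = random.Random(seed)
    n = len(labels)
    n_test = round(n * test_fraction)
    scores = []
    for _ in range(repeats):
        idx = list(range(n))
        rng.shuffle(idx)                                   # step 1: partition
        test_idx, train_idx = idx[:n_test], idx[n_test:]
        # step 2: "train" a majority-class baseline on the training part
        majority = Counter(labels[i] for i in train_idx).most_common(1)[0][0]
        # step 3: test it on the held-out part
        correct = sum(labels[i] == majority for i in test_idx)
        scores.append(correct / n_test)
    return scores

labels = [0] * 70 + [1] * 30   # hypothetical labels: 70% class 0, 30% class 1
scores = repeated_holdout(labels)
print(scores, mean(scores))
```

Here the test sets of different repetitions may overlap; K-fold cross-validation instead guarantees that every record is tested exactly once.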

There are different types of cross-validation, but K-fold cross-validation is one of the most popular, and it is the one we will discuss here.

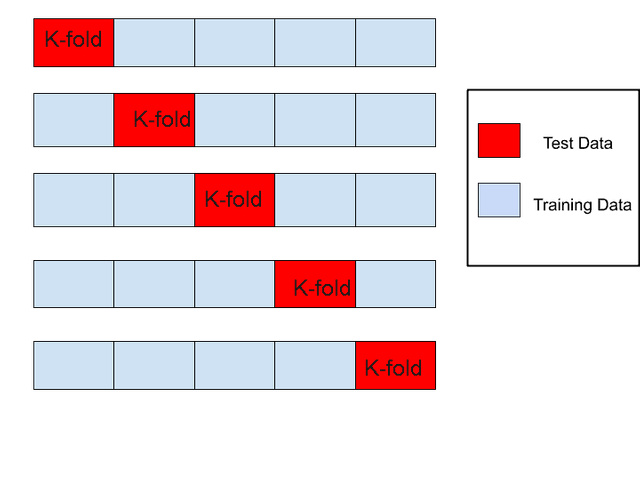

The K-fold cross-validation approach splits the dataset into K partitions of roughly equal size, called folds. In each trial, the model is trained on K-1 folds and the remaining fold is used as the test set, so every fold serves as the test set exactly once.
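A minimal sketch of the fold bookkeeping, using only the standard library. `k_fold_indices` is a hypothetical helper, not a library API; it makes contiguous folds without shuffling, so real data should usually be shuffled first (scikit-learn's `KFold`, for instance, offers a `shuffle` option for this).

```python
def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) for each of the k folds over indices 0..n-1.

    Folds are contiguous and differ in size by at most one record; no shuffling is done.
    """
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and n")
    base, extra = divmod(n, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test_idx = list(range(start, start + size))           # this fold is the test set
        train_idx = list(range(0, start)) + list(range(start + size, n))  # the other k-1 folds
        yield train_idx, test_idx
        start += size

for i, (train_idx, test_idx) in enumerate(k_fold_indices(10, 5), start=1):
    print(f"K{i}: test={test_idx}, train size={len(train_idx)}")
```

With 10 records and K=5, each trial tests on 2 records and trains on the other 8, and the five test sets together cover every record exactly once.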

For example, consider a dataset with 1,000 records on which we want to perform K-fold cross-validation. With K=5, there will be 5 folds and therefore 5 trials, and we measure the model's accuracy in each trial.

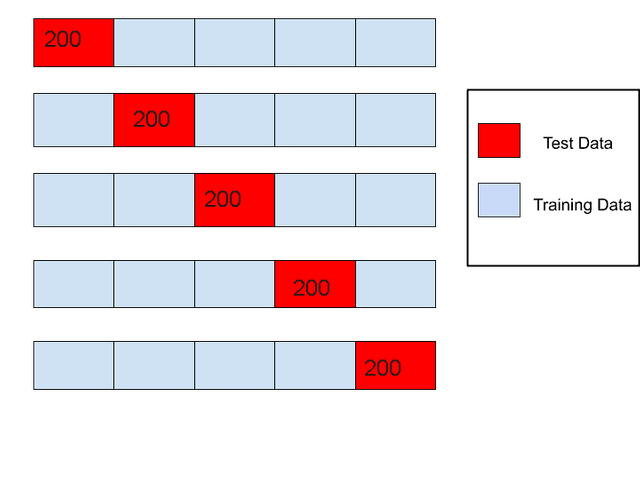

Each fold contains n/K samples in its test set, where:

n = the number of records in the original dataset

K = the number of folds

Therefore, for a dataset with 1,000 records and 5 folds, each trial uses n/K = 1000/5 = 200 records as test samples and the remaining 800 records as training samples.
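The n/K formula assumes n is divisible by K. When it is not, one common convention (the one documented for scikit-learn's `KFold`) gives the first n mod K folds one extra record. A small sketch of that arithmetic, with `fold_sizes` as a hypothetical helper name:

```python
def fold_sizes(n, k):
    """Test-set size of each fold: n // k records, with the first n % k folds taking one extra."""
    base, extra = divmod(n, k)
    return [base + 1 if i < extra else base for i in range(k)]

n, k = 1000, 5
for i, test_size in enumerate(fold_sizes(n, k), start=1):
    print(f"K{i}: {test_size} test records, {n - test_size} training records")
```

For the article's example every fold gets 200 test records and 800 training records; for 1,003 records the sizes would be 201, 201, 201, 200, 200.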

Suppose the first trial, K1, yields accuracy A1.

In the second trial, K2, the model is again trained and tested on the same 1,000-record dataset, but this time the 200 test records are completely different from those in K1: no test sample overlaps with the previous trial. A2 is the accuracy for fold K2. The process continues in the same way until all K=5 folds, the number we chose initially, have been used as the test set.

Here, A1, A2, A3, A4, and A5 are the accuracies of folds K1, K2, K3, K4, and K5, respectively.

The overall accuracy is usually reported as the mean, A, of all K fold accuracies. Because every record is tested exactly once, this averaged estimate depends much less on any one particular split, so it does not fluctuate the way the accuracy from a single train-test split does.
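Putting the pieces together, here is a minimal end-to-end sketch of 5-fold cross-validation in plain Python. The noisy 1-D dataset, the threshold classifier, and the function names are hypothetical and exist only to produce per-fold accuracies A1 to A5 and their mean A; a real workflow would substitute its own model and data.

```python
import random
from statistics import mean

def make_toy_data(n=1000, seed=0):
    """Hypothetical 1-D dataset: label 1 when x > 0.5, with about 15% of labels flipped as noise."""
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n)]
    ys = [int(x > 0.5) if rng.random() > 0.15 else int(x <= 0.5) for x in xs]
    return xs, ys

def k_fold_cv(xs, ys, k=5, seed=0):
    """Return one accuracy per fold (A1..Ak) for a toy threshold classifier."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)             # shuffle once, then cut into k folds
    folds = [idx[i::k] for i in range(k)]        # fold sizes differ by at most one
    scores = []
    for i in range(k):
        test_idx = folds[i]
        train_idx = [j for f in folds[:i] + folds[i + 1:] for j in f]
        # "Train": threshold halfway between the mean x of each class in the training folds.
        pos = [xs[j] for j in train_idx if ys[j] == 1]
        neg = [xs[j] for j in train_idx if ys[j] == 0]
        threshold = (mean(pos) + mean(neg)) / 2
        # "Test": accuracy on the held-out fold only.
        correct = sum(int(xs[j] > threshold) == ys[j] for j in test_idx)
        scores.append(correct / len(test_idx))
    return scores

xs, ys = make_toy_data()
scores = k_fold_cv(xs, ys, k=5)
for i, a in enumerate(scores, start=1):
    print(f"A{i} = {a:.3f}")
print(f"A (mean) = {mean(scores):.3f}")
```

With 1,000 records and K=5, each fold holds out 200 records, matching the example in the text.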

In addition, you can be more confident about your model because you now have the minimum, maximum, and mean accuracy across the K folds. In our example, suppose fold K3 gives the highest accuracy, A3, and fold K5 gives the lowest, A5; the mean accuracy A is the average of the accuracies from all K folds.

With this information you can state with confidence: this is our model, its lowest accuracy is A5, its highest accuracy is A3, and its average accuracy is A. This is more reliable for solving business problems, because the model's performance is now described by a stable estimate and a known range instead of a single number that changes with every split.

Note that cross-validation gives you not only an estimate of your model's performance but also a measure of how precise that estimate is, for example through the spread of the fold accuracies.
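A minimal sketch of summarizing the fold accuracies, using Python's `statistics` module. The five accuracy values are placeholders made up for illustration (chosen so that A3 is the highest and A5 the lowest, as in the example above), not results from any real model. The standard error here is only a rough indicator, since the folds share training data and their scores are not fully independent.

```python
from math import sqrt
from statistics import mean, stdev

def summarize(scores):
    """Summarize per-fold scores: range, mean, sample standard deviation, and a rough standard error."""
    k = len(scores)
    sd = stdev(scores)  # sample standard deviation across folds (needs k >= 2)
    return {
        "min": min(scores),
        "max": max(scores),
        "mean": mean(scores),
        "stdev": sd,
        "stderr": sd / sqrt(k),  # rough: fold scores are not fully independent
    }

# Placeholder fold accuracies A1..A5, made up for illustration only.
fold_accuracies = [0.84, 0.86, 0.88, 0.85, 0.82]
for name, value in summarize(fold_accuracies).items():
    print(f"{name}: {value:.4f}")
```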

How to Determine the Value of K

A key step in the process is choosing the value of K. Choosing it well helps keep the bias of the performance estimate low. Commonly, K is set to 5 or 10. For example, scikit-learn uses 5 folds by default (since version 0.22), which gives you 5 groups.
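One way to see the trade-off behind the choice of K is to look at how much data each trial trains on and how many times the model must be fitted. This is a small arithmetic sketch with a hypothetical helper, `split_proportions`; it does not train anything. Larger K means each trial trains on more of the data (closer to the final model trained on everything) but requires more fits; K = n is leave-one-out cross-validation.

```python
def split_proportions(n, k):
    """For K-fold CV on n records: approximate training and test records per trial, and number of fits."""
    test = n / k
    return {"k": k, "train": n - test, "test": test, "fits": k}

n = 1000
for k in (2, 5, 10, n):   # k = n is leave-one-out cross-validation
    p = split_proportions(n, k)
    print(f"K={k}: ~{p['train']:.0f} train / ~{p['test']:.0f} test records per trial, {p['fits']} fits")
```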

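One limitation of plain K-fold cross-validation is that it does not work well on imbalanced datasets: a fold may end up with very few, or no, samples of a minority class. For such datasets we use stratified K-fold cross-validation, which keeps the class proportions in each fold approximately the same as in the full dataset.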
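A minimal sketch of the stratification idea, assuming a simple round-robin assignment within each class. This is an illustration only, not scikit-learn's `StratifiedKFold` algorithm; `stratified_folds` and the labels are hypothetical, and the sketch does not shuffle, which real data usually needs.

```python
from collections import Counter, defaultdict

def stratified_folds(labels, k):
    """Assign each index to one of k test folds so every fold keeps roughly the overall class mix.

    Simple round-robin dealing within each class; not scikit-learn's exact algorithm.
    """
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    folds = [[] for _ in range(k)]
    pos = 0  # keep dealing where the previous class stopped, so fold sizes stay balanced
    for y in sorted(by_class):
        for i in by_class[y]:
            folds[pos % k].append(i)
            pos += 1
    return folds

labels = [0] * 90 + [1] * 10   # hypothetical imbalanced labels: 10% minority class
for f, test_idx in enumerate(stratified_folds(labels, 5), start=1):
    print(f"fold {f}: {Counter(labels[i] for i in test_idx)}")
```

Each of the five folds gets 18 majority-class and 2 minority-class records, so every test set sees the minority class in the same 10% proportion as the full dataset.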

Summary

In this article, I tried to explain K-fold cross-validation in simple terms. If you have any questions about the post, please ask them in the comment section and I will do my best to answer them.