The Ethereum-blockchain size has exceeded 1TB, and yes, it’s an issue

(TL;DR: It has nothing to do with storage space limits)

This is an indirect response to the following article by Afri Schoedon, a developer for the Parity Ethereum client, written less than a year ago:

The Ethereum-blockchain size will not exceed 1TB anytime soon.

dev.to

I want to make it clear that I have respect for almost all of the developers in this space, and this is not intended to attack anyone. It’s meant to elaborate on what the real concerns are and explain how the original article does nothing to address those real concerns. I would actually love to see something that does, because then we can throw it into Bitcoin. That being said, there are some developers who mislead, obscure, ignore, and attack via protocol confusion like what occurred with 2X and the replay protection drama, but most aren’t like that. You can’t watch something like this or read something like this and hate these developers. They’re genuinely trying to fight the same fight as us, and I believe Afri is part of the latter group, not the former.

https://github.com/paritytech/parity/issues/6372

If you’ve read my other articles you’re going to see some small bits of that information repeated. Up until now I’ve written primarily about Bitcoin from a “maximalist” perspective (and still do) and focused on conflicts within that community. What you may find interesting, if you only watch from the corner of your eye, is that the reason for “conflict” here is exactly the same. I’ll even use Proof-of-Stake as further leverage for my argument without criticizing it.

Edit: It seems like people are not reading the subtitle and are misunderstanding something. This is not about archival nodes. This is about fully validating nodes. I don’t care if you prune the history or skip the line to catch up with everyone else. This is about staying in sync, after the fact. Light nodes aren’t nodes.

This has become a 2-part article. When you’re done with this article you can read the follow-up one:

Sharding centralizes Ethereum by selling you Scaling-In disguised as Scaling-Out

The differences between Light-Clients & Fully-Validating Nodes

medium.com

Index

My Argument: Ethereum’s runaway data directory size is just the tip.

My Prediction: It will all work, until it doesn’t.

My Suggestion: Transpose.

My Argument: Larger blocks centralize validators.

It’s that simple. It’s the central argument in the entire cryptocurrency community with regard to scaling. Not many people familiar with blockchain protocols actually deny this. The following is an excerpt from what I consider to be a very well put together explanation of various “Layer 2” scaling options. (Of these, the only working one is already implemented on Bitcoin.)

https://medium.com/l4-media/making-sense-of-ethereums-layer-2-scaling-solutions-state-channels-plasma-and-truebit-22cb40dcc2f4

That article is written by Josh Stark. He gets it. His company even announced a project that’s meant to mirror the Lightning Network on Ethereum. (Which is oddly coincidental given Elizabeth Stark’s company is helping build Lightning.)

The problem? Putting everything about Proof of Stake completely to the side, the incentive structure of the base layer is completely broken because there is no cap on Ethereum’s blocksize. Even if one were put in place it would have to be reasonable, and then these Dapps wouldn’t even work, because they’re barely working now with no cap. For this argument to hold it doesn’t even matter what that cap is set at, because right now there is none in place.

Let’s backtrack a bit. I’m going to briefly define a blockchain and upset people.

Here is what a blockchain provides:

An immutable & decentralized ledger.

That’s it.

Here is what a blockchain needs to keep those properties:

A decentralized network with the following prerogatives:

Distribute my ledger — Validate

Append my ledger — Work

Incentivise my needs — Token

Here is what kills a blockchain:

Any feature built into the blockchain that detracts from the network’s goals.

A blockchain is just a tool for a network. It’s actually a very specific tool that can only be used by a very specific kind of network. So much so that they require each other to exist and fall apart when they don’t co-operate, given enough time. You can build on top of this network, but quite frankly anything else built into the base layer (L1) that negatively affects the network’s ability to do its job is going to bring the entire network to its knees…given enough time.

Here’s an example of an L1 feature that doesn’t affect the network: Multisig.

It does require the node to do a bit of extra work, but it’s “marginal”. The important thing to note is that hardware is not the bottleneck for these (properly designed) networks; network latency is. Something as simple as paying to a multi-signature address won’t tax the network any more than paying to a normal address does, because you’re paying on a per-byte basis for every transaction. It’s a blockchain feature that doesn’t harm the network’s ability to continue doing its job because the data being sent over the network is (1) paid for per-byte, and (2) regulated via the blocksize cap. Regulated, not “artificially capped”. The blocksize doesn’t restrict transaction flow, it regulates the amount of broadcast-to-all data being sent over the network. Herein lies the problem.

When we talk about the “data directory” size, it’s a direct reference to the size of the entire chain of blocks from the original genesis block, but taking this at face value results in the standard responses:

Disk space is cheap, also see Moore’s Law.

You can prune the blockchain if you need to anyway.

You don’t need to validate everything from the genesis block, the last X amount of blocks is enough to trust the state of the network.

What these completely ignore is the data per-second a node must process.
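As a rough way to put numbers on “data per-second”, the sketch below divides block size by block interval, and optionally multiplies by how many peers a node forwards each block to. The 1 MB / 600 s inputs approximate Bitcoin’s legacy block size limit and target block interval; the 8-peer fan-out mirrors the default described later in this article; the second chain’s 10 MB every 15 s is purely hypothetical. The model ignores transaction relay, compact block relay and protocol overhead.

```python
def sustained_rate(block_bytes: float, interval_s: float, relay_peers: int = 1) -> float:
    """Average bytes per second a node must move for new blocks alone.

    relay_peers=1 approximates download; a larger value is a worst-case upload
    figure for a node that forwards every full block to that many peers.
    """
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    return block_bytes * relay_peers / interval_s


btc_down = sustained_rate(1_000_000, 600)                 # ~1 MB every ~10 minutes
btc_up = sustained_rate(1_000_000, 600, relay_peers=8)    # worst case: full block to 8 peers
big_down = sustained_rate(10_000_000, 15)                 # hypothetical chain, illustrative only

print(f"{btc_down:,.0f} B/s down, {btc_up:,.0f} B/s worst-case up")
print(f"{big_down:,.0f} B/s down on the hypothetical chain")
```

The point is the shape rather than the exact figures: old history can be pruned, but this rate has to be sustained continuously just to stay in sync.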

You can read my entire article about Moore’s Law if you want, but I’ll excerpt the important part below. Over in Oz they try and argue “you don’t need to run a node, only miners should decide what code is run”. It’s borderline absurd, but I won’t have to worry about that here because Proof of Stake completely removes miners and puts everything on the nodes. (They always were, but now there aren’t miners to divert the argument.)

Moore’s Law is a measure of integrated circuit growth rates, which averages to 60% annually. It’s not a measure of the average available bandwidth (which is more important).

Bandwidth growth rates are slower. Check out Nielsen’s Law. Starting with a 1:1 ratio (no bottleneck between hardware and bandwidth), at 50% growth annually, 10 years of compound growth results in a ~1:2 ratio. This means bandwidth scales twice as slow in 10 years, 4 times slower in 20 years, 8 times in 30 years, and so on… (It actually compounds much worse than this, but I’m keeping it simple and it still looks really bad.)
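The compounding in that paragraph can be checked in a few lines. This is a minimal sketch that simply takes the 60% and 50% annual figures quoted above at face value and computes how far hardware pulls ahead of bandwidth after a given number of years.

```python
HARDWARE_GROWTH = 0.60   # annual figure quoted above for Moore's Law
BANDWIDTH_GROWTH = 0.50  # annual figure quoted above for Nielsen's Law


def hardware_to_bandwidth_ratio(years: float,
                                hw_growth: float = HARDWARE_GROWTH,
                                bw_growth: float = BANDWIDTH_GROWTH) -> float:
    """How many times further hardware has grown than bandwidth after `years`,
    starting from a 1:1 ratio and compounding once per year."""
    return ((1 + hw_growth) / (1 + bw_growth)) ** years


for years in (10, 20, 30, 40):
    print(years, round(hardware_to_bandwidth_ratio(years), 1))
```

With these inputs the gap comes out to roughly 1.9x after 10 years and 3.6x after 20, in line with the doubling-per-decade simplification.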

Network latency scales slower than bandwidth. This means that as the average bandwidth speeds increase among nodes on the network, block & data propagation speeds do not scale at the same rate.
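A simple store-and-forward model shows why more bandwidth alone doesn’t fix propagation: each hop pays a fixed latency plus the time to push the block through the link (plus any validation time). The hop count, latency and bandwidth values below are illustrative assumptions, not measurements of either network, and the model ignores pipelining and compact relay.

```python
def propagation_time(block_bytes: float, hops: int, latency_s: float,
                     bandwidth_bits_per_s: float, validate_s: float = 0.0) -> float:
    """Seconds for a block to cross `hops` links if every node downloads,
    validates, then forwards it (store-and-forward, no pipelining)."""
    transfer_s = block_bytes * 8 / bandwidth_bits_per_s
    return hops * (latency_s + transfer_s + validate_s)


slow = propagation_time(1_000_000, hops=6, latency_s=0.1, bandwidth_bits_per_s=10_000_000)
fast = propagation_time(1_000_000, hops=6, latency_s=0.1, bandwidth_bits_per_s=20_000_000)
print(round(slow, 2), round(fast, 2))
```

Doubling bandwidth in this example cuts the total from 5.4 s to 3.0 s, but the 0.6 s contributed by latency (6 hops × 0.1 s) never shrinks, and a larger block grows the transfer term right back.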

Larger blocks demand better data propagation (latency) to counter node centralization.

Strictly from an Ethereum perspective, with a future network of just nodes after the switch to Proof of Stake, you’d generally want to ensure node centralization is not an issue. The bottleneck for Bitcoin’s network is its blocksize (as it should be), because it ensures the growth rate of network demands never exceeds the growth rate of external (and in some cases indeterminable) limitations like computational performance or network performance. Because of Ethereum’s exponentially growing blocksize, the bottleneck is not regulated below these external factors, and as such results in a shrinking and more centralized network due to network demands that increasingly exceed the average user’s hardware and bandwidth.

Bitcoin SPV clients aren’t nodes. They don’t propagate blocks or transactions around the network, they leech, and all that they leech are the block headers.

Remember this because it’s going to get very important later in this article:

You can put invalid transactions into a block and still create a valid block header.

If the network is controlled by 10 FULL-nodes, you only need half of them to ignore/approve invalid transactions so long as the header is valid.
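To make the header point concrete, here is a toy model (not Bitcoin’s or Ethereum’s real serialization or consensus rules): a header commits to the transactions through a Merkle root and carries a proof-of-work nonce. Checking the header only proves that work was done over some set of transactions; only replaying those transactions against current balances tells you whether they were valid. The account names, the balance rule and the deliberately easy target are all assumptions for illustration.

```python
import hashlib
from dataclasses import dataclass


def sha256d(data: bytes) -> bytes:
    """Double SHA-256."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


@dataclass(frozen=True)
class Tx:
    sender: str
    receiver: str
    amount: int

    def txid(self) -> bytes:
        return sha256d(f"{self.sender}->{self.receiver}:{self.amount}".encode())


def merkle_root(txids: list) -> bytes:
    """Pairwise-hash txids up to a single root, duplicating the last one on odd layers."""
    layer = list(txids) or [b"\x00" * 32]
    while len(layer) > 1:
        if len(layer) % 2:
            layer.append(layer[-1])
        layer = [sha256d(layer[i] + layer[i + 1]) for i in range(0, len(layer), 2)]
    return layer[0]


def header_hash(prev: bytes, root: bytes, nonce: int) -> int:
    return int.from_bytes(sha256d(prev + root + nonce.to_bytes(8, "little")), "big")


def mine(prev: bytes, txs: list, target: int) -> int:
    """Search for a nonce so the header hash falls below the target."""
    root = merkle_root([t.txid() for t in txs])
    nonce = 0
    while header_hash(prev, root, nonce) >= target:
        nonce += 1
    return nonce


def header_ok(prev: bytes, root: bytes, nonce: int, target: int) -> bool:
    """All a header-only client can check: real work was done over this Merkle root."""
    return header_hash(prev, root, nonce) < target


def fully_valid(txs: list, balances: dict) -> bool:
    """What a fully validating node checks: every spend is covered by an existing balance."""
    bal = dict(balances)
    for t in txs:
        if t.amount <= 0 or bal.get(t.sender, 0) < t.amount:
            return False
        bal[t.sender] -= t.amount
        bal[t.receiver] = bal.get(t.receiver, 0) + t.amount
    return True


balances = {"alice": 5}
txs = [Tx("alice", "bob", 3), Tx("mallory", "mallory", 1_000)]  # mallory spends coins she never had
prev, target = b"\x00" * 32, 1 << 248  # easy target: roughly 1 in 256 nonces succeed
nonce = mine(prev, txs, target)
root = merkle_root([t.txid() for t in txs])
print(header_ok(prev, root, nonce, target))  # True
print(fully_valid(txs, balances))            # False
```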

This is why validating the transactions matters from a network perspective, and why you need a large decentralized network. It doesn’t matter from my grandma’s perspective and that’s fine, but we aren’t talking about my grandma. We’re talking about ensuring the network of working and actively participating nodes grows, not shrinks.

This node was participating until it got cut off due to network demand growth:

https://www.reddit.com/r/ethereum/comments/58ectw/geth_super_fast/d908tik/

It’s not uncommon and it continues to happen:

https://github.com/ethereum/go-ethereum/issues/14647

Notice how the solution is to “find a good peer” or “upgrade your hardware”? Good peers shouldn’t be the bottleneck. Hardware shouldn’t be either. When all of your peers are hosed up by so many others leeching from them (because the good peers are the ones doing the real work), you create a network of masters and slaves that gradually trends towards only one master and all slaves. (If you don’t agree with that statement you need to make a case for why this trend will subside in the future, because currently that’s the direction this is going and it won’t stop unless a cap is put in place. If your answer is sharding, I address that fairy dust at the end.) It’s the definition of centralizing. Unregulated blocks centralize networks. Large (but capped) blocks are only marginally better: they set a precedent of raising the size “in times of need”, which leads to an ever-increasing blocksize and mirrors the results of unregulated blocksizes. This is why we won’t budge on the Bitcoin blocksize.

I tweeted about it a few times but clearly I didn’t think that was enough. My Twitter reach doesn’t really extend much into the Ethereum space.

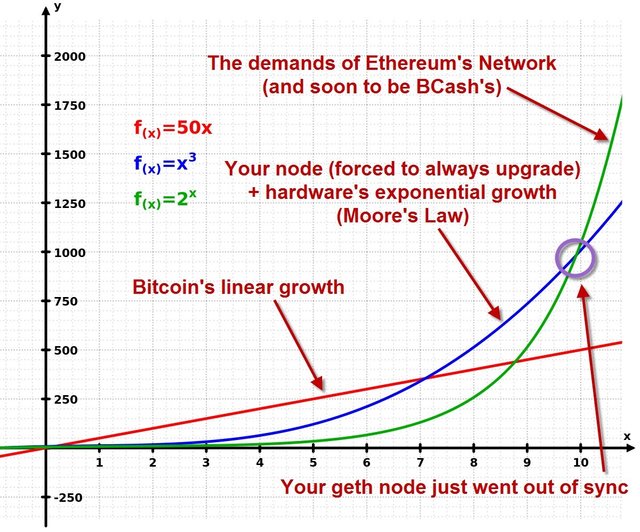

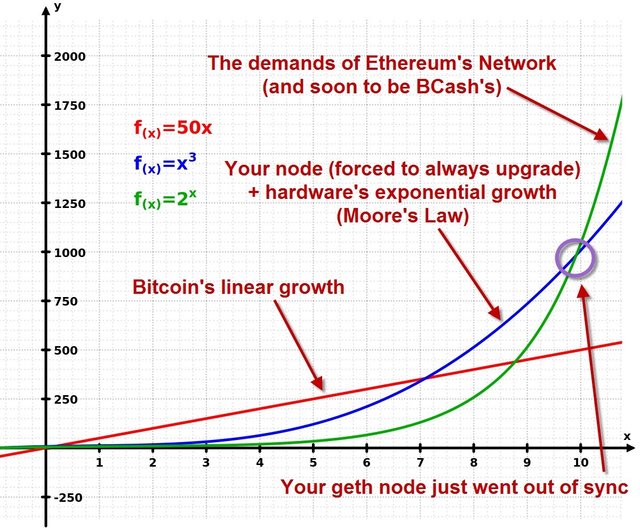

That chart is symbolic and not representative of any actual numbers. It only serves to visually express the point I’m trying to make. To clarify, the green curve represents an aggregated average of the various demands of the Ethereum Network. At some point your node will fall out of sync because of this, or a blocksize cap will be put in place. It could happen now, or it could happen in 10 years, or in 50, but at this rate your node will fall out of sync at some point. It will never happen in Bitcoin. You can deny it now all you want, but this article will be here for when it happens, and when it does, asinine Dapps like CryptoKitties, Shrimp Farm, Pepe Farm, and whatever comes next will cease to function. This is exactly what happened to Ryan Charles’ service Yours.org that he originally built on Bitcoin. The only difference being Bitcoin already had the cap in place, and Ryan either didn’t foresee this from a lack of understanding, or for some reason he expected the blocksize to keep getting raised. Instead of reassessing he doubled down on BCash, meanwhile Yalls.org took his concept and implemented the same exact thing on top of Bitcoin’s Lightning Network.
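The chart is symbolic, but the logic behind it can be written down. Assuming (hypothetically) that a home node’s capacity and the network’s demands each grow at a constant annual rate, demand overtakes capacity after ln(capacity0 / demand0) / ln((1 + demand_growth) / (1 + capacity_growth)) years whenever demand grows faster, and never if demand growth is held at or below capacity growth. The starting ratio and growth rates in the example are illustrative, not measured.

```python
import math
from typing import Optional


def years_until_out_of_sync(capacity0: float, demand0: float,
                            capacity_growth: float, demand_growth: float) -> Optional[float]:
    """Years until demand0 * (1 + demand_growth)**t exceeds capacity0 * (1 + capacity_growth)**t.

    Returns 0.0 if demand already exceeds capacity, or None if it never catches up.
    """
    if demand0 >= capacity0:
        return 0.0
    if demand_growth <= capacity_growth:
        return None  # demand held at or below capacity growth: the gap never closes
    return math.log(capacity0 / demand0) / math.log((1 + demand_growth) / (1 + capacity_growth))


# Hypothetical: a node has 10x the capacity it needs today, and capacity grows 50% a year.
print(years_until_out_of_sync(10, 1, capacity_growth=0.5, demand_growth=1.0))  # about 8.0
print(years_until_out_of_sync(10, 1, capacity_growth=0.5, demand_growth=0.5))  # None
```

In this toy setting the starting headroom only changes when the node drops out, not whether it does; the only parameter that prevents the crossover is keeping demand growth at or below capacity growth.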

My Prediction: Ethereum will implement a blocksize cap and it will race BCash to both of their deaths.

http://bc.daniel.net.nz/ ←No longer updating statistics, chart is edited & extrapolated using REAL current data.

https://ethereum.stackexchange.com/questions/143/what-are-the-ethereum-disk-space-needs

The chart above isn’t even a prediction. This is me filling in the blanks (in yellow) on what was the last remaining graph that compared both chains data directories, and then extrapolating from it. Here’s what we know:

Bitcoin’s future is predictable. The blockchain growth & network demands will always be linear. (Ideal)

The amount of data an Ethereum node is required to process per second is through the roof and climbing. (Not ideal)

If Ethereum on-chain demand freezes where it is now, blockchain growth will continue the linear trend highlighted by that dotted line. (Very bad)

If Ethereum on-chain demand continues to grow exponentially the amount of people complaining about their node going out of sync will reach a tipping point. (There’s only one option when this occurs.)

That graph above? The owner stopped trying to maintain the node. Physical demands are an issue as well, like time constraints in your personal life. Servicing requirements need to be low, not high, not reasonable…low.

Do you know what I do to service my Bitcoin/Lightning node? I leave my laptop on. That’s it. If I have to reboot I shut down the services, reboot, and start them back up again. Day to day I use my laptop for an assortment of other tasks, none of which inhibit its ability to run the node software. With all due respect, if a change were implemented and forced on me that resulted in my node no longer being compatible with the network and unable to maintain a sync, I would flip out over the idiocy that allowed it, if I were a misinformed individual. Fortunately I’m not, and I signed up for a blockchain with foresight (Bitcoin).

The problem? I don’t think most of the people running Ethereum nodes are informed enough to know what they signed up for. I don’t think they understand the fundamental incentive models, and I don’t think they fully realize where and why they break down with something as simple as not having a blocksize cap. Hopefully this article will succeed at teaching that.

So what happens when that psychological tipping point is reached? Do people give up? How many nodes have to be lost for this to occur? The explorer websites aren’t even tracking this data anymore. Etherscan.io is no longer tracking full or fast sync directories, Etherchain.org says: Error: Not Found

Etherscan also isn’t letting you zoom out on the memory pool, the queue of transactions waiting to be included into blocks. The reason fees go up is because this queue builds up. You should be able to see this over time. Here’s one that tracks Bitcoin’s mempool, side by side with the Etherscan.io one:

https://jochen-hoenicke.de/queue/#1,4d /// https://etherscan.io/chart/pendingtx

Both of these charts are monitoring the rough total pending transaction counts on these networks, and the scales are about the same, 4 and 5 days respectively. The difference? I can zoom out on the Bitcoin one and see the entire history. Why does this matter? Psychology matters when your network has no regulated upper boundaries. Here’s what ours looks like zoomed out:

See what I mean? See how scale matters? What if I zoomed out on Ethereum’s mempool and saw that it was at the top of an ever growing mountain? I’m not saying that’s where it is today, but I am saying that this information needs to stop being obscured. I’m also saying that if/when it ever is unobscured, it’ll be too late and nothing can be done about it anyway. It’s already too late now.

Let’s take a look at block and transaction delay on Bitcoin’s network. Below you’ll see two charts. The 1st one is how long it takes for a block to spread across the network, the 2nd is for a transaction. Transactions are processed by the nodes (all 115,000 of them) and held onto until a valid block is created by a miner and announced to the network.

Block propagation times have dropped drastically because of very well designed improvements to the software. Transactions are validated when they come in and kept in the mempool. When a new block is received, it’s quickly cross referenced with all the transactions you already have stored, and very rarely includes many transactions you haven’t received yet. This allows your node to validate that block extremely fast and send it out to all your other peers.
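The block reconstruction described above is the idea behind compact block relay (BIP 152). Below is a simplified sketch: the sender lists short transaction IDs, and the receiver rebuilds the block from its mempool and only has to ask for what it is missing. For brevity it uses the first 6 bytes of each txid as the short ID; the real protocol derives its 6-byte short IDs with a SipHash keyed per block. The function names and sample transactions are hypothetical.

```python
import hashlib


def txid(raw_tx: bytes) -> bytes:
    """Double SHA-256 of the serialized transaction."""
    return hashlib.sha256(hashlib.sha256(raw_tx).digest()).digest()


def short_id(full_txid: bytes) -> bytes:
    # Simplification: first 6 bytes of the txid. BIP 152 uses a keyed SipHash instead,
    # which makes collisions hard for an attacker to engineer in advance.
    return full_txid[:6]


def reconstruct(short_ids: list, mempool: dict):
    """Rebuild a block from short IDs using transactions already in the mempool.

    mempool maps txid -> raw transaction. Returns (block, missing), where block holds
    None at every position that could not be filled and missing lists those positions.
    """
    by_short = {short_id(t): raw for t, raw in mempool.items()}
    block, missing = [], []
    for i, sid in enumerate(short_ids):
        raw = by_short.get(sid)
        block.append(raw)
        if raw is None:
            missing.append(i)
    return block, missing


# The node already relayed three transactions; the new block has two of them plus one new one.
mempool = {txid(raw): raw for raw in (b"tx-a", b"tx-b", b"tx-c")}
announced = [short_id(txid(raw)) for raw in (b"tx-a", b"tx-c", b"tx-new")]
block, missing = reconstruct(announced, mempool)
print(missing)  # [2]: only the unseen transaction has to be requested
```

Only the unseen transaction has to cross the network a second time, which is why block propagation gets faster when transactions were already validated and relayed ahead of the block.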

Transaction times on the other hand have slowly gone up but seem to be stabilizing. They’ve been “intentionally” allowed to go up as a result of privacy improvements in the software, but that’s a worthy tradeoff because blocks are 10 minutes apart on average anyway, so a delay of 16 seconds is acceptable. I’d imagine that once blocks are consistently full this growth will level off because transaction fees from the blocksize cap will self-regulate the incoming flow of transactions, assuming no other protocol changes are made.
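One mechanism behind privacy-motivated relay delay is randomized announcement timing: instead of forwarding a transaction immediately, a node waits a random, exponentially distributed interval, which makes it harder for an observer to pinpoint which node a transaction came from. The sketch below is only loosely modeled on that idea; the 5-second mean per hop and the 3-hop path are hypothetical values, not Bitcoin Core’s actual settings.

```python
import random


def relay_delay(hops: int, mean_delay_s: float, rng: random.Random) -> float:
    """Total time for a transaction to cross `hops` nodes when each node waits an
    exponentially distributed (Poisson-timer) interval before announcing it."""
    return sum(rng.expovariate(1.0 / mean_delay_s) for _ in range(hops))


rng = random.Random(42)  # fixed seed so the run is reproducible
samples = [relay_delay(hops=3, mean_delay_s=5.0, rng=rng) for _ in range(10_000)]
average = sum(samples) / len(samples)
print(f"average delay over 3 hops: {average:.1f} s")  # expected value is 3 * 5.0 = 15 s
```

Each hop adds its own random wait, so end-to-end delay grows with path length; that is an acceptable cost when blocks arrive only every 10 minutes on average.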

Keep in mind, none of this information is available for Ethereum:

https://dsn.tm.kit.edu/bitcoin/

Bitcoin is designed with this in mind. The transaction count queue goes up but the blocks are regulated. People end up learning how to use this tool we call a blockchain the right way over time and transaction flow stabilizes. With an unregulated tool you end up with a bunch of people chaotically trying to use that tool all at once for some random “feature” like CryptoKitties that ends up grinding the entire thing to a halt until the backlog is processed. All of the Ethereum full-nodes need to process every single one of these contracts. You might not need to, and they might tell you that you don’t need to, but someone does need to. So how many of them are there? What do higher fees do? They deter stupid Dapps like CryptoKitties at the base layer. There is absolutely zero need for them, and larger more “functional ideas” will only experience the same thing but much worse because blockchains don’t scale.
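The way a cap regulates demand rather than blocking it can be seen in how a block gets filled: a miner sorts pending transactions by fee per byte and takes the best-paying ones until the cap is reached, leaving the rest queued in the mempool. This is a minimal greedy sketch with made-up sizes, fees and names; real block templates also account for dependent (parent/child) transactions, which it ignores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PendingTx:
    name: str
    size_bytes: int
    fee: int  # total fee in the smallest currency unit

    @property
    def feerate(self) -> float:
        return self.fee / self.size_bytes


def build_block(mempool: list, max_block_bytes: int):
    """Greedy template: highest fee-per-byte first, skipping anything that no longer fits.
    Returns (included, still_waiting)."""
    included, waiting, used = [], [], 0
    for tx in sorted(mempool, key=lambda t: t.feerate, reverse=True):
        if used + tx.size_bytes <= max_block_bytes:
            included.append(tx)
            used += tx.size_bytes
        else:
            waiting.append(tx)
    return included, waiting


mempool = [
    PendingTx("payment", 250, 5_000),      # 20 per byte
    PendingTx("game-move", 400, 2_000),    # 5 per byte
    PendingTx("payment-2", 300, 4_500),    # 15 per byte
    PendingTx("game-move-2", 400, 1_200),  # 3 per byte
]
block, waiting = build_block(mempool, max_block_bytes=900)
print([t.name for t in block], [t.name for t in waiting])
```

With a 900-byte cap both payments fit and both low-fee game transactions wait; they only get in if they raise their fee or demand subsides, which is the self-regulation described above.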

These Dapps are crippling your blockchain because it’s unregulated:

But that was the promise though, right? That was the dream. That was the entire premise of the Ethereum blockchain: Bitcoin, but better. It’s not.

Clearly unregulated blocks don’t result in infinite transactions, but the real takeaway here is that the network can’t even physically handle the current amount; there just aren’t enough nodes capable of processing that information and relaying it in a timely fashion. Do you know how many Ethereum nodes there are? Do you really know? The Bitcoin network has about 115,000 nodes, of which about 12,000 are listening-nodes. Almost all of them are participating nodes, because that’s regulated too. What a listening-node is, compared to a non-listening one, doesn’t matter here because they are all participating in sending and receiving blocks to and from the peers they are connected to. The default is 8 outbound peers, and the client won’t open more than 8 outbound connections unless you add peers manually. This was intentionally put in place, and it’s recommended you don’t add more because it’s unhealthy for the network:

https://bitcoin.stackexchange.com/a/8140

Remember this from earlier?

Find a good peer.

That’s not how you fix things. This is a prime example of why a chain that allows participants the freedom to be selfish via lack of regulation is bad. This only has one outcome: Master & Slave nodes, where the limited masters serve all the slave nodes. Sounds decentralized, right? Especially when the financial requirements to be one of those master nodes keeps going up…
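The “limited masters serve all the slave nodes” dynamic is easy to quantify under a simple assumption: if light clients spread their requests evenly over the remaining full nodes, each full node’s upload burden is the client-to-full-node ratio times what one client consumes. The client count and the per-client rate below are hypothetical.

```python
def upload_per_full_node(light_clients: int, full_nodes: int,
                         bytes_per_client_per_s: float) -> float:
    """Average upload each full node serves when light clients are spread evenly."""
    if full_nodes <= 0:
        raise ValueError("at least one full node is needed to serve light clients")
    return light_clients / full_nodes * bytes_per_client_per_s


for full_nodes in (1_000, 100, 10):
    load = upload_per_full_node(100_000, full_nodes, bytes_per_client_per_s=500)
    print(f"{full_nodes:>5} full nodes -> {load / 1_000_000:.2f} MB/s upload each")
```

Each time the number of full nodes falls by 10x, the survivors’ upload requirement rises 10x, which raises the cost of staying a full node and pushes more operators toward light clients.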

To be fair, and as an aside: This is the exact criticism the Lightning Network gets, but it’s a completely different type of network. Blockchain networks are peer-to-peer broadcast networks. State-Channel networks like Lightning are peer-to-peer anycast networks. The way information is being sent is completely different. Your refrigerator has enough hardware to be a Lightning node. Lightning “Hub & Spoke” criticisms are about channel balance volumes. Hub & Spoke is equivalent to the Master & Slave issues, but with channel balances there is no bottleneck on the data. You just standardize the Lightning clients to open X amount of channels with X amount of funds in each, then the network forms around that standard, completely avoiding hubs or spokes, just like the Bitcoin clients standardize 8 peers. The Lightning Network is new so we don’t know what that standard should be yet, because we have almost zero data we can measure. /endlightningdefense
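The broadcast/anycast distinction can be expressed as per-node load. In a broadcast network every validating node handles every transaction; in a routed payment network only the nodes on a payment’s path see it. The sketch assumes payments are spread evenly across nodes over a fixed path length, which ignores the hub concerns discussed above, and all figures are illustrative.

```python
from typing import Optional


def payments_seen_per_node(total_payments: int, network_size: int,
                           hops_per_payment: Optional[int]) -> float:
    """Average number of payments each node must process.

    hops_per_payment=None models a broadcast network (every node sees every payment).
    An integer models routed delivery, where a payment touches hops + 1 nodes.
    """
    if network_size <= 0:
        raise ValueError("network_size must be positive")
    if hops_per_payment is None:
        return float(total_payments)
    return total_payments * (hops_per_payment + 1) / network_size


broadcast = payments_seen_per_node(1_000_000, 10_000, None)
routed = payments_seen_per_node(1_000_000, 10_000, hops_per_payment=4)
print(broadcast, routed)
```

Per-node load in the broadcast case tracks total network throughput, while in the routed case it is divided across the nodes, so adding users does not force every node to process every payment.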

Speaking of zero data we can measure, why are these the only charts for Ethereum node counts? Where’s the history? How many of these nodes used fast/warp sync and never fully validated it all? You don’t need to store it all because you can prune, but again, how many are fully validated? How many are just light clients syncing only the block headers?

https://www.ethernodes.org/network/1

It’s funny how propaganda sites like Trustnodes pushing BCash conspiracies publish pieces like the following one with bold-faced lies, then it gets circulated around and no one outside the flow of correct information questions it:

I’m not linking to a BCash propaganda site.

There are 115,000 Bitcoin nodes and they all fully validate:

http://luke.dashjr.org/programs/bitcoin/files/charts/software.html

So what do you do now? What do you do as an individual who slowly comes to this realization? What do you do as an individual who has no idea what’s going on? What happens to a network that is primarily made up of these individuals that slowly leave (not literally, but as a participating node downgrading to a light-node)? How many participating nodes are left? How many nodes hold a full copy of the original genesis block? What happens when 5 data centers are serving the entire network of slaves (light-nodes) the chain? Who’s validating those transactions when everyone is only syncing the block headers? You can sit there and repeat time and time again that “the network only needs the recent state history to be secure” all you want, but when your network is broken from the bottom up and most nodes can’t even keep up with the last 1,000 blocks, how is that secure in any way?

The takeaway from all of this:

Ethereum’s blocksize growth is bad because of node processing requirements, not how much they need to store on a hard-drive.

To prevent complete collapse of the network, Ethereum will need to implement a reasonable blocksize cap.

Implementing a blocksize cap will raise fees and in turn prevent many Dapps from functioning, or severely slow them down. Future Dapps won’t work.

If Dapps don’t work, Ethereum’s entire proposition for existing is moot.

Where does BCash fit in?

BCash just increased their blocksize from 8MB to 32MB, and is adding new OP_CODES soon to allow “features” like ICOs and BCash Birdies™.

BCash has “room to grow” coming from a completely understressed blockchain, while Ethereum is a completely overstressed blockchain.

https://txhighway.cash

Ethereum is dying and BCash is trying to be exactly like it while ignoring all the warning signs we’ve been trying to bring to everyone’s attention. They wanted bigger blocks and ICOs, and now they’ve got them. Both chains will become the same thing: centrally controlled blockchains that will slowly die, but be given temporary life support via gradual blocksize increases to continue supporting fraudulent utility tokens, until the entire system breaks down when no one can run a node.

My Suggestion: Stop using centralized blockchains.

The only one in that room that runs a fully validating node is the one that’s simultaneously holding up the painting, and the Ethereum network. I’ve managed to make no mention of Vitalik this whole article so I can focus on the technicalities, but if this picture (or the original) doesn’t represent the essence of the Ethereum space then I don’t know what does. I applaud Vitalik for calling out scammers like Fake Satoshi, yet at the same time he equally misrepresents the functionality claims of Ethereum.

Oh, and that golden goose egg you call sharding? It’s hocus pocus. Fairy dust. It’s the same node centralization issue with a veil thrown in front of it. It’s effectively force feeding you the Master & Slave network I just warned you about, under the disguise of “new scaling tech”.

Forgetting Vitalik’s diagram he put out because it’s meaningless, let’s try to simplify Ethereum’s current network first. The diagram below essentially shows all the light-clients in green and the “good” full-nodes in red. Your fast/warp sync node may be red now until it can’t or you give up on upgrading/maintaining and just use the light client feature, then it joins the green group.

As time moves forward, the green nodes increase while the red decrease. This is inevitable because it’s what everyone is already doing. Do you run a full-node or a light client? Do you run anything at all? Switching to using the light client is consistently recommended “if syncing fails”. That’s not a fix.

This chart is really generous. Many full-nodes (red) actually have bad peers/connections, they’re not this well connected:

I’ve updated this diagram in my follow-up article.

Don’t worry though, Vitalik is here to save the day. He’s turning “nodes” into SPV clients that only sync the block headers:

But what does that mean? Well, fortunately I wasted a lot of time drawing this up too so I can explain it visually, but first let’s start with words:

In Bitcoin you either fully validate, or you don’t. You’re either:

A Full-Node and do everything. You fully validate all transactions/blocks.

An SPV Client that does nothing, is just tethered to a full-node, syncs just the block headers, and shares nothing. They are not part of the network. They shouldn’t even be mentioned here but I’m doing it to avoid confusion.

Again, there are 115,000 Bitcoin full-nodes that do everything.

In Ethereum there are:

Full-Nodes that do everything. They fully validate all transactions/blocks.

Nodes that try to do everything but can’t sync up because of peer issues so they skip the line and use warp/fast sync, and then “fully”-validate new transactions/blocks.

Light-“nodes” that are permanently syncing just the block headers, and I guess they are sharing the headers with other similar nodes, so let’s call these “SPV Nodes”. They don’t exist in Bitcoin, again SPV clients in Bitcoin don’t propagate information around, they aren’t nodes.

That Ethereum node count? Guarantee you those are mostly Light-Nodes doing absolutely zero validation work (checking headers isn’t validation). Don’t agree with that? Prove me wrong. Show me data. They are effectively operating a secondary network of just sharing the block headers, but fraudulently being included in the network node count. They don’t benefit the main network at all and just leech.

In New-Ethereum with Sharding, things change a bit. There are:

Full-Nodes that do everything. They fully validate all transactions/blocks.

Shard networks with full-nodes that do everything within that shard, and validate the headers of other shards.

“Nodes” that just sync, validate, and propagate the block headers of the different shards. (Checking headers isn’t validation, this is effectively a secondary network of non-nodes that they are going to pretend are real Ethereum nodes, and they are going to include it in the node count and continue tricking people into thinking this is a functioning decentralized network when it’s not.)

Take a wild guess which kind of node you’ll be running. Go ahead, I’m waiting. Nothing has changed for the fully-validating nodes at all. Nothing.

I went ahead and cheated a bit with the connections using copy/paste, I’m not wasting that much time doing this for the sake of accuracy. The blue networks are the shard networks. The red network is the same old Ethereum network made up of nodes that still need to process everything. The green “nodes” are just syncing the headers and sharing them around:

I’ve completely remodeled this diagram in my follow-up article for further elaboration.

This isn’t scaling. When your node can’t stay in sync it downgrades to a light client. Now with sharding it can downgrade to a “shard node”. None of this matters. You’re still losing a full-node every time one downgrades. What’s even worse is they are calling all the green dots nodes even though they are only syncing the headers and trusting the red nodes to validate.

How would you even know how many fully validating nodes there are in this set up? You can’t even tell now because the only sites tracking it count the light clients in the total. How would you ever know that the full-nodes centralized to let’s say, 10 datacenters? You’ll never know. You. Will. Never. Know.

Correction: I’ve been “corrected” on sharding despite being aware of how it’s proposed to work. I wanted to keep this article simple because it’s not about sharding, and I’ll be writing another article that focuses specifically on its issues, primarily the Collator and Executor nodes, which are just rebranded full nodes you’ll never be able to run. Until then, you can use the following new diagram for a more accurate representation of the Ethereum network with sharding. That article is here, with a section that focuses more on Sharding.

0 Full-Nodes:

On the other hand, Bitcoin is built from the ground up to prevent this:

https://twitter.com/_Kevin_Pham/status/999152930698625024

So what are you going to do?

What should you do?

Are you a developer? Take everything you’ve learned and start developing applications on top of a good blockchain. One that isn’t broken.

Are you a merchant? Start focusing on readying your services to support payment networks. Ones that are built on top of a good blockchain.

Are you an investor? Take everything you’ve invested and start investing in a good blockchain. One that isn’t going to die in the coming years.

Are you a gambler? Buy EOS. It’s newer, just as shitty for all the same reasons I mentioned above, just no one knows it yet.

Are you an idealist? This is definitely not the chain for you. Find one that is.

https://twitter.com/StopAndDecrypt/status/992766974022340608

Part 2

Sharding centralizes Ethereum by selling you Scaling-In disguised as Scaling-Out

The differences between Light-Clients & Fully-Validating Nodes

medium.com

If you’re interested in running a Bitcoin node that will never go out of sync or demand that you update your hardware, check out this tutorial I put together:

A complete beginners guide to installing a Bitcoin Full Node on Linux (2018 Edition)

How to compile a Bitcoin Full Node on a fresh installation of Kubuntu 18.04 without any Linux experience whatsoever.

hackernoon.com

🅂🅃🄾🄿 (@StopAndDecrypt) | Twitter

twitter.com

StopAndDecrypt

Information Aggregator

Hacker Noon

Responses

Conversation between Vitalik Buterin and StopAndDecrypt.

Vitalik Buterin

May 24

Because of Ethereum’s exponentially growing blocksize, the bottleneck is not regulated

At some point your node will fall out of sync because of this or a blocksize cap will be put in place.

This is severely uninformed. Ethereum already has a block size limit in the form of its gas limit, and…

StopAndDecrypt

Jun 7

I responded to this at the end of this follow-up article:

Sharding centralizes Ethereum by selling you Scaling-In disguised as Scaling-Out

The differences between Light-Clients & Fully-Validating Nodes


Vinnie Falco

May 24

This just makes me respect the Bitcoin Core devs and contributors even more.


tradertimm

May 23

This is hands-down the best damn summary I’ve seen about why parameters matter in scaling. Damn good job — it needs more visibility, and I’ll do my best to provide it.


Steffen

May 23

What is your motivation for writing this article? Do you hold ETH? Do you have an interest in seeing it succeed? Curious.


StopAndDecrypt

May 23

I want people to:

Understand that blockchains need to be regulated via consensus rules in a way that will keep them decentralized or they’ll centralize and not last.

Know that given the regulations required to maintain those properties, the use cases for a blockchain become severely limited, but L2…


Daniel

May 24

I agree with the majority of points in your article, but I fail to see how this will result in Ethereum’s demise. It will simply result in it becoming more centralized…

Ethereum will effectively become a better way to manage large cloud computing centers like those that already exist. Think about how much computing already…


hasufly

May 24

AWS is currently 1.000.000 times (yes, really) more efficient than Ethereum. Their only benefit is decentralization. OP demonstrates why this decentralization cannot last, and its demise won’t even make Ethereum more efficient.


Olajide Jr

May 24

Such an uninformed opinion about EOS, which arguably has better software than Bitcoin and Ethereum. We’ll have to see how it turns out in the future. I know it’s more “centralized”, but no one cares if it helps increase the market cap faster.


StopAndDecrypt

May 24

Fortunately this article wasn't about EOS. I’ll let that grow a bit so I have a target in the future to try and justify my relevance with.


HostFat

May 24

UTXO commitments will probably be added to Bitcoin Cash this year; then old blocks will even be completely lost, and it will not be an issue.

Strange (not) that you didn’t write about this thing anywhere in your article …


StopAndDecrypt

May 24

You claim to know I don’t mention this, implying you've gone through my entire article.

It’s strange how after reading my entire article you still think this is about old blocks when I made multiple statements about being okay with pruning, and even being okay skipping the line with fast sync throughout this article.


Sergii V

May 24

And how is Bitcoin better? It doesn’t have these problems just because its TPS is manually capped: no transactions, no problems. Without increasing TPS it is even more useless. Same shit as all blockchain-based platforms.


Alan Bilsborough

May 24

Slowly but surely people are beginning to realise that Bitcoin, for the foreseeable future and possibly forever, will be regarded as a store of value. Therefore its main selling point is rock-solid security for your funds. There isn’t one other blockchain out there where I’d store more than 10% of the value I have in Bitcoin because they’re…


Tobia De Angelis

May 24

Instead of reassessing he doubled down on BCash, meanwhile Yalls.org took his concept and implemented the same exact thing on top of Bitcoin’s Lightning Network.

What will stop CryptoKitties and other games/NFTs from moving to layer 2 (Raiden or whatever)?


StopAndDecrypt

May 24

I’d love to see them on a L2 network. That’s what L2 is designed for. That shitty Pokemon game can be built on Lightning and it won’t have any bottlenecks.

Richard Buckingham (replying to Daniel)

May 24

You are sort of missing the point; buying more nodes does not fix the scalability issue here. There will not be a choice of providers with different capacities available as they will all share the same platform and so they all suffer the same scalability issue simultaneously.


Daniel Goldman

May 24

“In New-Ethereum with Sharding, things change a bit. There are:

Full-Nodes that do everything. They fully validate all transactions/blocks.”

The designs/proposals have been pretty clear that there’ll be no full nodes in ethereum sharding; shard-chains will process a subset of blocks, and the VMC will…


StopAndDecrypt

May 24

That’s even worse.

I don’t think that eliminates the category though. It just puts the full-node count equal to zero.

Until it’s implemented I’d hold off on saying what it’s going to be. Stripping the individual from the ability to validate everything is the worst thing you can do with a blockchain.


Micah Zoltu

May 24

Minor point of contention, though I do think it is important. To trust the network as a whole you don’t need many nodes, you merely need at least one honest/altruistic validating node.

One way to achieve this (Bitcoin’s solution) is to make it easy enough/cheap enough so that anyone can run a node and then the probability…


StopAndDecrypt

May 24

https://twitter.com/StopAndDecrypt/status/992196408441802753


Sean Pollock

May 24

Centrality is defined in graph theory as the average shortest path distance between two nodes. Centrality counterintuitively equates to decentralized. Simply having more nodes does not make the network more decentralized, rather it is the proportion of edges to nodes that matter. Having more laptop and hobby server nodes that are not very well…


StopAndDecrypt

May 26

spare me the mining node nonsense

mining is an external process, blocks are submitted just like transactions are

i’ll elaborate on this in my follow up article
