Our monitoring system alerted us that all of our nodes went down simultaneously at 02:30 UTC this morning. We were down for about 30 minutes before handing production off to a backup, and for about 2 hours in total. We immediately alerted the other BPs in an emergency communication channel and determined that a few more BPs had gone down as well.

Parsing through our logs, we noticed a "bad allocation" error that we traced back to a single line in our config: chain-state-db-size-mb. This setting controls the maximum size of the state tree storage in RAM and on disk. Ours was at the default of 1024 when it should have been 65535. On-chain storage exceeded that limit this morning, which caused the nodes to freeze. The problem was identified and fixed within 10 minutes (special thanks to Igor from EOSRio). The remainder of the downtime was spent re-syncing the database back to the current state.
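A check like the following could catch this kind of misconfiguration before a node starts. It is only a minimal sketch: it assumes the config is a plain key = value file with # comments, and the helper names (read_option, check_state_db_size) are made up for illustration, not part of any EOS tooling.

```python
REQUIRED_MIN_MB = 65535  # the value this post says the setting should have had


def read_option(config_text, key):
    """Return the last value assigned to `key`, or None if it is never set.

    Assumes a flat "key = value" file where "#" starts a comment.
    """
    value = None
    for raw_line in config_text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "=" not in line:
            continue
        name, _, rest = line.partition("=")
        if name.strip() == key:
            value = rest.strip()
    return value


def check_state_db_size(config_text, minimum_mb=REQUIRED_MIN_MB):
    """Classify the chain-state-db-size-mb setting as missing, too small, or ok."""
    raw = read_option(config_text, "chain-state-db-size-mb")
    if raw is None:
        return "missing: chain-state-db-size-mb is not set explicitly"
    if int(raw) < minimum_mb:
        return f"too small: {raw} MB is below {minimum_mb} MB"
    return f"ok: {raw} MB"


if __name__ == "__main__":
    sample = "p2p-peer-address = peer.example:9876\nchain-state-db-size-mb = 1024\n"
    print(check_state_db_size(sample))
```

Running a check like this against every node's config as part of a deploy step would turn a silent limit into a visible failure.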

About 30 minutes after we went down, we attempted the first active BP deactivation on the mainnet. We unregistered our BP temporarily and allowed eosgenblockbp at the #22 slot to take over production until we came back up. While it is upsetting that our block producer missed blocks during this time, we are proud to set what should become a precedent for future BP downtimes by executing the first successful production handoff to a backup.

Ricardian Contract Compliance

Many producers have suggested that yesterday's downtime constituted a violation of the regproducer Ricardian contract, specifically this portion:

I, {{producer}}, agree not to set the RAM supply to more RAM than my nodes contain and to resign if I am unable to provide the RAM approved by 2/3+ producers, as shown in the system parameters.

Our response on Telegram is reproduced here:

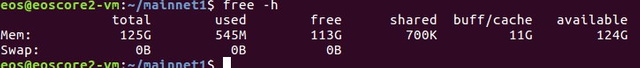

Our take on it: It was a misconfiguration, not a violation. Our nodes are all set up with 128GB of RAM and we have it all available to provide. We missed a config that required us to manually specify any usage over 1GB. I'm surprised the default was set that low in the software, but it is what it is. Now that we've added that configuration, we're providing the full 64GB the chain can support and we have an extra 64GB ready to go as well on each node.

As you can see, we support and have free the full RAM expected by the Ricardian contract. That's one of our nodes, but we have 4 more.

We did not set the RAM supply to greater than what we have (128 GB) and we are able to comfortably provide the RAM approved by 2/3 of producers (64 GB). Those are the 2 strict requirements, and we violated neither.

Improvements to Infrastructure

We made a couple of improvements to our infrastructure in response to the failure:

- A line-by-line audit of our config that should be complete by later today.

UPDATE: The audit is complete and we have a full understanding of the configuration settings as of version 1.0.8 (the configs change from version to version, so we will have to check for new settings with every update). We have provided sample configs for API, peer, and producing nodes here.

- A script that performs regular backups of the chain state and block log every 15 min. This should cut down our failover time from 2 hours to 10 minutes if all our nodes go down simultaneously again.

UPDATE: We have tested a full restore from backup and cut down our restart time from 2 hours to 20 min. Next goal is to bring that down to 10 min, which should be doable by later this week.
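The backup step could look roughly like the sketch below. It is not our actual script: the function name snapshot, the snapshot- folder naming, the keep count, and the assumption that the node's data directory holds state and blocks subdirectories are all illustrative. It also assumes the caller makes sure the files are in a consistent state (for example by pausing the node) before copying.

```python
import shutil
import time
from pathlib import Path


def snapshot(data_dir, backup_root, keep=4, stamp=None):
    """Copy data_dir/state and data_dir/blocks into a timestamped folder.

    Keeps only the newest `keep` snapshots under backup_root and returns
    the path of the snapshot that was just written.
    """
    data_dir, backup_root = Path(data_dir), Path(backup_root)
    if stamp is None:
        stamp = time.strftime("%Y%m%dT%H%M%S", time.gmtime())
    target = backup_root / f"snapshot-{stamp}"
    for name in ("state", "blocks"):  # assumed directory names
        shutil.copytree(data_dir / name, target / name)
    # Timestamped names sort chronologically, so the oldest come first.
    snapshots = sorted(p for p in backup_root.glob("snapshot-*") if p.is_dir())
    for old in snapshots[:-keep]:
        shutil.rmtree(old)
    return target
```

Scheduling it every 15 minutes is left to whatever the host already uses, such as a cron entry that calls the function from a small wrapper script.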

Technical Notes

- Replaying blocks with --wasm-runtime wavm is 3-4x faster than replaying with binaryen. You can switch back to binaryen once the node is fully synced (thanks to Mini from EOS Argentina for that tip). It took 30 minutes to replay with wavm versus 2 hours with binaryen.

- Catching up from the end of the replay point to the head block took 1 hour despite there only being a 10k block difference. We tried messing with the peer config multiple times with no luck. Blocks were syncing in spurts rather than consistently, with an overall rate of about 250 / min. It might have been a network congestion issue since so many peers were trying to resync at the same time.
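The catch-up numbers can be sanity-checked with a little arithmetic. The sketch below is a rough estimate only: the function name is made up, and the production rate of 120 blocks per minute is an assumption based on a 0.5 second block interval. The point is that the chain keeps producing blocks while a node syncs, so the gap closes at the sync rate minus the production rate.

```python
def catch_up_minutes(gap_blocks, sync_rate_per_min, production_rate_per_min=120.0):
    """Estimate minutes needed to reach the head block.

    The head keeps advancing while we sync, so the gap only shrinks at
    (sync rate - production rate) blocks per minute.
    """
    net_rate = sync_rate_per_min - production_rate_per_min
    if net_rate <= 0:
        raise ValueError("sync rate must exceed production rate to ever catch up")
    return gap_blocks / net_rate


if __name__ == "__main__":
    # Ignoring new blocks: 10,000 / 250 blocks per minute.
    print(f"static gap: {catch_up_minutes(10_000, 250, 0):.0f} min")
    # Accounting for new blocks at an assumed 120 per minute.
    print(f"moving head: {catch_up_minutes(10_000, 250):.0f} min")
```

With these rough inputs the two estimates come out at about 40 and 77 minutes, and the observed hour falls between them, so the slow catch-up is at least consistent with a sync rate only about twice the production rate.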

Excellent! You deserve my vote.

This is the type of attitude that we need among BPs, keep up the good work!

Hello, in the aftermath have you found any way in the config.ini to help a node catch up on blocks from another full node on the same LAN?

Also, is it safe to just rsync the blockchain dir?

Actually,

there is NO DEFAULT value for this item in the EOS GitHub repo. The "default" value of 1024 that you mention comes from eos-bios, created by eoscanada. When the EOS mainnet launched, many BPs used this config.

But few people can explain what it really means, and no one explained this item and its value or suggested a value. Maybe it won't affect this good project and the good community, or the price of EOS.

I've set up a proxy account to vote for members who don't have the time to properly vet trusted Block Producers. If you want to contribute to the voting process but don't have time to check out who's the best and who's just a whale or exchange, then set your proxy vote to this account: https://eosflare.io/account/eoswatchdogs

To EOS Community Members who want to improve the health and security of the EOS network, please consider voting for 30 Block Producers for a wider distribution of voting, or choose a proxy that you feel resonates with what you would want in a blockchain.
