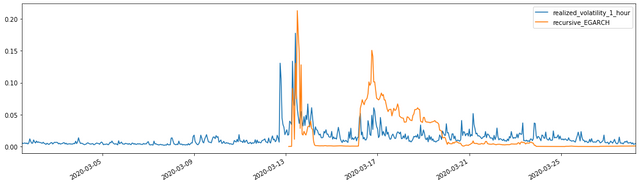

The predicted volatility is then matched against the realized volatility of the sample, and the two are compared as follows:

In [28]:

def recursive_forecast(pd_dataframe):
    window = 280
    model = 'EGARCH'
    index = pd_dataframe[1:].index
    # Location in `index` of row number `window` of the frame
    end_loc = np.where(index >= pd_dataframe.index[window])[0].min()
    forecasts = {}
    for i in range(len(pd_dataframe[1:]) - window + 2):
        mod = arch_model(pd_dataframe['log_return'][1:], mean='AR', vol=model,
                         lags=3, p=2, o=0, q=1, dist='ged')
        # Refit on an expanding sample that stops at i + end_loc.
        # ftol is kept at 1e03 as in the original run; this is a very loose
        # tolerance, and 1e-03 may have been intended.
        res = mod.fit(last_obs=i+end_loc, disp='off', options={'ftol': 1e03})
        temp = res.forecast().variance
        # Forecast issued from the last in-sample row
        fcast = temp.iloc[i + end_loc - 1]
        forecasts[fcast.name] = fcast
    forecasts = pd.DataFrame(forecasts).T
    # 'h.1' is the one-step-ahead variance forecast
    pd_dataframe['recursive_{}'.format(model)] = forecasts['h.1']
    evaluate(pd_dataframe, 'realized_volatility_1_hour', 'recursive_{}'.format(model))
    pd_dataframe['recursive_{}'.format(model)]

recursive_forecast(kline_test)

Out[28]:

Mean Absolute Error (MAE): 0.0201

Mean Absolute Percentage Error (MAPE): 122

Root Mean Square Error (RMSE): 0.0279

It can be seen that EGARCH is more sensitive to volatility and matches it better than ARCH and GARCH.
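The three error figures printed above come from the `evaluate` helper, which is not defined in this part. As a rough sketch of how such figures are usually computed, the hypothetical function `error_metrics` below returns MAE, MAPE (as a percentage) and RMSE for a benchmark series and a forecast series. Its name, signature and NaN handling are assumptions for illustration and may differ from what `evaluate` actually does.

```python
import numpy as np

def error_metrics(actual, predicted):
    """Return (MAE, MAPE in percent, RMSE) for two equal-length sequences.

    Hypothetical helper for illustration; not the article's `evaluate`.
    """
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    # Drop positions where either value is missing (e.g. the warm-up window).
    mask = ~(np.isnan(actual) | np.isnan(predicted))
    actual, predicted = actual[mask], predicted[mask]
    err = actual - predicted
    mae = np.mean(np.abs(err))
    # MAPE divides by the benchmark, so it is unstable when `actual` is near 0.
    mape = np.mean(np.abs(err / actual)) * 100
    rmse = np.sqrt(np.mean(err ** 2))
    return mae, mape, rmse

# Toy numbers, not taken from the article's data.
mae, mape, rmse = error_metrics([0.02, 0.04, 0.05], [0.03, 0.02, 0.05])
print(f"MAE={mae:.4f}  MAPE={mape:.1f}%  RMSE={rmse:.4f}")
```

Because realized volatility is often close to zero, MAPE can exceed 100% even when MAE and RMSE look small, which is worth keeping in mind when reading the figures above.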

8. Evaluation of volatility prediction

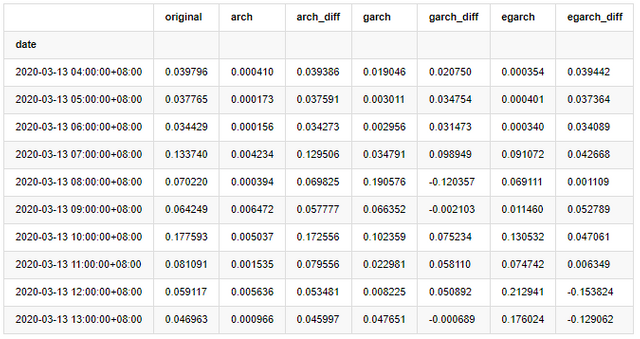

The forecasts use hourly data from the sample and look one hour ahead. We take the predicted volatility of the three models for the first 10 hours, with realized volatility (RV) as the benchmark. The errors compare as follows:

In [29]:

compare_ARCH_X = pd.DataFrame()
compare_ARCH_X['original'] = kline_test['realized_volatility_1_hour']
compare_ARCH_X['arch'] = kline_test['recursive_ARCH']
compare_ARCH_X['arch_diff'] = compare_ARCH_X['original'] - np.abs(compare_ARCH_X['arch'])
compare_ARCH_X['garch'] = kline_test['recursive_GARCH']
compare_ARCH_X['garch_diff'] = compare_ARCH_X['original'] - np.abs(compare_ARCH_X['garch'])
compare_ARCH_X['egarch'] = kline_test['recursive_EGARCH']
compare_ARCH_X['egarch_diff'] = compare_ARCH_X['original'] - np.abs(compare_ARCH_X['egarch'])
compare_ARCH_X = compare_ARCH_X[280:]
compare_ARCH_X.head(10)

Out[29]:

In [30]:

compare_ARCH_X_diff = pd.DataFrame(index=['ARCH','GARCH','EGARCH'], columns=['head 1 step', 'head 10 steps', 'head 100 steps'])
# Assign with .loc[row, column]; chained indexing may write to a temporary copy
compare_ARCH_X_diff.loc['ARCH', 'head 1 step'] = compare_ARCH_X['arch_diff']['2020-03-13 04:00:00+08:00']
compare_ARCH_X_diff.loc['ARCH', 'head 10 steps'] = np.mean(compare_ARCH_X['arch_diff'][:10])
compare_ARCH_X_diff.loc['ARCH', 'head 100 steps'] = np.mean(compare_ARCH_X['arch_diff'][:100])
compare_ARCH_X_diff.loc['GARCH', 'head 1 step'] = compare_ARCH_X['garch_diff']['2020-03-13 04:00:00+08:00']
compare_ARCH_X_diff.loc['GARCH', 'head 10 steps'] = np.mean(compare_ARCH_X['garch_diff'][:10])
compare_ARCH_X_diff.loc['GARCH', 'head 100 steps'] = np.mean(compare_ARCH_X['garch_diff'][:100])
compare_ARCH_X_diff.loc['EGARCH', 'head 1 step'] = compare_ARCH_X['egarch_diff']['2020-03-13 04:00:00+08:00']
compare_ARCH_X_diff.loc['EGARCH', 'head 10 steps'] = np.mean(compare_ARCH_X['egarch_diff'][:10])
# Note: only this entry takes the absolute value of the mean, as in the original cell
compare_ARCH_X_diff.loc['EGARCH', 'head 100 steps'] = np.abs(np.mean(compare_ARCH_X['egarch_diff'][:100]))

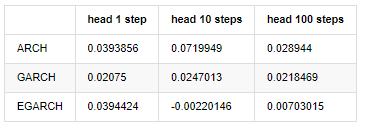

compare_ARCH_X_diff

Out[30]:

Across several test runs, EGARCH is the model most likely to have the smallest error in the first-hour prediction, although the overall difference between the models is not particularly obvious. The short-term predictions show some clear differences, and in the long-term prediction EGARCH has the strongest predictive ability.
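The comparison across horizons can also be written as a small loop. The sketch below is one possible way to do it, not the article's method: the function `horizon_error_table`, its column labels and the synthetic data are all hypothetical. It applies the mean absolute error uniformly to every model, whereas the cell above averages signed differences, so positive and negative errors cannot cancel here.

```python
import numpy as np
import pandas as pd

def horizon_error_table(df, benchmark, forecast_cols, horizons=(1, 10, 100)):
    """Mean absolute error of each forecast column over the first n rows.

    Hypothetical helper for illustration only.
    """
    table = pd.DataFrame(index=list(forecast_cols),
                         columns=[f"first {n}" for n in horizons],
                         dtype=float)
    for col in forecast_cols:
        abs_err = (df[benchmark] - df[col]).abs()
        for n in horizons:
            # Average the absolute error over the first n forecasts.
            table.loc[col, f"first {n}"] = abs_err.iloc[:n].mean()
    return table

# Synthetic data for illustration; not the article's sample.
rng = np.random.default_rng(0)
rv = pd.Series(rng.uniform(0.01, 0.05, 120))
demo = pd.DataFrame({
    "rv": rv,
    "model_a": rv + 0.01,    # always over-predicts by 0.01
    "model_b": rv - 0.002,   # always under-predicts by 0.002
})
table = horizon_error_table(demo, "rv", ["model_a", "model_b"])
print(table)
```

With the article's data, the call would presumably take `compare_ARCH_X` with `'original'` as the benchmark and `['arch', 'garch', 'egarch']` as the forecast columns.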

To be continued...