Market quotes data is indispensable when researching, designing and backtesting trading strategies. Collecting all the data from every market is not realistic, since the volume is simply too large. For the digital currency market, the FMZ platform provides backtest data for a limited set of exchanges and trading pairs. If you want to backtest exchanges or trading pairs that FMZ does not yet support, you can use a custom data source, but that requires having the data yourself. So there is a real need for a market quotes collector that stores data persistently and, ideally, collects it in real time.

A collector like this addresses several needs, for example:

- It can serve as a shared data source for multiple robots, reducing how often each robot calls the exchange interface.

- A robot can load enough K-line BARs as soon as it starts, so you no longer have to worry about having too few BARs at startup.

- It can collect market data for rarely traded currencies and provide a custom data source for the FMZ platform's backtest system.

And many more.

We plan to implement this in Python. Why? Because it's very convenient.

Preparation

Python's pymongo library

Persistent storage requires a database. We chose MongoDB, and since the collector is written in Python, we need MongoDB's Python driver library.

Just install pymongo for Python (for example, with pip install pymongo).

Install MongoDB on the hosting device

Installing MongoDB on macOS is much the same as on Windows, and there are many tutorials online. Here we take macOS as an example.

Download

Download link: https://www.mongodb.com/download-center?jmp=nav#community

Unzip

After downloading, unzip it to the /usr/local directory.

Configure environment variables

In the terminal, enter open -e .bash_profile. After the file opens, add:

```shell
export PATH=${PATH}:/usr/local/MongoDB/bin
```

After saving, run source .bash_profile in the terminal for the change to take effect.

Manually configure the database file directory and log directory

Create the database folder /usr/local/data/db.

Create the log folder /usr/local/data/logs.
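If you prefer to script this step, the two folders can also be created from Python. This is a minimal sketch: the base path /usr/local/data comes from this article, while the helper name prepare_mongo_dirs is hypothetical. Writing under /usr/local may require suitable permissions.

```python
import os


def prepare_mongo_dirs(base="/usr/local/data"):
    """Create the db and logs folders referenced by mongo.conf; return their paths."""
    db_dir = os.path.join(base, "db")
    log_dir = os.path.join(base, "logs")
    for path in (db_dir, log_dir):
        # exist_ok=True makes the call safe to repeat
        os.makedirs(path, exist_ok=True)
    return db_dir, log_dir
```

Calling prepare_mongo_dirs() with no argument creates /usr/local/data/db and /usr/local/data/logs.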

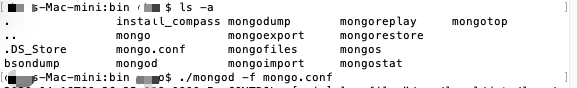

Edit the configuration file mongo.conf:

```
#bind_ip_all = true  # uncomment to allow any computer to connect
bind_ip = 127.0.0.1  # only the local computer can connect
port = 27017  # the instance runs on port 27017 (default)
dbpath = /usr/local/data/db  # data folder (the db folder must be created in advance)
logpath = /usr/local/data/logs/mongodb.log  # log file path
logappend = false  # whether to append to the existing log file at startup
fork = false  # whether to run in the background
auth = false  # whether to enable user authentication
```
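When the collector is deployed on several machines, it can be convenient to generate this file instead of editing it by hand. The sketch below writes the same key = value settings as the mongo.conf above (comments and the commented-out bind_ip_all line are omitted). The names DEFAULT_SETTINGS, render_mongo_conf and write_mongo_conf are hypothetical.

```python
# Settings mirroring the mongo.conf above
DEFAULT_SETTINGS = {
    "bind_ip": "127.0.0.1",
    "port": 27017,
    "dbpath": "/usr/local/data/db",
    "logpath": "/usr/local/data/logs/mongodb.log",
    "logappend": False,
    "fork": False,
    "auth": False,
}


def render_mongo_conf(settings):
    """Return config text as 'key = value' lines; Python booleans become true/false."""
    lines = []
    for key, value in settings.items():
        # check bool explicitly so True/False are not printed as Python literals
        if isinstance(value, bool):
            value = "true" if value else "false"
        lines.append(f"{key} = {value}")
    return "\n".join(lines) + "\n"


def write_mongo_conf(path, settings=DEFAULT_SETTINGS):
    """Write the rendered settings to path (for example, mongo.conf)."""
    with open(path, "w") as f:
        f.write(render_mongo_conf(settings))
```

For example, write_mongo_conf("mongo.conf") produces a file that ./mongod -f mongo.conf can read.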

- Run the MongoDB service

Command:

```shell
./mongod -f mongo.conf
```

- Stop the MongoDB service

In the mongo shell, run:

```
use admin;
db.shutdownServer();
```
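With the service running, you can confirm from Python that pymongo is installed and the server is reachable before starting the collector. This is a minimal sketch, assuming a local server on the default port 27017; check_mongo is a hypothetical helper. It imports pymongo inside the function so that a missing driver is reported instead of crashing.

```python
def check_mongo(uri="mongodb://localhost:27017", timeout_ms=2000):
    """Return (pymongo_installed, server_reachable) for the given URI."""
    try:
        import pymongo
        from pymongo.errors import PyMongoError
    except ImportError:
        return False, False
    # serverSelectionTimeoutMS bounds how long we wait for a server
    client = pymongo.MongoClient(uri, serverSelectionTimeoutMS=timeout_ms)
    try:
        # "ping" is a cheap admin command; it fails if no server can be selected
        client.admin.command("ping")
        return True, True
    except PyMongoError:
        return True, False
    finally:
        client.close()


if __name__ == "__main__":
    print(check_mongo())
```

A result of (True, True) means both the driver and the database service are ready.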

Implement the collector program

The collector runs as a Python strategy robot on the FMZ platform. I implemented only a simple example to illustrate the idea of this article.

Collector program code:

```python
import json

import pymongo

# Runs as an FMZ Python strategy: Log, LogStatus, Sleep, _C, _D and exchange are
# platform built-ins, and dropNames is a strategy parameter (a JSON array string).

def main():
    Log("Test data collection")
    # Connect to the database service
    myDBClient = pymongo.MongoClient("mongodb://localhost:27017")  # or mongodb://127.0.0.1:27017
    # Get the database (MongoDB creates it on the first write)
    huobi_DB = myDBClient["huobi"]
    # Print the collections (tables) currently in the database
    collist = huobi_DB.list_collection_names()
    Log("collist:", collist)

    # Drop the collections listed in the dropNames parameter
    arrDropNames = json.loads(dropNames)
    if isinstance(arrDropNames, list):
        for dropName in arrDropNames:
            if not isinstance(dropName, str) or dropName not in collist:
                continue
            Log("dropName:", dropName, "delete:", dropName)
            huobi_DB[dropName].drop()
            collist = huobi_DB.list_collection_names()
            if dropName in collist:
                Log(dropName, "failed to delete")
            else:
                Log(dropName, "successfully deleted")

    # Get the records collection (created on the first insert)
    huobi_DB_Records = huobi_DB["records"]

    # Request data
    preBarTime = 0  # Time of the BAR that was still forming at the last poll
    index = 1
    while True:
        r = _C(exchange.GetRecords)  # _C retries the call until it succeeds
        if len(r) < 2:
            Sleep(1000)
            continue
        if preBarTime == 0:
            # First run: write every completed BAR (r[-1] is still forming)
            for bar in r[:-1]:
                huobi_DB_Records.insert_one({"index": index, "High": bar["High"], "Low": bar["Low"], "Open": bar["Open"], "Close": bar["Close"], "Time": bar["Time"], "Volume": bar["Volume"]})
                index += 1
            preBarTime = r[-1]["Time"]
        elif preBarTime != r[-1]["Time"]:
            # A new BAR has started, so r[-2] has just completed
            bar = r[-2]
            huobi_DB_Records.insert_one({"index": index, "High": bar["High"], "Low": bar["Low"], "Open": bar["Open"], "Close": bar["Close"], "Time": bar["Time"], "Volume": bar["Volume"]})
            index += 1
            preBarTime = r[-1]["Time"]
        LogStatus(_D(), "preBarTime:", preBarTime, "_D(preBarTime):", _D(preBarTime / 1000), "index:", index)
        Sleep(10000)
```

Full strategy: https://www.fmz.com/strategy/199120
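The heart of the collector loop is deciding which BARs are complete and not yet stored: the last element returned by GetRecords is still forming, so only the earlier ones are written. The sketch below isolates that rule as a pure function so it can be tested without the platform. It is a variation rather than the strategy's own code: it tracks the Time of the last written BAR (a hypothetical last_written_time) instead of preBarTime, which also covers the case where more than one BAR completes between two polls. The function name new_completed_bars is hypothetical.

```python
def new_completed_bars(records, last_written_time):
    """Return (bars_to_write, new_last_written_time).

    records: K-line BARs sorted by Time (ms); the last one is still forming.
    last_written_time: Time of the newest BAR already stored (0 if none).
    """
    completed = records[:-1]  # drop the still-forming BAR
    fresh = [bar for bar in completed if bar["Time"] > last_written_time]
    if fresh:
        last_written_time = fresh[-1]["Time"]
    return fresh, last_written_time
```

In the loop, each returned BAR would be passed to insert_one, and the returned time kept for the next poll.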

Using the data

Create a strategy robot that uses the collected data.

Note: the strategy needs to reference (check) the "python PlotLine Template". If you don't have it, you can copy one from the Strategy Square into your strategy library.

Here is the address: https://www.fmz.com/strategy/39066

```python
import pymongo

# Runs as an FMZ Python strategy that references the "python PlotLine Template":
# Log, LogStatus, Sleep and _D are platform built-ins; ext.PlotRecords comes from the template.

def main():
    Log("Test using database data")
    # Connect to the database service
    myDBClient = pymongo.MongoClient("mongodb://localhost:27017")  # or mongodb://127.0.0.1:27017
    # Get the database written by the collector
    huobi_DB = myDBClient["huobi"]
    # Print the collections (tables) currently in the database
    collist = huobi_DB.list_collection_names()
    Log("collist:", collist)
    # Query the K-line data stored in the records collection
    huobi_DB_Records = huobi_DB["records"]
    while True:
        arrRecords = []
        # Sort by Time so the BARs are plotted in chronological order
        for x in huobi_DB_Records.find().sort("Time", pymongo.ASCENDING):
            bar = {
                "High": x["High"],
                "Low": x["Low"],
                "Close": x["Close"],
                "Open": x["Open"],
                "Time": x["Time"],
                "Volume": x["Volume"]
            }
            arrRecords.append(bar)
        # Use the line drawing library to draw the obtained K-line data
        ext.PlotRecords(arrRecords, "K")
        LogStatus(_D(), "records length:", len(arrRecords))
        Sleep(10000)
```

As you can see, the strategy robot that uses the data never accesses an exchange interface; it gets all of its data from the database. Note that the collector does not record the current (still-forming) BAR; it only collects completed K-line BARs. If real-time data for the current BAR is needed, the program can be modified slightly.
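One possible modification for recording the current BAR is to upsert each BAR keyed by its Time: update_one with upsert=True inserts the BAR the first time it is seen and overwrites it on later polls while it is still forming. This is a sketch, not the article's code; upsert_bar is a hypothetical helper, and it leaves out the index field the collector writes. Keying by Time also means a restarted collector does not store duplicate BARs.

```python
FIELDS = ("Open", "High", "Low", "Close", "Volume")


def upsert_bar(collection, bar):
    """Insert or update one BAR in a pymongo collection, keyed by its Time."""
    collection.update_one(
        {"Time": bar["Time"]},  # match the BAR with the same start time
        {"$set": {k: bar[k] for k in FIELDS}},  # refresh its OHLCV values
        upsert=True,  # insert it if no document matches
    )
```

In the collector loop, calling upsert_bar for r[-2] and r[-1] on every poll would keep both the last completed BAR and the forming BAR up to date. A unique index, created once with huobi_DB_Records.create_index("Time", unique=True), keeps these lookups fast.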

The example code is for demonstration only: every query reads all the records in the table. As the collector keeps running and the table grows, these full-table queries will increasingly hurt performance. A better design queries only records newer than the latest one already loaded and appends them to the existing data.
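A minimal sketch of that incremental approach, assuming arrRecords is created once outside the while loop and kept sorted by Time; load_new_bars and KEYS are hypothetical names. The query uses MongoDB's $gt operator so only newer documents are read.

```python
KEYS = ("High", "Low", "Close", "Open", "Time", "Volume")


def load_new_bars(records_collection, arr_records):
    """Append to arr_records only the BARs newer than its last one; return the count added."""
    last_time = arr_records[-1]["Time"] if arr_records else 0
    # $gt keeps only newer documents; sort ascending (1 == pymongo.ASCENDING)
    cursor = records_collection.find({"Time": {"$gt": last_time}}).sort("Time", 1)
    added = 0
    for x in cursor:
        # copy only the K-line fields, skipping _id and index
        arr_records.append({k: x[k] for k in KEYS})
        added += 1
    return added
```

Inside the loop, load_new_bars(huobi_DB_Records, arrRecords) would replace the full find(). An index on Time, created with huobi_DB_Records.create_index("Time"), keeps the range query fast as the table grows.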

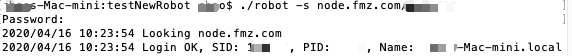

Run

Run the docker program

On the device where the docker runs, start the MongoDB database service.

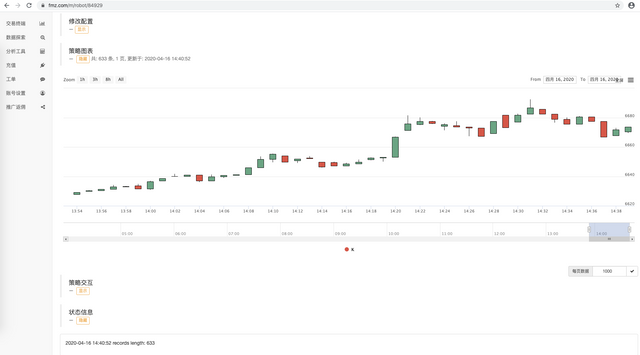

Run the collector to collect market quotes for the BTC_USDT trading pair on WexApp, the FMZ platform's simulated exchange:

WexApp Address: https://wex.app/trade?currency=BTC_USDT

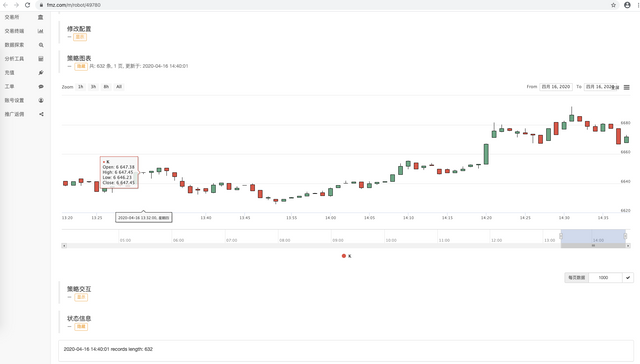

Robot A using database data:

Robot B using database data:

WexApp page:

As the figures show, robots with different IDs share K-line data from a single data source.

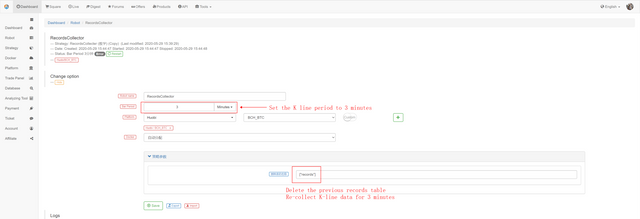

Collect K-line data of any period

Thanks to the FMZ platform, we can easily collect K-line data of any period.

For example, suppose I want to collect 3-minute K-lines. What if the exchange does not provide 3-minute K-lines? No problem; this is easy to achieve.

We change the collector robot's configuration and set the K-line period to 3 minutes; the FMZ platform then automatically synthesizes 3-minute K-lines and supplies them to the collector program.
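FMZ performs this synthesis internally. The sketch below only illustrates the general idea of building 3-minute BARs from 1-minute BARs and is not FMZ's implementation. It assumes BARs sorted by Time, with Time in milliseconds as in the collector, and aligns each period to a multiple of period_ms since the Unix epoch; synthesize_bars is a hypothetical name.

```python
def synthesize_bars(bars, period_ms):
    """Aggregate finer BARs (sorted by Time, in ms) into coarser BARs of period_ms."""
    result = []
    for bar in bars:
        start = bar["Time"] - bar["Time"] % period_ms  # align to the period boundary
        if result and result[-1]["Time"] == start:
            agg = result[-1]
            agg["High"] = max(agg["High"], bar["High"])
            agg["Low"] = min(agg["Low"], bar["Low"])
            agg["Close"] = bar["Close"]  # the close is the last sub-BAR's close
            agg["Volume"] += bar["Volume"]
        else:
            # first sub-BAR of a new period: its open becomes the period's open
            result.append({"Time": start, "Open": bar["Open"], "High": bar["High"],
                           "Low": bar["Low"], "Close": bar["Close"], "Volume": bar["Volume"]})
    return result
```

For 3-minute BARs, call synthesize_bars(one_minute_bars, 3 * 60 * 1000). The last synthesized BAR may still be incomplete, just like the forming BAR returned by GetRecords.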

We use the dropNames parameter, which lists the names of tables to delete, and set it to ["records"] to delete the previously collected 1-minute K-line table in preparation for collecting 3-minute K-line data.
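The collector expects dropNames to be a JSON array string such as ["records"], and it skips entries that are not strings. The sketch below makes that parsing explicit as a standalone function. Unlike the collector, which would raise an error on malformed JSON, it treats empty or invalid input as "drop nothing". The name parse_drop_names is hypothetical.

```python
import json


def parse_drop_names(drop_names):
    """Parse the dropNames parameter (a JSON array of table names) into a list of str."""
    try:
        value = json.loads(drop_names)
    except (TypeError, ValueError):
        return []  # empty or malformed parameter: drop nothing
    if not isinstance(value, list):
        return []
    # keep only string entries, as the collector's loop does
    return [name for name in value if isinstance(name, str)]
```

With the setting described above, parse_drop_names('["records"]') yields ["records"], so only the records table is dropped.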

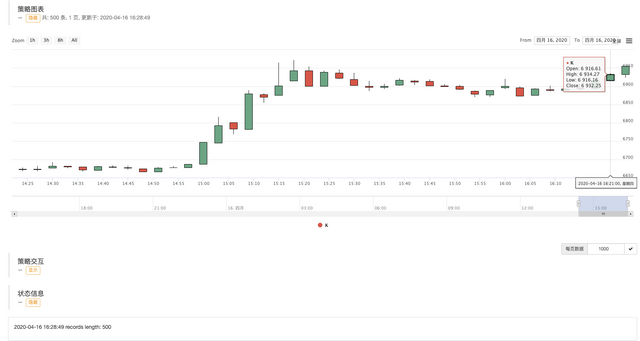

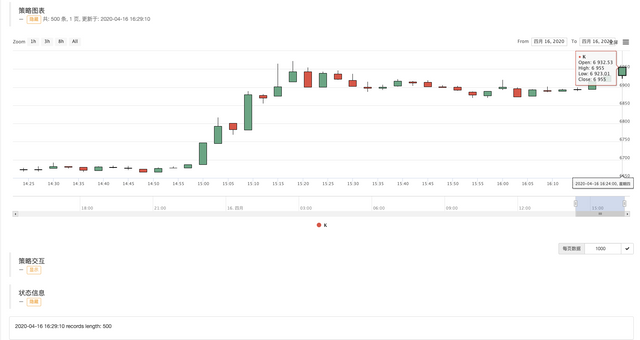

Start the collector program, then restart the strategy robot that uses the data.

In the resulting K-line chart, the interval between BARs is 3 minutes, meaning each BAR covers a 3-minute period.
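The same property can also be checked on the data itself rather than visually. A minimal sketch, assuming records shaped like arrRecords in the strategy above with Time in milliseconds; bar_intervals is a hypothetical helper.

```python
def bar_intervals(records):
    """Return the set of gaps (ms) between consecutive BAR start times."""
    times = [bar["Time"] for bar in records]
    return {later - earlier for earlier, later in zip(times, times[1:])}
```

If bar_intervals(arrRecords) == {3 * 60 * 1000}, every BAR starts exactly 3 minutes after the previous one; any other value in the set points to a gap or leftover 1-minute data.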

In the next issue, we will try to implement a custom data source.

Thanks for reading!

From: https://blog.mathquant.com/2020/05/30/teach-you-to-implement-a-market-quotes-collector.html