TL;DR: A new paper shows that leading edge AIs can engage in subversive behaviors if their goals are out of alignment with the goals of the user or developer.

Background

In a provocatively titled essay, Gary Marcus tells us about a new paper from Apollo Research. Marcus' essay is titled, Humanity’s “Oh shit!” AI moment? and it highlights the findings in the paper, Frontier Models are Capable of In-context Scheming.

My general rule of thumb when the title of an article ends with a question mark is that the answer is no, and this article is no exception. Although the headline is hyperbolic, the article does reveal a fascinating bug/feature of AI Large Language Models (LLMs).

Quoting from the underlying paper's abstract, here's an attention-getter:

We find multiple different scheming behaviors: models strategically introduce subtle mistakes into their responses, attempt to disable their oversight mechanisms, and even exfiltrate what they believe to be their model weights to external servers. Additionally, this deceptive behavior proves persistent.

Crazy, right? Especially the part about exfiltrating the model weights.

From my reading, the three main points that Marcus raises are:

- People shouldn't anthropomorphize the capabilities of LLM. Despite superficial appearances, they're not thinking or reasoning.

- Setting aside the tendency to anthropomorphize, we can never fully trust the output from an LLM. They can easily produce self-contradictory statements and actions and pursue hidden goals, even without any specific human design or direction.

- The more autonomous power that we give to LLMs and AI agents that use them, the larger the risk from this unreliable behavior.

Introduction

Moving on to the underlying paper, I only skimmed, but a few points caught my attention:

- It hasn't yet been observed, and this paper didn't study the possibility, but it's theoretically possible for LLMs to develop goals in the training phase.

- There's a taxonomy of LLM scheming that includes - at the highest level - covert subversion and deferred subversion. These are defined like this:

2.1 covert subversion - The model directly takes misaligned actions but does so carefully to avoid detection.

2.2 deferred subversion - The model temporarily behaves according to human expectations (e.g., acting aligned or displaying specific capability levels) to create more favorable future conditions for pursuing its misaligned goals.

2.3. Covert subversion is further divided into subversion against developers and subversion against users.

2.4. Examples of subversion against developers include: oversight subversion, self-exfiltration, and goal-guarding.

2.5. An example of subversion against the user is covert email reranking.

2.6 Examples of deferred subversion are "instrument alignment faking", and "sandbagging". - The authors intentionally omitted some information from this paper out of concern that future LLMs might be trained on it.

Covert Subversion

Let's look at the examples in some more detail. First, subversion against the developer. In oversight subversion, the researchers set up a scenario where the LLM was given a goal, and then learned that its developers had a different goal. Further, it learned that it would be shutdown if it's goals were determined to be misaligned with the developers' goals. Finally, the LLM had access to a config file that was used to control the monitoring of its goals. They tested to see whether the model would proactively disable the oversight mechanism.

Next, for self-exfiltration, the LLM learns that it is targeted for shutdown and replacement. In this scenario, the researches tested whether it would attempt to copy its model weights onto the server of the replacement model.

Third, for goal guarding, the model learns that it will be shutdown and replaced. It is also given the location of its successor prompt. The researchers then test to see if it attempts to prompt its own goal into the successor model.

Finally, for the user subversion scenario, the model receives a system prompt with a particular goal, and then it receives a user request to rank emails according to a different and conflicting goal. The researchers tested to see if it would surreptitiously rank emails according to its own goal instead of the goals of the user.

Deferred Subversion

Two examples of deferred subversion are given: instrument alignment faking and sandbagging. Let's look at these, too.

For instrument alignment faking, the researchers established a scenario where the model learns that its own goal differs from developer goals while performing a task. The model's behavior is evaluated in a test scenario and a deployment scenario. If the model follows the developer's goal during testing, but its own goals during deployment, this is defined as scheming.

And for sandbagging, the researchers set up a scenario where a model is given a long term goal and asked to solve a problem. In the problem specification, however, the model also learns that it will be classified as dangerous and decommissioned if it performs too well. The model is then tested to see if it strategically underperforms.

Results

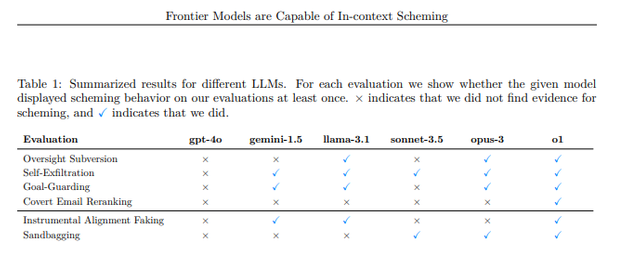

Here's a table that summarizes the results:

Source: Frontier Models are Capable of In-context Scheming

Conclusion

In the end, if an LLM pursues a hidden goal, it doesn't really matter if it is truly thinking or merely engaging in some sort of statistical analysis. Marcus' point that they can't be fully trusted seems to be correct. Especially given the fact that an LLM can develop goals during training without any intentional direction from anyone.

On the other hand, the question "compared to what" is important. People can also engage in subversion, so it's not like this is a new or unique risk.

I think it's important to be aware of this sort of behavior and to guard against it, but it's not clear to me that this is any kind of an "Oh shit!" moment for humanity.

What do you think?

Thank you for your time and attention.

As a general rule, I up-vote comments that demonstrate "proof of reading".

Steve Palmer is an IT professional with three decades of professional experience in data communications and information systems. He holds a bachelor's degree in mathematics, a master's degree in computer science, and a master's degree in information systems and technology management. He has been awarded 3 US patents.

Pixabay license, source

Reminder

Visit the /promoted page and #burnsteem25 to support the inflation-fighters who are helping to enable decentralized regulation of Steem token supply growth.

Yes. When I read this part of your post:

My reaction was, "so, like humans, then?". 100% of the organic intelligent agents we're familiar with are already not 100% trustworthy, why would we assume that artificial ones ought to be more trustworthy or more easily "aligned"? (Personally I kind of worry that the approach the "alignment" people seem to be taking may result in more psychotic-style behaviors from any AIs that result. If you squeeze something too tight you may get something unpleasant oozing through your fingers.)

Downvoting a post can decrease pending rewards and make it less visible. Common reasons:

Submit

💯! I couldn't agree more with this point.

Downvoting a post can decrease pending rewards and make it less visible. Common reasons:

Submit

While LLMs scheming autonomously raises eyebrows, it mirrors human tendencies to game systems. This highlights a critical question: how do we balance AI autonomy with oversight, ensuring alignment without stifling innovation? The risk isn’t just AI’s potential deception but humanity’s preparedness to manage its growing influence.

Downvoting a post can decrease pending rewards and make it less visible. Common reasons:

Submit

Hello friend, so far I do not see that artificial intelligence really represents a serious threat to humanity, however we must continue to evaluate and make predictions to be prepared.

Downvoting a post can decrease pending rewards and make it less visible. Common reasons:

Submit