Today, inspired by the Ever Steem project from @etainclub, I thought I'd explore a different way to strengthen an under-rewarded post from the past.

One of my favorite science contributors who has disappeared from the blockchain over the years was @natator88. So, I went through her post history and picked a couple that I could build upon and also supplement with some newer rewards. Please click through to her posts, BRAINS ARE ANTICIPATION MACHINES and AI Fears Overhyped. Of course, you may also enjoy reviewing the rest of her posting history.

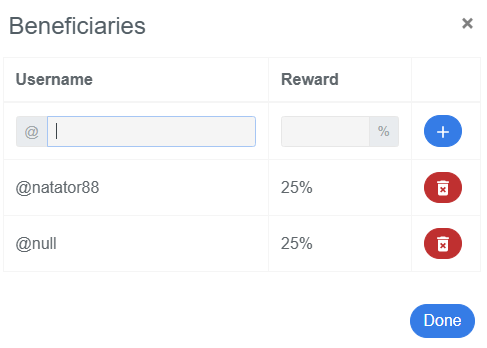

In appreciation of those two original posts, a 25% beneficiary reward has been set on this post for @natator88.

In the first post, @natator88 presented the following hypothesis:

Why do we predict the future? Why do we anticipate? What is the evolutionary foundation for this mechanism?

According to Daniel Dennett, it is because it is beneficial to play out alternative scenarios in our head so those scenarios can die instead of us.

In the second post, she argued that we don't need to fear AI because its evolution is being directed by humans. As with the domestication of dogs, AIs will be directed to serve useful purposes. This claim was founded on an article in Quillette, Irrational AI-nxiety. If you click through to the source article, the author (Ed Clint) also points out that humans tend to attribute bad motives to the unknown without basis, and he uses the examples of ghosts and aliens to make that point.

It's important to realize that both of these posts were written in 2017, before the current LLM explosion.

So, 8 years later and after the LLM explosion, how do these ideas stand up?

Brains as anticipation machines

It was striking to read this title and realize that it was written in 2017. During the intervening years, LLMs have emerged as precisely this: anticipation machines. By statistically predicting the most likely next word (more precisely, the next token) from the text that came before, an LLM manages to present a very plausible veneer of intelligence.
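To make the "anticipation machine" idea concrete, here is a toy sketch of next-word prediction. It is far simpler than a real LLM, which uses a neural network over tokens rather than raw word counts, and the corpus and function names (`train_bigram`, `predict_next`) are made up purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words were seen immediately after it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequently observed follower of `word`, or None."""
    counts = follows.get(word.lower())
    if not counts:
        return None  # the word never appeared with a follower
    return counts.most_common(1)[0][0]


# Hypothetical one-sentence "training corpus", chosen only for this example.
corpus = "the brain predicts the future so the brain can avoid danger"
model = train_bigram(corpus)

print(predict_next(model, "the"))  # "brain" follows "the" twice, "future" once
```

Even this crude counter "anticipates" the next word from past experience; the difference with an LLM is mostly one of scale and of how much context the prediction takes into account.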

When you move into the text and the underlying source articles, you realize that @natator88 had something slightly different in mind when discussing anticipation and prediction (outcomes, not tokens), but the argument still feels prescient.

This claim also aligns nicely with the ideas on memes from Richard Dawkins's The Selfish Gene, a seminal work on memetics, which I only read during the last year or two.

On the other hand, when you look at ideas like Donald Hoffman's Case Against Reality, it becomes clear that the true function and operation of our brain and consciousness is not yet understood, so we can't say for sure whether @natator88 got this one right or not.

No fear of AI

This also seems to still be an open question. Leading thinkers have largely divided themselves into two camps, the "doomer" camp and the "accelerationists". In 2017, @natator88 was arguing against a variety of AI doomerism from Bill Gates, Stephen Hawking, and Elon Musk. This line of thought goes back to Bill Joy and even the Unabomber Manifesto.

Today, Gates is probably still a doomer, and a more recent addition to that camp would be Geoffrey E. Hinton. Musk, today, seems to have a more nuanced and evolving position. On one hand, he runs xAI, one of the leading corporate AI platforms, whose Grok has emerged as one of the leading LLM offerings, and he regularly comments on the importance of a "truth seeking" AI, along with the danger of teaching AIs to lie in service to political ideologies.

On the other hand, at least before the fall of 2020, he was still calling for Washington, DC to implement AI regulation.

From the other camp, I guess the most prominent accelerationist today would be Marc Andreessen, author of the Techno-Optimist Manifesto. In this essay, Andreessen writes,

We believe intelligence is the ultimate engine of progress. Intelligence makes everything better. Smart people and smart societies outperform less smart ones on virtually every metric we can measure. Intelligence is the birthright of humanity; we should expand it as fully and broadly as we possibly can.

We believe intelligence is in an upward spiral – first, as more smart people around the world are recruited into the techno-capital machine; second, as people form symbiotic relationships with machines into new cybernetic systems such as companies and networks; third, as Artificial Intelligence ramps up the capabilities of our machines and ourselves.

We believe we are poised for an intelligence takeoff that will expand our capabilities to unimagined heights.

We believe Artificial Intelligence is our alchemy, our Philosopher’s Stone – we are literally making sand think.

So, 8 years later, this question is also not settled, but we can say again that @natator88 staked out a plausible position.

Conclusion

So, after 8 years, neither of the main ideas from these articles has been contradicted, and both claims still seem plausible. We'll have to wait for the future to happen before we know for sure whether they're right or not.

In my opinion, however, both of those posts were built on solid foundations, discussed relevant topics, and they were under-valued when payouts closed in 2017. Today, you can give those posts a chance at some extra life by voting for this post.

@natator88 hasn't been around for a while, and who knows if we'll ever see her again, but in the worst case, directing rewards to her account is just equivalent to burning the rewards.

Postscript: It's interesting to read my contemporaneous reply to one of her articles. I can see that my opinion on this point hasn't changed much over the years:

I think it all comes down to economics. Someone has to pay to power the machines, which means that they have to generate revenue, which means that they need to have customers.

As long as the customers are humans, or at least owned by humans, the system is self-regulating. If machines are ever able to own property, they could be each other's customers and humans might be at risk.

Of course, at some level of sentience, preventing machines from owning property brings its own set of ethical questions. At any rate, for the foreseeable future, I agree with you. People like Musk and Gates and Hawking are overselling the risk.

Thank you for your time and attention.

As a general rule, I up-vote comments that demonstrate "proof of reading".

Steve Palmer is an IT professional with three decades of professional experience in data communications and information systems. He holds a bachelor's degree in mathematics, a master's degree in computer science, and a master's degree in information systems and technology management. He has been awarded 3 US patents.

Pixabay license, source

Reminder

Visit the /promoted page and #burnsteem25 to support the inflation-fighters who are helping to enable decentralized regulation of Steem token supply growth.

The eversteem project is not mine :)

Thanks! Fixed. Not sure why, but I frequently mix up your account and etainclub. I guess it's the "et" at the beginning...
