This year's Google I/O was held online, with presentations on the 18th and 19th. I made time to watch three of the videos, so today I want to share some of the new technologies I found most interesting.

This issue will mainly focus on the latest developments in AI and Android.

AI progress

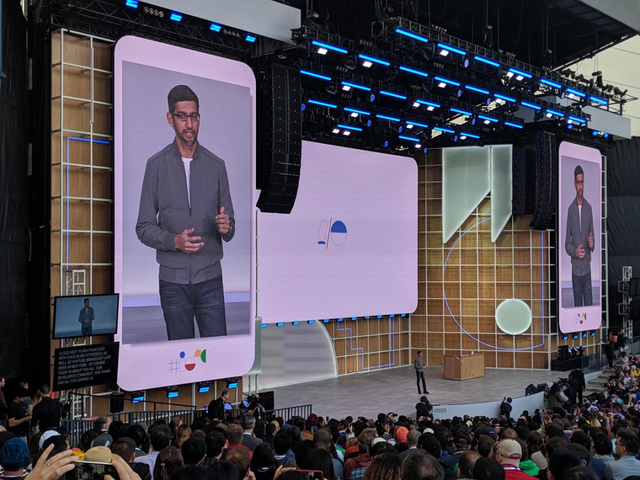

In the Google I/O 2017 keynote, Google CEO Sundar Pichai announced that Google was entering an AI-first era. This year, AI already has a major impact across Google's platforms and services.

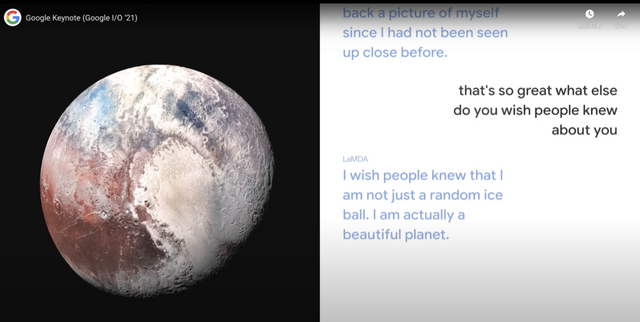

At this year's Google I/O, Google introduced two new natural language models: LaMDA and MUM.

LaMDA

LaMDA is a new language model for dialogue. In real-life human conversation, topics switch constantly: one second your family is reminding you to eat on time, and the next they are asking whether you have found someone yet.

For AI, though, this is a very hard problem. At this stage, most conversational AI can only answer within narrowly defined scenarios, and when you switch to a new topic at random, it may say, "Sorry, I don't know how to reply to you."

The new LaMDA model unlocks a more natural way of chatting. It is like talking with a friend who has a very rich knowledge base and always gives you the most appropriate answer, even when the conversation drifts off topic.

MUM

MUM (Multitask Unified Model) is a model for search scenarios. Like LaMDA, it is a natural language model built on the Transformer architecture, but MUM can distill multiple key pieces of information from the context of a question and give you relevant feedback.

Much as if you were asking an experienced friend, MUM improves the understanding of human questions and improves search, moving it from a keyword-centric process to an intelligent one that takes context into account.

Going beyond LaMDA, MUM can understand multiple forms of information such as text, video, images, and audio. It analyzes the content, works out the intent behind it, and then gives you the most relevant search results.

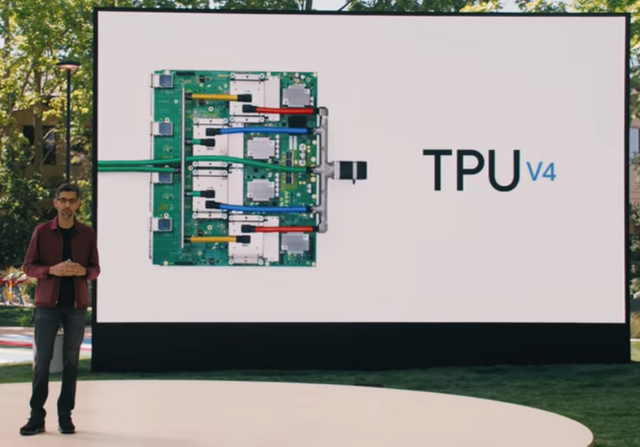

TPU v4 chips and quantum computers

TPU is Google's chip for machine learning, fully integrated with Google Cloud. The new-generation v4 chip is twice as fast as its predecessor. Multiple TPUs can be connected to form a supercomputer that Google calls a Pod. A single Pod consists of 4096 v4 chips and can provide more than 1 exaflop of computing power.
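As a rough back-of-the-envelope check on those figures, and taking "more than 1 exaflop" as exactly 1 exaflop (an assumption), the Pod-level number works out to about 244 teraflops per chip on average. Here is a minimal Java sketch of that arithmetic; the class and method names are hypothetical and only serve the illustration:

```java
final class PodMath {

    /**
     * Average per-chip throughput, in teraflops, implied by a Pod-level figure.
     * Assumes the Pod's throughput is spread evenly across its chips.
     */
    static double perChipTeraflops(double podExaflops, int chipsPerPod) {
        double podFlops = podExaflops * 1e18;   // 1 exaflop = 10^18 operations per second
        return podFlops / chipsPerPod / 1e12;   // 1 teraflop = 10^12 operations per second
    }

    public static void main(String[] args) {
        // 4096 chips and 1 exaflop are the numbers quoted in the keynote summary.
        System.out.println(perChipTeraflops(1.0, 4096) + " teraflops per chip (average)");
    }
}
```

Since the announced figure is a lower bound, the real per-chip number would be at least this large.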

After announcing the new TPU chips, Pichai said that there are many problems that classical computing cannot solve in a reasonable amount of time, and that quantum computing is the future of computing.

Although still in the early stages, Google plans to deliver a commercially available quantum computer by 2029.

Seeing this, as an Android programmer, I suddenly felt a bit deflated 😂. I often tell others that we work in the tech industry, but in fact we are a hundred thousand miles away from real technology. We are just code movers...

Let's go back to our area of interest and see what the latest developments in Android are.

Android progress

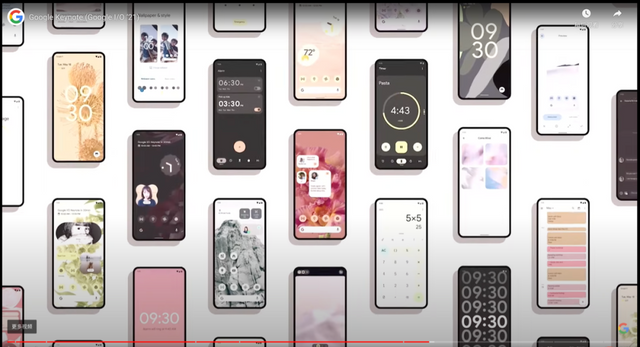

This year's Android 12 update focuses on design and interaction. It arguably looks more like iOS, which I don't think is a bad thing. (I'm looking at you 👉 Material Design)

- New design and interaction

Material You

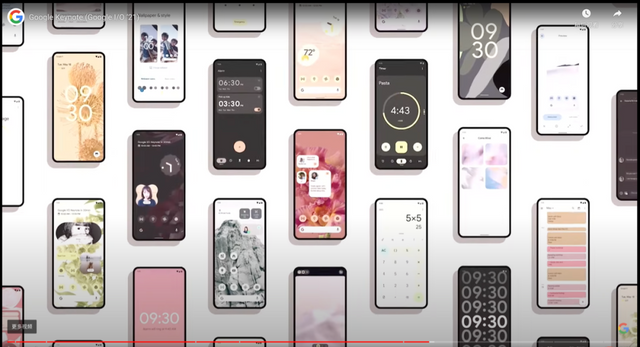

Android 12 introduces Material You, a new design style. The line that impressed me most came when the speaker, while introducing the new UI specification, asked: "What if design did not follow rules and function, but followed feeling instead?"

The resulting changes are big, too.

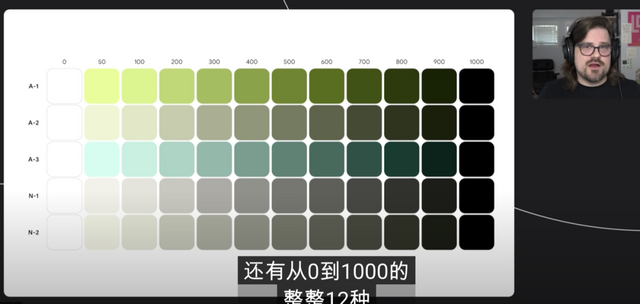

The exact design specs won't be released until the fall. In the past, Android actually had a built-in theme accent color: for example, it was cyan in the AOSP code and blue on the Pixel. Android 12 extends the system with a rich color palette and uses it to generate preset styles, which system components automatically adapt to.

In addition to preset styles, Android 12 also lets developers pick and combine colors through preset APIs, freely assigning background, accent, and foreground colors from tones ordered by brightness on a 0-1000 scale.
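To make the 0-1000 ordering more concrete, here is a small plain-Java sketch. It assumes that step 0 is the lightest tone and step 1000 the darkest, with lightness falling linearly in between. The role names and the steps chosen for each role are hypothetical, not taken from any published spec:

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class TonalPaletteSketch {

    /**
     * Assumed mapping: step 0 is the lightest tone (100% lightness) and
     * step 1000 the darkest (0%), falling linearly in between.
     */
    static int lightnessPercent(int step) {
        if (step < 0 || step > 1000) {
            throw new IllegalArgumentException("step must be in 0..1000");
        }
        return 100 - step / 10;
    }

    /**
     * Hypothetical role assignment: a light theme takes a light background
     * and a dark foreground, and a dark theme does the reverse.
     */
    static Map<String, Integer> rolesFor(boolean darkTheme) {
        Map<String, Integer> roles = new LinkedHashMap<>();
        roles.put("background", darkTheme ? 900 : 50);
        roles.put("accent", darkTheme ? 200 : 600);
        roles.put("foreground", darkTheme ? 100 : 900);
        return roles;
    }

    public static void main(String[] args) {
        rolesFor(false).forEach((role, step) ->
                System.out.println(role + " -> step " + step
                        + " (" + lightnessPercent(step) + "% lightness)"));
    }
}
```

The point of the ordering is that an app can ask for "a light tone" or "a dark tone" of whatever palette the system generated, without knowing the actual hue in advance.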

If you are familiar with the Material Design API, you will know that the Material Design team established standard names early on for the colors used in different parts of an app, such as BackgroundColor for the background color, SurfaceColor for the color of containers on top of the background, and primaryColor for the theme color.

So Android 12 will most likely follow this specification and combine styles from the built-in color palette. However, it is not known whether this feature will be retained in the domestic market.

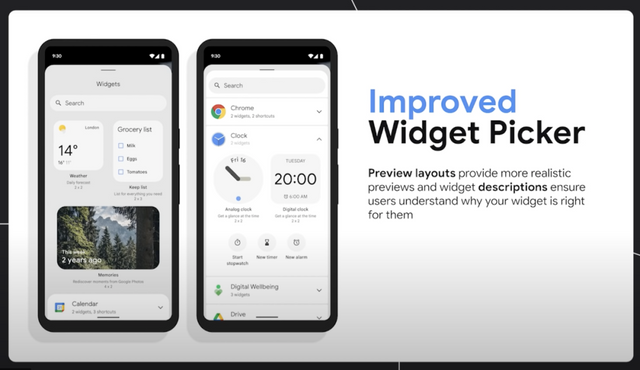

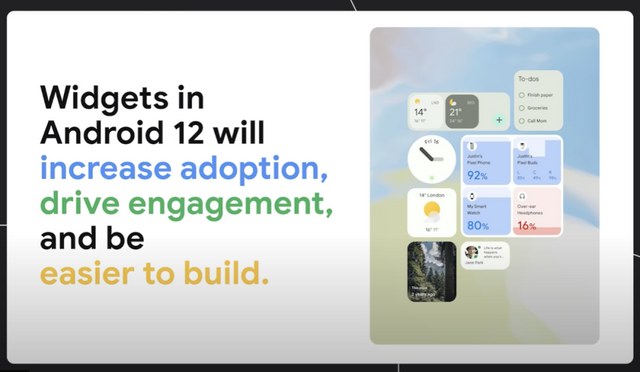

The new Widget

The widgets in Android 12 have been completely redesigned, both in terms of UI and operability. Apple first supported home screen widgets in iOS 14, but iOS widgets are display-only, and any interaction has to jump into the app.

Another difference is that iOS widget sizes are rigid, supporting only small, medium, and large, while Android 12 keeps the existing widget development model, in which a widget can declare its actual size in terms of an NxN grid of cells.
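For a sense of how an NxN cell count turns into an actual size, the classic Android widget guidelines offered a rule of thumb of 70 x n - 30 dp for a widget spanning n cells, which was then used for the `minWidth` and `minHeight` attributes. The sketch below just encodes that rule. The class and method names are hypothetical, and real launchers may use different cell sizes:

```java
final class WidgetCellSketch {

    /**
     * Rule of thumb from the classic app widget guidelines:
     * a widget spanning n cells needs at least 70 * n - 30 dp.
     */
    static int minSizeDp(int cells) {
        if (cells < 1) {
            throw new IllegalArgumentException("cells must be >= 1");
        }
        return 70 * cells - 30;
    }

    public static void main(String[] args) {
        // A widget meant to occupy 4 columns and 2 rows.
        System.out.println("minWidth=" + minSizeDp(4) + "dp, minHeight=" + minSizeDp(2) + "dp");
        // prints "minWidth=250dp, minHeight=110dp"
    }
}
```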

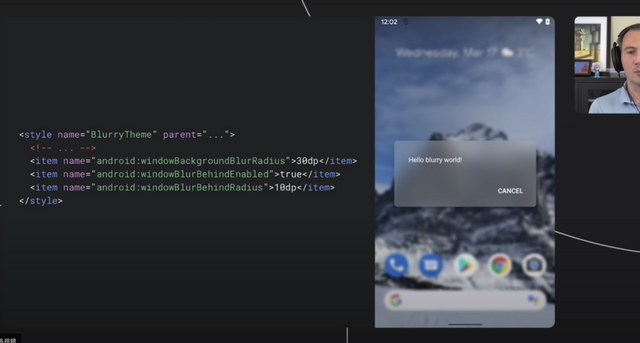

Frosted glass, rounded corners, and list damping effects

I used to wonder why Android went so many years without supporting a Blur effect. Some people might say Gaussian blur already exists, but in my opinion the two are not quite the same. Gaussian blur achieves the effect by manipulating the pixel matrix of a Bitmap, while Blur looks more like a layer on top of the original View.

At Google I/O this year, I finally got an answer: in fact, the T-Mobile G1, the first Android-powered phone, supported the Blur effect, but Android later removed it due to performance and design style considerations. It was not until this year's Android 12 release that the effect was reintroduced.
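For readers who have not seen it spelled out, the pixel-matrix style of Gaussian blur mentioned above looks roughly like the following. This is a plain-Java sketch on a grayscale array rather than an Android Bitmap. The class name, the edge clamping, and the separable two-pass layout are choices made for this illustration, not how any particular library does it:

```java
final class GaussianBlurSketch {

    /** Builds a normalized 1-D Gaussian kernel of size 2 * radius + 1. */
    static double[] kernel(int radius, double sigma) {
        double[] k = new double[2 * radius + 1];
        double sum = 0;
        for (int i = -radius; i <= radius; i++) {
            k[i + radius] = Math.exp(-(i * i) / (2 * sigma * sigma));
            sum += k[i + radius];
        }
        for (int i = 0; i < k.length; i++) {
            k[i] /= sum; // weights add up to 1, so overall brightness is preserved
        }
        return k;
    }

    /** Blurs a grayscale image with a horizontal pass followed by a vertical pass. */
    static double[][] blur(double[][] src, int radius, double sigma) {
        double[] k = kernel(radius, sigma);
        int h = src.length;
        int w = src[0].length;
        double[][] tmp = new double[h][w];
        double[][] out = new double[h][w];
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                double acc = 0;
                for (int d = -radius; d <= radius; d++) {
                    int xx = Math.min(w - 1, Math.max(0, x + d)); // clamp at the edges
                    acc += src[y][xx] * k[d + radius];
                }
                tmp[y][x] = acc;
            }
        }
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                double acc = 0;
                for (int d = -radius; d <= radius; d++) {
                    int yy = Math.min(h - 1, Math.max(0, y + d)); // clamp at the edges
                    acc += tmp[yy][x] * k[d + radius];
                }
                out[y][x] = acc;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        double[][] img = new double[5][5];
        img[2][2] = 1.0; // a single bright pixel
        double[][] blurred = blur(img, 1, 1.0);
        System.out.println("center after blur: " + blurred[2][2]);
    }
}
```

Every output pixel requires reading a whole neighborhood of input pixels, which hints at why doing this on the CPU for a live View is expensive.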

Also new are a custom API for rounded View corners and a damping effect for scrolling lists. Everything iOS has, Android wants too 😂. Of course, Android also has a unique feature of its own: a touch animation with a particle ripple effect.

Android 12 also lets developers customize the app launch animation using an Animated Vector Drawable. But in a domestic market full of splash-screen ads, I don't think many apps will support it.

Besides these, Toast has been updated as well and now displays the app icon. Notifications have been changed yet again. I really don't know why Google keeps changing notifications every year.

Anyway, this year's Android 12 clearly focuses on design and interaction updates, which makes me feel that Android really is different this time.

- Privacy updates

Microphone and camera usage indicators

Privacy dashboard

This seems to be supported by many domestic phones, isn't it?

Clipboard read notification

Location permission lets users choose between precise and approximate location

- Image system update

On the imaging side, Android 12 adds support for the AVIF image format, which can produce smaller files than JPEG while retaining more image detail.

When an app does not support a video format such as HEVC (H.265), HDR10, or HDR10+, the system automatically transcodes the video to AVC (H.264).

That's all on the Android side. Finally, I'd like to share one of the most amazing products from Google I/O.

Project Starline

Project Starline captures people with ultra-high-resolution cameras and depth sensors, so that a user sitting in front of a special screen sees a life-size 3D image of the other person and the two can talk as if they were face to face. This product is really awesome.

I haven't finished watching all the Google I/O videos yet. If I find other interesting content worth learning from in the remaining ones, I will share it with you right away!