Introduction

Ethereum has revolutionized the digital world with its blockchain technology and smart contracts, enabling the creation of all kinds of decentralized applications (dApps). However, with the rise of its popularity, scalability issues such as network congestion and increased transaction costs arose. To solve these challenges, different paradigms called scalability solutions or Layer 2 (L2) solutions have been proposed. These promise faster and cheaper transactions without compromising security or decentralization, but let's not get ahead of ourselves just yet.

Origin and Popularity of Ethereum

Ethereum launched in 2015, but its popularity took off alongside the rise of Bitcoin in 2017. As people became more familiar with cryptocurrencies, Ethereum stood out as a more accessible option with faster and less expensive transactions. In addition, its technology enabled the use of smart contracts, an aspect that differentiated it from Bitcoin and allowed the creation of decentralized applications.

However, Ethereum's original consensus method, proof of work (PoW), required a large amount of energy and computational effort and capped the number of transactions per second, although an individual transaction could be processed sooner by paying a higher fee.

The ERC-20 and ERC-721 Standards

In 2015, Ethereum introduced the ERC-20 standard, which allowed users to create their own tokens on the Ethereum blockchain, without the need to develop a blockchain from scratch. This standard was the basis for the popularity of ICOs (Initial Coin Offerings), a funding method for cryptocurrency startups. However, this also became a risk, as many scams emerged using the ERC-20 standard.

The ERC-721 standard, introduced in 2018, allowed the creation of non-fungible tokens (NFTs): unique tokens that are not interchangeable with one another. NFTs went viral as digital collectibles, such as the famous CryptoKitties and the Bored Ape Yacht Club (BAYC). The interest in NFTs attracted artists, celebrities, and collectors, cementing Ethereum technology as a new global disruptive phenomenon.

Scalability Issues

Despite its innovations, Ethereum began to face scalability issues due to increased demand on its network, especially with the rise of NFTs and dApps. Transactions became slow and expensive, which paved the way for other blockchains such as TRON to become more attractive. These offered a different consensus method (more eco-friendly and much, much faster) based on proof of stake (PoS).

To solve these problems, Vitalik Buterin, the creator of Ethereum, proposed a new version called Ethereum 2.0, which would use PoS, thus being more efficient and environmentally friendly. A key event for this transition would be called the “Merge”, an update that would make Ethereum faster and cheaper, better adapting to market demands.

But the wait for the new version was getting long... very long...

And the memes began

Converting a blockchain that operates under proof-of-work consensus to a new, more eco-friendly model was not simply a matter of waving a magic wand, saying “hocus pocus”, and voilà!

It was necessary to come up with a solution that ensured the scalability of the Ethereum blockchain.

Blockchain Trilemma

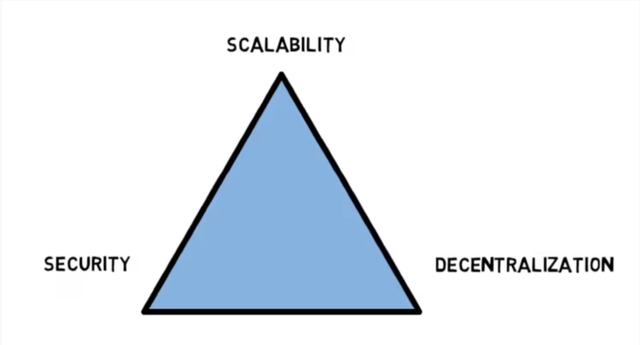

Solving the scalability problem within a decentralized system is quite complicated. This is where the famous Blockchain Trilemma, also known as the Scalability Trilemma, comes into play. This is a very popular concept in the world of cryptocurrencies and blockchain technology. It highlights the difficulty of achieving a balance between the three fundamental properties of a decentralized system or blockchain: scalability, security, and decentralization.

The biggest problem is that improving one of the three often compromises one or both of the others. Let’s take a closer look at this.

When we talk about scalability, we refer to the ability to handle more transactions per second (remember TPS?) as the number of users in the system increases. A system is said to be scalable when it can accommodate a higher volume of transactions without affecting its response time or slowing down.

Security involves protecting the system against attacks and fraud. A blockchain must be resistant to malicious attacks such as the infamous 51% attack, in which attackers gain control of more than 50% of the network's validating power (hash power under proof of work, or stake under proof of stake) and can therefore manipulate transactions and, in effect, control the entire system.

The last aspect is the most important: decentralization. Letting multiple participants share control and decision-making makes the system resilient to failures and censorship, promotes greater transparency and autonomy, and increases security by making it resistant to outages. Remember that with distributed control, if one part fails, the overall system will continue to function. This is the magic of blockchain.

Now let’s examine the challenge of the Trilemma:

Improving scalability tends to compromise security and decentralization. For example, increasing the block size means you can store more transactions per block, thus serving more transactions per second. However, this reduces decentralization, because validating larger blocks (security) requires more specialized nodes with more computational power. Becoming a node then demands more resources, so not just any computer can be a node in the network. The sense of decentralization is lost, since only “special” nodes are able to process transactions fast enough, and an attack on these nodes would compromise the entire network... Are you beginning to understand how complicated this is?
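To put rough numbers on the block size trade-off, here is a minimal back-of-the-envelope sketch. Ethereum limits blocks by gas rather than by bytes, so the sketch uses a gas limit as the "block size". The specific figures (a 30 million gas limit, 21,000 gas per simple transfer, 12 seconds per block) are illustrative assumptions, not measurements, and the function name is made up for this example.

```python
def estimate_tps(block_gas_limit: int, gas_per_tx: int, block_time_s: float) -> float:
    """Rough upper bound on transactions per second for a single chain."""
    txs_per_block = block_gas_limit // gas_per_tx  # whole transactions that fit in a block
    return txs_per_block / block_time_s


# Illustrative assumptions only (not taken from the article)
BASE_GAS_LIMIT = 30_000_000   # assumed block "size", expressed in gas
SIMPLE_TRANSFER_GAS = 21_000  # assumed cost of a plain ETH transfer
BLOCK_TIME_S = 12             # assumed seconds between blocks

base_tps = estimate_tps(BASE_GAS_LIMIT, SIMPLE_TRANSFER_GAS, BLOCK_TIME_S)
doubled_tps = estimate_tps(2 * BASE_GAS_LIMIT, SIMPLE_TRANSFER_GAS, BLOCK_TIME_S)

print(f"base block size:    ~{base_tps:.0f} TPS")
print(f"doubled block size: ~{doubled_tps:.0f} TPS")
```

Doubling the block size roughly doubles throughput, but every node must then download, execute, and store twice as much data per block, which is exactly where decentralization starts to suffer.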

On the other hand, increasing security will also require more resources, which may limit not only decentralization but also scalability (speed).

Encouraging decentralization can lead to a more secure network, in the sense of being less vulnerable to attacks or network crashes, but it can also jeopardize scalability, as it requires many nodes to take part in the validation process.

Do you see the problem faced by the gods of Olympus?

Still, they managed to find several ways to solve this trilemma, through totally different approaches. This is the beauty of it all and what Vitalik Buterin was referring to with the concept of pluralism in his article Layer 2s as cultural extensions of Ethereum.

There is not really a single solution, but a set of them oriented towards the applications that require them. But to understand this, let's first get to know the scalability solutions that came to light.

Scaling the Ethereum network

According to Buterin, there are two ways to scale a blockchain. The first is to modify the main blockchain itself, increasing its capacity to handle more transactions, which is achieved with “bigger blocks”. But we already saw what happens when you increase the block size. Buterin proposes a more sustainable variant of this approach, a method called sharding (fragmentation), which is the one that the new version of Ethereum, Ethereum 2.0, will use (we will explain it later).

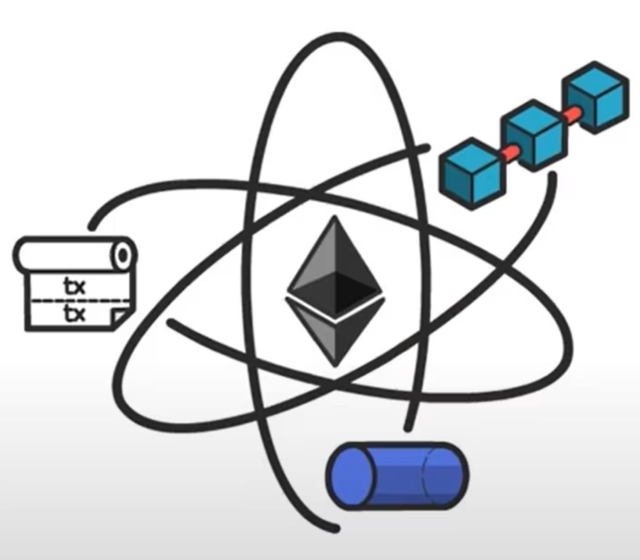

The second way is to think outside the box. Instead of having all the work done on the original blockchain, the mainnet (layer 1), the system will process transactions "off-chain" by making use of a layer 2 protocol or solution.

Yes. This is where L2s, or Layer 2 solutions, come into the picture (Finally!).

Buterin explains that there will be a smart contract on the main chain (mainnet) in charge of two jobs: first, processing deposit and withdrawal transactions, and second, verifying proofs that everything happening off-chain follows the rules.

Buterin also points out that there are many ways to construct these proofs; however, they all share the property that verifying a proof on layer 1 (mainnet) is much cheaper than redoing the original computation that was performed off-chain.

Layer 2 solutions

Layer 2 solutions, as we have already seen, emerged with the goal of improving scalability, which translates into lower transaction fees and undoubtedly faster response times. Layer 2 (L2) is a collective term that refers to solutions designed outside of Ethereum's core network (Layer 1). Some claim that some of these solutions were inspired by Bitcoin's Lightning Network solution (probably).

There are four main types of Layer 2 scaling: state channels, plasma, sidechains, and the famous rollups. Each has its advantages and disadvantages. However, most were designed to solve application-specific problems, i.e., to make certain kinds of applications run more efficiently.

Channels

Channels are one of the most widely used Layer 2 solutions. As the name suggests, they let participants (the channel creators) open a channel through which they can perform all their transactions (only with each other) “off-chain”, without constantly storing them in the Ethereum blockchain (Layer 1). When the channel is closed, the final state of the transactions is recorded in the Ethereum blockchain (Layer 1), ensuring that the final balances are valid and reflected in the main chain. As you can see in the following image.

The most popular types of channels are state channels and their subtype called payment channels. Channels improve the performance of the network by allowing thousands of transactions per second, which significantly lowers transaction fees. However, in order to use them, both users must be registered and must have previously locked their funds in a multi-signature contract.

Another disadvantage of this type of solution (although it was not designed for that purpose) is that it does not support the scaling of complex smart contracts, which may require more resources and processing. The idea behind this solution was popularized by Bitcoin's Lightning Network protocol.
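The life cycle of a payment channel can be sketched in a few lines. This is a toy model, not a real protocol: signatures, the multi-signature contract, and dispute handling are all left out, and the class name, the participant names, and the rule that opening and closing each count as one on-chain transaction are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class PaymentChannel:
    """Toy two-party channel: funds are locked once, updated off-chain, settled once."""
    balances: dict        # funds each participant locked when opening the channel
    nonce: int = 0        # counts off-chain updates; the latest state wins
    closed: bool = False
    onchain_txs: int = 1  # assumption: opening the channel costs one on-chain transaction

    def pay(self, sender: str, receiver: str, amount: int) -> None:
        """Off-chain payment: only the shared balance sheet changes."""
        if self.closed:
            raise RuntimeError("channel is closed")
        if amount <= 0 or self.balances[sender] < amount:
            raise ValueError("invalid or unfunded payment")
        self.balances[sender] -= amount
        self.balances[receiver] += amount
        self.nonce += 1

    def close(self) -> dict:
        """Settle: only the final balances are written to Layer 1."""
        self.closed = True
        self.onchain_txs += 1
        return dict(self.balances)


channel = PaymentChannel({"alice": 10, "bob": 5})
channel.pay("alice", "bob", 3)
channel.pay("bob", "alice", 1)
channel.pay("alice", "bob", 2)
final_balances = channel.close()
print(final_balances, "on-chain transactions:", channel.onchain_txs)
```

However many payments flow through the channel, the main chain only sees the opening and the final settlement.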

Plasma

This solution is a bit confusing because of the way it works; however, I will do my best to explain what I understood. Plasma was created by Vitalik Buterin and Joseph Poon as a framework for creating scalable applications on Ethereum. Plasma makes use of a data structure called a Merkle tree (more on what this is used for later) and leverages Ethereum smart contracts to create multiple “child” chains, or secondary chains, which are copies of the parent Ethereum chain. These process large volumes of “off-chain” transactions in a faster and cheaper way, relieving the burden on the parent chain. Eventually, the final state of transactions is recorded on the parent chain.

Plasma uses a tree structure that allows child chains to create their own “child” chains. This is where the Merkle tree stands out for its functionality. This tree structure is essential in scaling, as it ensures integrity and efficiency in data verification between the child chains, the sub-chains, and the parent chain. Each leaf of the tree represents a block of data, and the Merkle root summarizes all the information.
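To make the Merkle root idea concrete, here is a minimal sketch using SHA-256 from Python's standard library. Duplicating the last node when a level has an odd number of entries is one common convention and an assumption here; Plasma implementations may build their trees differently, and the sample leaf data is made up.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Hash leaves pairwise, level by level, until a single root remains."""
    if not leaves:
        raise ValueError("at least one leaf is required")
    level = [sha256(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # assumption: duplicate the last node on odd levels
        level = [sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


# Hypothetical blocks of data from a child chain
blocks = [b"block-1", b"block-2", b"block-3", b"block-4"]
root = merkle_root(blocks)

# Changing any single block changes the root
tampered_root = merkle_root([b"block-1", b"block-X", b"block-3", b"block-4"])
print(root.hex())
print("tampering detected:", root != tampered_root)
```

Because the root is only 32 bytes, a child chain can commit to a large set of blocks by publishing a single small value to the parent chain.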

The problem with this solution is that users must wait, perhaps up to a week, to withdraw their funds. This is because Plasma uses a fraud proof mechanism to ensure that the transactions are valid, so a waiting period is needed to give the nodes (validators) time to submit proof that a transaction is invalid.

Like channels, this solution cannot scale general-purpose smart contracts.

The OMG network implements this framework to execute its transactions.

Sidechains

Sidechains are blockchains that run independently of the Ethereum blockchain. They have their own consensus method and their own block parameters, designed to improve efficient transaction handling. They use their own VM (Virtual Machine) compatible with the Ethereum Virtual Machine (EVM), which allows any smart contract, running on Ethereum, to also work on sidechains. This makes it possible for decentralized applications (dApps) to run on these chains without significant changes to their programming. Unlike the other solutions, sidechains do not store state changes or transaction data on the Ethereum mainnet. Instead, they communicate with the Ethereum network through “bridges”.

Bridges make use of smart contracts to achieve fund interoperability, i.e., they allow moving funds between the Ethereum blockchain and the sidechain. In reality, funds are not moved back and forth. Instead, the smart contracts in the bridge burn (destroy) assets on one chain and mint (create) their equivalent on the other chain, and vice versa.
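The burn-and-mint flow described above can be modeled with two in-memory ledgers. This is only a sketch: the `Ledger` and `Bridge` classes are hypothetical, there is no cross-chain messaging or verification, and many real bridges lock funds in a contract on the origin chain instead of burning them.

```python
class Ledger:
    """Toy balance sheet standing in for one chain."""

    def __init__(self, name: str):
        self.name = name
        self.balances: dict[str, int] = {}

    def mint(self, user: str, amount: int) -> None:
        self.balances[user] = self.balances.get(user, 0) + amount

    def burn(self, user: str, amount: int) -> None:
        if self.balances.get(user, 0) < amount:
            raise ValueError("insufficient funds to burn")
        self.balances[user] -= amount

    def total_supply(self) -> int:
        return sum(self.balances.values())


class Bridge:
    """Burns on the source chain, then mints the same amount on the target chain."""

    def __init__(self, source: Ledger, target: Ledger):
        self.source = source
        self.target = target

    def transfer(self, user: str, amount: int) -> None:
        self.source.burn(user, amount)  # raises before minting if funds are missing
        self.target.mint(user, amount)


mainnet = Ledger("ethereum")
sidechain = Ledger("sidechain")
mainnet.mint("alice", 100)  # starting funds, for illustration only

Bridge(mainnet, sidechain).transfer("alice", 40)  # Ethereum -> sidechain
Bridge(sidechain, mainnet).transfer("alice", 10)  # and part of it back again

print(mainnet.balances, sidechain.balances)
```

The combined supply across both ledgers never changes, which is the property a bridge has to preserve.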

Polygon's network uses this scalability solution and is one of the most popular currently.

Rollups

Rollups execute transactions on a separate chain, much like sidechains; however, they scale by grouping or “rolling up” many transactions into a single transaction that is posted to the Ethereum main chain. In ZK rollups, this batch is accompanied by a cryptographic proof, known as a SNARK (Succinct Non-interactive Argument of Knowledge), which is also stored on the main chain.

The rollups take advantage of Ethereum's security and its consensus mechanism. However, all transaction state and execution is done “off-chain”, and only transaction data is stored on the main chain.

An advantage of rollups is that they are general-purpose, meaning that they can execute smart contracts, which facilitates the migration of decentralized applications almost without the need to change their code.

In addition, they use compression techniques to reduce the amount of data published on the mainnet, which significantly lowers transaction fees. In fact, Buterin mentions that transferring an ERC-20 token on the Ethereum mainnet costs about 45,000 gas (gas is the unit used to measure transaction fees in Ethereum; it is also known as the fuel that moves transactions). However, transferring the same ERC-20 token using a rollup would cost under 300 gas.

Rollups are considered among the most secure solutions for scaling Ethereum, with estimates of raising its throughput to about 4,800 TPS, or roughly 85 times more.
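The gas figures quoted from Buterin translate into a simple ratio. In the sketch below, the two gas amounts come from the text, while the gas price of 20 gwei is an arbitrary assumption chosen only to turn gas into an ETH amount, and the helper name is made up.

```python
L1_ERC20_TRANSFER_GAS = 45_000   # figure quoted in the text
ROLLUP_ERC20_TRANSFER_GAS = 300  # upper bound quoted in the text ("under 300")
GWEI_PER_ETH = 1_000_000_000


def fee_in_eth(gas_used: int, gas_price_gwei: float) -> float:
    """Fee = gas used x price per unit of gas, converted from gwei to ETH."""
    return gas_used * gas_price_gwei / GWEI_PER_ETH


ASSUMED_GAS_PRICE_GWEI = 20  # assumption for illustration, not a current market value

l1_fee = fee_in_eth(L1_ERC20_TRANSFER_GAS, ASSUMED_GAS_PRICE_GWEI)
rollup_fee = fee_in_eth(ROLLUP_ERC20_TRANSFER_GAS, ASSUMED_GAS_PRICE_GWEI)
savings_factor = L1_ERC20_TRANSFER_GAS / ROLLUP_ERC20_TRANSFER_GAS

print(f"L1 fee:     {l1_fee:.6f} ETH")
print(f"Rollup fee: {rollup_fee:.6f} ETH")
print(f"At least {savings_factor:.0f} times cheaper")
```

Whatever the gas price is at a given moment, the ratio between the two costs stays the same: at least 150 to 1.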

There are two types of rollups: ZK rollups (Zero Knowledge) and optimistic rollups.

Optimistic Rollups

Optimistic rollups run their own EVM-compatible virtual machine (Optimism called its version the OVM, or Optimistic Virtual Machine), which allows you to run any smart contract that runs on the Ethereum mainnet. This is an advantage, as it allows the migration of DeFi (decentralized finance) applications; thus, you can run dApps on these scaling solutions much faster and without the need to reprogram them. This is important since DeFi applications contain smart contracts that have been tested in real life against attacks.

They are called optimistic because they assume that off-chain transactions are valid and do not publish proofs of validity for the batches of transactions that are stored on the mainnet. However, once a batch is sent to Ethereum, there is a period of time called the challenge period, during which other participants can challenge the results of a rollup transaction by computing a fraud proof. If fraud is proven, the responsible sequencer (the node in charge of batching and submitting the transactions) is penalized.
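The challenge period can be sketched as a small state machine. Everything here is simplified and hypothetical: the one-week window, the bond amount, and the comparison of state roots as plain strings stand in for the real on-chain fraud proof game.

```python
from dataclasses import dataclass

CHALLENGE_PERIOD_S = 7 * 24 * 60 * 60  # assumption: a one-week challenge window


@dataclass
class Batch:
    claimed_state_root: str  # result the sequencer claims for this batch
    submitted_at: int        # submission time, in seconds
    sequencer_bond: int      # funds the sequencer stands to lose
    challenged: bool = False

    def challenge(self, recomputed_state_root: str, now: int) -> bool:
        """Return True if fraud is proven within the challenge window."""
        if now >= self.submitted_at + CHALLENGE_PERIOD_S:
            return False  # window closed, the batch can no longer be disputed
        if recomputed_state_root == self.claimed_state_root:
            return False  # the challenger's recomputation agrees, so no fraud
        self.challenged = True
        self.sequencer_bond = 0  # penalize the responsible sequencer
        return True

    def is_final(self, now: int) -> bool:
        """A batch is final once the window has passed without a successful challenge."""
        return not self.challenged and now >= self.submitted_at + CHALLENGE_PERIOD_S


honest = Batch(claimed_state_root="0xabc", submitted_at=0, sequencer_bond=100)
fraudulent = Batch(claimed_state_root="0xbad", submitted_at=0, sequencer_bond=100)

fraud_proven = fraudulent.challenge(recomputed_state_root="0xabc", now=3600)
print("fraud proven:", fraud_proven, "| remaining bond:", fraudulent.sequencer_bond)
print("honest batch final after one week:", honest.is_final(now=CHALLENGE_PERIOD_S))
```

This waiting window is also why withdrawals from optimistic rollups are slow: funds can only be released once the batch containing them can no longer be disputed.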

Optimism is a project that uses this form of scaling.

If you want to learn more about this paradigm, you can do so by visiting the following link.

ZK Rollups

ZK rollups, or zero-knowledge rollups, execute transactions off-chain like optimistic rollups, reducing the load on the main chain. Similarly, they group transactions in batches and send them to the mainnet. Unlike optimistic rollups, zk-rollups validate each batch with a cryptographic proof called a validity proof. This is where SNARKs appear, which are complex mathematical and cryptographic proofs that validate the information to be stored on the mainnet. The advantage of this method is that validity proofs can be verified quickly by the mainnet, allowing withdrawals to be processed almost immediately.

In contrast, for optimistic rollups, withdrawals can take one to two weeks, as time must be allowed for any challenges to the batches of optimistic transactions to be submitted.

However, due to the complexity of validity proof, zk-rollups are not easy to implement, so developing decentralized applications in this paradigm is complicated and requires more resources, unlike optimistic rollups.

Due to their speed and validation process, zk-rollups are perfect for payment applications or exchanges.

Despite their complexity, in the long run, zk-rollups will be the preferred scaling solution (or so the rumor goes).

If you want to learn more about zk-rollups, you can do so by visiting the following link.

zkSync and Polygon have implemented this paradigm in their platforms to improve Ethereum's scalability.

The Merge to the rescue

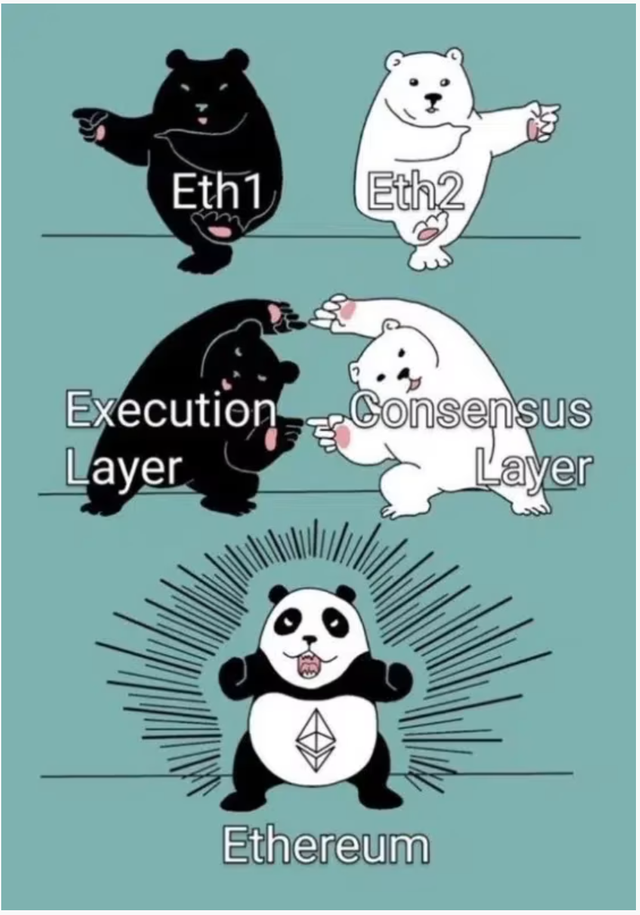

The Merge refers to a pivotal event in Ethereum's history that occurred in September 2022, when the Ethereum blockchain changed its consensus mechanism from proof-of-work (PoW) to proof-of-stake (PoS), thus creating Ethereum 2.0.

Undoubtedly, it was necessary to change the old consensus method to make Ethereum more flexible, faster, and greener. You may wonder why the heck it is called the Merge (I wondered that too). It is called the Merge because it merged the old Ethereum blockchain, the chain that was originally mined with the proof-of-work (PoW) consensus, with the new Ethereum chain that implements the proof-of-stake (PoS) consensus method, called the Beacon Chain.

Without a doubt, those who suffered from this change were the old miners who had invested in PoW mining equipment, which is usually quite expensive. But they were warned a long time in advance, and they could switch platforms or, eventually, resell their equipment or use it to mine another type of cryptocurrency...

But it doesn't hurt to know a little more about this proof-of-stake or PoS consensus. Let's see then:

There are no miners anymore; they are now called validators, and they are in charge, as the name indicates, of validating the new blocks that join the blockchain. However, to become a validator, you must stake 32 ETH (something like $60,000, maybe a bit more).

Validators are randomly selected to perform the task of validating transactions and new blocks. If they do it correctly, they are rewarded with ETH. If a validator tries to introduce fake transactions, they are penalized by losing part or all of the ETH they have staked (a penalty known as slashing).

As we have already seen, this consensus requires far less energy than the previous one. This brings a fundamental change to Ethereum, making it a more accessible and sustainable blockchain and laying the groundwork for making it more scalable.
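The stake, select, reward, and slash cycle can be sketched as follows. This is a deliberately simplified model: real Ethereum derives its randomness from the protocol itself and applies detailed reward and penalty rules, whereas here a seeded pseudo-random generator and made-up reward and penalty amounts stand in for all of that. The class and method names are hypothetical.

```python
import random

STAKE_REQUIRED_ETH = 32  # minimum stake mentioned in the text


class ValidatorSet:
    """Toy proof-of-stake registry with random selection, rewards, and slashing."""

    def __init__(self, seed: int = 0):
        self.stakes: dict[str, float] = {}
        self.rng = random.Random(seed)  # assumption: stands in for protocol randomness

    def join(self, name: str, stake_eth: float) -> None:
        if stake_eth < STAKE_REQUIRED_ETH:
            raise ValueError("at least 32 ETH must be staked")
        self.stakes[name] = stake_eth

    def pick_validator(self) -> str:
        """Randomly select who validates the next block."""
        return self.rng.choice(sorted(self.stakes))

    def reward(self, name: str, amount_eth: float) -> None:
        self.stakes[name] += amount_eth

    def slash(self, name: str, amount_eth: float) -> None:
        """Penalize misbehavior by removing staked ETH."""
        self.stakes[name] = max(0.0, self.stakes[name] - amount_eth)


validators = ValidatorSet(seed=42)
for name in ("alice", "bob", "carol"):
    validators.join(name, 32)

chosen = validators.pick_validator()
validators.reward(chosen, 0.01)  # hypothetical reward for an honest validation
validators.slash("bob", 1.0)     # hypothetical penalty for a fake transaction

print("selected:", chosen)
print(validators.stakes)
```

The economic logic is the important part: honest work slowly grows a validator's stake, while cheating puts the staked ETH itself at risk.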

But then, will L2s really be necessary?

You may be wondering, now that Ethereum has migrated to the new consensus method, becoming Ethereum 2.0, will Layer 2 scaling solutions be necessary?

Of course, they will...

Beyond the PoS consensus method, the Ethereum 2.0 roadmap includes a method called sharding, a scalability paradigm that divides the network into multiple independent shards, each capable of processing its own transactions and data. This significantly increases the throughput of network transactions by distributing the load, thus improving transaction speed and efficiency.
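The core idea of distributing load across shards can be sketched with a hash-based assignment. This is a generic illustration of sharding, not Ethereum's actual design: the rule "shard = hash of the sender address modulo the number of shards", the shard count, and the sample transactions are all assumptions.

```python
import hashlib
from collections import defaultdict


def shard_for(address: str, num_shards: int) -> int:
    """Deterministically map an address to a shard index."""
    digest = hashlib.sha256(address.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def partition(transactions: list[dict], num_shards: int) -> dict[int, list[dict]]:
    """Group transactions by the shard responsible for their sender."""
    shards = defaultdict(list)
    for tx in transactions:
        shards[shard_for(tx["sender"], num_shards)].append(tx)
    return dict(shards)


NUM_SHARDS = 4  # assumption for illustration
txs = [{"sender": f"0xuser{i}", "amount": i} for i in range(20)]  # made-up transactions
by_shard = partition(txs, NUM_SHARDS)

for shard_id in sorted(by_shard):
    print(f"shard {shard_id}: {len(by_shard[shard_id])} transactions")
```

Each shard only has to process its own slice of the traffic. What a sketch like this leaves out is the hard part: transactions that touch accounts living on different shards.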

Execution sharding is a powerful solution, but it requires significant changes to the Ethereum structure. It requires all network participants, including validators, to adapt to this new structure. One of the main problems with this paradigm is achieving effective communication between shards, thus maintaining network consistency and security.

Despite these improvements, Ethereum will still require the use of L2s to handle hundreds of thousands or even millions of transactions per second in the future.

Plurality and L2s

Buterin's Layer 2s as cultural extensions of Ethereum article

It is clear that all the scaling solutions we have just seen present different ways of solving Ethereum's scalability issue. Some are completely different from others: some are more complex and require more computational power, while others take advantage of the security and other features of the Ethereum mainnet.

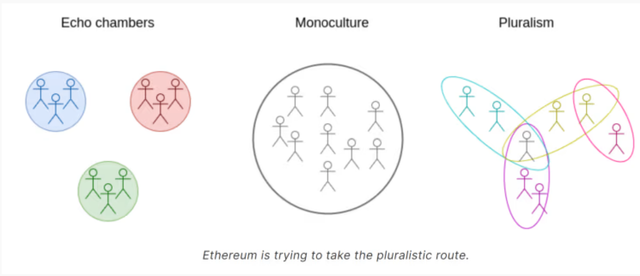

Plurality is a concept discussed by Buterin in his article Plurality philosophy in an incredibly oversized nutshell, and defined by Glen Weyl in his 2022 article Why I Am A Pluralist:

“I understand pluralism as a social philosophy that recognizes and encourages flourishing and cooperation among a diversity of socio-cultural groups/systems.”

Pluralism is a concept that refers to the coexistence of diverse perspectives, cultures, or values within a society or system.

Buterin argues that the diversification of scaling solutions promotes the same concept of pluralism since the Ethereum ecosystem is evolving in a flexible and decentralized way when using different paradigms to improve its operation. Ethereum, being a platform open to everyone, attracts the interest of different groups of people with different interests but with the same purpose, to improve scalability.

Talking about pluralism in the Ethereum ecosystem implies the convergence of different approaches or perspectives on how the technology should be improved and what its purpose should be (remember when I mentioned that it is constantly reinventing itself). This is where Buterin mentions the subcultures that have most influenced this development:

The “cypherpunks” culture, interested in privacy and data protection and oriented toward open-source development.

The “regens” culture, focused on sustainability and the positive social impact of technology.

And finally, the “degens”, a culture driven purely by speculation and the accumulation of wealth at all costs; they are also called financial nihilists.

The influence of these diverse cultures allows the ecosystem to strengthen and expand its development potential. If a platform is influenced by only one vision, it runs the risk of becoming stagnant and then not flexible enough to adapt to the changes and challenges that constantly arise. The plurality of L2s makes the ecosystem more resilient, and capable of growing in the face of adversity.

However, this kind of pluralistic growth can also bring problems. The biggest concern is that cultures become isolated from each other, creating what is known in social networks as “echo chambers”. In an echo chamber, interaction with opposing ideas is minimal or non-existent, creating a bubble.

Pluralism not only seeks diversity of approaches, but it also promotes interconnection and dialogue between different perspectives to prevent the ecosystem from fragmenting.

Wrapping up

Layer 2 solutions are essential to the future of Ethereum. While the shift to proof-of-stake (PoS) consensus in Ethereum 2.0 has improved some aspects, the core network still needs support to handle large transaction volumes. L2s are real solutions that are in place and allow for reduced congestion, lower costs, and increased speed, ensuring that Ethereum remains an efficient and accessible platform for developers and users in a decentralized ecosystem.

To summarize, L2s make it possible to:

increase scalability (speed),

decrease transaction costs,

maintain security without compromising decentralization (and obviously without compromising scalability, don't forget the trilemma),

maintain the decentralized approach of the Ethereum ecosystem.

Finally, the diversity of solutions allows the development of decentralized applications according to your needs.

More information about Ethereum & L2

Ethereum website

https://ethereum.org/

Scaling

https://ethereum.org/es/developers/docs/scaling/

Ethereum Layer 2 Scaling Explained

https://finematics.com/ethereum-layer-2-scaling-explained/

Vitalik Buterin's website

https://vitalik.eth.limo/index.html

Plurality philosophy in an incredibly oversized nutshell

https://vitalik.eth.limo/general/2024/08/21/plurality.html

Videos

Which Layer 2 solution to choose: Polygon, Arbitrum, Optimism

ZK Rollups vs Optimistic Rollups. The Difference Between Them Explained in Simple Terms

What are Sidechains in Crypto? Rootstock + Polygon Explained!

What is the EVM? Ethereum Virtual Machine - Explained with Animations

Ethereum 2.0 Upgrades Explained - Sharding, Beacon Chain, Proof of Stake (Animated)

What is Ethereum Gas? (Examples + Easy Explanation)

Wow what a very detailed explanation you actually put up here which everyone should be aware of. I believe the layer 2 is the future as it will help to give that scalability the Blockchain system needs

Thanks for coming by. I appreciate it

hi @jadams2k18

did you realy wrote it all yourself? that is such a massive article. how many hours did you spent writing it? I wonder

Cheers, Piotr

Hello, my dear friend! Long time no see! I hope you're well. Yes, It was longer, hahaha... I spent days not hours 😅
