The Essentials of L2 Regularization: Why It Works

Visualizing L2 Regularization

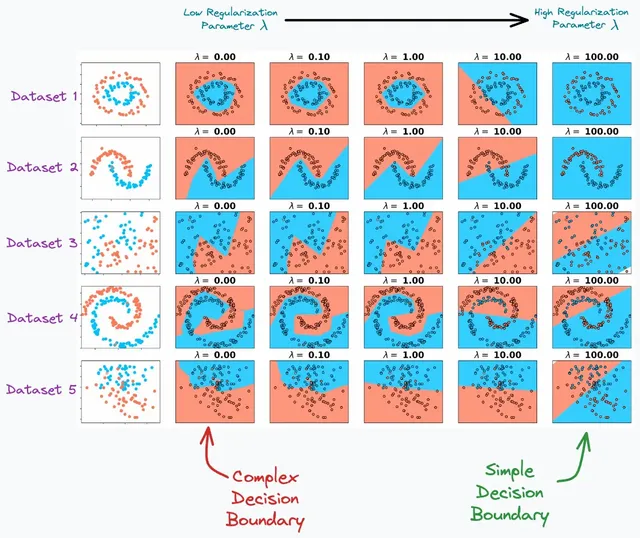

To understand the impact of L2 Regularization, it's helpful to visualize its effects. The following plot illustrates how increasing the regularization parameter simplifies the decision boundary of a model across various datasets:

As the regularization parameter increases, the decision boundary becomes simpler, highlighting how L2 Regularization helps manage model complexity.
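The trend in the plot can also be checked numerically. The following is a minimal sketch, assuming a tiny hand-made 2-D dataset and a plain gradient-descent logistic regression; the names `fit_logistic_l2`, `points`, and `labels` are illustrative and not part of the original article. A linear classifier's boundary is always a straight line, so the sketch tracks the weight norm instead: as the regularization parameter grows, the weights shrink, which is the same effect that smooths the boundary in more flexible models.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic_l2(points, labels, lam, lr=0.1, steps=2000):
    """Gradient descent on mean log-loss + lam * ||w||^2 (bias unpenalized)."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(points)
    for _ in range(steps):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for (x1, x2), y in zip(points, labels):
            err = sigmoid(w[0] * x1 + w[1] * x2 + b) - y
            grad_w[0] += err * x1 / n
            grad_w[1] += err * x2 / n
            grad_b += err / n
        # The gradient of lam * ||w||^2 is 2 * lam * w.
        w = [wj - lr * (gj + 2.0 * lam * wj) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

# Hypothetical toy data: class 0 sits lower-left, class 1 upper-right.
points = [(-1.5, -1.0), (-1.0, -1.4), (-0.6, -0.2), (0.3, -0.5),
          (0.5, 0.4), (1.0, 1.2), (1.4, 0.8), (-0.2, 0.6)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

norms = []
for lam in (0.0, 0.1, 1.0):
    w, _ = fit_logistic_l2(points, labels, lam)
    norms.append(math.hypot(w[0], w[1]))
    print(f"lambda={lam:<4} ||w||={norms[-1]:.3f}")
```

The printed norms should decrease as `lam` increases. The learning rate and step count are arbitrary choices that happen to be stable for these values of `lam`; much larger penalties would need a smaller step size.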

The Role of the Regularization Term

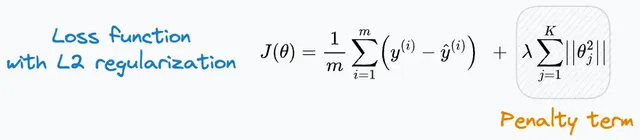

L2 Regularization modifies the loss function by adding a penalty term, formulated as:

This penalty discourages large parameter values, promoting simpler and more generalizable models.
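To make the penalty concrete, here is a small sketch that assumes the common form `lam * sum(w_i ** 2)`; some texts include an extra factor of 1/2 or divide by the number of samples, so the exact scaling is an assumption here. The function names are illustrative.

```python
def l2_penalty(weights, lam):
    """Return lam * (sum of squared weights)."""
    return lam * sum(w * w for w in weights)

def l2_penalty_grad(weights, lam):
    """Gradient of the penalty with respect to each weight: 2 * lam * w."""
    return [2.0 * lam * w for w in weights]

def regularized_loss(data_loss, weights, lam):
    """Total objective: data-fit term plus the L2 penalty."""
    return data_loss + l2_penalty(weights, lam)

weights = [3.0, -4.0]
lam = 0.1
penalty = l2_penalty(weights, lam)           # 0.1 * (9 + 16)
grad = l2_penalty_grad(weights, lam)         # each entry is proportional to its weight
total = regularized_loss(1.0, weights, lam)  # 1.0 stands in for a data loss
print(penalty, grad, total)
```

Because each gradient entry is proportional to the weight itself, larger weights are pulled toward zero more strongly than small ones, which is how the penalty discourages large parameter values.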

Why Use a Squared Term?

The Probabilistic Justification

The choice of a squared term in L2 Regularization has a solid probabilistic basis:

Overfitting and Model Complexity: Overfitting occurs when a model captures noise rather than the underlying signal. Regularization penalizes this excessive complexity to counteract overfitting.

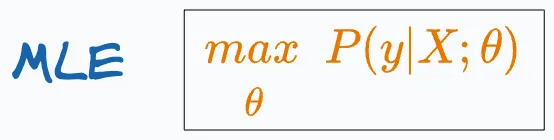

Maximum Likelihood Estimation (MLE): MLE seeks parameters that maximize the likelihood of the observed data:

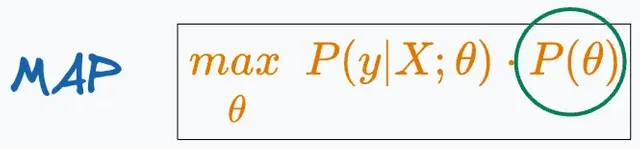

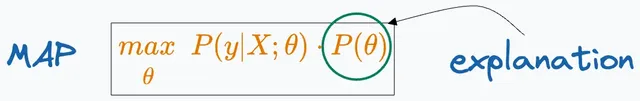

Maximum A Posteriori Estimation (MAP): MAP incorporates a prior belief about the parameters along with the data likelihood:

MAP balances the fit to the data against prior beliefs, which makes the resulting model more robust and less prone to overfitting.
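The difference between the two estimators can be seen in a one-parameter example. The sketch below assumes a model `y = w * x` with Gaussian noise of known variance and a zero-mean Gaussian prior on `w`; these modeling choices, the data, and the function names are assumptions made for illustration. Under them, the MLE is the ordinary least-squares slope, and the MAP estimate has the same form with an extra term in the denominator.

```python
def mle_slope(xs, ys):
    """Least-squares slope for y = w * x (the MLE under Gaussian noise)."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

def map_slope(xs, ys, noise_var, prior_var):
    """MAP slope with a zero-mean Gaussian prior of variance prior_var on w."""
    lam = noise_var / prior_var  # plays the role of the regularization strength
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

# Hypothetical data, roughly following y = 2x.
xs = [1.0, 2.0, 3.0]
ys = [2.2, 3.9, 6.3]

w_mle = mle_slope(xs, ys)
w_map_weak = map_slope(xs, ys, noise_var=1.0, prior_var=100.0)   # vague prior
w_map_strong = map_slope(xs, ys, noise_var=1.0, prior_var=0.1)   # tight prior
print(w_mle, w_map_weak, w_map_strong)
```

A vague prior leaves the estimate close to the MLE, while a tight prior around zero shrinks it noticeably, mirroring the effect of a larger regularization parameter.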

Intuitive Example: Choosing the Right Explanation

Imagine you are trying to explain unusually hot weather and consider three possible explanations:

- Personal sensitivity to heat.

- The Earth has moved closer to the sun.

- Climate change, wildfires, etc.

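MLE Approach: MLE asks only which explanation makes the observed heat most likely. It might therefore favor the dramatic explanation (the Earth moving closer to the sun), because that scenario would almost certainly produce extreme temperatures, no matter how improbable the scenario itself is.

MAP Approach: MAP also weighs how plausible each explanation was before seeing the data. Because such cosmic events are extraordinarily rare, it favors a more plausible explanation (climate change, wildfires).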
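The same comparison can be written as a few lines of arithmetic. All probabilities below are hypothetical values chosen only to illustrate the argument, not measurements; the function names are likewise illustrative.

```python
# Hypothetical numbers, chosen only to illustrate the argument.
# likelihood: P(hot weather | explanation)
# prior:      P(explanation) before looking at the data
explanations = {
    "personal sensitivity": {"likelihood": 0.30, "prior": 0.30},
    "earth closer to sun": {"likelihood": 0.99, "prior": 1e-9},
    "climate change, wildfires": {"likelihood": 0.80, "prior": 0.70},
}

def mle_choice(table):
    """Pick the explanation with the highest likelihood, ignoring priors."""
    return max(table, key=lambda name: table[name]["likelihood"])

def map_choice(table):
    """Pick the explanation with the highest likelihood * prior.

    The posterior is proportional to this product; the normalizing
    constant does not change which explanation wins.
    """
    return max(table, key=lambda name: table[name]["likelihood"] * table[name]["prior"])

mle_pick = mle_choice(explanations)
map_pick = map_choice(explanations)
print("MLE picks:", mle_pick)  # MLE picks: earth closer to sun
print("MAP picks:", map_pick)  # MAP picks: climate change, wildfires
```

The tiny prior on the cosmic explanation outweighs its high likelihood once the two are multiplied, which is the role the regularization term plays for large parameter values.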

Why Squared Terms in L2 Regularization?

Mathematical Simplicity: Squaring the terms results in a smooth, differentiable penalty whose gradient is linear in the parameters, which makes it easy to optimize with gradient-based methods.

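Probabilistic Basis: The squared term corresponds to a zero-mean Gaussian prior on the parameters in Bayesian statistics. The negative log of that prior is proportional to the sum of squared parameter values, so minimizing the L2-regularized loss is equivalent to MAP estimation under this prior.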
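The link between the Gaussian prior and the squared penalty can be written out in a few steps. This is a sketch under stated assumptions: the symbols $D$ (the observed data), $\tau^2$ (the prior variance), and $\lambda$ (the regularization strength) are notation introduced here, and the prior is taken to be an isotropic Gaussian.

```latex
\begin{aligned}
\hat{w}_{\text{MAP}}
  &= \arg\max_{w} \; p(D \mid w)\, p(w) \\
  &= \arg\min_{w} \; \bigl[ -\log p(D \mid w) - \log p(w) \bigr], \\
p(w) &= \mathcal{N}(w \mid 0, \tau^2 I)
  \;\Rightarrow\;
  -\log p(w) = \frac{1}{2\tau^2} \sum_i w_i^2 + \text{const}, \\
\hat{w}_{\text{MAP}}
  &= \arg\min_{w} \; \Bigl[ -\log p(D \mid w) + \lambda \sum_i w_i^2 \Bigr],
  \qquad \lambda = \frac{1}{2\tau^2}.
\end{aligned}
```

A narrower prior (smaller $\tau^2$) gives a larger $\lambda$ and stronger shrinkage, while letting $\tau^2 \to \infty$ removes the penalty and recovers the MLE.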

Conclusion

L2 Regularization is a crucial technique in machine learning that balances model fit and complexity. By adding a squared penalty term to the loss function, it effectively manages overfitting and improves generalization. This approach is not just a heuristic but has a solid probabilistic foundation that supports its effectiveness.