Hi everyone!

Today, I’m going to show you a simple approach to collecting data from many websites, and how to build a web scraper that behaves much like a human using a browser.

A scraper like this can even handle many of the scraping jobs posted on freelance sites like Upwork.

Web scraping has evolved a lot because of AI, especially in 2024.

In the past, large companies like Amazon or Walmart had to invest a lot of time and money in scraping data from other websites so they could keep their prices competitive.

They did this by mimicking what a browser does: sending requests to fetch a website’s HTML, then using custom parsing code to locate and extract the information they needed.

This was tricky because every website is structured differently, and whenever a site changed its layout, the scraper broke. As a result, companies had to keep spending time maintaining and updating their scrapers.
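To make that fragility concrete, here is a minimal sketch of the traditional approach using only Python's standard library. The HTML snippets and the `price` and `product-cost` class names are made up for illustration, and a real scraper would first download the page over HTTP.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collects the text of every <span> whose class list includes one hard-coded name."""

    def __init__(self, target_class: str) -> None:
        super().__init__()
        self.target_class = target_class
        self._inside = False
        self.prices: list[str] = []

    def handle_starttag(self, tag, attrs):
        # Attributes without a value arrive as None, so fall back to "".
        classes = (dict(attrs).get("class") or "").split()
        if tag == "span" and self.target_class in classes:
            self._inside = True

    def handle_endtag(self, tag):
        if tag == "span":
            self._inside = False

    def handle_data(self, data):
        if self._inside and data.strip():
            self.prices.append(data.strip())


def scrape_prices(html: str, target_class: str = "price") -> list[str]:
    parser = PriceParser(target_class)
    parser.feed(html)
    return parser.prices


old_layout = '<div><span class="price">$19.99</span><span class="price">$5.49</span></div>'
# Same data after a redesign: only the class name changed.
new_layout = '<div><span class="product-cost">$19.99</span><span class="product-cost">$5.49</span></div>'

print(scrape_prices(old_layout))  # ['$19.99', '$5.49']
print(scrape_prices(new_layout))  # [] because the hard-coded class no longer matches
```

Nothing in the page's content changed, yet the scraper silently returns nothing. Multiply that by every site you track and you get the maintenance burden described above.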

Imagine Amazon wants to keep an eye on what Walmart charges for the same items. To do this, Amazon would need a scraper built specifically for Walmart’s site.

But if Walmart changed its site, Amazon would have to update the scraper.

This is time-consuming and expensive.

It’s not just large enterprises that need scrapers.

If you visit freelance websites like Upwork, you’ll see thousands of small businesses hiring people to build scrapers for tasks like gathering contact data, tracking prices, doing market research, or collecting job postings.

For example, a small business might need to monitor product prices across several e-commerce platforms to set its own prices.

Before AI, it was hard and expensive for small firms to access these solutions.

Now, with the help of large language models (LLMs) and new tools, it’s a lot easier and cheaper to build web scrapers.

What used to take a developer a week to build can now be done in just a few hours. LLMs can interpret different page structures flexibly, so you don’t need to keep rewriting scrapers for every small change.

Let’s talk about how to scrape data effectively and handle different kinds of websites, from simple ones to highly complex ones.

I’ll break this down into three groups:

• Simple public websites
• Websites that need more complex interactions
• Advanced scenarios that require smart agents

1. Scraping Simple Public Websites:

Simple public websites are pages like Wikipedia or company websites that don’t require you to log in or pay.

These sites can still be tricky because their layouts vary, but large language models have made this work much easier.

Let’s say you need to collect information about different plants from Wikipedia for a school project.

In the past, you would need to look at the HTML code of each page, find the tags with the data you need, and then write custom code to get that data.

Doing this for every page would be a lot of work.

But now, with LLMs, you can just give the raw HTML to the AI and it can extract the data for you.

You can even tell it exactly what data you need, like “get the plant’s name, description, and care tips,” and the AI will give you a neatly organized answer.

This saves a lot of time and effort.
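Here is a minimal sketch of that idea in Python. The `call_llm` parameter stands in for whichever LLM client you use (it is a hypothetical hook, not any specific library's API), and `fake_llm` returns a canned reply so the example runs offline. The plant page and the field names are illustrative.

```python
import json
from typing import Callable

FIELDS = ["name", "description", "care_tips"]


def build_prompt(raw_html: str, fields: list[str]) -> str:
    """Ask the model for one JSON object with exactly the requested keys."""
    return (
        "Extract the following fields from the HTML below and reply with a "
        f"single JSON object using exactly these keys: {', '.join(fields)}. "
        "Use null for anything you cannot find.\n\n"
        f"HTML:\n{raw_html}"
    )


def extract(raw_html: str, fields: list[str], call_llm: Callable[[str], str]) -> dict:
    """Send the prompt to an LLM and keep only the requested keys from its JSON reply."""
    reply = call_llm(build_prompt(raw_html, fields))
    data = json.loads(reply)  # raises ValueError if the reply is not valid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object from the model")
    return {key: data.get(key) for key in fields}


# Hypothetical stand-in for a real LLM call, so the sketch runs without network access.
def fake_llm(prompt: str) -> str:
    return json.dumps({
        "name": "Monstera deliciosa",
        "description": "A tropical climbing plant with split leaves.",
        "care_tips": "Bright indirect light; water when the top soil is dry.",
        "extra": "ignored by extract()",
    })


page = "<html><body><h1>Monstera deliciosa</h1><p>A tropical climbing plant.</p></body></html>"
print(extract(page, FIELDS, fake_llm))
```

Asking for fixed keys and then filtering the reply keeps the output predictable: every page yields the same columns, even when the model adds something extra or misses a field.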

LLMs are also good at figuring out where information is if you don’t know the exact page it’s on.

For example, if you are looking for contact information on a company website but aren’t sure which page has it, an AI-powered scraper can search through the site’s pages until it finds what you need. It’s like having a helper that knows where to click and what to read.
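The "search until you find it" part can be sketched as a plain breadth-first crawl. In this sketch, `fetch` is any function that returns a page's HTML (or `None`), the site is a hypothetical in-memory dict so it runs offline, and a regular expression stands in for the AI's judgment about what counts as contact information. An LLM-powered scraper would replace that regex check and could also decide which links look most promising to follow first.

```python
import re
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.parse import urljoin, urlparse

# Rough email pattern; an LLM would judge the surrounding context as well.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self.links.append(href)


def find_contact(
    start_url: str,
    fetch: Callable[[str], Optional[str]],
    max_pages: int = 50,
) -> Optional[tuple[str, str]]:
    """Breadth-first crawl of one site; return (page_url, email) for the first page with an email."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited = 0
    while queue and visited < max_pages:
        url = queue.popleft()
        visited += 1
        html = fetch(url)
        if html is None:
            continue
        match = EMAIL_RE.search(html)
        if match:
            return url, match.group()
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.links:
            # Resolve relative links and drop #fragments so each page is queued once.
            absolute = urljoin(url, href).split("#")[0]
            # Stay on the same host; external and mailto: links are skipped.
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return None


# A tiny hypothetical site held in memory so the sketch runs offline.
SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="https://other.org/">Partner</a>',
    "https://example.com/about": '<p>Our story.</p><a href="/contact">Contact us</a>',
    "https://example.com/contact": '<p>Write to <a href="mailto:hello@example.com">hello@example.com</a></p>',
}

print(find_contact("https://example.com/", SITE.get))
```

The `max_pages` cap keeps a crawl from wandering through a large site forever; in practice you would also add a delay between real requests.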

2. Scraping Websites with Complex Interactions:

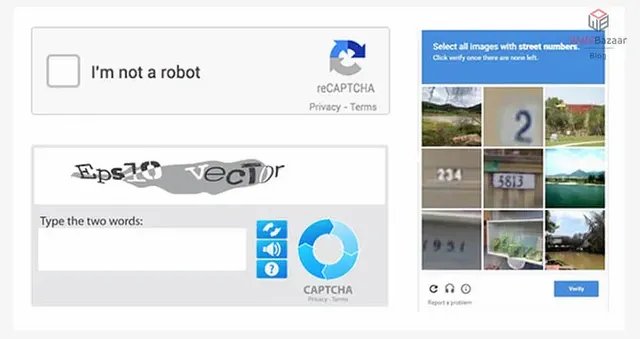

Some websites are harder to scrape because they need you to interact with them — like logging in, solving CAPTCHAs, or clicking past pop-ups.

Think of news websites that need you to log in to see the articles. This is where tools like Selenium, Puppeteer, and Playwright help.

These tools were originally made for testing websites, but now they are used to simulate how a real person would use a website.

Imagine you want to scrape articles from a news site like The New York Times. The articles are behind a paywall, so you need to log in first.

You can use tools like Playwright or Selenium to make the scraper log in for you, click through pop-ups, and access the articles.

But even with these tools, it can still be tricky to make the scraper interact with every button or input box on the page.

This is where AgentQL comes in handy.

AgentQL helps the scraper find the right elements on a webpage, like buttons and forms, so it knows what to interact with and what to do next.

For example, if you want to collect job listings from multiple job boards, AgentQL can help your scraper find the login form, fill it in, and navigate to the job postings.

This means you can collect a lot of job listings in just a few minutes without doing any manual work.
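To show what the "find the right element" step involves, here is a hand-written heuristic in plain Python. It is not AgentQL's API: it scans a login form and guesses which fields are the username, password, and submit button from their attributes. The sample HTML and the keyword list are assumptions.

```python
from html.parser import HTMLParser

USERNAME_HINTS = ("email", "user", "login")  # assumed keywords, not exhaustive


class FormElementCollector(HTMLParser):
    """Records every <input> and <button> tag with its attributes, in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.elements: list[tuple[str, dict]] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("input", "button"):
            # Attributes without a value (e.g. "required") arrive as None.
            self.elements.append((tag, {k: (v or "") for k, v in attrs}))


def to_selector(tag: str, attrs: dict) -> str:
    """Build a simple CSS selector, preferring id, then name, then the bare tag."""
    if attrs.get("id"):
        return f"#{attrs['id']}"
    if attrs.get("name"):
        return f'{tag}[name="{attrs["name"]}"]'
    return tag


def describe(attrs: dict) -> str:
    """Join the attributes a person would read to guess what a field is for."""
    keys = ("type", "name", "id", "placeholder", "aria-label")
    return " ".join(attrs.get(k, "") for k in keys).lower()


def locate_login_fields(html: str) -> dict[str, str]:
    """Guess CSS selectors for the username field, password field, and submit button."""
    collector = FormElementCollector()
    collector.feed(html)
    found: dict[str, str] = {}
    for tag, attrs in collector.elements:
        if attrs.get("type") == "hidden":
            continue  # hidden inputs (e.g. CSRF tokens) are not filled in by a user
        text = describe(attrs)
        if tag == "button" or attrs.get("type") == "submit":
            found.setdefault("submit", to_selector(tag, attrs))
        elif "password" in text:
            found.setdefault("password", to_selector(tag, attrs))
        elif any(hint in text for hint in USERNAME_HINTS):
            found.setdefault("username", to_selector(tag, attrs))
    return found


LOGIN_PAGE = """
<form action="/session" method="post">
  <input type="hidden" name="csrf_token" value="abc123">
  <input type="email" id="user-email" placeholder="Email address">
  <input type="password" name="pwd">
  <button class="btn-primary">Sign in</button>
</form>
"""

print(locate_login_fields(LOGIN_PAGE))
```

Selectors like these are what you would then pass to a browser tool, for example Playwright's `page.fill(...)` and `page.click(...)`. A rule-based guess breaks on unusual markup, which is the gap that AI-based element location is meant to close.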

You could even have the scraper put the data into Google Sheets or Airtable, so it’s easy to sort and analyze.

Let’s say you are trying to keep track of software developer job openings on sites like Indeed, Glassdoor, and LinkedIn.

With these tools, you can make the scraper log in, search for jobs, and gather all the details in one place, like a Google Sheet.

This saves you hours of work.
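Gathering everything "in one place" mostly means merging rows from each site and removing duplicates. Here is a minimal sketch, assuming each board's results have already been scraped into dictionaries (the sample listings and column names are made up). It produces CSV, which both Google Sheets and Airtable can import; pushing rows directly through their APIs would need each service's own client and credentials, which are left out here.

```python
import csv
import io

# Hypothetical scraped results; the field names are illustrative.
indeed_jobs = [
    {"title": "Backend Developer", "company": "Acme", "location": "Remote", "url": "https://example.com/jobs/1"},
    {"title": "Frontend Developer", "company": "Globex", "location": "Berlin", "url": "https://example.com/jobs/2"},
]
glassdoor_jobs = [
    # The same posting seen on a second board, so it should appear only once.
    {"title": "Backend Developer", "company": "Acme", "location": "Remote", "url": "https://example.org/listing/99"},
]

COLUMNS = ["source", "title", "company", "location", "url"]


def merge_listings(by_source: dict[str, list[dict]]) -> list[dict]:
    """Flatten listings from several boards, dropping duplicates by (title, company, location)."""
    seen, rows = set(), []
    for source, jobs in by_source.items():
        for job in jobs:
            key = (job["title"].lower(), job["company"].lower(), job["location"].lower())
            if key in seen:
                continue
            seen.add(key)
            rows.append({"source": source, **job})
    return rows


def to_csv(rows: list[dict]) -> str:
    """Render rows as CSV text that a spreadsheet can import."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=COLUMNS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = merge_listings({"Indeed": indeed_jobs, "Glassdoor": glassdoor_jobs})
print(to_csv(rows))
```

Deduplicating on title, company, and location (rather than URL) is a deliberate choice: the same job usually has a different URL on each board.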

3. Advanced Uses That Need Smart Thinking:

The last group involves more open-ended tasks that need decision-making, like finding the cheapest flight to a destination within the next two months or buying a concert ticket within your budget.

These tasks are tough because they require planning and judgment. Tools for them are still new, but several are already being developed.

One such platform is MultiOn, which builds agents that can carry out these kinds of complex tasks on their own.

For example, you could ask the agent to “find and book the cheapest flight from New York to Melbourne in July,” and it would look through different travel websites, compare prices, and book the flight for you.
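An agent like this still has to make one concrete decision at the end: which offer actually satisfies the request. Here is a minimal sketch of that final comparison step, assuming the agent has already collected offers from several sites into a common shape. The site names, dates (including the year), and prices are invented; the hard parts such agents handle, like navigating each site, filling in search forms, and completing the booking, are not shown.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class FlightOffer:
    site: str
    departs: date
    price_usd: float


def cheapest_in_window(offers: list[FlightOffer], start: date, end: date) -> Optional[FlightOffer]:
    """Return the lowest-priced offer departing between start and end (inclusive), or None."""
    eligible = [o for o in offers if start <= o.departs <= end]
    return min(eligible, key=lambda o: o.price_usd, default=None)


# Hypothetical offers gathered from three sites.
offers = [
    FlightOffer("site-a", date(2025, 7, 3), 1240.0),
    FlightOffer("site-b", date(2025, 7, 18), 1105.5),
    FlightOffer("site-c", date(2025, 8, 2), 980.0),  # cheaper, but outside July
]

best = cheapest_in_window(offers, date(2025, 7, 1), date(2025, 7, 31))
print(best.site)  # site-b
```

Keeping the selection rule this explicit makes the agent's choice easy to audit: you can log which offers were considered and why one of them won.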

It’s not perfect yet, but it’s impressive how close these tools are to acting like a real person.

Another example is buying a concert ticket. You could ask an agent “Buy me a ticket for Taylor Swift’s concert for under $100.”

The agent would browse through numerous ticket sites, select a ticket that suits your budget, and complete the transaction.

This technology is still improving, but tools like MultiOn are making it possible to automate even these complex processes.

Practical Tools for Web Scraping

Here are some handy tools if you want to start web scraping with LLMs and agents:

Firecrawl, Jina, and Spider Cloud: These tools help convert web pages into a clean, simplified format that AI models can process more easily. For example, Firecrawl can take a complex restaurant website and turn it into a simple version (such as plain Markdown) that shows only the relevant information, like menu items and prices. This makes it cheaper and faster for AI models to process the information, because they have far less irrelevant markup to read.

AgentQL: This tool lets a scraper interact with webpages much like a person would. For example, if you need to scrape a job board with lots of buttons to click and forms to fill out, AgentQL helps your scraper handle all of that smoothly.

Airtable/Google Sheets Integration: Once your scraper collects data, it’s important to store it in a useful way. Tools like Airtable or Google Sheets can hold the data so you can easily analyze it later. For example, if you are tracking property prices on real estate websites, Google Sheets can help you compare prices and spot trends over time.

Octoparse and ScrapeHero: These tools are particularly good at handling JavaScript-heavy webpages. Octoparse offers prebuilt templates that make it easy to scrape e-commerce websites, and it uses anti-blocking techniques to avoid getting blacklisted. ScrapeHero is well suited to projects that need a lot of data quickly, like collecting prices from many retailers at once.

ScraperAPI and Zyte: These services help keep your scraper from getting blocked by rotating proxies. ScraperAPI lets you customize things like request headers, which is important for targeted scraping. Zyte (formerly Scrapinghub, the company behind the open-source Scrapy framework) is also particularly good at handling large scraping operations and making sure you get the data you need without interruptions.

Mozenda and Web Robots: Mozenda helps automate more complex web forms and also lets you schedule scraping jobs. Web Robots is useful if you want to build your own scraping scripts and export data directly to files like CSV or Excel.
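The page-simplifying services in the first entry above are hosted tools with their own APIs, so they aren't reproduced here. As a rough local illustration of the same idea, here is a sketch that uses Python's standard-library HTML parser to keep only visible text and drop scripts, styles, navigation, and footers before a page goes to a model. The restaurant page and the set of skipped tags are assumptions; hosted services typically do more, for example producing Markdown that preserves headings and links.

```python
from html.parser import HTMLParser

# Assumed list of tags whose contents rarely matter to an LLM.
SKIP_TAGS = {"script", "style", "nav", "footer", "header", "noscript", "svg"}


class TextExtractor(HTMLParser):
    """Keeps visible text and drops anything nested inside SKIP_TAGS."""

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self.skip_depth:
            self.chunks.append(text)


def html_to_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()  # flush any buffered text
    return "\n".join(parser.chunks)


PAGE = """
<html><head><style>body { font-family: serif; }</style>
<script>trackVisitor();</script></head>
<body>
  <nav><a href="/">Home</a> <a href="/menu">Menu</a></nav>
  <h1>Luigi's Trattoria</h1>
  <ul class="menu">
    <li>Margherita pizza - $14</li>
    <li>Lasagna - $18</li>
  </ul>
  <footer>&copy; 2024 Luigi's</footer>
</body></html>
"""

clean = html_to_text(PAGE)
print(clean)
print(f"{len(PAGE)} characters of HTML -> {len(clean)} characters of text")
```

Since LLM APIs usually charge by input size, trimming a page this way before sending it is where the cost and speed savings come from.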

So, in 2024 and 2025, AI is transforming the way we scrape data from websites.

With large language models and tools like AgentQL and Playwright, even complicated sites can be scraped with minimal manual effort.

The best part is that these systems are flexible enough to handle a wide range of tasks, whether that’s gathering company data, finding opportunities, or even booking tickets.

The options for automating web scraping are more powerful and more accessible than ever.

So, whether you’re a small business that needs market data, a freelancer helping a client, or just someone curious to learn, these AI tools make web scraping a powerful and approachable option.
