Articles from this series

- Information science - Introduction

- Information science – Uniqueness and essential questions

- Information science – Philosophical approaches

- Information science – Paradigms

- Information science – Epistemologies

- Information science – What is information?

- Information science - Terminology (Knowledge, Document)

- Information science - Terminology (Collections, Databases, Relevance)

- Information science – Domain

- Information science – Organisations of information

- Information science - Information systems

Main source

Introduction to Information Science - DAVID BAWDEN and LYN ROBINSON

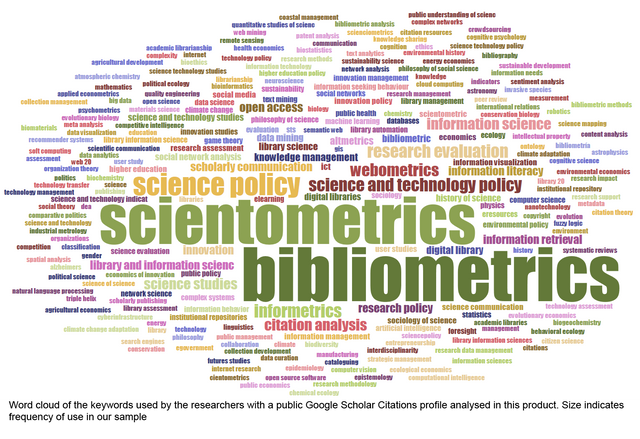

Informetrics

I think it can be objectively stated that mankind isn’t really informed. Socrates would say that we know that we don’t know much. How accurate for a guy who lived more than two thousand years ago. In theory, informetrics is the perfect tool for removing “the unknown”, that is, the entropy. The reality, though, is still a modest one. The next (probably) two articles will deal with the measurement, transmission, and use of information, because that is what informetrics deals with.

“How much information exists?”

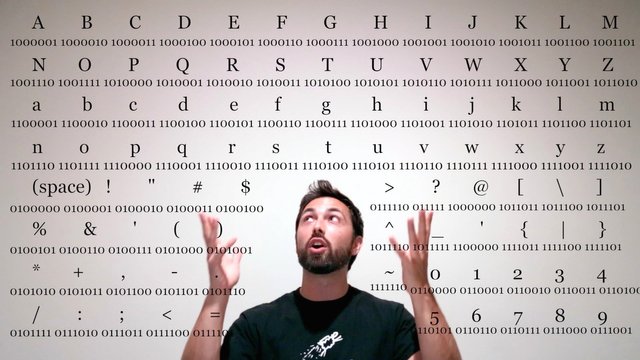

How do we measure information anyway? Before the digital age, it was rather easy: basically, the number of documents equalled the perceived amount of information. That was anything but accurate back then, and with the digital age things got even harder. In 2007 the amount of digitally transmitted information was about 2 zettabytes, while storage capacity was about 300 exabytes. Those numbers are just a reminder meant to help us realize the enormity of the info-universe.

Basic informetrics

Two people stand out from my point of view. Keep in mind that what follows are observations made over decades rather than rigid laws. Also, this information isn’t anything that would destroy our comprehension of reality. It’s just interesting information that shows some patterns in the world of science.

The first one was Alfred Lotka. He studied documents in scientific databases that had a single author. The number of authors who have written two articles is about ¼ of the number who have written only one, the number who have written three is about 1/9, and so on. Around 60% of all the authors in any given database have written only one article. If 1,000 authors in a database had written one article each, about 250 authors would have written 2 articles, 111 would have written 3, 62 would have written 4, and so on. This shows us that in every database only a handful of authors contribute regularly. This phenomenon has been called “the long tail”. Speaking of which…

The second was Samuel Bradford. He too studied scientific sources and their documents. He found that only a handful of sources actually produce the relevant documents. Those sources form a kind of core of the research in any given scientific field. Around 2/3 of all the relevant documents are in those few core sources, while 1/3 is spread across a huge number of sources. This is “the long tail” too, in case anyone wondered.
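
To make Lotka's pattern a bit more tangible, here is a minimal Python sketch of the arithmetic. It assumes the idealized inverse-square rule (authors with n articles = authors with one article divided by n²) and a hypothetical starting figure of 1,000 one-article authors. The function name `lotka_expected_authors` is made up for this illustration, and real databases will only roughly follow these numbers.

```python
import math


def lotka_expected_authors(single_article_authors, max_articles):
    """Expected number of authors with n articles under the idealized 1/n^2 rule."""
    return {
        n: single_article_authors / n**2
        for n in range(1, max_articles + 1)
    }


# Hypothetical example: 1,000 authors who wrote exactly one article.
expected = lotka_expected_authors(1000, 4)
for n, count in expected.items():
    print(f"{n} article(s): about {count:.1f} authors")

# If the rule is extended to every n, the one-article authors make up
# 1 / (1 + 1/4 + 1/9 + ...) = 6 / pi^2 of all authors.
single_share = 6 / math.pi**2
print(f"share of one-article authors: {single_share:.1%}")
```

The last line comes out at roughly 61%, which is where the "around 60% have written only one article" figure fits in: it is what the inverse-square rule implies once all the one-, two-, three-article (and so on) authors are added together.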