This article is an easy introduction to the world of computer vision using artificial neural networks. We will go over some of the basic theory behind CV and dive into the code involved (the full code is available below).

Computer vision is by far one of the most popular use cases for modern machine learning algorithms (along with natural language processing (NLP/NLU) and reinforcement learning (RL)). However, neural networks have only recently broken into mainstream use. In 2012, Krizhevsky et al. beat the previous best on the ImageNet dataset, achieving a new Top-5 error rate of only 15.3% by utilizing new breakthroughs, the most important of which was dropout [1].

Since then, computer vision models have only been getting bigger and more accurate (VGG-19, Inception, etc.). Today, a large enough and properly tuned model with enough data can often reach 95%+ accuracy on multi-class classification problems.

Before we begin we must prepare our machines with data and some packages.

Prerequisites:

Data (we will only be using the apples and tomatoes, a small part of the whole dataset): https://www.kaggle.com/moltean/fruits

Tensorflow: https://www.tensorflow.org/install/

Keras: https://keras.io/#installation

With those, we are ready to begin.

Getting Started

Let us begin by organizing our dataset. Unzip the folder after downloading it and take a look. After opening it, you should see both a train and a test folder. Each contains the same set of directories, one per kind of fruit. For this project, let us use just the golden apple and tomato directories. Combine the golden apple directories into 1 directory, and the first 3 tomato directories into another. This may require renaming the files from the source directories so that file names do not collide (if using a Mac, this can be done easily by highlighting all of the files, right-clicking, and clicking "rename x files"). This should leave you with 1465 apple and 2147 tomato images for training, and 489 apple and 717 tomato images for testing/validation.

Next, to keep the classes balanced, let's reduce the number of tomato images to match the number of apple images for both training and validation. After that, open up your favorite Python IDE (this script can easily be altered to run via the CLI using argparse) and create a new folder called "data", and within that, "train" and "validate". Inside each of those, create a folder for both golden-apple and tomato. These are our binary classes.

Currently, our directory structure should look like this:

project_root

- data
  - train
    - golden-apple
    - tomato
  - validate
    - golden-apple
    - tomato

Now that we've completed this we can move the images and get into the code.
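If you would rather not copy and rename files by hand, the combining and balancing steps described above can be sketched in a few lines of Python. This is only a sketch: `copy_balanced` is a hypothetical helper (not part of the dataset or of Keras), and it assumes every file in the source folders is an image.

```python
import os
import random
import shutil


def copy_balanced(src_dirs, dst_dir, n_images, prefix, seed=0):
    """Copy n_images randomly chosen files from src_dirs into dst_dir.

    Files are renamed to '<prefix>_<index><ext>' so that identically named
    files from different source folders do not overwrite each other.
    """
    os.makedirs(dst_dir, exist_ok=True)
    # gather every file from every source folder
    paths = []
    for src in src_dirs:
        for name in sorted(os.listdir(src)):
            paths.append(os.path.join(src, name))
    # shuffle reproducibly, then keep only the first n_images
    random.Random(seed).shuffle(paths)
    chosen = paths[:n_images]
    for i, path in enumerate(chosen):
        ext = os.path.splitext(path)[1]
        shutil.copy(path, os.path.join(dst_dir, '%s_%d%s' % (prefix, i, ext)))
    return len(chosen)
```

For example, calling it with the 3 tomato source folders, 'data/train/tomato' as the destination, and 1465 as the image count would fill the tomato training folder with the same number of images as the apple one. The source folder paths depend on where you unzipped the dataset.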

Importing Modules and Data Prep

We begin by importing our modules (note: if you are having trouble with Keras, refer to the installation docs above):

    from keras.models import Sequential
    from keras.layers import Conv2D, MaxPooling2D
    from keras.layers import Activation, Dropout, Flatten, Dense
    from keras.preprocessing.image import ImageDataGenerator
    from keras.callbacks import TensorBoard, ModelCheckpoint

Because we are using comparatively little data, we have to use some tricks to get the most out of our dataset (albeit likely unnecessary for such a simple problem). We do this via image augmentation:

    batch_size = 32

    # augmenting our images during training to offset small dataset
    train_datagen = ImageDataGenerator(
        rescale=1./255,
        shear_range=0.2,
        zoom_range=0.2,
        horizontal_flip=True)

    # we do not augment the images during validation
    test_datagen = ImageDataGenerator(rescale=1./255)

    train_generator = train_datagen.flow_from_directory(
        'data/train',  # source dir
        target_size=(100, 100),  # resizing images to 100x100 (square)
        batch_size=batch_size,
        class_mode='binary')  # using binary labels

    validation_generator = test_datagen.flow_from_directory(
        'data/validate',
        target_size=(100, 100),
        batch_size=batch_size,
        class_mode='binary')

Keras is great for what we are doing here because it allows us to interact with the data and models at a high level (without having to deal directly with graphs and gradients as in TensorFlow). The lines above simply apply a few augmentations to our input data and create the generators we will train from. Now we are ready to start building our model.
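To get a feel for what two of those augmentation options do, here is a minimal pure-Python sketch of rescaling and random horizontal flipping on a tiny made-up "image". It is not how Keras implements them internally (Keras works on NumPy arrays, and shear and zoom are left out here); `rescale` and `random_horizontal_flip` are illustrative names only.

```python
import random


def rescale(image, factor=1.0 / 255):
    """Multiply every pixel by factor, mapping 0-255 values into 0-1."""
    return [[pixel * factor for pixel in row] for row in image]


def random_horizontal_flip(image, rng):
    """Mirror the image left-to-right with probability 0.5."""
    if rng.random() < 0.5:
        return [list(reversed(row)) for row in image]
    return [list(row) for row in image]


# a tiny 2x3 single-channel "image" with 0-255 pixel values (made up)
image = [[0, 128, 255],
         [255, 128, 0]]

rng = random.Random(0)
# flip first, then rescale
augmented = rescale(random_horizontal_flip(image, rng))
print(augmented)
```

Because the flip is random, the network sees slightly different versions of the same picture from epoch to epoch, which is what lets a small dataset go further.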

Building the Model

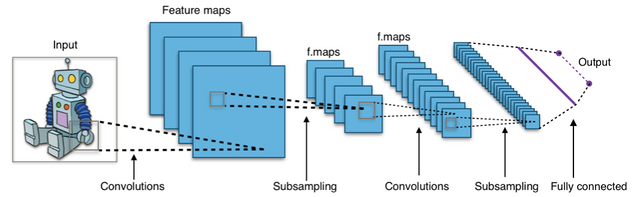

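At a high level, our model will consist of an input layer, followed by multiple convolution layers, then fully connected layers and a sigmoid output layer (to do the binary classification). We will use a ReLU activation:

$$\mathrm{ReLU}(x) = \max(0, x)$$

and Adam optimization (update rule):

$$m_t = \beta_1 m_{t-1} + (1 - \beta_1) g_t, \qquad v_t = \beta_2 v_{t-1} + (1 - \beta_2) g_t^2$$

$$\hat{m}_t = \frac{m_t}{1 - \beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1 - \beta_2^t}, \qquad \theta_t = \theta_{t-1} - \alpha \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}$$

where $g_t$ is the gradient at step $t$, $\theta$ are the model weights, $\alpha$ is the learning rate, and $\beta_1$, $\beta_2$, $\epsilon$ are constants.

Here is the code: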

#constructing our model

model = Sequential()

model.add(Conv2D(32, (3, 3), input_shape=(3, 100, 100)))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

#max pooling to downsample

model.add(Conv2D(32, (3, 3)))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Conv2D(64, (3, 3)))

model.add(Activation('relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Flatten()) # 3d feature maps --> 1d feature vectors

model.add(Dense(64))

model.add(Activation('relu'))

model.add(Dropout(0.5))

model.add(Dense(64))

model.add(Activation('relu'))

model.add(Dropout(0.5))

model.add(Dense(1))

model.add(Activation('sigmoid'))

model.compile(loss='binary_crossentropy',

optimizer='rmsprop',

metrics=['accuracy'])

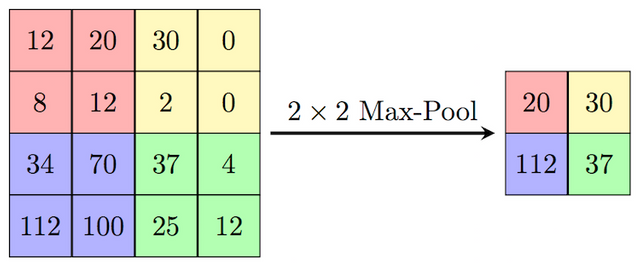

As you can see we create a sequential model with an input layer of shape (3,100,100) - 3 channel rgb, 100px height x 100px width. Each layer has a ReLU activation, followed by a 2x2 MaxPooling layer. An important thing to realize is what max pooling does.

Looking at the image above, we can see that we are simply taking the max of each 2x2 region of the 4x4 matrix and creating a new matrix from those values. This is done to downsample and reduce dimensionality (loosely similar to something like PCA for tabular data).
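The same idea in a few lines of plain Python, as a sketch rather than what Keras actually runs: `max_pool_2x2` pools a small matrix (the values are made up, not taken from the figure), and `trace_spatial_size` follows the side length of a 100px image through three blocks of 3x3 convolution (no padding) and 2x2 pooling. Both function names are illustrative.

```python
def max_pool_2x2(matrix):
    """2x2 max pooling with stride 2 on a 2D list with even height and width."""
    pooled = []
    for r in range(0, len(matrix), 2):
        row = []
        for c in range(0, len(matrix[0]), 2):
            # keep the largest value in the 2x2 window starting at (r, c)
            window = [matrix[r][c], matrix[r][c + 1],
                      matrix[r + 1][c], matrix[r + 1][c + 1]]
            row.append(max(window))
        pooled.append(row)
    return pooled


def trace_spatial_size(size, n_blocks=3, kernel=3, pool=2):
    """Side length after each (unpadded convolution + pooling) block."""
    sizes = []
    for _ in range(n_blocks):
        size = size - kernel + 1  # unpadded convolution trims the border
        size = size // pool       # pooling halves the size, rounding down
        sizes.append(size)
    return sizes


matrix = [[1, 3, 2, 1],
          [4, 6, 5, 0],
          [7, 2, 9, 8],
          [1, 0, 3, 4]]
print(max_pool_2x2(matrix))     # [[6, 5], [7, 9]]
print(trace_spatial_size(100))  # [49, 23, 10]
```

So each pooling step keeps only a quarter of the values, and by the time we flatten, a 100x100 image has shrunk to a 10x10 grid per filter.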

At the end, we tack on some fully connected layers, followed by our output, which has, as mentioned previously, a sigmoid activation ($\sigma(x) = \frac{1}{1 + e^{-x}}$).
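For reference, the two activations used in the model, ReLU in the hidden layers and sigmoid at the output, can be written out by hand. This is just a scalar sketch for intuition; Keras applies the same functions element-wise to whole tensors.

```python
import math


def relu(x):
    """Pass positive values through unchanged, clamp negatives to zero."""
    return max(0.0, x)


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # equivalent form that avoids overflow for very negative x
    e = math.exp(x)
    return e / (1.0 + e)


for x in (-2.0, 0.0, 2.0):
    print(x, relu(x), round(sigmoid(x), 3))
# -2.0 0.0 0.119
# 0.0 0.0 0.5
# 2.0 2.0 0.881
```

Since the sigmoid output always lies between 0 and 1, we can read it as the probability of the image belonging to the second class.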

Training our Model

Now that we have completed building it, it's time to train it up.

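    # save the best model so far to model.h5, the file loaded for prediction later
    # ('val_acc' is the metric name in older Keras; newer releases name it 'val_accuracy')
    checkpoint = ModelCheckpoint(filepath='model.h5', monitor='val_acc', mode='auto', save_best_only=True)
    # log training to the "logs" directory for TensorBoard
    tensor = TensorBoard(write_grads=True, write_graph=True, write_images=True, log_dir='logs')

    model.fit_generator(
        train_generator,
        steps_per_epoch=2930 // batch_size,  # 2930 training images
        epochs=15,
        validation_data=validation_generator,
        validation_steps=978 // batch_size,  # 978 validation images
        callbacks=[tensor, checkpoint])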

Create a directory called "logs" in the project directory; this is where the TensorBoard logs will be saved. Above, we can see that we are creating 2 Keras callbacks (TensorBoard and ModelCheckpoint). TensorBoard can be used to visualize model parameters, as well as get in-depth feedback about how your model is doing (see: https://www.tensorflow.org/guide/summaries_and_tensorboard). ModelCheckpoint saves the model during training; with save_best_only=True it only overwrites the saved file when the monitored validation accuracy improves.
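To make the save_best_only behaviour concrete, here is a rough sketch of the rule in plain Python. It is not the Keras implementation, and the accuracy values are made up for illustration; `epochs_that_save` is a hypothetical helper.

```python
def epochs_that_save(val_acc_history):
    """Return the 1-based epochs at which a 'save best only' rule would save."""
    best = float('-inf')
    saved = []
    for epoch, val_acc in enumerate(val_acc_history, start=1):
        if val_acc > best:  # strictly better than anything seen so far
            best = val_acc
            saved.append(epoch)
    return saved


# made-up validation accuracies, one per epoch
history = [0.91, 0.95, 0.94, 0.98, 0.98]
print(epochs_that_save(history))  # [1, 2, 4]
```

Epochs 3 and 5 do not improve on the best value so far, so the file on disk is left alone.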

We then fit the data using our 2 generators. 2930 and 978 are the numbers of training and validation images, respectively; dividing each by the batch size gives the number of batches per epoch. We train for 15 epochs.
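The step counts are just integer arithmetic on those image counts. A quick sketch, using the numbers quoted in the text and the batch size of 32 from earlier:

```python
import math

batch_size = 32
n_train, n_val = 2930, 978  # image counts quoted in the text

# floor division: number of full batches per epoch
train_steps = n_train // batch_size  # 91
val_steps = n_val // batch_size      # 30

# images that do not fit into a full batch
train_leftover = n_train - train_steps * batch_size  # 18
val_leftover = n_val - val_steps * batch_size        # 18

# rounding up instead would cover every image with one smaller final batch
train_steps_all = math.ceil(n_train / batch_size)  # 92
val_steps_all = math.ceil(n_val / batch_size)      # 31

print(train_steps, val_steps, train_leftover, val_leftover)
```

Floor division is the simpler choice and costs us 18 images per pass here, which hardly matters for a problem this easy.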

We can see that our validation accuracy hits 100%! This is because our problem is very simple: the classes are easy to tell apart and the backgrounds have been removed. A much tougher task would involve more varied images in which the object has to be spotted against some background. Even so, this model could likely achieve 80%+ with a similar number of harder samples.

Now to predict:

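    from keras.models import load_model
    from keras.preprocessing.image import img_to_array, load_img

    # load the image at the size the model was trained on
    img = load_img('10_100.jpg', target_size=(100, 100))
    # apply the same 1/255 rescaling used by the generators
    img = img_to_array(img) / 255.
    # add a batch dimension: (100, 100, 3) -> (1, 100, 100, 3)
    img = img.reshape((1,) + img.shape)
    print(img.shape)

    model = load_model('model.h5')
    pred = model.predict(img)
    print(pred)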

---->

[[1.]]
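The number that comes out is the sigmoid output, so it still has to be turned into a class name. A small sketch of that step follows; `label_from_probability` is a hypothetical helper, and the class mapping is an assumption based on the generator ordering the class folders alphabetically (check train_generator.class_indices for the real mapping).

```python
def label_from_probability(prob, class_indices, threshold=0.5):
    """Map a sigmoid output to a class name using the class-to-index mapping."""
    index_to_name = {index: name for name, index in class_indices.items()}
    return index_to_name[1 if prob > threshold else 0]


# assumed mapping (alphabetical folder order); verify with train_generator.class_indices
class_indices = {'golden-apple': 0, 'tomato': 1}

print(label_from_probability(1.0, class_indices))   # tomato
print(label_from_probability(0.02, class_indices))  # golden-apple
```

Under that assumed mapping, an output of 1 means the model is confident it is looking at a tomato.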

As an extension, perhaps try this same problem with harder classes, or try multi-class classification (using softmax). Be sure to let me know if you liked this tutorial, as well as what you would like to see next (I am thinking reinforcement learning). Thanks!

1: https://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf

A good read on the latest CV architecture by Hinton et al.: https://arxiv.org/abs/1710.09829
