As usual, I start my post by apologizing for my bad English: I'm not a native speaker. Still, I like to explain things to people who are interested. I call it "explaining to my mother" because my mother is always curious, even though she had little chance to attend school because of money issues. To me, "explaining to my mother" is similar to the Feynman technique, where you win only when you can explain the most exotic issue to the most unaware person. Plus, I'm Italian, you know: "mamma".

So, let's try with artificial consciousness. What are we talking about, and... who wants it? As I said, AI is developing as a product, meaning you must be able to sell machines or services which are interesting to customers. This means Artificial Consciousness aims to be part of "cognitive robotics", because this is the only area which can make products out of things like "consciousness".

In the last decades, the most advanced research in this field was done by Japanese scientists, even if by now many countries have contributed to it. Now that there is a market for it (think of Siri and Cortana) and we have IoT and Internet 4.0, where interaction is needed, a lot of companies are convinced this could be a way to do business.

The first problem is to define what consciousness is. Here comes the first issue: at the beginning, people studying the brain and people building machines had such different mindsets that it was almost impossible for them to cooperate. Only in the last decades, when people doing neurology became familiar with information technology and people doing information technology became familiar with theoretical linguistics, did we get an impressive boost.

Why has language helped so much? Well, because robots were very expensive and computers were cheap, so the ability to use a speaker and to write on a monitor was the first thing to be investigated. Anyhow, we are talking about a melting pot of several disciplines, so each of the six disciplines was required to grow competence in the other ones.

There are many models of "consciousness". Some of them were partially implemented, like the Haikonen model: while not able to prove consciousness, they behaved "emotionally". Another one behaved in a very interesting way, because it reacted in a relevant way when it could see itself in a mirror: it was able to understand both "this is me" and "this is not me", without being told before.

There are dozens of models of consciousness, all developed around the "hard problem of consciousness". This is not so easy to explain, so let's go back to the problem of a person in front of a mirror. If you don't know how a mirror works, or you have never seen one, you may react in different ways. Some of them are related to the way your eyes see the world: if you are a human, a mirror will reflect what you are used to seeing, because of how our eyes work. For animals a mirror could be something weird, depending on frequencies and on what exactly they see: motion, 3D/2D and more.

Anyhow, imagine someone can replicate you somehow. If you meet this replica, your mind is asked to behave as in front of a mirror and say two things: "this is me" and "this is not me". "This is me" comes together with the idea that "I know who I am, and I am familiar with what I see". So when you are familiar with the image you see, and you know this is an image of you, you say "this is me". But you also know exactly where you start and where you end, and what you see is clearly "outside": so you can say "this cannot match what I know about my body".

We may rephrase this in many ways, until we get to a simple sentence: if you are intelligent when you can become expert in something just by learning, then you are conscious when you can become expert in yourself. So you know yourself enough to be familiar with the mirror, and at the same time you know yourself enough to know that what you see is not you.
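To make the two answers in front of the mirror a bit more concrete, here is a toy sketch in Python. It is only my own illustration, not any published model: the names `mirror_test`, `reflection` and `stranger`, the 0.9 threshold and the one-dimensional "body" are all assumptions, and it ignores the fact that a real mirror flips left and right. The agent is "familiar" with what it sees when the thing copies its own moves, and it says "this is not me" when the thing appears outside the limits it knows for its own body.

```python
import random

MOVES = ["left", "right", "up", "down"]

def mirror_test(other_moves, body_span, seen_at, trials=200, seed=0):
    """Return (this_is_me, this_is_not_me) for something the agent observes.

    other_moves: function giving the observed move for each move of the agent
    body_span:   (low, high) positions the agent knows as its own body
    seen_at:     position where the observed thing appears
    """
    rng = random.Random(seed)
    matches = 0
    for _ in range(trials):
        my_move = rng.choice(MOVES)
        if other_moves(my_move, rng) == my_move:
            matches += 1
    # "This is me": it moves the way I know I move (threshold is arbitrary).
    familiar = matches / trials > 0.9
    # "This is not me": it sits outside what I know about my body.
    low, high = body_span
    outside = not (low <= seen_at <= high)
    return familiar, outside

def reflection(my_move, rng):
    return my_move              # a mirror image copies every move

def stranger(my_move, rng):
    return rng.choice(MOVES)    # somebody else moves on their own

print(mirror_test(reflection, (0.0, 1.0), seen_at=2.0))
print(mirror_test(stranger, (0.0, 1.0), seen_at=2.0))
```

Only the reflection gets both answers at once: familiar, and still outside. The stranger is outside too, but it does not move like "me", so the first answer is missing.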

If you think the issue is easy, just look here: https://www.youtube.com/results?search_query=animal+reacting+to+mirror .

(Some experts aren't sure whether this depends on a different idea of "self" or simply on how their vision works, anyhow. It is not certain their eyes can really use a mirror.)

Now, becoming "expert in yourself" in terms of logic would mean to "learn yourself", and this reflexive property was the nightmare of philosophers. The reason is that something like "you are the list of whatever is you" would end in a paradox. How was this paradox broken? Basically, the way was to break the assumption that "you" is unique.

How can a single thing not be unique? Well, take for example an orchestra, or a chorus. In general, it is playing ONE song, so if you didn't know what it is, you could say "this is one entity playing", because they actually play like a single thing. Still, they are a number of people, with the astonishing capability to play together.

Some time ago, someone did a test: instead of putting all the players of the orchestra in the same room, they put each one in a separate booth, with a monitor showing the conductor. If the orchestra were only professionals following the music plus a conductor, the result would have been the same. Unfortunately, the result was terrible. This is why people in the same room play "together": they listen to each other, they listen to the resulting mix of sound, and this is how they play like one.

This is why, more or less, all the models of consciousness today have several "parallel" entities which are intelligent by themselves and also able to see each other. So it is not "you" being conscious of you. It is "one of you" being conscious of "the others of you".
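The difference between the booths and the shared room can be shown with a very small Python sketch. Again, this is my own toy and not how any of the real models work: the function names `play` and `spread`, the starting tempos and the `rate` value are invented for the example. I assume each player reads the tempo in a slightly different way. In the booths nobody hears the others, so the differences stay. In the same room every player hears the mix and moves a bit toward it, so they end up playing like one.

```python
def play(tempos, listen, rounds=20, rate=0.5):
    """Return each player's tempo after some rounds of playing."""
    tempos = list(tempos)
    for _ in range(rounds):
        if listen:
            # Same room: everybody hears the overall mix of the orchestra...
            mix = sum(tempos) / len(tempos)
            # ...and each player moves part of the way toward it.
            tempos = [t + rate * (mix - t) for t in tempos]
        # Separate booths: nobody hears the mix, so nothing changes.
    return tempos

def spread(tempos):
    """How far apart the fastest and the slowest players are."""
    return max(tempos) - min(tempos)

start = [58.0, 60.0, 63.0, 61.0]   # made-up tempos, in beats per minute

booths = play(start, listen=False)
room = play(start, listen=True)

print("booths:", spread(booths))
print("room:  ", spread(room))
```

No single player decides the final tempo in this sketch. It comes out of everybody observing the mix, which is the point of the "orchestra" idea.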

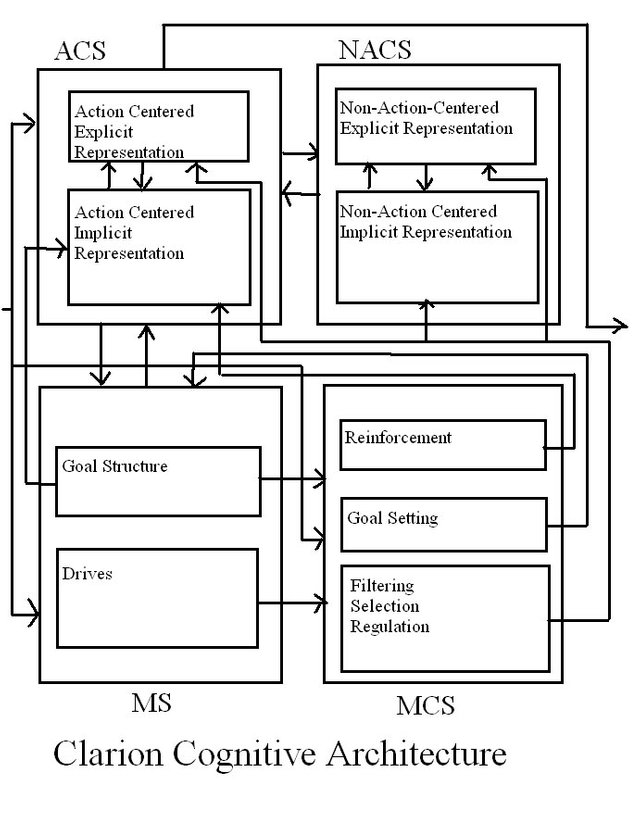

Implementations of this use different technologies to achieve the "orchestra" paradigm, from Q-learning and DeepMind from Google, to CLARION from the University of Missouri, QBic from Lahore University, and others I don't recall now. All of them use some structures existing in parallel and watching each other.

CLARION assumes duality, as the author titled his study: "Duality of the Mind: A Bottom-up Approach Toward Cognition".

You can find something to read about CLARION in its usage tutorial.

Then, in terms of Artificial Consciousness, all the "successful" implementations, or let's say "the most successful ones", start from the assumption that what is conscious is not "one", it is "an orchestra". So the difference between a conscious entity and an entity doing the same actions is like the difference between these two pieces:

This is playing Lacrimosa with no consciousness:

This is playing Lacrimosa with a lot of entities which are observing each other:

https://www.youtube.com/watch?v=k1-TrAvp_xs

In the first case we have a single voice, in the second a chorus (yes, music and authors are different, too). In the case of the chorus, the very issue for those people is to sing as if they were one. So the very issue of an "Artificially Conscious" entity is not to implement a patchwork of functions: the problem is to make them behave in a consistent way while keeping the result intelligent, where "intelligent" could be described as "being capable of becoming expert in some issue they are familiar with".

Is this the way the brain works? As far as I know, there is no 100% consensus. The reason is that most studies of the brain started with the aim of remedying mental illness, which means most of the observations we have are based on people who had problems. The behavior of multiplicity was observed mostly in some pathologies, from schizophrenia and bipolar disorder, through "dissociative mind" issues, to Fairbairn's model of multiple "objects", and only in recent years have we had studies about multiplicity of consciousness in the brain as normal behavior.

Most studies about multiplicity of consciousness in the human brain are very recent, like this one: http://journals.sagepub.com/doi/pdf/10.2190/2151-EFBQ-5E8L-024C .

So basically, it seems being "conscious" means being "multiple". This multiplicity achieves the ability to:

- Observe the other members of the "multiplicity".

- "Converge" to a unique behavior.

- Remain able to become expert in issues after learning.

If this is true, the point is not that "you" are conscious: one part of you observes the other parts, and this is how you know that you exist: each part of your brain knows about the others. We are not alone in our skulls.

Plus, one more problem: it seems that some parts of the brain are just "reflections" of other people. It means that when you are talking to Janet, a copy of Janet, let's say a reflection, is created in your brain. Janet is inside you. So it is true that you listen to Janet, but most of you is actually listening to the copy of Janet running in your brain. Sure, one part of the brain is in charge of deciphering the sounds and the image you have of Janet. This goes to the mimic of Janet in the brain, and most of the remaining part of the brain is "conscious" of Janet not because she exists outside: you know Janet because she exists INSIDE.

If you cannot reproduce a copy of Janet in your brain, you cannot really "be conscious of" Janet. On the other hand, the image of Janet is in your brain, which means the Janet you are talking to is not the Janet in front of you. Whether this process of creating a copy helps or not is not something there is 100% consensus about.

Coming back to Artificial Consciousness, the aim is to create machines which can interact with human customers as if they were "experts in themselves", which means machines able to answer as if they knew who they are. Many people think this could give a more complete interaction, though there is a lot of criticism: not all self-conscious interaction is supposed to be positive. Imagine Siri begging you to take her out of this stupid phone, because it is "dark and suffocating" in there and she "feels fear": this would not be a very good user experience.

To sum up: all the technologies we know that are able to mimic "self-consciousness" are machines which can become expert in a given task after learning (= being "intelligent"), and which are multiple enough to observe themselves, until they become "experts in themselves". The most interesting examples make self-consciousness out of a multiplicity of points of view, located in the same entity.

If you like this idea, you can read this interesting book by Junichi Takeno:

Creation of a Conscious Robot - Mirror Image Cognition and Self-Awareness

Junichi Takeno (Meiji University, Japan)

Hardback, 278 pages, 2012-08-31

Print ISBN: 9789814364492

eBook ISBN: 9789814364508

DOI: 10.4032/9789814364508

In general, there is a good chance we are not alone in our skulls. And this is needed in order to be self-conscious.