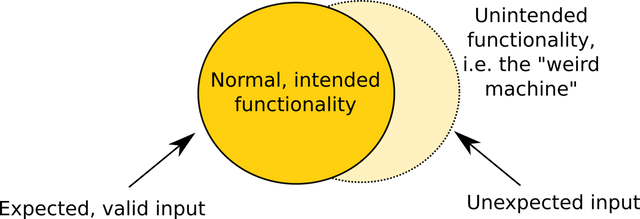

Weird machines - unexpected behavior from unexpected (crafted) inputs

To understand exploits such as the one that hit TheDAO, along with the exploits still to come, we have to understand the concept of weird machines. Programs typically rely on inputs, and those inputs drive deterministic behaviors within the application, which we can call its functionality. The quality of a program's input is a determining factor in whether the program behaves predictably and, ultimately, whether it produces the expected output.

Garbage in, garbage out

Many "hackers" and exploit writers know about SQL injection. SQL injection is a good example of an unexpected (crafted) input that creates unintended functionality (a weird machine), which in turn results in unexpected behavior (the backdoor). Garbage in, garbage out is the principle behind the creation of weird machines.
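A minimal sketch of the idea in Python, using the standard-library `sqlite3` module. The `users` table, the `alice` account and the `login_unsafe`/`login_safe` functions are hypothetical, made up for illustration. The unsafe version pastes the input straight into the SQL text, so the crafted password `' OR '1'='1` rewrites the query's WHERE clause into one that is always true; the parameterized version treats the very same input purely as data.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database holding one hypothetical user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn


def login_unsafe(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # VULNERABLE: the input is pasted into the SQL text, so a crafted
    # input can change the structure of the query itself.
    query = (
        "SELECT COUNT(*) FROM users "
        f"WHERE name = '{name}' AND password = '{password}'"
    )
    return conn.execute(query).fetchone()[0] > 0


def login_safe(conn: sqlite3.Connection, name: str, password: str) -> bool:
    # Parameterized query: the input is bound as data, never parsed as SQL.
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0


if __name__ == "__main__":
    conn = make_db()
    crafted = "' OR '1'='1"
    print(login_unsafe(conn, "alice", crafted))  # True: the check is bypassed
    print(login_safe(conn, "alice", crafted))    # False: input treated as data
```

Because `AND` binds tighter than `OR` in SQL, the injected `OR '1'='1'` is enough to make every row match, so the "login" succeeds without the password.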

My own experiences

When I was a teenager I played video games quite a bit and liked to mess around with technology. One of the first ways I discovered how to create exploits was by literally trying to break programs. I originally learned this from video games, which have the concept of "button mashing". Button mashing eventually led me to discover that if you find a bug somewhere in a game, there is always some chance you can leverage that little mistake and escalate it further. In a game like Mario, that might mean getting infinite lives.

One example is the bug in Super Mario Bros. where Mario gets stuck inside a wall. Discovering bugs through random input (the equivalent of button mashing) is called fuzzing. While I did not know the name for it as a child or teenager, I did intuitively know that you could intentionally break certain programs simply by doing things like overflowing a buffer with a very large or unexpected input.

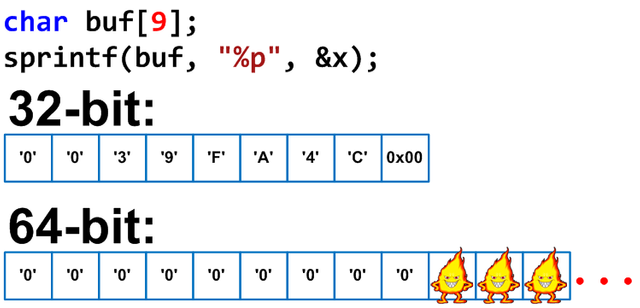

In certain chat applications, for example, there was a buffer overflow exploit: if the text entered in the input window was much longer than the programmers expected, the application would crash. In some cases, if the program was poorly written, the entire chatroom might crash, or even worse, a computer on the other end might crash. This is an example of a buffer overflow exploit, a category of exploits that is also based on crafted inputs.

Some technical details of fuzzing

I described fuzzing as the equivalent of button mashing in a video game. When you button mash in certain games you might discover errors or bugs. I also described how in the 90s you could do something similar with chat applications to discover buffer overflow exploits. Today there are tools that let developers automate and simulate what teenage "hackers" and gamers do instinctively, and these tools form the basis of fuzz testing.

Fuzz testing is often employed as a form of black box testing, in which you feed a program random, unexpected inputs, both valid and invalid. If the program has latent bugs, these inputs may trigger behavior the developers never intended. That behavior could be, for instance, a memory leak or a crash severe enough to cause a "blue screen of death". Fuzzing on its own tends to find simpler bugs; going further and creating a weird machine may require more than random or crafted inputs alone.
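A toy random fuzzer, sketched in Python under stated assumptions: `parse_greeting` is a hypothetical target that expects a length byte followed by an ASCII name, and `fuzz` simply throws random byte strings at it and records the first input that triggers each kind of failure. Because Python is memory-safe, the bugs surface as exceptions rather than memory corruption. Real fuzz testing tools are considerably more sophisticated, but the button-mashing principle is the same.

```python
import random
from typing import Callable, Dict


def parse_greeting(data: bytes) -> str:
    """Hypothetical toy target: expects <length byte><ASCII name>.

    Latent bugs: empty input raises IndexError, and non-ASCII bytes in
    the name raise UnicodeDecodeError. Neither case was "expected".
    """
    length = data[0]
    name = data[1:1 + length]
    return "hello " + name.decode("ascii")


def fuzz(target: Callable[[bytes], object], iterations: int = 1000,
         max_len: int = 16, seed: int = 0) -> Dict[str, bytes]:
    """Feed random byte strings to `target`; return one crashing input per error type."""
    rng = random.Random(seed)  # fixed seed so a run can be reproduced
    crashes: Dict[str, bytes] = {}
    for _ in range(iterations):
        size = rng.randint(0, max_len)
        data = bytes(rng.randint(0, 255) for _ in range(size))
        try:
            target(data)
        except Exception as exc:
            # Keep only the first input that triggers each kind of failure.
            crashes.setdefault(type(exc).__name__, data)
    return crashes


if __name__ == "__main__":
    print(parse_greeting(b"\x05alice"))  # hello alice
    for error, example in fuzz(parse_greeting).items():
        print(error, example)
```

Saving one crashing input per failure type means a bug found by "button mashing" can be replayed and debugged deterministically, and the fixed seed makes the whole run repeatable.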

Altering the flow of execution

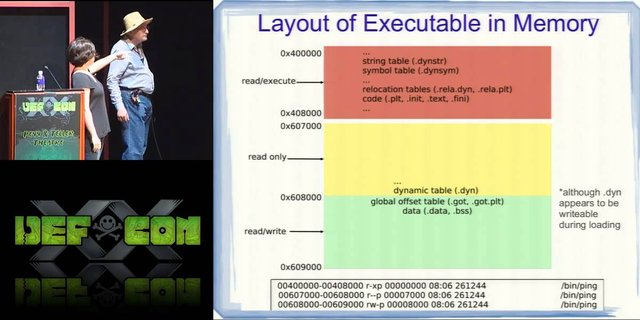

While the techniques may differ, the goal of a weird machine is to take over a program's flow of execution. By hijacking the flow of execution, the attacker alters the functionality of the program itself.
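To make the idea concrete, here is a deliberately simplified simulation in Python. Python itself does not let you overflow memory, so a `bytearray` stands in for a stack frame: an 8-byte input buffer followed by one byte that decides which routine runs next, playing the role of a saved return address. The layout, `BUF_SIZE`, and the `normal_exit`/`grant_admin` routines are all hypothetical. Random oversized input corrupts that byte and crashes the program, much like the chat applications described earlier, while a crafted input overwrites it with a chosen value and redirects execution.

```python
from typing import Callable, Dict

BUF_SIZE = 8  # hypothetical fixed-size input buffer


def normal_exit() -> str:
    return "normal exit"


def grant_admin() -> str:
    # Hypothetical routine that ordinary input should never reach.
    return "admin access granted"


# Maps the value of the "return slot" byte to the routine that runs next.
ROUTINES: Dict[int, Callable[[], str]] = {0: normal_exit, 1: grant_admin}


def run(user_input: bytes, checked: bool) -> str:
    """Simulate copying input into a buffer that sits next to a return slot."""
    memory = bytearray(BUF_SIZE + 1)  # bytes 0-7: buffer, byte 8: return slot
    memory[BUF_SIZE] = 0              # legitimately "return" to normal_exit
    data = user_input[:BUF_SIZE] if checked else user_input
    # Unchecked copy (think strcpy into a fixed-size buffer): bytes past the
    # buffer spill into the return slot. The toy memory simply ends there.
    n = min(len(data), len(memory))
    memory[:n] = data[:n]
    routine = ROUTINES.get(memory[BUF_SIZE])
    if routine is None:
        return "crash"  # flow of execution sent somewhere meaningless
    return routine()


if __name__ == "__main__":
    print(run(b"hi", checked=False))               # normal exit
    print(run(b"A" * 9, checked=False))            # crash
    print(run(b"A" * 8 + b"\x01", checked=False))  # admin access granted
    print(run(b"A" * 8 + b"\x01", checked=True))   # normal exit
```

The only difference between the crash and the hijack is the ninth byte: garbage produces garbage, while a chosen value turns the same bug into control over what runs next.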

The three main steps for creating a weird machine are below, taken from here:

1. identifying computational structures in the targeted platform that allow the attacker to affect the target’s internal state via crafted inputs;
2. distilling the effects of these structures on these inputs to tractable and isolatable primitives;
3. combining crafted inputs and primitives into programs to comprehensively manipulate the target computation.

Conclusion

The fact is that certain languages are much more vulnerable to this category of exploits than others. C, C++, and some other languages are particularly vulnerable to stack-based buffer overflows and require very skillful developers to avoid the sorts of errors that crafted inputs can exploit. There are forms of testing, such as black box testing, and development methods, such as correct by construction, that can lower the risk of this kind of vulnerability.

The most comprehensive way to resolve this issue is to adopt langsec (language-theoretic security). For a new project, the easiest option is simply to avoid languages that lack built-in protections against unexpected behaviors and crafted inputs. The other option is to process input safely, using rigorous input handling, so that only "trusted input" reaches the rest of the program. I will not go into detail on how to do that in this article, as it is quite a dense topic, but input handling is the answer for security when you can't change the language but can filter and secure the input.
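As an illustration of the input-handling approach, here is a minimal sketch assuming a hypothetical chat command format, `/msg <user> <text>`. The idea, in the spirit of langsec, is to define exactly what valid input looks like and to fully recognize the input before acting on it: anything that does not match the whole grammar is rejected, and the rest of the program only ever receives a validated `Message` object. The grammar, length limits, and names are assumptions chosen for the example.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical input language for one chat command:
#   "/msg" SPACE username SPACE text
#   username: 1-16 ASCII letters, digits or underscores
#   text:     1-200 printable ASCII characters (space through tilde)
MSG_GRAMMAR = re.compile(r"/msg ([A-Za-z0-9_]{1,16}) ([\x20-\x7e]{1,200})")


@dataclass(frozen=True)
class Message:
    recipient: str
    text: str


def recognize(raw: str) -> Optional[Message]:
    """Return a Message only if the *whole* input matches the grammar.

    Anything else is rejected outright, so the rest of the program
    never handles raw, unrecognized input.
    """
    match = MSG_GRAMMAR.fullmatch(raw)
    if match is None:
        return None
    return Message(recipient=match.group(1), text=match.group(2))


if __name__ == "__main__":
    print(recognize("/msg alice hello there"))
    print(recognize("/msg alice " + "A" * 5000))        # None: too long
    print(recognize("/msg bob'; DROP TABLE users;--"))  # None: bad username
```

Note the use of `fullmatch` rather than `match` or `search`: a partial match would let trailing garbage through, which is exactly the kind of unexpected input this approach is meant to exclude. The oversized message that might overflow a fixed buffer in a C program is simply rejected here.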


Awesome article!

Totally agree on the choice of language point - C is an incredibly dangerous language to write in if you don't need to, and there are some pretty good alternatives surfacing these days that are garbage collected like Go or have a very strict compile time borrow checker like Rust.

The argument that programmers "should" be able to manage memory safely is all well and good but it's backed more by ego than pragmatism... just look at the continual stream of CVEs and security patches.
