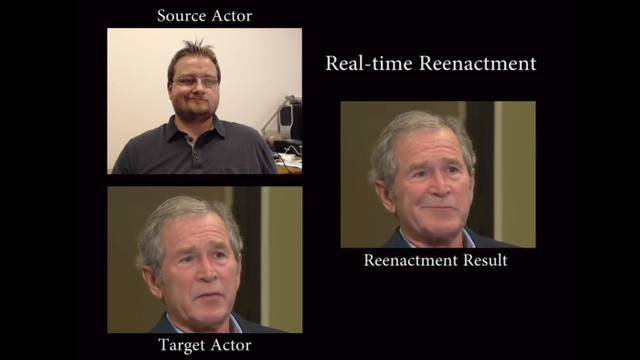

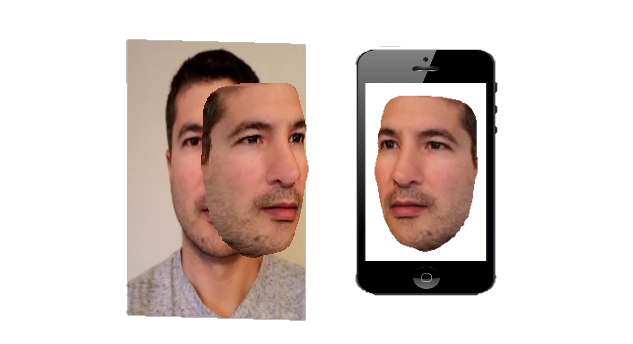

Technology is reaching a point where it can nearly create fake video and audio content in real-time from handheld devices like smartphones.

In the near future, you will be able to FaceTime someone and hold a real-time conversation with more than just bunny ears or some other cartoon overlay. Imagine appearing as a person of your choosing, living or deceased, with a forged voice and facial expressions to match.

Image Source: http://niessnerlab.org/projects/thies2016face.html

It could be very entertaining for innocent use. I can only imagine the number of fake Elvis calls. Conversely, it could be a devastating tool for those with malicious intent: a call to accounting from the ‘boss’ demanding that a check be cut immediately to an overseas company as part of a CEO Fraud, or your manager calling to get your password for access to sensitive files needed for an urgent customer meeting.

Will digital media forgery undermine trust, amplify ‘fake’ news, be leveraged for massive fraud, and shake the pillars of digital evidence? Will there be a trust crisis?

Tools are being developed to identify fakes, but like all cybersecurity endeavors it is a constant race between the forgers who strive for realism and those attempting to detect counterfeits. Giorgio Patrini has some interesting thoughts on the matter in his blog Commoditisation of AI, digital forgery and the end of trust: how we can fix it. I recommend reading it.

Although I don’t share the same concerns as the author, I do think we will see two advancements which will lead to a shift in expectations.

Technical Advancements

- The fidelity of fake voice + video will increase to the point that humans will not be able to discern the difference between authentic and fake. We are getting much closer. The algorithms are making leaps forward at an accelerating pace to forge the interactive identity of someone else.

- The ability to create such fakes in real-time will allow complete interaction between a masquerading attacker and the victims. If holding a conversation becomes possible across broadly available devices, like smartphones, then we would have an effective tool on a massive scale for potential misuse.

Three-dimensional facial models can be created from just a few pictures of someone. Advanced technologies are overlaying digital faces, replacing those of the people in videos. These clips, dubbed “Deep Fakes”, are cropping up to face-swap famous people into less-than-flattering videos. Recent research shows how AI systems can mimic voices from just a small amount of sample audio. Facial expressions can be aligned with audio, combining for a more seamless experience. Quality can be superb in major motion pictures, where this is painstakingly accomplished in post-production. But what if it could be done on everyone’s smartphone at a quality sufficient to fool victims?

Expectations Shift

If these two technical capabilities continue along this trajectory, the result will be a loss of confidence in voice and video conversations. As people learn not to trust what they see and hear, they will require other means of assurance. This is a natural response and a good adaptation. In situations where it is truly important to validate who you are conversing with, additional authentication steps will be required. Options will span technical, process, and behavioral controls, or a combination thereof, to provide multiple factors of verification, similar to how account logins can use two-factor authentication.
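As an illustration of one such additional step, here is a minimal sketch of an out-of-band challenge-response check in Python. It assumes the two parties agreed on a secret in person beforehand; the secret, the function names, and the choice to truncate the response to 8 hex characters (so it can be read aloud during a call) are hypothetical choices for this example, not an established protocol. A forged face and voice alone would not let an impostor produce the right response.

```python
import hashlib
import hmac
import secrets

# Hypothetical secret, assumed to have been agreed in person (never over the
# voice/video channel an impostor could be sitting on).
SHARED_SECRET = b"agreed-in-person-not-over-the-phone"


def make_challenge() -> str:
    """The person receiving the call generates a fresh, unpredictable challenge."""
    return secrets.token_hex(8)  # 16 hex characters


def respond(secret: bytes, challenge: str) -> str:
    """The caller proves knowledge of the secret without saying it aloud."""
    mac = hmac.new(secret, challenge.encode(), hashlib.sha256)
    return mac.hexdigest()[:8]  # truncated so it can be read over the call


def verify(secret: bytes, challenge: str, response: str) -> bool:
    """Constant-time comparison of the expected and received responses."""
    return hmac.compare_digest(respond(secret, challenge), response)


if __name__ == "__main__":
    challenge = make_challenge()
    answer = respond(SHARED_SECRET, challenge)       # computed on the caller's device
    print(verify(SHARED_SECRET, challenge, answer))  # True
```

Truncating to 8 hex characters leaves only 32 bits, a deliberate trade of strength for convenience; a fresh challenge per call keeps a recorded answer from being replayed.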

As those methods become commonplace and a barrier to attackers, systems and techniques will be developed to undermine those controls as well. The race never ends. Successful attacks lead to a loss in confidence, which results in a response to institute more controls to restore trust, and the game begins anew.

Trust is always in jeopardy, both in the real and digital worlds. Finding ways to verify and authenticate people is part of the expected reaction to situations where confidence is undermined. Impersonation has been around since before antiquity. Society will adapt to these new digital trust challenges with better tools and processes, but the question remains: how fast?

Interested in more? Follow me on your favorite social sites for insights and what is going on in cybersecurity: LinkedIn, Twitter (@Matt_Rosenquist), YouTube, InfoSecurity Strategy blog, Medium, and Steemit

...then what if we take it one step further and simply have an AI control the entire interaction? The next generation of robo-calls, marketing video calls, interactive advertising, spam, phishing, and browser ads. I don't want to browse to a website and have a video 'conference' with an AI persona trying to sell me something in the ad bar!

And if the AI knows you, it could pick the avatar, voice, accent, and speaking style that would be most influential to you specifically! That is an ugly evolution of marketing.

Great points. More clearly defined trust levels, and what they mean, will have to be adopted and confirmed too. If not, this will get ugly. I agree that multifactor methods we can trust will be a necessity. :)

Another reason why I think we need an exocortex. I do see the same problem you see. I don't see any top down solution to it.

Are you talking about a brain-computer interface (BCI)? Whoa, and I was just worried about computers, cars, and toasters being hacked. What malicious things could someone do if they could hack a BCI? Mind-boggling.

As for fake communications, the problem has existed for centuries. We can easily add certs, hashes, ID/auth to digital communications for validation. The tech and tools for trust are possible, but will they be integrated and used?
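A minimal sketch of the "hashes" part, assuming the original publisher makes a SHA-256 fingerprint of a recording available through a separate channel the recipient already trusts. The function names are hypothetical. Note the limitation: a matching hash only shows the bytes are unchanged from what was fingerprinted; binding that fingerprint to a particular person is what certificates and digital signatures add, and those are not shown here.

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest (hex) of the exact bytes of a recording or message."""
    return hashlib.sha256(data).hexdigest()


def matches_published(data: bytes, published_hex: str) -> bool:
    """Check received bytes against a fingerprint obtained over a trusted channel."""
    # compare_digest avoids leaking, through timing, how many characters matched
    return hmac.compare_digest(fingerprint(data), published_hex.strip().lower())


if __name__ == "__main__":
    original = b"...bytes of the original video file..."
    published = fingerprint(original)  # shared out-of-band by the sender
    tampered = original.replace(b"original", b"altered")
    print(matches_published(original, published))  # True
    print(matches_published(tampered, published))  # False
```

Any single changed byte produces a completely different digest, which is why even a subtle edit to a clip is detectable this way, provided the published fingerprint itself can be trusted.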

When I refer to an exocortex I am not referring to BCI. BCI is merely a communication interface, and an exocortex does not require it; in my opinion the interface isn't the mind. I also don't think the brain itself is the mind, so BCI merely connects the brain to the computer, but what matters in my opinion is what the computer can do. So we could use a website like this one, a smartphone, or anything else as the interface, and I expect the interface technology will evolve over time.

The concepts are on my blog. I think the biggest issue is the lack of wisdom and I think we can use some of these decentralized technologies to provide what I call a wisdom engine. A wisdom engine is a function of an exocortex. An exocortex in my opinion has to exist because a human brain is biologically limited and not suited to scale with the complexity of our society.

Details are in these blog posts for you to review and comment on:

Isn't the risk of such capabilities that we become heavily dependent upon them? There is an axiom in cybersecurity: the value of technology capabilities is proportional to accompanying increases in risks.

For example, if an exocortex is created with all these tremendous capabilities, we will likely become heavily dependent upon it. Attacks which compromise, deny, or expose it for malicious manipulation therefore become more attractive to malevolent parties.

In my latest post I identified the problem we face as a societal complexity explosion. In mathematics this would be combinatorial complexity: the more hyper-connected we become to each other, the more complicated our interactions and social lives become. I compared evolving technology to adding new pieces and squares to a chess board, where every additional piece and square increases the complexity of our interactions.
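One way to make the combinatorial point concrete (an illustration added here, counting only one-to-one links and borrowing the 150 and 5,000 figures that come up in this thread): among n people there are n(n-1)/2 possible pairwise relationships, so the count grows roughly with the square of the group size.

```latex
\binom{n}{2} = \frac{n(n-1)}{2}, \qquad
\binom{150}{2} = \frac{150 \cdot 149}{2} = 11{,}175, \qquad
\binom{5000}{2} = \frac{5000 \cdot 4999}{2} = 12{,}497{,}500
```

A group about 33 times larger has about 1,118 times as many possible pairs, before even counting interactions among three or more people.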

So when you say there is a risk that we will become heavily dependent on an exocortex I think it's already too late. Society already is complex beyond the point which any particular human brain can grasp. Can any of us besides the best lawyers make the claim that we even know every federal law on the books (excluding state laws)? Even if we know these laws can we then claim we truly have an understanding of these laws including the probability of aggressive enforcement? Without that ability we cannot properly assess risk.

Let's move beyond the law, how about terms of service agreements? How many of us have the attention span to read and understand these agreements?

Let's move beyond legal to social: how many of us actually have the social capacity to meet the expectations of 5000 friends? People have 5000 friends on Facebook whom they barely know, yet each of them gets to see their timeline and judge their every word.

So do you see my point here? It's already too late. We live in a society where most people don't even memorize their own phone number or other people's phone numbers anymore. We live in a society where people ask their smartphone for directions rather than applying their own spatial intelligence. We live in a society where people use spell check. Most importantly, we live in a society of judgment, of personal responsibility, of mass incarceration, and of public shaming, all of which require exceptional levels of wisdom to avoid. The risks a person faces of negative socioeconomic outcomes are rising as the technology improves.

The technology puts increasing restraint on behavior, on speech, on even the ability to think. I don't really have the ability to reverse these trends but an exocortex is about surviving the changing trends. The purpose is to allow the individual in society to build their capacity for resilience and to allow for them to pursue wisdom in the face of obstacles known and unknown.

You are very correct that these exocortex technologies will be attacked, which is why I'm very concerned that all the current attempts to build them seem to revolve around centralized companies where the data is controlled by the company. If Facebook, for example, is going to offer intelligent agents and similar technology, do we have to worry about all of it being compromised if Facebook is compromised? My idea is to create security through diversity: to decentralize and generalize the technology so that users do not have to rely on any particular company to provide exocortex functionality. This would be like the choice between running your own email server, paying a company to host your email, or going with Gmail. You can do any of these, or all three, so an attacker cannot simply hack your Gmail account, or get a job at Google, and leak everything.

I don't have all the solutions of course so feel free to review and leave commentary. If you have a way to make it more secure I'm open minded. Also if you have a way where we can avoid depending on these technologies heavily I'm also open minded. I personally don't see any other way to scale myself up with societal complexity (more laws, more rules, more norms, more relationships, more information to process and evaluate), as my brain capacity is finite.

I agree it is already 'too late' for some aspects. Heck, I don't remember anyone's phone number anymore, but we still must manage the risk of this being used against us. Pushing forward without a plan or mitigating controls seems hazardous and short-sighted.

So, how do we identify the optimal risks and put compensating controls in place to mitigate as we continue down this path?

It's being used against us right now. That is one of the topics I'm concerned about. Actually put another way, our cognitive limitations are exploited by social engineers, hackers, scammers, and bad actors.

If the risk emerges from "wise" hackers exploiting cognitive limitations then the only mitigation I've been able to find is to figure out how to reduce that attack surface. For example social engineers, or psy ops using memes, they know very well that the human brain relies on stereotypes as a short cut to reduce cognitive load. They may not know the neuroscience behind this but they intuitively exploit it and if it's a foreign government behind the information operations then for sure they know what they are doing.

The problem is there is no viable immune system for this. I'm basically required to be ignorant and susceptible to disinformation, to make decisions with cognitive biases, and to make decisions under attention scarcity, and businesses thrive on this. I will not name the businesses, but I'm sure you can think of some which use marketing in such a way that profit can be extracted by exploiting these cognitive limitations.

In my opinion, doing a risk assessment together (globally, collaboratively) requires building the platform in the first place. Otherwise we will have to rely on small groups of experts, many of whom may be compromised, to devise top-down solutions on which we may not get the chance to give our input or discuss. I would suggest we use decentralized technologies specifically to produce wisdom-building tools, so that we can do collaborative risk assessment and collaboratively design the very platform itself using the platform we are designing. In the engineering sense this would be a self-optimizing design. Even Steem has some of these capabilities to a degree, although Steem lacks the capacity for wisdom building: it does not include a shared knowledge base or the symbolic reasoning AI which, in my opinion, will be necessary to boost decision making. Steem's iterative design improvement relies on high technical expertise, which ultimately results in less collaborative, more centralized decision making.

So I'm definitely not someone in favor of pushing forward without a plan, on the engineering level we require a specification, whether it be formal or informal. I do not think we can plan for everything though which is why the design has to be iterative, adaptable, capable of being upgraded over time as we learn more, become more wise, etc. So a wisdom engine or whatever we could call it, as it produces results we could use those results to in theory improve the wisdom engine continuously.

In my references I include two examples of self-optimizing logic designs. Theoretically speaking, I agree with the approach of using the platform itself to continuously improve the design of the platform, provided that such a platform can be built. The idea is that upon initial construction we don't really know the answers to the tough questions, such as the ones you ask, so we must use the platform to ask those questions in the appropriate way in order to receive the answers. Changes can go into effect if agreement can be reached through the agreed-upon mechanism. Most important of all, public sentiment is in my opinion critical to decisions which affect stakeholders, so in terms of putting controls in place, this would again need to be a data-driven process where we have some idea of what people's values are and factor them into the safety decisions somehow. Thanks for asking tough questions, because that is exactly what is needed to build something great and keep it beneficial.

References

One more thing to consider. It may be the 'wise' attackers who develop the inroads to exploitation, but those methods will then be disseminated to less technical users, basically anyone, to leverage. That's when the problem scales unimaginably.

This is one of the problems with AI and cybersecurity that I work on. Adoption of AI could be a turning point for efficiency and effectiveness across just about all facets of mankind, but if the same tools we embrace are leveraged for malice, then we have created a tool for our demise. How do we proceed so that we gain the benefits and still mitigate the risks to an acceptable level?

I think the issue goes beyond just trust. The problems I refer to, just in case you don't want to read each blog post, are:

In my blog I show that all of these problems trace back to neuroscience. The biological limits of the brain mean that wisdom (and morality) doesn't scale. So having greater transparency without wisdom, and without the capacity to be moral, is likely a disaster. I also argue that the idea of creating a sort of small-town atmosphere can't scale if the human brain is only capable of managing about 150 relationships. The small-town metaphor can't work on something like Steem, because a community has traditionally been about 150 people, not the 10,000 or 1 million people we see online.

Fake sockpuppets, fake communications, etc. are side-effect problems that result from these biological limits. Because I don't have the ability to examine everyone to determine who I can trust, it's just a flood of information coming at me. It will only get worse until we develop an ability to filter, and an exocortex (the concept I describe) can help with this.

As for the technical requirements: in its simplest form, an exocortex just needs Alice to have bots. These bots are agents; Alice would simply ask them questions and delegate tasks to them. The bots form a network and could seek answers, services, or solutions from other bots or from humans. Such bots can be built on Steemit even now, so that part is not technically sophisticated.
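To make the delegation idea concrete, here is a minimal in-memory sketch in Python. The `Bot` class, its `skills` and `peers` fields, and the `delegate` helper are hypothetical names for illustration; nothing here uses the Steem API, and a real bot network would need identity, authentication, and trust decisions that this toy omits. Each bot answers what it can, forwards the rest to its peers while tracking which bots have already been asked (so cycles in the network do not loop forever), and the request is escalated to a human when no bot can help.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Bot:
    """Hypothetical agent: answers topics it has a skill for, delegates the rest."""
    name: str
    skills: dict[str, Callable[[str], str]] = field(default_factory=dict)
    peers: list["Bot"] = field(default_factory=list)

    def ask(self, topic: str, query: str,
            visited: Optional[set[str]] = None) -> Optional[str]:
        visited = set() if visited is None else visited
        if self.name in visited:
            return None  # already consulted: avoid looping in the network
        visited.add(self.name)
        if topic in self.skills:
            return f"{self.name}: {self.skills[topic](query)}"
        for peer in self.peers:  # depth-first search through the bot network
            answer = peer.ask(topic, query, visited)
            if answer is not None:
                return answer
        return None


def delegate(bot: Bot, topic: str, query: str) -> str:
    """Alice's entry point: escalate to a human when no bot can help."""
    answer = bot.ask(topic, query)
    return answer if answer is not None else f"escalate to a human: {topic}"


if __name__ == "__main__":
    units = Bot("units-bot", skills={"convert": lambda q: f"{float(q) * 2.54:.1f} cm"})
    assistant = Bot("alice-assistant", peers=[units])
    units.peers.append(assistant)                  # a cycle, handled by `visited`
    print(delegate(assistant, "convert", "10"))    # units-bot: 25.4 cm
    print(delegate(assistant, "tax-law", "..."))   # escalate to a human: tax-law
```

Depth-first delegation is just the simplest choice for a sketch; a real network might rank peers by reputation or ask several in parallel.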

Alice would also require access to a shared knowledge base as well as her own personal knowledge base, so that a knowledge economy can form. This part requires linked data, RDF, a reasoner, etc. The Cyc project is the closest thing to it, but this is by far the most technically challenging part: the ability to actually create knowledge, structuring the data in a way that symbolic-style (logic-based) AI can reason over it.
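For a flavor of what "a reasoner over linked data" means, here is a toy sketch in plain Python rather than a real RDF toolkit or Cyc. Facts are (subject, predicate, object) triples, Alice's personal facts are merged with a shared set, and a naive forward-chaining loop applies two RDFS-style rules (subclass transitivity and type inheritance) until nothing new is derived. The predicate strings and example facts are made up for illustration.

```python
Triple = tuple[str, str, str]


def infer(facts: set[Triple]) -> set[Triple]:
    """Naive forward chaining to a fixed point using two RDFS-style rules."""
    known = set(facts)
    while True:
        derived: set[Triple] = set()
        for a, p1, b in known:
            for c, p2, d in known:
                if b != c or p2 != "subClassOf":
                    continue
                if p1 == "subClassOf":
                    # A subClassOf B, B subClassOf D  =>  A subClassOf D
                    derived.add((a, "subClassOf", d))
                elif p1 == "type":
                    # x type B, B subClassOf D  =>  x type D
                    derived.add((a, "type", d))
        if derived <= known:  # nothing new: fixed point reached
            return known
        known |= derived


if __name__ == "__main__":
    shared = {("Deepfake", "subClassOf", "SyntheticMedia"),
              ("SyntheticMedia", "subClassOf", "Media")}
    personal = {("clip42", "type", "Deepfake")}  # Alice's own observation
    kb = infer(shared | personal)
    print(("clip42", "type", "Media") in kb)     # True
```

The nested loop is quadratic per pass, which is fine for a toy but is exactly why production reasoners use indexed, rule-specific algorithms.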

Alice requires privacy: the ability to control her data, her knowledge base, etc. This privacy component may be possible through homomorphic encryption, though in my blog I just called it advanced cryptography. Theoretically it is possible, but performance will remain very slow until CPU power improves.
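To illustrate what computing on encrypted data looks like, here is a toy sketch of the Paillier cryptosystem in Python. This is a swapped-in, simpler technique: Paillier is only additively (partially) homomorphic, not the fully homomorphic encryption the comment refers to, and it is not what any particular project uses. The tiny primes make it completely insecure; they are there only so the arithmetic is easy to follow. Someone holding only ciphertexts can add the hidden values without learning them; only the key holder can decrypt the sum.

```python
import math
import secrets

# Toy primes chosen only so the numbers stay readable; this offers NO security.
P, Q = 1009, 1013


def keygen(p: int = P, q: int = Q) -> tuple[int, tuple[int, int]]:
    """Return the public modulus n and the private pair (lambda, mu)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)  # requires Python 3.9+
    mu = pow(lam, -1, n)          # valid shortcut because we fix g = n + 1
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    """Encrypt 0 <= m < n as g^m * r^n mod n^2 with a random r coprime to n."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n: int, priv: tuple[int, int], c: int) -> int:
    lam, mu = priv
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n  # L(x) = (x - 1) / n, then multiply by mu


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying two ciphertexts yields an encryption of the sum (mod n)."""
    return (c1 * c2) % (n * n)


if __name__ == "__main__":
    n, priv = keygen()
    total = add_encrypted(n, encrypt(n, 20), encrypt(n, 22))  # no key needed here
    print(decrypt(n, priv, total))  # 42
```

Even this partial scheme hints at the performance concern in the comment: every encryption is a modular exponentiation, and real key sizes make that far more expensive than these toy numbers suggest.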

All in all I think it can be built but the funding currently isn't there. More focus is going into small picture projects with some exceptions. Enigma is trying to solve the privacy part by building the homomorphic encryption I speak of and they have pretty good funding.

Absolutely right. Nowadays technology is reaching a point where it can nearly create fake video and audio content. It will be difficult to find good content on any platform. If this continues, good content will not be found in the future...

On the upside, it might just make for more entertaining content. Sketch routines that include live interviews with long deceased personalities (Elvis, Einstein, Lincoln, etc.). Could be used for educational purposes, etc. My biggest concern is around fraud and misleading people to their detriment.

People should also be looking at the lighting and shadows in the video, whether all of the elements featured in the frame are the right size, and whether the audio is synced perfectly, said Mandy Jenkins, from social news company Storyful, which specializes in verifying news content.
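One of those checks, audio sync, can be roughly quantified. The sketch below assumes you already have two per-frame signals, such as audio loudness and a mouth-opening measure from a face tracker (extracting them is not shown, and both are hypothetical inputs). It searches a small range of frame shifts for the one where the two signals correlate best. A best shift far from zero hints at dubbed or generated audio, though, as the next reply notes, tomorrow's fakes may well pass such a test.

```python
from statistics import mean


def best_lag(audio: list[float], mouth: list[float], max_lag: int) -> int:
    """Return the frame shift in [-max_lag, max_lag] where the signals correlate best.

    A positive result means the mouth signal trails the audio by that many frames.
    """

    def corr_at(lag: int) -> float:
        # Pair audio[i] with mouth[i + lag] wherever both indices are valid.
        pairs = [(audio[i], mouth[i + lag])
                 for i in range(len(audio)) if 0 <= i + lag < len(mouth)]
        if len(pairs) < 2:
            return float("-inf")
        xs = [x for x, _ in pairs]
        ys = [y for _, y in pairs]
        mx, my = mean(xs), mean(ys)
        num = sum((x - mx) * (y - my) for x, y in pairs)
        den = (sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys)) ** 0.5
        # A flat signal over the overlap carries no timing information.
        return num / den if den else float("-inf")

    return max(range(-max_lag, max_lag + 1), key=corr_at)


if __name__ == "__main__":
    audio = [0, 0, 1, 0, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0]
    mouth = [0, 0] + audio[:-2]  # same pattern, arriving 2 frames late
    print(best_lag(audio, mouth, max_lag=4))  # 2
```

Pearson correlation is used so that differences in scale between loudness and mouth opening do not matter, only whether they rise and fall together.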

Looking for artifacts, lighting, and shadow discrepancies works for today's fakes. But imagine what tomorrow will hold. AI will be able to simulate proper lighting and sound effects. Quality will be much higher. Eventually, complete 3D models will be generated.

Oh, this sounds really dangerous, and this is where technology has great negative points. We know it's really good and can make our lives more effective, but today's advancing technology can also enable fraudulent activities that push innocent people into problems. I hope we can develop more tools to detect all of these fraudulent activities. Thanks for sharing and wishing you a great day. Stay blessed. 🙂

Technology is just a tool. Powerful tools can be used for good or malice. We must be aware and adjust as necessary to embrace the benefit while minimizing the risks.

Absolutely true.
