What if I told you:

- Writing to a file could decrease its space usage?

- Writing data to the middle of a file could increase its space usage?

- Deleting a file might not free up any space?

All these can happen on modern storage systems.

Files and Storage Features

Most computer storage today consists of "files". You probably know this already; they're practically unavoidable when dealing with computers. Even if you use cloud storage, each document is a "file". (Is a discussion stored on the Steem blockchain a file? Maybe that's a topic for another time.)

We can make this a little more formal: a file is a unit of storage; the abstraction a file provides is a one-dimensional array of bytes.

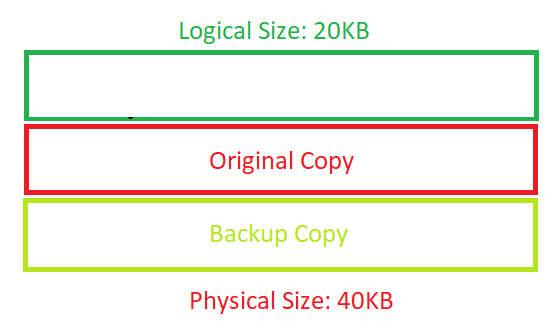

Logical Size

The array of bytes making up a file has a well-defined length. Writing to the end of the file will increase this length; it's also possible in most file systems to "truncate" a file and reduce its length.

When we talk about the "size" of a file, this is usually what comes to mind. That’s what Windows shows in the explorer. This length is called the "logical size" of a file, to distinguish it from...

Physical Size

How much space on a hard drive or flash drive does your file actually occupy? We can call this the "physical size".

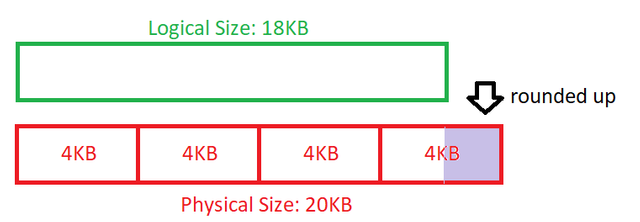

The computer's file system typically doesn't allocate just a byte at a time from the underlying storage. Allocations of new space might be in units of 512 or 4096 bytes (both powers of two). So the physical size is rounded up to a whole number of these units.
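On Unix-like systems you can ask for both numbers yourself. Here is a minimal sketch, assuming `st_blocks` counts 512-byte units (true on Linux and macOS; the field does not exist on Windows). The helper name `file_sizes` and the file name `example.bin` are made up for illustration.

```python
import os
import tempfile

def file_sizes(path):
    """Return (logical_size, physical_size) in bytes for path."""
    st = os.stat(path)
    logical = st.st_size
    # Assumption: st_blocks is reported in 512-byte units.
    physical = st.st_blocks * 512
    return logical, physical

with tempfile.TemporaryDirectory() as d:
    path = os.path.join(d, "example.bin")
    with open(path, "wb") as f:
        f.write(b"x" * 5000)
    logical, physical = file_sizes(path)
    print(logical, physical)
```

The logical size will be 5000. The physical size depends on the file system underneath, so it may differ from one machine to the next.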

But that's not the only thing a file system can do that makes "physical size" different from "logical size".

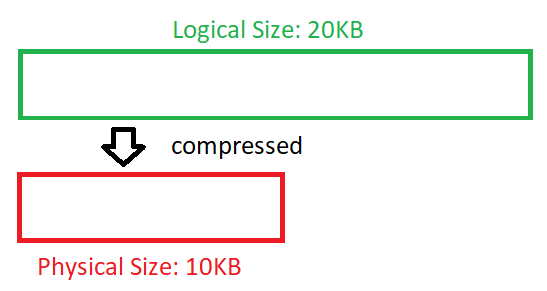

Compression

A file system can run your file through a compression algorithm before storing it. Many file formats are very redundant or wasteful, so compression can make the file use less space on disk than its original size. For example, a file which starts out as 20KB might be compressed down to 10KB, invisibly to the users of the file.
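To get a feel for how much redundant data can shrink, here is a small sketch using Python's `zlib` module. It is only a stand-in: real file systems pick their own algorithms, and the sample text is invented.

```python
import zlib

# Build exactly 20 KB of very repetitive data.
redundant = (b"the same line of text, over and over\n" * 1000)[:20 * 1024]
compressed = zlib.compress(redundant)

print(len(redundant))    # logical size: 20480
print(len(compressed))   # far smaller; the exact number depends on zlib

# Reading the file back still yields the original bytes.
assert zlib.decompress(compressed) == redundant
```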

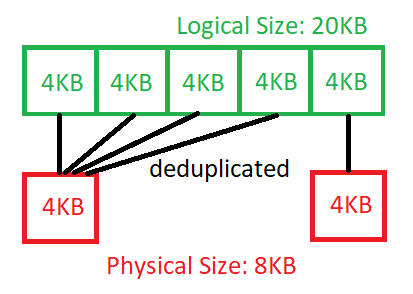

Deduplication

This feature is more common in enterprise storage systems than home computers. The file system can recognize that two blocks of data are the same, and only store one copy instead of two. Both files then reference the same physical storage, even though logically it looks like there are two copies.
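Block-level deduplication can be sketched by hashing each block and storing each distinct block only once. This is a toy model, not how any particular product does it; the 4096-byte block size, the function names, and the file names are assumptions.

```python
import hashlib

BLOCK = 4096  # assumed deduplication block size

def split_blocks(data):
    """Cut data into BLOCK-sized pieces (the last one may be shorter)."""
    return [data[i:i + BLOCK] for i in range(0, len(data), BLOCK)]

def dedup_stats(files):
    """Return (logical_bytes, physical_bytes) for a dict of name -> bytes."""
    unique = {}  # block hash -> block contents, stored once
    logical = 0
    for data in files.values():
        logical += len(data)
        for block in split_blocks(data):
            unique[hashlib.sha256(block).digest()] = block
    physical = sum(len(block) for block in unique.values())
    return logical, physical

files = {
    "report.doc": b"A" * BLOCK + b"B" * BLOCK,
    "report-copy.doc": b"A" * BLOCK + b"B" * BLOCK,
}
print(dedup_stats(files))  # (16384, 8192)
```

Logically there are two 8 KB files, but only two distinct blocks ever need to be stored.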

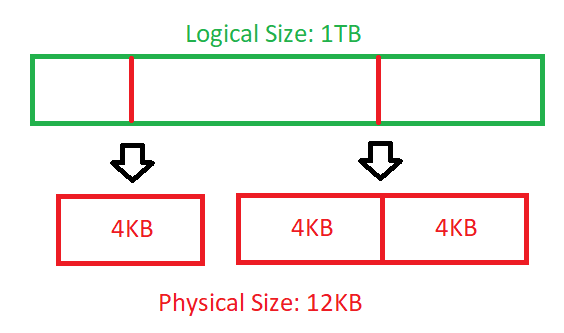

Thin Provisioning

If you create a new file that’s 1TB in size, the computer doesn’t actually need to allocate 1TB of physical storage right then and there. It can just keep track that all of the locations that have never been written contain only zeros. Later, if data is actually written to those locations, the file system can allocate some space.
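Here is a rough in-memory model of that bookkeeping. The class `ThinFile` and its methods are hypothetical names, and the 4096-byte allocation unit is an assumption; a real file system tracks this in on-disk metadata rather than a Python dict.

```python
BLOCK = 4096  # assumed allocation unit

class ThinFile:
    """In-memory model of a thinly provisioned file."""

    def __init__(self, logical_size):
        self.logical_size = logical_size
        self.blocks = {}  # block index -> bytearray, only for written blocks

    def write(self, offset, data):
        if offset + len(data) > self.logical_size:
            raise ValueError("write past end of file")
        for i, byte in enumerate(data):
            index, pos = divmod(offset + i, BLOCK)
            # Allocate a zero-filled block the first time it is touched.
            block = self.blocks.setdefault(index, bytearray(BLOCK))
            block[pos] = byte

    def read(self, offset, length):
        out = bytearray()
        end = min(offset + length, self.logical_size)
        for position in range(offset, end):
            index, pos = divmod(position, BLOCK)
            block = self.blocks.get(index)
            # Never-written locations read back as zero.
            out.append(block[pos] if block is not None else 0)
        return bytes(out)

    def physical_size(self):
        return len(self.blocks) * BLOCK

f = ThinFile(10**12)                 # 1 TB logical size
print(f.physical_size())             # 0
f.write(500_000_000_000, b"hello")
print(f.physical_size())             # 4096
print(f.read(500_000_000_000, 5))    # b'hello'
```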

Data Protection

A file system might store multiple copies of your file, or spread the file over multiple disks. Perhaps it keeps backup copies of an old version of your file, either explicitly or in an efficient form where it remembers the changes between one version and the next. A version control system like Git (the tool behind GitHub) works in a similar way, but some file systems have such a capability built in.

Paradoxes in data storage

Some or all of these features might be at work in a modern file system, which can lead to some strange effects.

You can run out of space before you expect

Suppose a file system stores data in 4KB units. Then when we write a file that is 5000 bytes long, it actually takes 8192 bytes of storage. A 1TB hard drive would be full after 122,070,312 such files. But 122 million * 5000 bytes = only 610 gigabytes! This is called “internal fragmentation”: the space doesn’t hold any data, but you can’t make use of it.
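The arithmetic is easy to check. A quick sketch, assuming a 4096-byte block and a drive of exactly 10^12 bytes (the function name `allocated_size` is mine):

```python
BLOCK = 4096
DRIVE = 10**12  # 1 TB, counted in decimal bytes

def allocated_size(logical_size, block=BLOCK):
    """Round a logical size up to a whole number of blocks."""
    return -(-logical_size // block) * block

per_file = allocated_size(5000)
max_files = DRIVE // per_file

print(per_file)           # 8192
print(max_files)          # 122070312
print(max_files * 5000)   # 610351560000, about 610 GB of real data
```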

Overwriting data can increase space usage

On a file system that performs compression, if we write incompressible data to a file that previously stored compressible data, the new data will take more space on disk. Even though the logical file size is the same, we could even run out of disk space just by writing new data to an existing location in the file!
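A small simulation shows the effect. It assumes the file system compresses each 4096-byte block on its own and stores the raw block whenever compression doesn't help; `zlib` stands in for whatever algorithm a real file system would use.

```python
import os
import zlib

BLOCK = 4096  # assumed compression unit

def physical_size(data):
    """Bytes used if each block is stored compressed when that helps."""
    total = 0
    for i in range(0, len(data), BLOCK):
        block = data[i:i + BLOCK]
        total += min(len(block), len(zlib.compress(block)))
    return total

contents = bytearray(16 * BLOCK)   # all zeros: highly compressible
before = physical_size(contents)

# Overwrite the middle of the file with random, incompressible bytes.
contents[4 * BLOCK:8 * BLOCK] = os.urandom(4 * BLOCK)
after = physical_size(contents)

print(len(contents), before, after)
```

The logical size stays at 65536 bytes the whole time, while the stored size grows by nearly the full 16 KB that was overwritten.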

Data protection features also work this way; you might think you’re doing an overwrite, but under the hood, the computer may be keeping copies of both the new data and the old data.
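Snapshots are one way this happens. The toy volume below holds a single file and frees an overwritten block only if no snapshot still points at it. Everything here (`SnapshotVolume`, its methods, the 4096-byte block) is a hypothetical model, not the design of any specific file system.

```python
import itertools

BLOCK = 4096

class SnapshotVolume:
    """Toy single-file volume that keeps old blocks alive for snapshots."""

    def __init__(self, num_blocks):
        self._ids = itertools.count()
        self.store = {}  # physical block id -> contents
        self.live = [self._allocate(bytes(BLOCK)) for _ in range(num_blocks)]
        self.snapshots = []  # each snapshot is a frozen list of block ids

    def _allocate(self, data):
        block_id = next(self._ids)
        self.store[block_id] = data
        return block_id

    def snapshot(self):
        self.snapshots.append(tuple(self.live))

    def overwrite(self, index, data):
        old = self.live[index]
        self.live[index] = self._allocate(data)
        # Free the old block only if no snapshot still references it.
        if not any(old in snap for snap in self.snapshots):
            del self.store[old]

    def physical_size(self):
        return len(self.store) * BLOCK

vol = SnapshotVolume(4)
print(vol.physical_size())        # 16384
vol.overwrite(0, b"x" * BLOCK)
print(vol.physical_size())        # 16384: the old block was freed
vol.snapshot()
vol.overwrite(1, b"y" * BLOCK)
print(vol.physical_size())        # 20480: the snapshot pins the old block
```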

Writing new data to a file could decrease its space usage!

Suppose a file was 6 KB long, and we wrote an extra 2KB to the end of the file. If the file system performs deduplication on 4KB blocks, this could reduce the space needed to store that file. The last 2KB of the original file may have been unique, but if that 2KB plus the new 2KB of data also appears as a block in another file, then the file system can store that 4KB block once and share it between the two files, reducing overall space usage.
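Here is that scenario as a sketch. It assumes 4096-byte deduplication blocks and that a partial block at the end of a file still occupies a full block on disk; the file names and contents are invented.

```python
import hashlib

BLOCK = 4096

def physical_blocks(files):
    """Count distinct chunks; each is assumed to occupy one full block."""
    seen = set()
    for data in files.values():
        for i in range(0, len(data), BLOCK):
            seen.add(hashlib.sha256(data[i:i + BLOCK]).digest())
    return len(seen)

tail, extra = b"T" * 2048, b"N" * 2048
files = {
    "other": tail + extra,          # one 4 KB block
    "mine": b"U" * BLOCK + tail,    # 6 KB: a unique block plus a unique tail
}
before = physical_blocks(files) * BLOCK

files["mine"] += extra              # append 2 KB; the file is now 8 KB
after = physical_blocks(files) * BLOCK

print(before, after)  # 12288 8192
```

The file grew by 2 KB, yet the volume as a whole now needs one block fewer.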

Deleting a file might not save any space

A file which consists entirely of blocks found elsewhere in storage would be entirely deduplicated away. In this case, deleting the file would not free up any space, because all the same blocks would still have to be on the device.
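Reference counting makes this concrete. In the sketch below (a hypothetical `DedupStore`, assuming 4096-byte blocks and that each file name is written only once), a block is freed only when the last file using it goes away.

```python
import hashlib
from collections import Counter

BLOCK = 4096

class DedupStore:
    """Toy deduplicating store that reference-counts each distinct block."""

    def __init__(self):
        self.files = {}             # name -> list of block hashes
        self.refcount = Counter()   # block hash -> number of references

    def write_file(self, name, data):
        hashes = [hashlib.sha256(data[i:i + BLOCK]).digest()
                  for i in range(0, len(data), BLOCK)]
        self.files[name] = hashes
        self.refcount.update(hashes)

    def delete_file(self, name):
        """Delete a file and return the number of bytes actually freed."""
        before = self.physical_size()
        for h in self.files.pop(name):
            self.refcount[h] -= 1
            if self.refcount[h] == 0:
                del self.refcount[h]
        return before - self.physical_size()

    def physical_size(self):
        return len(self.refcount) * BLOCK

A, B, C = b"A" * BLOCK, b"B" * BLOCK, b"C" * BLOCK
store = DedupStore()
store.write_file("a", A + B)
store.write_file("b", B + C)
store.write_file("c", A + C)        # every block already exists elsewhere

print(store.physical_size())        # 12288
print(store.delete_file("c"))       # 0: nothing is freed
print(store.delete_file("a"))       # 4096: only block A becomes unused
```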

Automatic data protection also may interfere with space reclamation; if there’s a “snapshot” of the file, then it contains all the same data as the file you deleted.

How large is your file?

It depends why you want to know.

If the question is “how much data can be read”, that’s one answer.

If the question is “how much space will be freed if I delete it”, that’s a completely different answer.

If the question is “how much would it cost to store the file somewhere else” you might get yet another answer.

Modern storage has a lot of features, and those features mean even things as simple as "size" can be complicated.

Informative.

Hi markgritter, nice article on file storage features. I would like to drop an advice for you.

Nice one though. Cheers
