Analysis Storage startup WekaIO has joined a growing crowd of vendors claiming large performance gains and lower latency from new file system technology.

It claims to have developed the highest-performance, lowest-latency file system ever created – quite a bold statement – but since this technology area is developing so rapidly, it is almost unsurprising.

We've encountered WekaIO before. Now it's out of stealth.

The software can run in dedicated storage servers, in a hyper-converged cluster or in the public cloud. The company says application-level 4K IO latencies are lower than those of an all-flash storage array, and that IOPS scale linearly as the cluster size increases.

WekaIO claims its software, based on its MatrixFS distributed and parallel filesystem, delivers high performance for all workloads – big and small files, reads and writes, random, sequential and metadata-heavy ones.

There's a white paper discussing its technology, and a quick video intro:

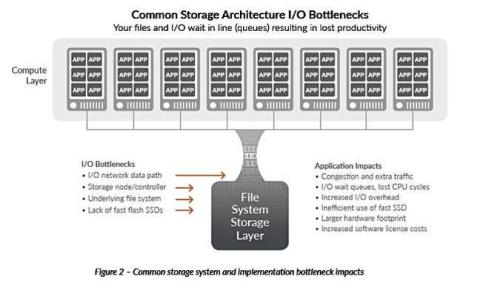

To set the scene, here is its view of traditional storage architecture limitations:

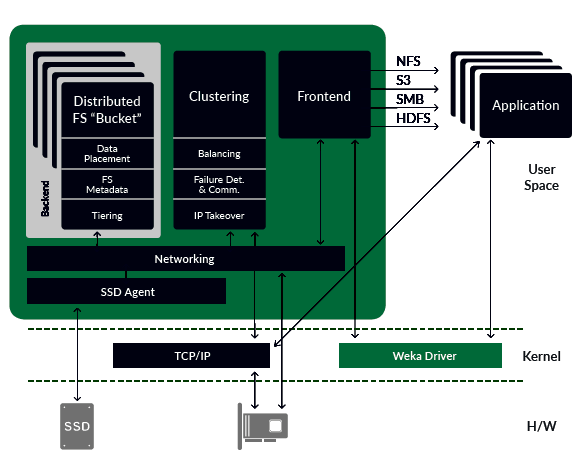

In the video we learn that WekaIO's software runs in a server that's part of a customer's server cluster, with tens, hundreds or thousands of servers in the cluster. It operates its own RTOS (real time operating system) in Linux user space, not the kernel space, and runs its own scheduling and networking stack. The networking stack talks directly to the server's network interface card (NIC) through PCIe virtualisation.

WekaIO's software talks directly to the server's SSDs. It has its own memory management. Because it does not rely on the Linux kernel for any of this, it can provide very low latency.

When an application needs file services it uses system calls to talk to a Linux driver, in this case WekaIO's own VFS driver. This is POSIX-compliant, distributed, parallel and coherent.
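Because the driver is POSIX-compliant, an application needs no special API to use it: ordinary system calls against a mounted path are enough. As a minimal sketch, assuming nothing about WekaIO itself, the Python below times 4K `pread` calls on any POSIX file. The function names `time_4k_reads` and `make_test_file` are made up for this illustration, and on a local disk the reads will usually be served from the page cache, so the numbers say little about the underlying storage.

```python
import os
import statistics
import tempfile
import time

BLOCK_SIZE = 4096  # 4K IO size, the unit the article's latency figures use


def time_4k_reads(path, count=100):
    """Return per-read latencies (microseconds) for sequential 4K preads."""
    latencies = []
    fd = os.open(path, os.O_RDONLY)
    try:
        for i in range(count):
            start = time.perf_counter()
            data = os.pread(fd, BLOCK_SIZE, i * BLOCK_SIZE)
            latencies.append((time.perf_counter() - start) * 1e6)
            if len(data) < BLOCK_SIZE:
                break  # reached end of file
    finally:
        os.close(fd)
    return latencies


def make_test_file(directory, blocks=100):
    """Create a scratch file of `blocks` 4K blocks and return its path."""
    fd, path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "wb") as f:
        f.write(os.urandom(BLOCK_SIZE * blocks))
    return path


if __name__ == "__main__":
    # Hypothetical usage: point `directory` at any mounted file system.
    scratch = make_test_file(tempfile.gettempdir())
    try:
        samples = time_4k_reads(scratch)
        print(f"median 4K read latency: {statistics.median(samples):.1f} us")
    finally:
        os.remove(scratch)
```

To compare file systems with a script like this, the scratch file would have to be much larger than RAM, or the cache bypassed, otherwise the measurement reflects memory speed rather than storage.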

The driver connects to WekaIO's cluster-aware front end module via a lockless queue said to be very efficient. The front end then talks, via the networking component, to the right back end.

The back end modules provide data placement, data protection, metadata services and, if it has been configured, tiering to cloud or on-premises object storage. Users can have as many back ends as they want – installing more of them (meaning more servers) to get higher performance.

The back end talks via the networking layer to an SSD agent which has its own kernel bypass IO stack for low latency access to the SSDs, basically making them network-attached components. A 4K IO can take 150 microseconds.
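To put the 150 microsecond figure in context, here is a back-of-envelope sketch of what that latency implies. It assumes each IO stream issues one 4K request at a time and that streams do not slow each other down, which is an idealisation and not a WekaIO measurement. The function names are invented for the example.

```python
def iops_per_stream(latency_us):
    """IOs per second for one stream issuing one request at a time."""
    return 1_000_000 / latency_us


def throughput_mib_s(iops, io_size_bytes=4096):
    """Bandwidth in MiB/s for a given IOPS rate and IO size."""
    return iops * io_size_bytes / (1024 * 1024)


def concurrency_needed(target_iops, latency_us):
    """Outstanding IOs needed to hit target_iops (Little's law)."""
    return target_iops * latency_us / 1_000_000


LATENCY_US = 150  # the 4K IO latency quoted in the article

per_stream = iops_per_stream(LATENCY_US)
print(f"one stream: {per_stream:,.0f} IOPS, "
      f"{throughput_mib_s(per_stream):.1f} MiB/s")
print(f"outstanding IOs for 1M IOPS: "
      f"{concurrency_needed(1_000_000, LATENCY_US):.0f}")
```

Under those assumptions a single stream manages roughly 6,667 IOPS, or about 26 MiB/s, and a million IOPS needs around 150 requests in flight at once, which is why scaling across many servers and SSDs matters as much as the per-IO latency.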

For applications that don't run on Linux, WekaIO has an NFS interface for apps on Unix, Solaris, AIX or other servers, and an SMB interface for Windows systems. There is native HDFS support for Hadoop applications.

WekaIO also supports S3 access for tiering to object storage.

We're told MatrixFS data services include both local snapshots and remote snapshots to the cloud, cloning, automated cloud tiering, and dynamic cluster rebalancing.

Here is a more detailed software architecture diagram:

The software components are:

File Services (Front-end) – manages multi-protocol connectivity,

File System Clustering (Back-end) – manages data distribution, data protection and file system,

SSD Access Agent – transforms the SSD into an efficient networked device,

Management Node – manages events, CLI, statistics, and call-home,

Object Connector – reads from and writes to the object store.

WekaIO claims that bypassing the kernel means that Matrix’s I/O software stack is not only faster, with lower latency, but also portable across different bare-metal, VM, containerized, and cloud instance environments.

It says its software is efficient, with a small resource footprint, typically about 5 per cent, leaving 95 per cent for application processing. It only uses the resources that are allocated to it, from as little as one server core and a small amount of RAM.

Data locality irrelevant

WekaIO's white paper states:

Which market areas is WekaIO looking at?

Electronic Design Automation (EDA),

Life sciences,

Machine learning, artificial intelligence,

Web 2.0, on-line content, and cloud services,

Porting any application to a public or private cloud,

Media and Entertainment (rendering, after-effects, color correction),

Financial trading and risk management,

General High-Performance Computing (HPC).

Performance

There are several performance references for Weka, such as a SPEC SFS 2014 software build benchmark.

When used in an autonomous car project, a FlashBlade system supporting a single GPU server took 6.5 hours for a metadata "find" run. The WekaIO system took two hours.

A metadata ls command on a 1 million file directory took 55 secs with FlashBlade and 10 secs with WekaIO, the firm claimed.
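The two vendor-supplied comparisons above reduce to simple ratios. The short sketch below just does that arithmetic on the quoted figures; it adds no new measurements, and `speedup` is a helper named for this example only.

```python
def speedup(baseline, candidate):
    """How many times faster the candidate is than the baseline."""
    return baseline / candidate


# Figures as quoted by WekaIO: (FlashBlade time, WekaIO time)
find_hours = (6.5, 2.0)    # metadata "find" run, in hours
ls_seconds = (55.0, 10.0)  # ls on a 1 million file directory, in seconds

print(f"find: {speedup(*find_hours):.2f}x faster")
print(f"ls:   {speedup(*ls_seconds):.2f}x faster")
```

That works out to 3.25 times faster for the find run and 5.5 times faster for the directory listing, on WekaIO's own numbers.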

DreamWorks Animation is using WekaIO software for burst-buffer style transient storage for its render and simulation workloads. Typically burst buffer applications need extra hardware, but the firm claims this is not the case here.

NVMe over fabrics startup Excelero has also seen its storage software used as a burst buffer, by the way.

+RegComment

This is serious storage software with a lot of enthusiasm, both technical and – as you'd expect – marketing, behind it.

There are a bunch of suppliers who are credibly claiming to cut storage data access latency and increase storage bandwidth, both significantly, and WekaIO is one of them. Check out its white paper (registration required) – it's worth a careful read. ®