PEOPLE WHO HAVE LOST THEIR SPEECH WILL HAVE THE OPPORTUNITY TO SPEAK AGAIN.

A team of researchers led by neurobiologist N. Mesgarani made this discovery. Their paper, published in Scientific Reports, showed the scientific community what a new technology for decoding brain activity with the help of artificial intelligence can do.

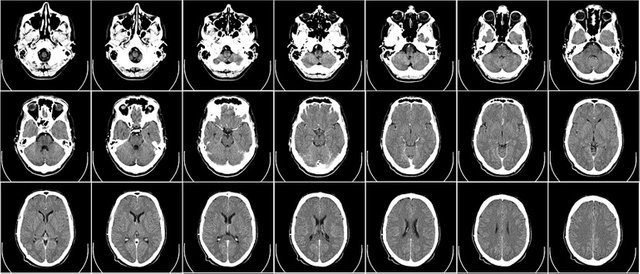

Machine learning makes it possible to read signals from the auditory cortex through a brain-computer interface and convert them into synthesized speech with an AI-based vocoder. This discovery should not be confused with mind reading.

Intelligent systems are still far from that. The neuroscientists set out to develop a device that will restore clear spoken speech to patients who have lost their voice.

LEARN MORE ABOUT THE RESEARCH

Five patients undergoing treatment for epilepsy took part in testing the technology. The team of neuroscientists led by N. Mesgarani used invasive electrocorticography to measure the subjects' neural activity while they listened to speech sounds. In other words, the patients were asked to listen to simple recordings of people counting from 0 to 10.

AI learned how to convert brain signals into synthesized speech

Data from the auditory cortex was analyzed and passed to the vocoder, which converted the signals back into synthesized words. The output was robotic-sounding speech. To test how intelligible the converted signals were, the scientists recruited an additional 11 people. Their task was to listen to the speech from the vocoder and recognize the words. The result: in 75% of cases, participants were able not only to identify the words accurately but also to tell whether the speaker was male or female.
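The decoding step described above can be pictured as a regression problem: learn a mapping from recorded neural activity to the parameters that drive a vocoder. The article does not say which model the team used, so the following is only a minimal sketch that swaps in plain ridge regression and runs on synthetic data. The array sizes, the `fit_ridge` helper and the names `neural` and `vocoder_params` are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-ins (assumptions, not data from the study):
# each row is one time frame, each column one electrode / vocoder parameter.
n_frames, n_electrodes, n_vocoder_params = 500, 32, 8
true_map = rng.normal(size=(n_electrodes, n_vocoder_params))
neural = rng.normal(size=(n_frames, n_electrodes))
vocoder_params = neural @ true_map + 0.1 * rng.normal(size=(n_frames, n_vocoder_params))


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


# Fit on the first 400 frames, evaluate on the remaining 100.
train, test = slice(0, 400), slice(400, 500)
W = fit_ridge(neural[train], vocoder_params[train])
predicted = neural[test] @ W  # decoded vocoder parameters for unseen frames

# Compare against a baseline that always predicts the training mean.
mse = float(np.mean((predicted - vocoder_params[test]) ** 2))
baseline = float(np.mean((vocoder_params[test] - vocoder_params[train].mean(axis=0)) ** 2))
print(f"decoder MSE: {mse:.3f}, mean-only baseline MSE: {baseline:.3f}")
```

In a real system the predicted parameters would be handed to a vocoder to produce audio; that step is left out here because it depends on the specific vocoder.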

The research showed that machine learning is capable of decoding phonemes and individual words and can find a connection between brain signals and speech. As N. Mesgarani noted: “In this research, we want to give people who have lost the ability to speak the ability to communicate with others. However, in speech there are not only individual words, but also sentences, intonation, emotional coloring and many other things.”

Work on the research will continue. In the future, the scientific group aims to synthesize more complex words and whole sentences.

The difficulty with machine learning and neural networks here is that the system needs to be trained on a large amount of brain-signal data from each participant. Individual differences play a big role, since different people can produce different brain activity when listening to the same speech. Other neuroscientists are interested in how decoders trained on particular people will perform when applied to other patients.
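The concern about applying one person's decoder to another can be illustrated with a toy experiment. This is a sketch on synthetic data and not a result from the study: the two "subjects" are given different signal-to-speech mappings by construction, so it only shows why such a mismatch would hurt, and says nothing about how different real brains are. The helpers `make_subject`, `fit_decoder` and `mse` are hypothetical names.

```python
import numpy as np

rng = np.random.default_rng(1)
n_frames, n_electrodes, n_params = 400, 16, 4


def make_subject(rng):
    """Synthetic subject with its own (assumed) electrode-to-speech mapping."""
    mapping = rng.normal(size=(n_electrodes, n_params))
    neural = rng.normal(size=(n_frames, n_electrodes))
    speech = neural @ mapping + 0.1 * rng.normal(size=(n_frames, n_params))
    return neural, speech


def fit_decoder(neural, speech):
    """Ordinary least-squares decoder from neural features to speech parameters."""
    W, *_ = np.linalg.lstsq(neural, speech, rcond=None)
    return W


def mse(W, neural, speech):
    """Mean squared error of decoder W on the given frames."""
    return float(np.mean((neural @ W - speech) ** 2))


neural_a, speech_a = make_subject(rng)
neural_b, speech_b = make_subject(rng)

# Train on subject A's first 300 frames only.
W_a = fit_decoder(neural_a[:300], speech_a[:300])

same_subject_error = mse(W_a, neural_a[300:], speech_a[300:])   # held-out frames, same subject
cross_subject_error = mse(W_a, neural_b[300:], speech_b[300:])  # a different subject

print(f"same subject: {same_subject_error:.3f}, other subject: {cross_subject_error:.3f}")
```

Under these assumptions the decoder does far worse on the second subject, which is the situation per-patient training is meant to avoid.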

There are still open questions about the technology. It is already clear, however, that it will become a foundation for the field of implantable speech neuroprostheses. The research was well received by the international community. A larger number of participants will now be involved in the work in order to reduce possible statistical errors and move on to the stage of synthesizing whole sentences.
