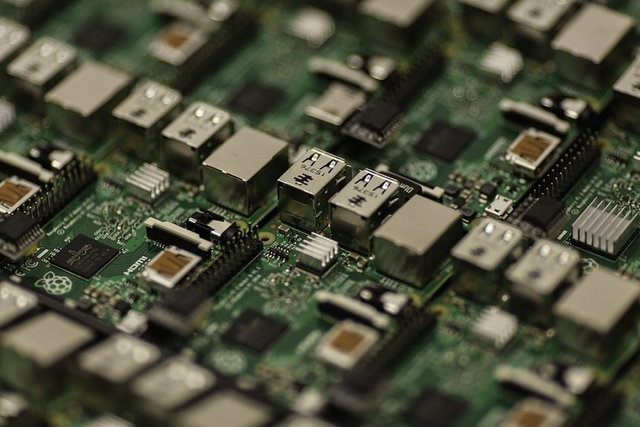

The major performance parameter of the I/O subsystem is speed. This subsystem should provide the fastest possible transfer of data between the processing system and the application environment.

The I/O interface needs to be general in the sense that it should be easy to interface newer devices of differing characteristics to the system. Several bus standards and I/O protocols have evolved over the years. The I/O subsystem should accommodate such standards easily.

The most popular I/O structures are:

- Programmed I/O

- Interrupt mode I/O

- Direct memory access

- Channels

- I/O processors

In the programmed I/O structure, the CPU initiates the I/O operation and waits for either the input device to provide the data or the output device to accept the data. Since I/O devices are slow compared to the CPU, and the CPU has to wait for the slow I/O operations to complete before continuing with other computations, this structure is inefficient.

Efficiency can be improved if the processor goes off to perform other tasks while the I/O devices are generating or accepting data. Either way, the processor has to explicitly check for the availability or acceptance of data.

This is the simplest of the I/O structures: it requires only a simple I/O device controller that can respond to control commands from the CPU, and the CPU handles all of the I/O protocol. Typically, one or more processor registers are involved in the data transfer.
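The polling behaviour described above can be sketched as a small simulation. This is only an illustration under stated assumptions: `SimulatedDevice`, its fixed `polls_until_ready` delay, and `programmed_input` are hypothetical names invented here, not part of any real device interface.

```python
class SimulatedDevice:
    """Hypothetical device: each item becomes ready after a fixed number of status polls."""

    def __init__(self, data, polls_until_ready=3):
        self._data = list(data)
        self._latency = polls_until_ready
        self._countdown = polls_until_ready

    def ready(self):
        """Status check: count down the delay and report whether an item is available."""
        if not self._data:
            return False
        if self._countdown > 0:
            self._countdown -= 1
            return False
        return True

    def read(self):
        """Hand over one item and restart the delay for the next one."""
        self._countdown = self._latency
        return self._data.pop(0)

    def exhausted(self):
        return not self._data


def programmed_input(device):
    """CPU-side loop: poll the status, then move each item through a 'register'."""
    received = []
    wasted_polls = 0
    while not device.exhausted():
        while not device.ready():   # busy-wait: the CPU does nothing useful here
            wasted_polls += 1
        register = device.read()    # the data passes through a processor register
        received.append(register)
    return received, wasted_polls


received, wasted_polls = programmed_input(SimulatedDevice("abc", polls_until_ready=3))
print(received, wasted_polls)
```

The `wasted_polls` counter makes the inefficiency visible: every failed status check is CPU time spent waiting on the slower device.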

In interrupt mode I/O, some of the I/O control is transferred from the CPU to the I/O device controller. The CPU initiates the I/O operation and continues with other tasks (if any), and the I/O device interrupts the CPU once the I/O operation is complete. This I/O mode enhances the throughput of the system since the CPU is not idle during the I/O. This mode of I/O is required in applications such as real-time control, where the system has to respond to changes in inputs that occur at unpredictable times.
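A minimal sketch of this flow, assuming a tick-based simulation in which the device advances one step per unit of CPU work. The `InterruptingDevice` class, its `latency_ticks` parameter, and `run_with_interrupts` are hypothetical and exist only for illustration.

```python
class InterruptingDevice:
    """Hypothetical device that calls a handler when its operation completes."""

    def __init__(self, latency_ticks):
        self._latency = latency_ticks
        self._remaining = None      # None means no operation is in progress
        self._handler = None
        self._payload = None

    def start(self, payload, handler):
        """The CPU initiates the operation and registers an interrupt handler."""
        self._payload = payload
        self._handler = handler
        self._remaining = self._latency

    def tick(self):
        """Advance device time by one tick; raise the 'interrupt' on completion."""
        if self._remaining is None:
            return
        self._remaining -= 1
        if self._remaining == 0:
            self._remaining = None
            self._handler(self._payload)


def run_with_interrupts(items, latency_ticks):
    device = InterruptingDevice(latency_ticks)
    completed = []
    useful_work = 0
    pending = list(items)

    def on_interrupt(payload):
        # Interrupt handler: record the finished item and start the next one.
        completed.append(payload)
        if pending:
            device.start(pending.pop(0), on_interrupt)

    if pending:
        device.start(pending.pop(0), on_interrupt)
    while len(completed) < len(items):
        useful_work += 1   # the CPU performs other work instead of polling
        device.tick()      # the device makes progress in the meantime
    return completed, useful_work


print(run_with_interrupts(["a", "b"], latency_ticks=3))
```

Compared with polling, the loop body here counts units of useful work: the CPU never checks the device status and only reacts when the handler is called.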

The direct memory access (DMA) mode of I/O allows I/O devices to transfer data to and from the memory directly. This mode is useful when large volumes of data need to be transferred. In this structure, the CPU instructs the DMA controller to perform an I/O operation and then continues with its computational tasks.

The DMA controller acquires the memory bus as needed and transfers data directly to and from the memory. The CPU and the DMA controller compete for memory access through the memory bus. The I/O control is thus transferred completely to the I/O device, relieving the CPU of the I/O overhead. Note also that, unlike the structures described earlier, this structure does not require CPU registers to be involved in I/O operations.
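The competition for the memory bus can be illustrated with a toy model. It assumes the bus simply alternates between the DMA controller and the CPU on successive cycles, which is a simplification chosen for this sketch and not a description of any particular arbitration scheme. `DMAController` and `run_transfer` are hypothetical names.

```python
class DMAController:
    """Hypothetical DMA controller that copies a device buffer into memory."""

    def __init__(self, memory):
        self.memory = memory
        self.busy = False

    def start(self, source, dest_addr):
        """The CPU programs the transfer: source data and destination address."""
        self._source = bytes(source)
        self._dest = dest_addr
        self._index = 0
        self.busy = len(self._source) > 0

    def step(self):
        """Use one memory-bus cycle to move one byte straight into memory."""
        if not self.busy:
            return False
        self.memory[self._dest + self._index] = self._source[self._index]
        self._index += 1
        if self._index == len(self._source):
            self.busy = False
        return True


def run_transfer(memory, source, dest_addr):
    """Alternate bus ownership between the DMA controller and the CPU (assumed policy)."""
    dma = DMAController(memory)
    dma.start(source, dest_addr)
    cpu_cycles = 0
    dma_cycles = 0
    dma_owns_bus = True
    while dma.busy:
        if dma_owns_bus:
            dma.step()
            dma_cycles += 1
        else:
            cpu_cycles += 1   # the CPU uses the bus for its own memory access
        dma_owns_bus = not dma_owns_bus
    return cpu_cycles, dma_cycles


memory = bytearray(16)
print(run_transfer(memory, b"DATA", dest_addr=4), bytes(memory[4:8]))
```

The CPU only calls `start`; the bytes are written into `memory` by the controller, so no CPU register holds the data at any point.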

I/O channels are enhanced DMA controllers. In addition to performing data transfer in the DMA mode, they perform other I/O-related operations such as error detection and correction, code conversion, and control of multiple I/O devices. There are two types of I/O channels:

- Selector channels, which are used with high-speed devices (such as disk and tape).

- Multiplexer channels, which are used with low-speed devices (such as terminals).

Large computer systems of today typically have several I/O channels.

Traditionally, I/O channels were specially designed hardware elements to suit a particular CPU. With the advent of very large-scale integration (VLSI), low-cost microprocessors came into being. The I/O channels were then implemented using microprocessors, programmed to perform the I/O operations of the channel. As the capabilities of microprocessors were enhanced to make them suitable for general-purpose processing, channels became I/O processors that handled some computational tasks on the data in addition to I/O.

The structure of modern day systems thus consists of a multiplicity of processors: a central processor and one or more I/O processors or front-end processors. With such multiple processor structure, the computational task is partitioned such that the subtasks are executed on various processors simultaneously (as far as possible). This is a simple form of parallel processing.
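The idea of partitioning a task into subtasks that run side by side can be sketched as follows. The example uses Python's standard `concurrent.futures.ThreadPoolExecutor`; the `partition` and `parallel_sum` helpers are hypothetical names for this illustration. Threads are used only to show the structure of the partitioning, and whether the subtasks truly run simultaneously depends on the runtime and hardware.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(data, parts):
    """Split data into at most `parts` contiguous chunks of near-equal size."""
    size = max(1, (len(data) + parts - 1) // parts)
    return [data[i:i + size] for i in range(0, len(data), size)]


def parallel_sum(data, workers=4):
    """Sum each chunk as a separate subtask, then combine the partial results."""
    chunks = partition(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum, chunks))
    return sum(partial_sums)


print(parallel_sum(list(range(1, 101))))
```

The final `sum(partial_sums)` step is the part that cannot be partitioned, which is why the text qualifies the simultaneity with "as far as possible".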

Interconnection Structures.

The subsystems of the computer system are typically interconnected by a bus structure. The function of this interconnection structure is to carry data, address, and control signals. The bus structure can thus be organised in one of three ways: as separate data, control, and address buses; as a common bus carrying all three types of signals; or as several buses (each carrying all three types of signals), each interconnecting a specific set of subsystems. For instance, in multiple-bus structures it is common to see buses designated as memory bus and I/O bus.

The registers and other resources within the CPU are also interconnected by either a single-bus or a multiple-bus structure. However, data transfer on these buses does not involve protocols as elaborate as those on buses that interconnect system elements (CPU, memory, I/O devices).

The major performance measure of the bus structure is its bandwidth, which is a measure of the number of units of data it can transfer per second. The bandwidth is a function of the bus width (i.e., the number of bits the bus can carry at a time), the speed of the interface hardware, and the bus protocols adopted by the system.
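As a rough worked example of how these factors combine, the sketch below models bandwidth as bytes per transfer multiplied by transfers per second. The formula and the parameter names (`width_bits`, `clock_hz`, `cycles_per_transfer`) are simplifying assumptions for illustration: protocol overhead is represented only as the number of clock cycles each transfer takes.

```python
def bus_bandwidth_bytes_per_second(width_bits, clock_hz, cycles_per_transfer):
    """Estimate peak bandwidth from bus width, clock rate, and protocol cost."""
    transfers_per_second = clock_hz / cycles_per_transfer
    bytes_per_transfer = width_bits / 8
    return bytes_per_transfer * transfers_per_second


# A 32-bit bus clocked at 100 MHz whose protocol needs 2 cycles per transfer.
print(bus_bandwidth_bytes_per_second(32, 100_000_000, 2))
```

Under this model, doubling the bus width or halving the cycles per transfer each doubles the estimated bandwidth.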

The advantage of multiple-bus structures is the possibility of simultaneous transfers on multiple buses, thereby providing a high overall bandwidth. The disadvantage of this structure is the complexity of the hardware.

A single-bus structure provides uniform interfacing characteristics for all devices connected to it, and simplicity of design because there is a single set of interface characteristics. Since this structure utilizes a single transfer path, it is more likely to become a bottleneck for transfer operations than a multiple-bus structure.
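The trade-off can be made concrete with a simple cycle count. The sketch assumes that memory and I/O transfers are fully independent and that every transfer takes one bus cycle; both assumptions are idealizations, and the function names are hypothetical.

```python
def single_bus_cycles(memory_transfers, io_transfers):
    """All transfers share one path, so they are serialized."""
    return memory_transfers + io_transfers


def multiple_bus_cycles(memory_transfers, io_transfers):
    """Separate memory and I/O buses let the two streams overlap."""
    return max(memory_transfers, io_transfers)


print(single_bus_cycles(1000, 400), multiple_bus_cycles(1000, 400))
```

In practice the gain is smaller than this ideal case suggests, since transfers are rarely fully independent.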

As computer architectures evolved into systems with multiple processors, several other interconnection schemes with varying performance characteristics have been introduced.

System considerations.

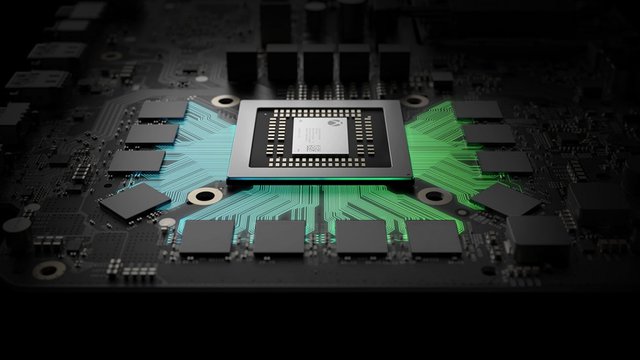

All the enhancements outlined above were attempts to increase the throughput of the uniprocessor system. As hardware technology moved into the VLSI era, more and more functions were implemented in hardware rather than software. Large instruction sets, large numbers of general-purpose registers, and large memories became common. But the basic structure of the machine remained the one proposed by von Neumann.

With the current technology, it is possible to fabricate a complex processing system on a chip. Processors with 64-bit architectures along with a limited amount of memory and I/O interfaces are now fabricated as single chip systems. It is expected that the complexity of systems fabricated on a chip would continue to grow.

With the availability of low-cost microprocessors, the trend in architecture has been to design systems with multiple processors. In such multiple-processor architectures, the CPU is usually a general-purpose processor dedicated to basic computational functions. The coprocessors tend to be specialized. Some popular coprocessors are numeric coprocessors, which handle floating-point computations, and memory management units (MMUs), which coordinate the main memory, cache, and virtual memory interactions.

There are also multiple-processor architectures in which each processor is a general CPU (i.e., the CPU/coprocessor distinction does not exist). There are many applications that can be partitioned into subtasks that can be executed in parallel. For such applications, the multiple-processor structure has proved to be very cost-effective compared to building systems with a single powerful CPU.

Note that there are two aspects of multiple-processor structures that contribute to their enhanced performance. First, each coprocessor is dedicated to a specialized function and, as such, can be optimized to perform that function efficiently.

Second, all the processors in the system could be operating simultaneously (as far as the application allows it), thereby providing a higher throughput compared to single processor systems. Due to the second aspect, these multiprocessor systems can be called parallel-processing architectures. Thus, trends in technology have forced the implementation of parallel-processing structures.