AlphaGo Zero, the successor to AlphaGo, has beaten its predecessor at its own game. AlphaGo is renowned for beating the world's top players at Go, an ancient Chinese board game whose strategy relies heavily on intuition. Until recently, computers were not able to make the kind of intuitive decisions Go demands. Then Google's DeepMind developed AlphaGo to play Go, and it eventually developed its own strategies. It worked so well that even top Go players learned new things from it. The only thing that could beat AlphaGo was a newer version of itself, called AlphaGo Zero. AlphaGo Zero beating AlphaGo shows that deep learning in AI has made a major advance. Deep learning is a subset of machine learning; combined with reinforcement learning (RL), a form of "self learning" in which a program improves through trial and reward, it becomes deep reinforcement learning. This approach uses ANNs (artificial neural networks) to turn data into decisions.

After only three days of self-play, AlphaGo Zero was strong enough to defeat the version of AlphaGo that beat 18-time world champion Lee Se-dol, winning 100 games to nil. After 40 days, it had a 90 percent win rate against the most advanced version of the original AlphaGo software. DeepMind, the creator of AlphaGo and AlphaGo Zero, says this makes it arguably the best Go player in history, and it is not human.

This was an example of "self-play reinforcement learning," which allowed AlphaGo Zero to train itself from scratch and surpass its predecessor within days. AlphaGo Zero played millions of games of Go against itself without human intervention. Strictly speaking, this is not unsupervised learning: it is reinforcement learning, in which the program generates its own training data and learns from the results. In effect, the neural network in AlphaGo Zero creates its own "artificial knowledge." AlphaGo Zero learned from sequences of actions whose consequences (a win or a loss) only become clear at the end of the game. RL problems like this are usually formalized as a Markov Decision Process (MDP), made up of states, actions, transitions between states, and rewards. For every move, AlphaGo and AlphaGo Zero estimate the probable outcomes of candidate moves with the aid of specialized processors called TPUs (Tensor Processing Units), which can be arranged in an asynchronous, distributed mode. Asynchronous means a task does not wait for previous tasks to complete before it executes, so many tasks can run in parallel. This parallelism lets the search examine many positions at the same time. AlphaGo Zero needed no human intervention: it learned to play Go by playing against itself until it could anticipate its own moves and how those moves would affect the game's outcome.
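To make the self-play idea concrete, here is a toy sketch, not DeepMind's method: tabular Monte Carlo learning on Nim, where players alternately take 1 to 3 stones and whoever takes the last stone wins. It is a tiny MDP whose state is the pile size and whose reward arrives only at the end of the game. One shared value table plays both sides, so the program generates its own training data. The game, the names `self_play_train` and `greedy_action`, and the hyperparameters are hypothetical choices for illustration; AlphaGo Zero instead uses a deep neural network combined with tree search.

```python
import random
from collections import defaultdict

MAX_TAKE = 3  # hypothetical toy rule: a move removes 1, 2, or 3 stones


def legal_actions(stones):
    """Moves available when `stones` remain."""
    return list(range(1, min(MAX_TAKE, stones) + 1))


def greedy_action(q, stones):
    """Move with the highest learned value for the player about to move."""
    return max(legal_actions(stones), key=lambda a: q[(stones, a)])


def self_play_train(episodes=30000, epsilon=0.2, alpha=0.05, max_pile=10, seed=0):
    """Learn move values by letting one shared table play both sides."""
    rng = random.Random(seed)
    q = defaultdict(float)  # (stones, action) -> value for the player making the move
    for _ in range(episodes):
        stones = rng.randint(1, max_pile)
        history = []
        while stones > 0:
            # Epsilon-greedy: mostly exploit, sometimes explore a random move.
            if rng.random() < epsilon:
                action = rng.choice(legal_actions(stones))
            else:
                action = greedy_action(q, stones)
            history.append((stones, action))
            stones -= action
        # The player who took the last stone won: +1 for their moves,
        # -1 for the opponent's, alternating backwards through the game.
        reward = 1.0
        for state_action in reversed(history):
            q[state_action] += alpha * (reward - q[state_action])
            reward = -reward
    return q


q = self_play_train()
for pile in range(5, 8):
    print(pile, "stones -> take", greedy_action(q, pile))
```

With enough episodes, the greedy policy should learn to leave the opponent a multiple of 4 stones, the known winning strategy for this variant of Nim, without ever being shown an example of good play.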

AlphaGo Zero's accomplishments include:

- Defeated the original AlphaGo 100 games to nil.

- Taught itself to play Go without human knowledge.

- Achieved world-class Go proficiency in 3 days.

- Used fewer hardware resources.

- Required less training (4.9 million games vs. 30 million).

AlphaGo Zero accomplished all this with a simpler architecture that used only 4 TPUs, compared with the original, which used 48 TPUs. This kind of deep learning, which leads to self-training and mastery of a specific task, could be applied to problems beyond games like Go.

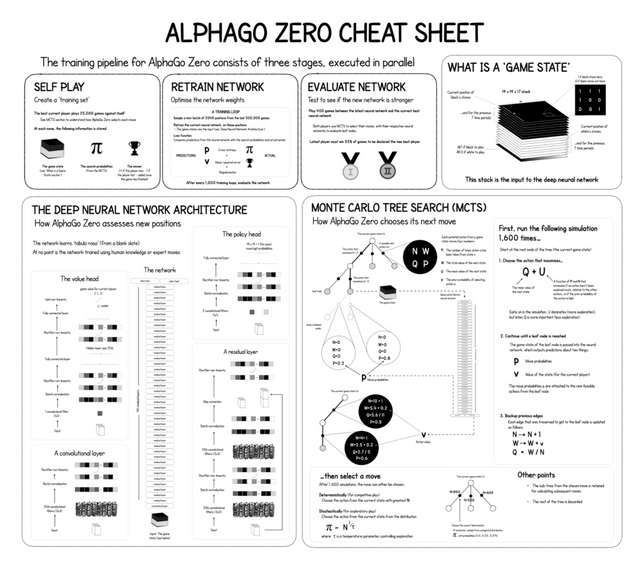

The process AlphaGo Zero went through includes the following:

- Self Play - Create a training set by playing games against itself

- Retrain - Optimize the network weights

- Evaluation - Compare the win/loss results of the newly trained network against the current best network

- Game State - Stack recent board positions as input to the NN

- Deep NN - Learns all moves without requiring human intervention

- MCTS (Monte Carlo Tree Search) - The search technique AlphaGo Zero uses to choose its next move
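The MCTS step can be sketched with plain UCT (Upper Confidence bounds applied to Trees) on a toy Nim game. This is a swapped-in, simplified technique: AlphaGo Zero's search replaces the random playouts below with its neural network's value estimate and uses the network's move probabilities to guide selection. The game and the names `Node`, `rollout`, and `mcts_best_move` are hypothetical.

```python
import math
import random

MAX_TAKE = 3  # hypothetical toy game: take 1-3 stones; taking the last stone wins


def legal_actions(stones):
    return list(range(1, min(MAX_TAKE, stones) + 1))


class Node:
    def __init__(self, stones, parent=None, action=None):
        self.stones = stones          # stones left for the player to move
        self.parent = parent
        self.action = action          # move that led here from the parent
        self.children = []
        self.untried = legal_actions(stones)
        self.visits = 0
        self.wins = 0.0               # wins for the player who made `action`

    def ucb_child(self, c=1.4):
        # UCB1: average win rate plus an exploration bonus for rarely tried moves.
        return max(
            self.children,
            key=lambda ch: ch.wins / ch.visits
            + c * math.sqrt(math.log(self.visits) / ch.visits),
        )


def rollout(stones, rng):
    """Random playout; True if the player to move at `stones` wins."""
    first_player_moving = True
    while stones > 0:
        stones -= rng.choice(legal_actions(stones))
        if stones == 0:
            return first_player_moving
        first_player_moving = not first_player_moving
    return False  # no stones left: the previous player already took the last one


def mcts_best_move(stones, iterations=5000, seed=0):
    rng = random.Random(seed)
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: follow UCB1 while every move at the node has been tried.
        while not node.untried and node.children:
            node = node.ucb_child()
        # 2. Expansion: add one untried move as a new child.
        if node.untried:
            action = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.stones - action, parent=node, action=action)
            node.children.append(child)
            node = child
        # 3. Simulation: play randomly to the end of the game.
        mover_won = not rollout(node.stones, rng)  # mover = player who moved into `node`
        # 4. Backpropagation: credit each move to the player who made it.
        while node.parent is not None:
            node.visits += 1
            node.wins += 1.0 if mover_won else 0.0
            mover_won = not mover_won
            node = node.parent
        root.visits += 1
    # Play the most-visited move, a common and robust final choice.
    return max(root.children, key=lambda ch: ch.visits).action


print(mcts_best_move(5))
```

Each iteration runs the four classic MCTS phases: selection, expansion, simulation, and backpropagation. Returning the most-visited move rather than the one with the highest win rate is a common choice because visit counts are less sensitive to noise.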

Typically in supervised learning methods:

Y = f(X)

The goal is to learn a mapping function f from an input variable (X) to an output variable (Y) using labeled training data, where the correct output for each example is known.
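As a minimal illustration of Y = f(X), the sketch below learns a straight-line f from a handful of labeled (X, Y) pairs using ordinary least squares. The data and the names `fit_line` and `predict` are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for Y = slope * X + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical labeled training data: every input X comes with its correct output Y.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from Y = 2X + 1
slope, intercept = fit_line(xs, ys)


def predict(x):
    """The learned mapping f."""
    return slope * x + intercept


print(predict(10.0))  # 21.0
```

Because every training example carries its correct answer, the learner can measure its error directly; that is what makes this supervised.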

In unsupervised methods, which learn from unlabeled data:

Association rules can state relationships of the form "if a person purchases item X, then they also purchase item Y," written as: X -> Y

For example, a person who buys milk and sugar is likely to also buy coffee powder. This is not always true, but if it holds across many transactions, we accept the rule.

{milk,sugar} -> coffee powder
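The rule {milk,sugar} -> coffee powder can be scored with the two standard association-rule measures: support (how often all the items appear together) and confidence (how often the rule holds when its left side appears). The baskets below are invented for illustration, and the function names are hypothetical; in practice, rules are typically kept only when both measures exceed chosen thresholds.

```python
def count_containing(transactions, itemset):
    """Number of transactions that contain every item in `itemset`."""
    return sum(1 for t in transactions if itemset <= t)


def support(transactions, itemset):
    """Fraction of all transactions that contain `itemset`."""
    return count_containing(transactions, itemset) / len(transactions)


def confidence(transactions, antecedent, consequent):
    """How often the rule antecedent -> consequent holds when antecedent appears."""
    both = count_containing(transactions, antecedent | consequent)
    return both / count_containing(transactions, antecedent)


# Hypothetical shopping baskets, invented for illustration.
transactions = [
    {"milk", "sugar", "coffee powder"},
    {"milk", "sugar", "coffee powder", "bread"},
    {"milk", "sugar", "bread"},
    {"milk", "sugar", "coffee powder"},
    {"bread", "butter"},
]
rule_if = {"milk", "sugar"}
rule_then = {"coffee powder"}
print(support(transactions, rule_if | rule_then))    # 3 of 5 baskets: 0.6
print(confidence(transactions, rule_if, rule_then))  # 3 of 4 milk+sugar baskets: 0.75
```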

AlphaGo Zero's neural network learns associations of a similar spirit, between board positions and promising moves, from playing against itself, and uses them to make intuitive decisions.

According to Dr. David Silver, lead researcher on AlphaGo Zero: “By not using human data — by not using human expertise in any fashion — we’ve actually removed the constraints of human knowledge. It’s therefore able to create knowledge itself from first principles; from a blank slate [...] This enables it to be much more powerful than previous versions.”

(Source: applied-data.science/static/main/res/alpha_go_zero_cheat_sheet.png)

On December 5, 2017, DeepMind released AlphaZero, the successor to AlphaGo Zero. After 34 hours of learning, using only 4 TPUs on a single machine, it defeated AlphaGo Zero at Go. While games like Go require strategy, it is the role of intuition that makes Go unique. Somehow, AlphaGo Zero and AlphaZero have developed their own machine intuition, which lets them play the game much better than their predecessors.

Perhaps the lesson of AlphaGo, AlphaGo Zero, and AlphaZero is how AI can help humans learn. The original AlphaGo exposed so many weaknesses in human play that Go masters said it changed the way they looked at the game. Without those matches, these new insights about Go might never have emerged. AI could yield similar insights that improve how we do many other things. Who knows, maybe it will even find a better way to make the perfect pizza.

Further Reading:

AlphaGo Zero: Learning from Scratch

https://deepmind.com/blog/alphago-zero-learning-scratch/

AlphaGo Zero

https://www.cnet.com/news/google-deepmind-alphago-zero-ai-destroys-humans-all-on-its-own/
