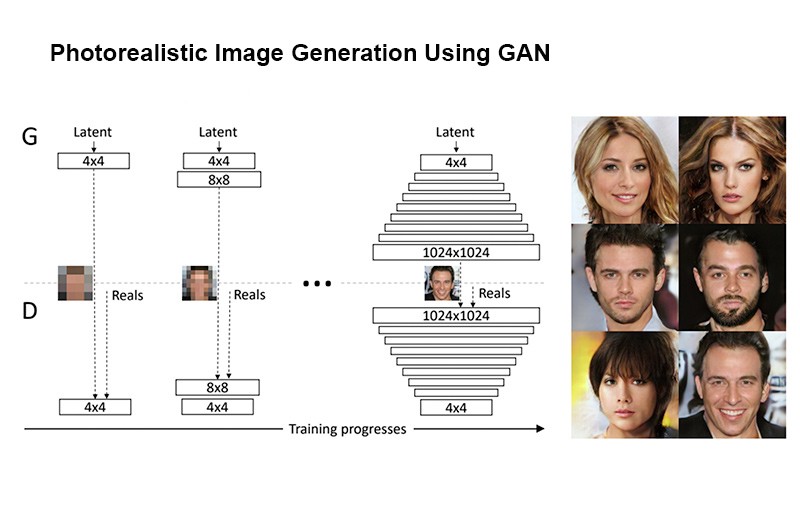

None of these faces belong to any real celebrity. You might be wondering what camera was used to capture such depth and detail. Actually, these photos are “fake”. They were artificially created with deep learning, using Generative Adversarial Networks (GANs), in a method developed by nVidia researchers. A GAN pairs two neural networks. The generator G produces images, and the discriminator D is trained to estimate the probability that an image is “real” (taken from the training data) rather than “fake” (produced by the generator). Through training, the discriminator learns a function that tells real and generated data apart, while the generator learns to produce images that the discriminator mistakes for real ones. Trained this way on photos of celebrity faces, the generator ends up producing its own unique faces. Not even the best available CGI can generate anything like this at the moment.

The researchers' objective was not just to generate images, but to generate large images of 1024 x 1024 pixels or more. Training starts at a resolution of 4 x 4 and progressively scales up to 1024 x 1024. Because the networks learn only from unlabeled example photos, this is an unsupervised learning technique, and each new face is produced by sampling from the probability distribution the generator has learned. The two networks are trained in parallel as a “zero-sum game”: whatever one network gains, the other loses. Backpropagation updates both networks, and each time they grow to a higher resolution, the new layers are faded in gradually, acting as residual layers during the transition between the incrementally larger networks.
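The transition step between resolutions can be pictured as a fade-in: while a new, higher-resolution layer is being added, its output is blended with a 2x upsampled copy of the previous stage's output, using a weight alpha that rises from 0 to 1 during training. Below is a minimal pure-Python sketch on tiny single-channel grids, assuming nearest-neighbour upsampling. The names `resolution_schedule`, `upsample_nearest` and `fade_in` are hypothetical illustrations, not nVidia's code.

```python
from typing import List

# A single-channel image stored as rows of pixel values (real networks use
# multi-channel tensors; plain lists keep this sketch dependency-free).
Image = List[List[float]]


def resolution_schedule(start: int = 4, end: int = 1024) -> List[int]:
    """Resolutions visited when the networks grow by doubling: 4, 8, ..., 1024."""
    sizes = []
    size = start
    while size <= end:
        sizes.append(size)
        size *= 2
    return sizes


def upsample_nearest(img: Image) -> Image:
    """Double width and height by copying each pixel into a 2x2 block."""
    out: Image = []
    for row in img:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))  # repeat the row to double the height
    return out


def fade_in(old_low_res: Image, new_high_res: Image, alpha: float) -> Image:
    """Blend the upsampled output of the previous stage with the new layer's output.

    alpha = 0 reproduces the smaller network exactly; alpha = 1 uses only the
    newly added layer. During training alpha is raised gradually from 0 to 1.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    skip = upsample_nearest(old_low_res)  # the residual (skip) path
    return [
        [(1.0 - alpha) * s + alpha * n for s, n in zip(skip_row, new_row)]
        for skip_row, new_row in zip(skip, new_high_res)
    ]


if __name__ == "__main__":
    print(resolution_schedule())  # [4, 8, 16, 32, 64, 128, 256, 512, 1024]
    old = [[0.0, 1.0], [1.0, 0.0]]       # hypothetical 2x2 output of the old stage
    new = [[0.5] * 4 for _ in range(4)]  # hypothetical 4x4 output of the new layer
    for alpha in (0.0, 0.5, 1.0):
        print(alpha, fade_in(old, new, alpha)[0])
```

Raising alpha slowly lets the larger network start from what the smaller one has already learned instead of starting from scratch. How fast alpha rises is a training detail this sketch leaves out.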

So let’s say we have a 1024 x 1024 8-bit RGB image. Each pixel has 3 channels of 8 bits, so there are $2^{3 \times 8 \times 1024 \times 1024}$ possible arrangements of the pixel values, far too many to search by brute force. Instead, the generator takes as input a vector of random numbers (z) and transforms it into the form of the data we are interested in creating, a generated image G(z). The discriminator then takes both real images (x) and generated images (G(z)), and produces for each one a probability D(x) that it is real. Thousands, even millions, of training iterations are needed before the generated images become convincing.
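The pixel-count arithmetic and the two networks' opposing objectives can be checked with a short sketch. It assumes the classic minimax GAN value function, V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], which D maximizes and G minimizes; this is not necessarily the exact loss used in the nVidia work. The function names and the discriminator probabilities are hypothetical, made-up numbers for illustration, not outputs of a trained network.

```python
import math
from typing import Sequence


def image_bits(width: int, height: int, channels: int = 3, bits: int = 8) -> int:
    """Bits needed to store one image; there are 2**this many possible images."""
    return channels * bits * width * height


def decimal_digits_of_power_of_two(exponent: int) -> int:
    """Digits of 2**exponent, via logarithms rather than building the huge integer."""
    return math.floor(exponent * math.log10(2)) + 1


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Negative of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    D maximizes V, so it minimizes this loss by pushing D(x) toward 1 on real
    images and D(G(z)) toward 0 on generated ones.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake: Sequence[float]) -> float:
    """G minimizes E[log(1 - D(G(z)))], which falls as D is fooled (D(G(z)) -> 1)."""
    return sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    exponent = image_bits(1024, 1024)
    print(exponent)                                  # 25165824
    print(decimal_digits_of_power_of_two(exponent))  # 7575668
    # Made-up discriminator outputs for 3 real images x and 3 generated images G(z).
    d_real = [0.9, 0.8, 0.95]
    d_fake = [0.1, 0.3, 0.2]
    print(discriminator_loss(d_real, d_fake))
    print(generator_loss(d_fake))
```

So the number of possible 1024 x 1024 RGB images has about 7.6 million decimal digits. The generator's loss leaves out the E[log D(x)] term because that term does not depend on G. Many implementations instead have the generator maximize log D(G(z)), which gives stronger gradients early in training.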

What this means is that in the future, software could randomly generate faces, people, or other objects. For example, a designer who cannot find suitable human models through an agency could use this to generate their own models with unique faces and body types. Let’s say the designer wants a hybrid look that combines the features of Naomi, Kate Moss and Elsa Hosk with body features from Martha Hunt and Candice Swanepoel. These artificial models could then be edited to wear the designer's clothing. The same approach could be used for lookbooks and catalogues, saving the designer production costs. So, to get to the point of this nVidia research: it has applications in future special effects for cinema and animation. Remember the CGI used in Rogue One to make us believe Peter Cushing was actually on screen, or the late Paul Walker appearing in Furious 7? GAN techniques take this to another level, bringing more realistic human features to animation and games that can also be applied to actors on the big screen.

GANs are arguably the biggest innovation in ML in recent years, and we're just scratching the surface of what is possible.

But I do have one concern: since images are very powerful, generated images could be used in a manipulative way. If you think about it, it is already possible to manipulate and fake images with Photoshop and the like, but here too GANs could open up entirely new possibilities.

Yes, its application in cinema and video games will deliver more life-like images. An artificial actor could be created using this technique. In gaming, characters will have more realistic faces and features based on generated images. That way, studios don't even have to hire an actor.