I hand-picked 8 unique frames from the MPEG-2 video source and extracted them as full-size, lossless PNG images using FFmpeg. The 8 frames fall within the video timestamp range of 00:28:01 to 00:28:03, where the page seemed to have the best visual clarity and appeared to undergo only translational motion.
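A rough sketch of how the extraction step could be scripted is below. It only builds the FFmpeg command line and does not run it. The file names `source.mpg` and `frame_%04d.png` and the helper `build_extract_command` are hypothetical, and the exact command used for the frames above is not recorded here. The command would dump every frame in the two-second window; picking the 8 best ones is still a manual step.

```python
import shlex


def build_extract_command(source, start, duration_s, pattern):
    """Build an ffmpeg argument list that writes each decoded frame as a PNG.

    -ss and -t are placed after -i, so they act as output options:
    ffmpeg decodes from the beginning and discards frames before `start`,
    which is slow but cuts on the exact frame.
    """
    return [
        "ffmpeg",
        "-i", source,            # hypothetical input file name
        "-ss", start,            # start of the window, e.g. "00:28:01"
        "-t", str(duration_s),   # window length in seconds
        pattern,                 # numbered PNG output, e.g. frame_%04d.png
    ]


if __name__ == "__main__":
    cmd = build_extract_command("source.mpg", "00:28:01", 2, "frame_%04d.png")
    # Print a shell-safe version of the command for copy and paste.
    print(shlex.join(cmd))
```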

The MATLAB code I used came from MATLAB Central; it implements a video super-resolution algorithm described in a recent journal article.

I modified the code to read grayscale PNG images directly and simply let it run with the default parameter settings (hr=255.0 and h=0.5). The supplied MATLAB code also applies a median filter near the end, which I left in because it tends to remove some of the video's salt-and-pepper noise.
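To illustrate why a median filter helps with salt-and-pepper noise, here is a minimal pure-Python sketch. It is not the filter from the MATLAB Central code: the 3x3 window, the edge handling (clamping to the nearest valid pixel), and the function name `median_filter_3x3` are all assumptions made for the example.

```python
def median_filter_3x3(img):
    """Return a copy of a grayscale image (list of rows) filtered with a 3x3 median.

    Border pixels reuse the nearest valid pixel (index clamping), which is
    an assumption of this sketch rather than a property of the original code.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = []
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), h - 1)
                    xx = min(max(x + dx, 0), w - 1)
                    window.append(img[yy][xx])
            window.sort()
            out[y][x] = window[4]  # middle of the 9 sorted values
    return out


if __name__ == "__main__":
    # Flat gray patch with one "salt" (255) and one "pepper" (0) pixel.
    patch = [[100] * 5 for _ in range(5)]
    patch[2][2] = 255
    patch[4][1] = 0
    for row in median_filter_3x3(patch):
        print(row)
```

Isolated outliers never reach the middle of the sorted window, so they are replaced by the surrounding gray level, while a plain average would smear them into their neighbours.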

Honestly, there is little for super-resolution techniques to recover in this situation, because the footage lacks the translational motion they thrive on. Most of the motion is not simple linear motion but more like rotation and bending of the pages, which most super-resolution algorithms do not handle well. However, a custom algorithm could easily be written for this challenge problem, and there are many weeks remaining before the end of the contest.
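The dependence on translational motion can be shown with an idealized one-dimensional shift-and-add sketch. This is a toy model, not the algorithm from the MATLAB Central code: it assumes point sampling with no blur or noise, shifts that are known exactly and are whole steps on the high-resolution grid, and the helper names `decimate` and `shift_and_add` are invented for the example.

```python
def decimate(signal, factor, offset):
    """Simulate a low-resolution frame: keep every `factor`-th sample from `offset`."""
    return signal[offset::factor]


def shift_and_add(frames, offsets, factor):
    """Place each low-res sample back on the high-res grid and average overlaps.

    Grid positions that no frame covers are returned as None.
    Assumes all frames have the same length.
    """
    n = factor * len(frames[0])
    total = [0.0] * n
    count = [0] * n
    for frame, off in zip(frames, offsets):
        for i, value in enumerate(frame):
            pos = i * factor + off
            if pos < n:
                total[pos] += value
                count[pos] += 1
    return [t / c if c else None for t, c in zip(total, count)]


if __name__ == "__main__":
    high_res = [10, 20, 30, 40, 50, 60, 70, 80]

    # Two frames shifted by one high-res sample: every grid position is covered.
    shifted = [decimate(high_res, 2, 0), decimate(high_res, 2, 1)]
    print(shift_and_add(shifted, [0, 1], 2))

    # Two frames with the same shift: half of the grid stays empty.
    repeated = [decimate(high_res, 2, 0), decimate(high_res, 2, 0)]
    print(shift_and_add(repeated, [0, 0], 2))
```

Frames that differ by a pure shift each contribute new samples, whereas frames with the same shift only repeat what is already known. Rotation and bending break the single-shift model used here, so each region of the page would need its own motion estimate.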

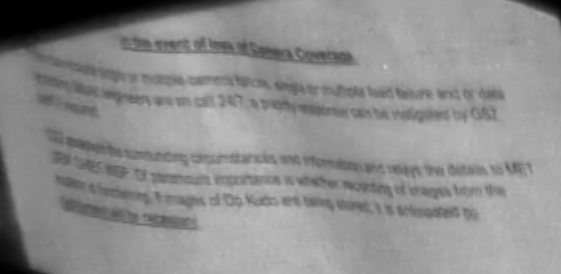

Others can probably do a better job reading the actual text, but what I see is this:

In the event of loss of Camera Coverage

??? ???? single or multiple camera failures, single or multiple feed failure and or data

????? ??? engineers are on call 24/7 a priority ????? can be ????? by GS2

??? ???? the surrounding circumstances and information and relays the details to MET

?? ?? ???. Of paramount importance is whether recording of images from the

????? is functioning. If images of Op Kudo are being stored, it is anticipated no

?????? will be necessary

The question marks are not meant to count letters, only to serve as an approximate placeholder for a word I cannot make out.