TLDR readers (ages 10 and up): Think of your brain as having computational ability. Talking to your pet cat or dog might use a few neurons (or more than a few, I don't judge; it's all relative). Trying to understand machine learning algorithms from a dry textbook might use up a few more. The more neurons you use, the more computational power you bring to the problem facing you, whether that is getting an animal's attention or understanding technical jargon. In machine learning, the smallest unit of computational power can be thought of as a neuron: a set of inputs goes in and some output comes out. The output could be a classification (yes, spam; no, not spam). Suppose a single neuron gets that right 60% of the time. To increase the accuracy we need more computational power, so we use the output of neuron 1 as input for neuron 2, and the accuracy goes up. It's still only 61%, so we keep going until adding another neuron yields almost no gain in accuracy. All these neurons together are a neural network, just like our brain. This is oversimplified, and a neural net is only one algorithm for solving a problem; there are many, many others, and many are beyond the scope of my interest. For the technical explanation, see a few scrolls down :)

Big data is large enough that a computer running machine learning algorithms can find patterns in it much faster than humans ever could. Machine learning is the automated stepping stone to eventual artificial intelligence. Many of the things we think of as artificial intelligence, such as self-driving vehicles, are essentially a cluster of machine learning algorithms. Philosophically, we don’t have true artificial intelligence yet, but the moniker is easily adopted because of the fascinating ‘intelligent’ tasks machines are capable of.

Think of any mind-numbing repetitive task, and a machine can do it with 100% accuracy. Tasks that require analyzing new information very similar to the information the machine was trained on will also have high accuracy (>95%). For example, a training set of 10,000-20,000 handwritten digits (0-9) can give the machine an accuracy greater than 97% at recognizing handwritten digits in the future.

Narrative Science, a Chicago-based company, automates the writing of news reports. Kristian Hammond, the Chief Scientist at Narrative Science, believes that we are only a little over a decade away from a world where more than 90% of news will be written by machines analyzing data. Today, our state and national weather reports are mostly machine-written, generated after analyzing government-provided data from organizations like the National Oceanic and Atmospheric Administration (NOAA). Kensho, a company in Cambridge, automates the algorithmic analysis in hedge funds typically done by ‘quants’, the model-building financial analysts. In fact, while a quant typically builds 1-2 models per week, a machine can build thousands of models per week. Similarly, IBM Watson scans hundreds of thousands of health records for medical insights in a matter of seconds, compared to the weeks it would take a team of medical researchers to gather, analyze, and publish the findings.

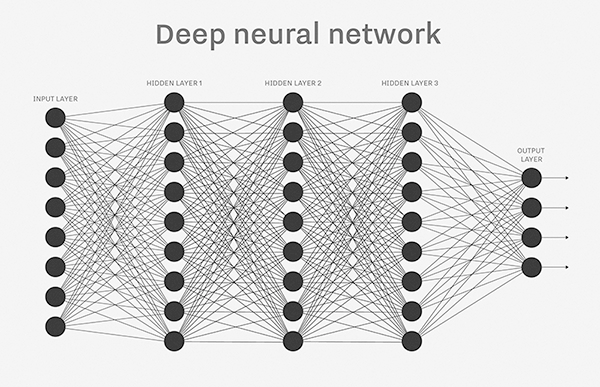

Neural networks are only one form of machine learning algorithm, popular because their accuracy can be improved by adding more and more hidden layers (an approach also called deep learning). Doing this takes ever larger amounts of computational power. That is why, although the idea was first developed in the late 1950s, it only gained popularity recently, thanks to the higher computational ability of graphics cards (GPUs, or graphics processing units) compared to CPU chips. Similarly, bitcoin mining moved from CPUs to GPUs and now to ASICs.

WARNING: TECHNICAL EXPLANATION. Neural networks get their name from a model of the human neuron called the perceptron, whose learning rule, first developed by Frank Rosenblatt in 1957, explains the algorithm’s building block. The perceptron learning rule expanded on the McCulloch-Pitts (MCP) neuron’s description of learning. The MCP neuron has a number of inputs but only two possible outputs: it either fires or it doesn’t. It requires the sum of the inputs to pass a certain threshold before the neuron fires.

What the perceptron learning rule added was the concept of the activation function 𝛷(z). It assumes that each input (let’s call it a feature) has a specific weight; multiplying each feature by its weight and summing the products yields a single value, z. The activation function then transforms z into an output the machine can work with, for example with a logistic function, which is non-linear and always takes values between 0 and 1. The number of thresholds depends on the number of final classifications. Email spam, a classic example, has one threshold and two classifications (spam or not spam).

If we take the outputs of these activation functions and use them as a second layer of inputs, whose weights we need to find using the same or another activation function, then we have essentially created another neural layer. We can keep adding layers, but the gain in accuracy diminishes with each one and the network can get very computationally demanding.

The activation function, the function that transforms the weighted sum of the inputs, is a focus of machine learning research because it is assumed to yield the highest gain in initial accuracy, and there is no single right choice. Researchers can try several, see which one yields the best accuracy, and then add layers to the network to improve it further.
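To make the neuron idea a bit more concrete, here is a minimal Python sketch of a weighted sum passed through a logistic activation, and of stacking a second layer on top of the first. Everything specific in it is my own assumption for illustration: the three email features, every weight value, the `bias` term (which plays the role of the firing threshold), and the 0.5 cutoff. A real network would learn its weights from training data rather than have them typed in by hand.

```python
import math


def logistic(z):
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(features, weights, bias, activation=logistic):
    """One neuron: weighted sum of the inputs, then the activation function."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return activation(z)


def layer(features, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(features, w, b) for w, b in zip(weight_rows, biases)]


def classify(features, hidden_weights, hidden_biases,
             out_weights, out_bias, threshold=0.5):
    """Two layers: hidden-layer outputs become the inputs of the output neuron."""
    hidden = layer(features, hidden_weights, hidden_biases)
    score = neuron(hidden, out_weights, out_bias)
    label = "spam" if score >= threshold else "not spam"
    return label, score


# Hypothetical features for one email: [has_link, known_sender, exclamation_marks]
email = [1.0, 0.0, 3.0]

# Made-up weights, chosen by hand purely for illustration (not learned).
hidden_weights = [[2.0, -1.0, 0.5],
                  [-1.5, 0.5, 1.0]]
hidden_biases = [-1.0, 0.0]
out_weights = [1.5, 1.0]
out_bias = -1.0

label, score = classify(email, hidden_weights, hidden_biases,
                        out_weights, out_bias)
print(label, round(score, 3))
```

Swapping `logistic` for a different function in `neuron` is all it takes to try another activation, which is the kind of experiment described above.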

While big data led to machine learning, and machine learning is leading us to artificial intelligence, we are likely to stay in the machine learning realm for many years to come, still refining our algorithms, improving our computational ability, and increasing user adoption. That means we still have time to understand this fascinating world before it becomes overwhelming.

Referenced links:

Chris Woodford (2016). Neural Networks. Explainthatstuff.com. Accessed from <explainthatstuff.com/introduction-to-neural-networks.html>.

Brad Power (2015). Artificial Intelligence Is Almost Ready for Business. Harvard Business Review. Accessed from <https://hbr.org/2015/03/artificial-intelligence-is-almost-ready-for-business>.

Jane Wakefield (2015). Intelligent Machines: The jobs robots will steal first. BBC Technology. Accessed from <http://www.bbc.com/news/technology-33327659>.

NOAA link: https://www.ncdc.noaa.gov/

National Institute of Standards and Technology (NIST) handwritten digits database: https://srdata.nist.gov/gateway/gateway?keyword=handwriting+recognition