Even in a world increasingly battered by weather extremes, the summer 2021 heat wave in the Pacific Northwest stood out. For several days in late June, cities such as Vancouver, Portland and Seattle baked in record temperatures that killed hundreds of people. On June 29, Lytton, a village in British Columbia, set an all-time heat record for Canada, at 121° Fahrenheit (49.6° Celsius); the next day, the village was incinerated by a wildfire.

Within a week, an international group of scientists had analyzed this extreme heat and concluded it would have been virtually impossible without climate change caused by humans. The planet’s average surface temperature has risen by at least 1.1 degrees Celsius since preindustrial levels of 1850–1900. The reason: People are loading the atmosphere with heat-trapping gases produced during the burning of fossil fuels, such as coal and gas, and from cutting down forests.

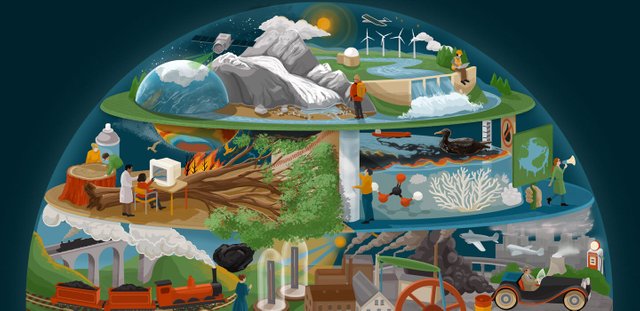

A little over 1 degree of warming may not sound like a lot. But it has already been enough to fundamentally transform how energy flows around the planet. The pace of change is accelerating, and the consequences are everywhere. Ice sheets in Greenland and Antarctica are melting, raising sea levels and flooding low-lying island nations and coastal cities. Drought is parching farmlands and the rivers that feed them. Wildfires are raging. Rains are becoming more intense, and weather patterns are shifting.

The roots of understanding this climate emergency trace back more than a century and a half. But it wasn’t until the 1950s that scientists began the detailed measurements of atmospheric carbon dioxide that would prove how much carbon is pouring from human activities. Beginning in the 1960s, researchers started developing comprehensive computer models that now illuminate the severity of the changes ahead.

Today we know that climate change and its consequences are real, and we are responsible. The emissions that people have been putting into the air for centuries — the emissions that made long-distance travel, economic growth and our material lives possible — have put us squarely on a warming trajectory. Only drastic cuts in carbon emissions, backed by collective global will, can make a significant difference.

“What’s happening to the planet is not routine,” says Ralph Keeling, a geochemist at the Scripps Institution of Oceanography in La Jolla, Calif. “We’re in a planetary crisis.”

One day in the 1850s, Eunice Newton Foote, an amateur scientist and a women’s rights activist living in upstate New York, put two glass jars in sunlight. One contained regular air — a mix of nitrogen, oxygen and other gases including carbon dioxide — while the other contained just carbon dioxide. Both had thermometers in them. As the sun’s rays beat down, Foote observed that the jar of CO2 alone heated up more quickly, and was slower to cool down, than the one containing plain air.

The results prompted Foote to muse on the relationship between CO2, the planet and heat. “An atmosphere of that gas would give to our earth a high temperature,” she wrote in an 1856 paper summarizing her findings.

Three years later, working independently and apparently unaware of Foote’s discovery, Irish physicist John Tyndall showed the same basic idea in more detail. With a set of pipes and devices to study the transmission of heat, he found that CO2 gas, as well as water vapor, absorbed more heat than air alone. He argued that such gases would trap heat in Earth’s atmosphere, much as panes of glass trap heat in a greenhouse, and thus modulate climate.

Today Tyndall is widely credited with the discovery of how what we now call greenhouse gases heat the planet, earning him a prominent place in the history of climate science. Foote faded into relative obscurity — partly because of her gender, partly because her measurements were less sensitive. Yet their findings helped kick off broader scientific exploration of how the composition of gases in Earth’s atmosphere affects global temperatures.

In 1859, John Tyndall built a laboratory apparatus to study how various gases trap heat. He sent infrared radiation through a tube filled with gas and measured the resulting temperature changes. Carbon dioxide and water vapor, he showed, absorb more heat than air does.

Humans began substantially affecting the atmosphere around the turn of the 19th century, when the Industrial Revolution took off in Britain. Factories burned tons of coal; fueled by fossil fuels, the steam engine revolutionized transportation and other industries. Since then, fossil fuels including oil and natural gas have been harnessed to drive a global economy. All these activities belch gases into the air.

Yet Swedish physical chemist Svante Arrhenius wasn’t worried about the Industrial Revolution when he began thinking in the late 1800s about changes in atmospheric CO2 levels. He was instead curious about ice ages — including whether a decrease in volcanic eruptions, which can put carbon dioxide into the atmosphere, would lead to a future ice age. Bored and lonely in the wake of a divorce, Arrhenius set himself to months of laborious calculations involving moisture and heat transport in the atmosphere at different zones of latitude. In 1896, he reported that halving the amount of CO2 in the atmosphere could indeed bring about an ice age — and that doubling CO2 would raise global temperatures by around 5 to 6 degrees C.

It was a remarkably prescient finding for work that, out of necessity, had simplified Earth’s complex climate system down to just a few variables. But Arrhenius’ findings didn’t gain much traction with other scientists at the time. The climate system seemed too large, complex and inert to change in any meaningful way on a timescale that would be relevant to human society. Geologic evidence showed, for instance, that ice ages took thousands of years to start and end. What was there to worry about?

One researcher, though, thought the idea was worth pursuing. Guy Stewart Callendar, a British engineer and amateur meteorologist, had tallied weather records over time, obsessively enough to determine that average temperatures were increasing at 147 weather stations around the globe. In a 1938 paper in a Royal Meteorological Society journal, he linked this temperature rise to the burning of fossil fuels. Callendar estimated that fossil fuel burning had put around 150 billion metric tons of CO2 into the atmosphere since the late 19th century.

Like many of his day, Callendar didn’t see global warming as a problem. Extra CO2 would surely stimulate plants to grow and allow crops to be farmed in new regions. “In any case the return of the deadly glaciers should be delayed indefinitely,” he wrote. But his work revived discussions tracing back to Tyndall and Arrhenius about how the planetary system responds to changing levels of gases in the atmosphere. And it began steering the conversation toward how human activities might drive those changes.

When World War II broke out the following year, the global conflict redrew the landscape for scientific research. Hugely important wartime technologies, such as radar and the atomic bomb, set the stage for “big science” studies that brought nations together to tackle high-stakes questions of global reach. And that allowed modern climate science to emerge.

One major effort was the International Geophysical Year, or IGY, an 18-month push in 1957–1958 that involved a wide array of scientific field campaigns including exploration in the Arctic and Antarctica. Climate change wasn’t a high research priority during the IGY, but some scientists in California, led by Roger Revelle of the Scripps Institution of Oceanography, used the funding influx to begin a project they’d long wanted to do. The goal was to measure CO2 levels at different locations around the world, accurately and consistently.

The job fell to geochemist Charles David Keeling, who put ultraprecise CO2 monitors in Antarctica and on the Hawaiian volcano of Mauna Loa. Funds soon ran out to maintain the Antarctic record, but the Mauna Loa measurements continued. Thus was born one of the most iconic datasets in all of science — the “Keeling curve,” which tracks the rise of atmospheric CO2.

When Keeling began his measurements in 1958, CO2 made up 315 parts per million of the global atmosphere. Within just a few years it became clear that the number was increasing year by year. Because plants take up CO2 as they grow in spring and summer and release it as they decompose in fall and winter, CO2 concentrations rose and fell each year in a sawtooth pattern. But superimposed on that pattern was a steady march upward.
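
The shape described above, a yearly cycle riding on a steady rise, can be illustrated with a small sketch. It uses made-up numbers rather than the Mauna Loa record: only the 315 ppm starting level comes from the text, while the rate of rise and the size of the seasonal swing are assumptions chosen for illustration. A smooth sine wave also stands in for the real, more jagged seasonal pattern. Averaging each run of 12 months cancels the yearly cycle and leaves the upward trend.

```python
import math

START_PPM = 315.0          # from the text: the level when measurements began in 1958
TREND_PPM_PER_YEAR = 1.0   # assumed for illustration, not a measured rate
SEASONAL_AMPLITUDE = 3.0   # assumed for illustration, not a measured swing


def synthetic_co2(months):
    """Return a made-up monthly CO2 series: a steady rise plus a yearly cycle."""
    series = []
    for m in range(months):
        trend = START_PPM + TREND_PPM_PER_YEAR * m / 12.0
        seasonal = SEASONAL_AMPLITUDE * math.sin(2.0 * math.pi * m / 12.0)
        series.append(trend + seasonal)
    return series


def twelve_month_means(series):
    """Average every run of 12 consecutive months, which cancels the yearly cycle."""
    return [sum(series[i:i + 12]) / 12.0 for i in range(len(series) - 11)]


monthly = synthetic_co2(60)            # five years of synthetic monthly values
smoothed = twelve_month_means(monthly)  # the trend with the seasonal cycle removed
```

In this toy series the monthly values rise and fall within each year, but the 12-month averages only climb. Analyses of the real record rely on the measured data and on more careful statistical methods than this.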

“The graph got flashed all over the place — it was just such a striking image,” says Ralph Keeling, who is Keeling’s son. Over the years, as the curve marched higher, “it had a really important role historically in waking people up to the problem of climate change.” The Keeling curve has been featured in countless earth science textbooks, in congressional hearings and in Al Gore’s 2006 documentary on climate change, An Inconvenient Truth.

Each year the curve keeps going up: In 2016, it passed 400 ppm of CO2 in the atmosphere as measured during its typical annual minimum in September. Today it is at 413 ppm. (Before the Industrial Revolution, CO2 levels in the atmosphere had been stable for centuries at around 280 ppm.)
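
To put those figures side by side, the short calculation below compares the 413 ppm level with the two baselines mentioned in the text: the preindustrial level of about 280 ppm and the 315 ppm recorded in 1958. The function name `percent_increase` is a hypothetical helper introduced only for this example.

```python
PREINDUSTRIAL_PPM = 280.0   # from the text: approximate level before the Industrial Revolution
KEELING_START_PPM = 315.0   # from the text: level when Keeling began measuring in 1958
RECENT_PPM = 413.0          # from the text: the level given as "today"


def percent_increase(old, new):
    """Return the rise from old to new as a percentage of old."""
    return (new - old) / old * 100.0


rise_since_preindustrial = RECENT_PPM - PREINDUSTRIAL_PPM
rise_since_1958 = RECENT_PPM - KEELING_START_PPM

print(f"Since preindustrial times: +{rise_since_preindustrial:.0f} ppm "
      f"({percent_increase(PREINDUSTRIAL_PPM, RECENT_PPM):.1f} percent)")
print(f"Since 1958: +{rise_since_1958:.0f} ppm "
      f"({percent_increase(KEELING_START_PPM, RECENT_PPM):.1f} percent)")
```

By this arithmetic, the atmosphere now holds nearly half again as much CO2 as it did before the Industrial Revolution, and most of that 133 ppm rise (98 ppm) has come since Keeling's measurements began.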

Around the time that Keeling’s measurements were kicking off, Revelle also helped develop an important argument that the CO2 from human activities was building up in Earth’s atmosphere. In 1957, he and Hans Suess, also at Scripps at the time, published a paper that traced the flow of radioactive carbon through the oceans and the atmosphere. They showed that the oceans were not capable of taking up as much CO2 as previously thought; the implication was that much of the gas must be going into the atmosphere instead.

“Human beings are now carrying out a large-scale geophysical experiment of a kind that could not have happened in the past nor be reproduced in the future,” Revelle and Suess wrote in the paper. It’s one of the most famous sentences in earth science history.

Here was the insight underlying modern climate science: Atmospheric carbon dioxide is increasing, and humans are causing the buildup. Revelle and Suess became the final piece in a puzzle dating back to Svante Arrhenius and John Tyndall. “I tell my students that to understand the basics of climate change, you need to have the cutting-edge science of the 1860s, the cutting-edge math of the 1890s and the cutting-edge chemistry of the 1950s,” says Joshua Howe, an environmental historian at Reed College in Portland, Ore.

Observational data collected throughout the second half of the 20th century helped researchers gradually build their understanding of how human activities were transforming the planet.

Ice cores pulled from ice sheets, such as that atop Greenland, offer some of the most telling insights for understanding past climate change. Each year, snow falls atop the ice and compresses into a fresh layer of ice representing climate conditions at the time it formed. The abundance of certain forms, or isotopes, of oxygen and hydrogen in the ice allows scientists to calculate the temperature at which it formed, and air bubbles trapped within the ice reveal how much carbon dioxide and other greenhouse gases were in the atmosphere at that time. So drilling down into an ice sheet is like reading the pages of a history book that go back in time the deeper you go.

Read the full article here: https://www.sciencenews.org/article/climate-change-crisis-history-research-carbon-human-impact