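Two articles on how to configure uWSGI better. The default uwsgi+nginx Docker configurations I found over the past two days are only good for verifying that an application works; they cannot run a large website.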

Configuring uWSGI for Production: The defaults are all wrong

Engineering Manager Peter Sperl and Software Engineer Ben Green of Bloomberg Engineering’s Structured Products Applications group wrote the following article to offer some tips to other developers about avoiding known gotchas when configuring uWSGI to host services at scale.

[uwsgi]

strict = true

master = true

enable-threads = true

vacuum = true ; Delete sockets during shutdown

single-interpreter = true

die-on-term = true ; Shutdown when receiving SIGTERM (default is respawn)

need-app = true

disable-logging = true ; Disable built-in logging

log-4xx = true ; but log 4xx's anyway

log-5xx = true ; and 5xx's

harakiri = 60 ; forcefully kill workers after 60 seconds

py-call-osafterfork = true ; allow workers to trap signals

max-requests = 1000 ; Restart workers after this many requests

max-worker-lifetime = 3600 ; Restart workers after this many seconds

reload-on-rss = 2048 ; Restart workers after this much resident memory (in MB)

worker-reload-mercy = 60 ; How long to wait before forcefully killing workers

cheaper-algo = busyness

processes = 128 ; Maximum number of workers allowed

cheaper = 8 ; Minimum number of workers allowed

cheaper-initial = 16 ; Workers created at startup

cheaper-overload = 1 ; Length of a cycle in seconds

cheaper-step = 16 ; How many workers to spawn at a time

cheaper-busyness-multiplier = 30 ; How many cycles to wait before killing workers

cheaper-busyness-min = 20 ; Below this threshold, kill workers (if stable for multiplier cycles)

cheaper-busyness-max = 70 ; Above this threshold, spawn new workers

cheaper-busyness-backlog-alert = 16 ; Spawn emergency workers if more than this many requests are waiting in the queue

cheaper-busyness-backlog-step = 2 ; How many emergency workers to create if there are too many requests in the queue
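To make the interplay of the `cheaper-*` thresholds easier to follow, here is a small Python model of the decision taken on each cycle. It is only a sketch built from the comments in the config above, not uWSGI's actual implementation: the names `CheaperSettings` and `next_worker_count` are made up for illustration, and stopping one worker at a time when scaling down is an assumption.

```python
from dataclasses import dataclass


@dataclass
class CheaperSettings:
    # Values copied from the config above; the class itself is hypothetical.
    processes: int = 128      # maximum number of workers
    cheaper: int = 8          # minimum number of workers
    step: int = 16            # cheaper-step
    multiplier: int = 30      # cheaper-busyness-multiplier
    busyness_min: int = 20    # cheaper-busyness-min (percent)
    busyness_max: int = 70    # cheaper-busyness-max (percent)
    backlog_alert: int = 16   # cheaper-busyness-backlog-alert
    backlog_step: int = 2     # cheaper-busyness-backlog-step


def next_worker_count(cfg, workers, busyness, idle_cycles, backlog):
    """Return the worker count after one cycle (simplified model, not uWSGI code)."""
    # Too many requests waiting in the queue: add emergency workers.
    if backlog > cfg.backlog_alert:
        return min(cfg.processes, workers + cfg.backlog_step)
    # Workers are too busy: add a full step of workers.
    if busyness > cfg.busyness_max:
        return min(cfg.processes, workers + cfg.step)
    # Quiet for long enough: scale down (assumption: one worker per decision).
    if busyness < cfg.busyness_min and idle_cycles >= cfg.multiplier:
        return max(cfg.cheaper, workers - 1)
    return workers


cfg = CheaperSettings()
print(next_worker_count(cfg, workers=16, busyness=85, idle_cycles=0, backlog=0))
print(next_worker_count(cfg, workers=16, busyness=10, idle_cycles=30, backlog=0))
```

With the values above, a busy cycle at 16 workers jumps to 32, while a quiet period only shrinks the pool once busyness has stayed under 20% for 30 cycles, and never below the `cheaper` floor of 8.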

--

Understanding uwsgi, threads, processes, and GIL

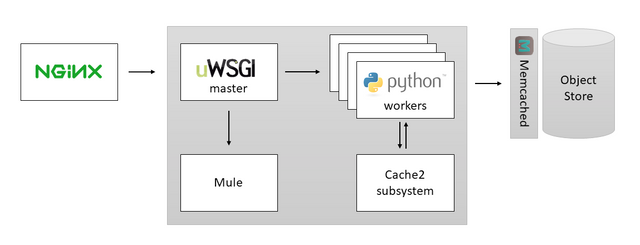

uWSGI works by creating an instance of the python interpreter and importing the python files related to your application. If you configure it with more than one process, it will fork that instance of the interpreter until there are the required number of processes. This is roughly equivalent to just starting that number of python interpreter instances by hand, except that uWSGI will handle incoming HTTP requests and forward them to your application. This also means that each process has memory isolation—no state is shared, so each process gets its own GIL.
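The memory isolation described above can be seen with a plain `os.fork()`. This is a minimal sketch of the prefork idea, not of uWSGI itself: `counter` and `run_in_forked_worker` are names invented for the example, and it only runs on Unix-like systems.

```python
import os

# Module-level state created before forking, like state set up at import time.
counter = {"requests": 0}


def run_in_forked_worker(n_requests):
    """Fork a child, let it mutate `counter`, and return the value the child saw."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child process: works on its own copy of `counter`.
        try:
            os.close(read_fd)
            for _ in range(n_requests):
                counter["requests"] += 1
            os.write(write_fd, str(counter["requests"]).encode())
            os.close(write_fd)
        finally:
            os._exit(0)  # never fall through into the parent's code
    # Parent process: read what the child reported, then reap it.
    os.close(write_fd)
    with os.fdopen(read_fd) as reader:
        child_value = int(reader.read())
    os.waitpid(pid, 0)
    return child_value


child_seen = run_in_forked_worker(5)
parent_seen = counter["requests"]
print(child_seen, parent_seen)
```

The child counts its five "requests", but the parent's copy of `counter` is untouched: nothing written after the fork is shared between the two processes.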

Thus, using only processes for workers will give you the best performance if your aim is just to optimize throughput. However, processes come with tradeoffs. The main problem is that if your application benefits from sharing state and resources within the process, pre-forking makes this untenable. If you want an in-process cache for something, for example, your cache hit ratio would be much greater if all of your workers were housed in one process and could share the same cache. An important implication of this is that processes are very memory inefficient—memory isolation often requires that a lot of data is duplicated. (As a sidenote, there are ways to take advantage of copy on write semantics by loading things at import time, but that's a story for another day.)
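The cache hit ratio argument can be illustrated with a toy simulation. This is a sketch under simplifying assumptions (requests are routed to workers uniformly at random, caches never evict, keys are drawn uniformly); `hit_ratio` is a hypothetical helper, not part of uWSGI.

```python
import random


def hit_ratio(n_caches, requests, seed=0):
    """Fraction of requests served from cache when they are spread over n_caches."""
    caches = [set() for _ in range(n_caches)]
    router = random.Random(seed)  # assumption: uniform random routing to workers
    hits = 0
    for key in requests:
        cache = caches[router.randrange(n_caches)]
        if key in cache:
            hits += 1
        else:
            cache.add(key)  # a miss populates only this worker's cache
    return hits / len(requests)


keys = random.Random(42)
requests = [keys.randrange(100) for _ in range(1000)]

shared = hit_ratio(1, requests)    # all workers share one in-process cache
isolated = hit_ratio(8, requests)  # eight processes, one private cache each
print(f"shared={shared:.2f} isolated={isolated:.2f}")
```

With one shared cache each key is missed at most once overall; with eight isolated caches each key can be missed once per process, so the same traffic produces far more misses.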

For this reason, uWSGI also allows your workers to live within threads in the same process. These threads solve the problems mentioned above regarding shared state—now your workers can share the same cache, for example. However, it also means they share the same GIL. When more than one thread needs CPU time, it will not be possible for them to make progress concurrently. In fact, GIL contention and context switching will make your application run slower, on net.

For IO-bound applications where workers spend so much time waiting for IO that GIL contention is rare, this sounds like it shouldn't be a problem. And if your application is like most web applications, which spend a large part of their time talking to other services or a database, it's probably IO bound. So all good, right?

In reality, thread based uWSGI workers almost never work flawlessly for any python web application of even moderate complexity. The reason for this is primarily the ecosystem and the assumptions that people make writing python code: many libraries and internal code are flagrantly, unapologetically, and inconsolably NOT threadsafe. Even if your application is running smoothly today with thread based workers, you'll likely run into some hard-to-debug problem involving thread safety sooner rather than later.
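A typical thread safety bug is a lost update on shared state. The sketch below forces the bad interleaving with a `threading.Barrier` so that it happens every time; in real code the same thing happens only occasionally, which is what makes it hard to debug. `Counter`, `unsafe_increment`, `safe_increment` and `run_two_threads` are illustrative names only.

```python
import threading


class Counter:
    def __init__(self):
        self.value = 0


def unsafe_increment(counter, barrier):
    current = counter.value      # read
    barrier.wait()               # force both threads to read before either writes
    counter.value = current + 1  # write back a stale value


def safe_increment(counter, lock):
    with lock:                   # read-modify-write happens as one unit
        counter.value += 1


def run_two_threads(target, *args):
    threads = [threading.Thread(target=target, args=args) for _ in range(2)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()


unsafe = Counter()
run_two_threads(unsafe_increment, unsafe, threading.Barrier(2))

safe = Counter()
run_two_threads(safe_increment, safe, threading.Lock())

print(unsafe.value, safe.value)
```

Two increments ran in both cases, but the unsafe counter ends at 1 because both threads wrote back the value they had read before the other's update.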

Moreover, so called "IO bound" applications spend way more time on CPU than most developers realize, especially in python. Python code executes very slowly compared to most runtimes, and it's not uncommon for simple CRUD apps to spend ~20% of their time running python code as opposed to blocking on IO. Even with two threads, that's a lot of opportunity for GIL contention and further slowdowns.
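A back-of-the-envelope estimate shows how quickly that ~20% adds up. The model below is a rough assumption, not a measurement: it treats each thread as independently wanting the CPU a fixed fraction of the time, and `contention_probability` is a hypothetical helper.

```python
def contention_probability(cpu_fraction, n_threads):
    """Chance that, when one thread wants the GIL, at least one other thread wants it too.

    Assumes every thread independently spends `cpu_fraction` of its time on CPU.
    """
    others = n_threads - 1
    return 1 - (1 - cpu_fraction) ** others


for n_threads in (2, 4, 8):
    print(n_threads, round(contention_probability(0.2, n_threads), 3))
```

Under these assumptions a thread in a 2-thread worker already finds the GIL contested about one time in five, and the odds climb steeply as threads are added to the same process.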

My main point is this: Whatever bottleneck you're running into likely has to do with the fact that you're running 2 threads for each process. **So unless shared state or memory utilization is very important to you, consider replacing that configuration with 4x processes, 1x threads instead and see what effect it has.**

Citation: Nearly 2 years debugging and tuning performance of python applications of many flavors at Uber justtrustmei'veseensomeshit.

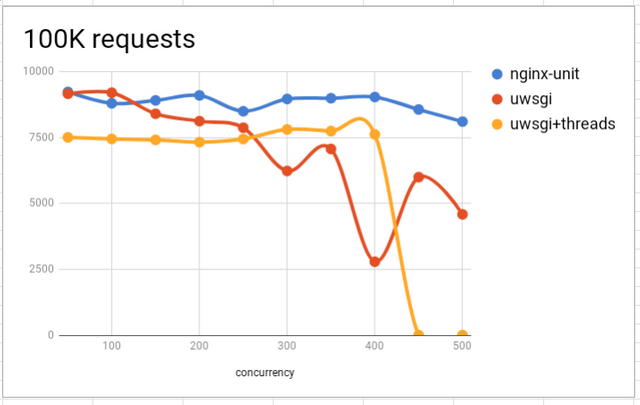

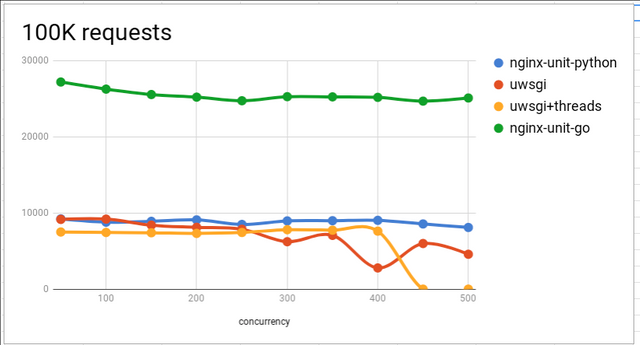

Concurrency comparison between NGINX-unit and uWSGI. A benchmark of nginx-unit and uWSGI; noting it here so I can test it myself when I get the chance.

![image.png]
